dynamic GUI, load abstraction dynamically

Hi,

I'm running into two issues I can't seem to solve:

- Is there a way to dynamically load and clear abstractions?

Let’s say I have multiple synthesizer engines with different GOP settings and sliders.

I’d like to switch between them by always loading just one engine at a time and replacing the GOP of the previous engine.

- I’d like to create a virtual display/dynamic GUI. I know that I can’t hide objects in Pure Data, but I could move the GOP to different sections to give the impression of switching display pages.

The problem is that I have 16 tracks, and each track might need 6–8 pages. That’s already over 100 pages — not including the different synthesizer engines with their own settings for each page.

Altogether, that could easily add up to 400+ pages, which is way too much to handle just by shifting the GOP.

I also considered using one abstraction per track. But that would require showing a GOP inside another GOP, and it looks like that won't work either?

Are there any other options I could try?

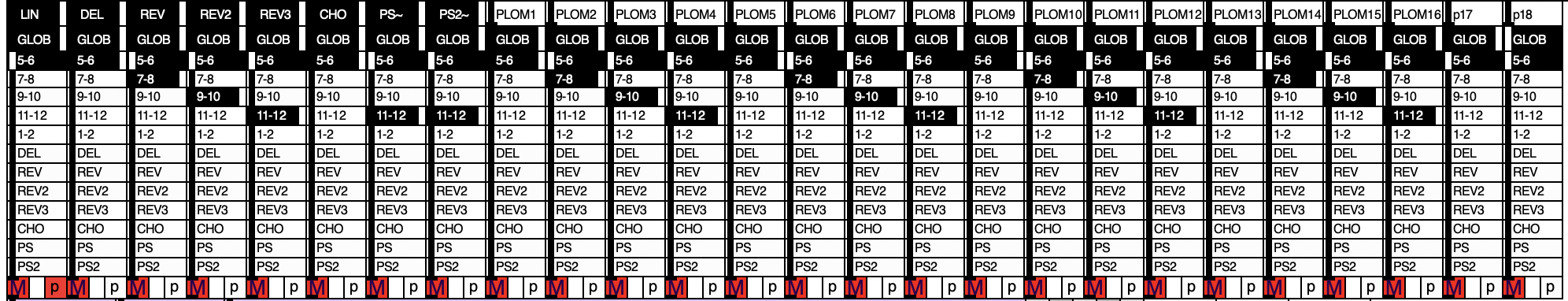

how would you recreate this mixer channelstrip abstraction?

@oid said:

@esaruoho If you are trying to save CPU then I would just separate UI and DSP: run the UI in its own instance of PD and the DSP in another, and communicate between them with [netsend]/[netreceive]. There comes a time in PD when you just have to either separate UI and DSP or sacrifice. Beyond that it is difficult to say, I do not know what your needs are and what sacrifices are acceptable. What are your requirements? I can optimize these patches in a dozen different ways but I have no idea if any of them will meet your needs since I do not know what your needs are or even why you are trying to change things beyond a vague notion of there possibly being something better.

ok. i have heard about this UI DSP separation. so.. is it a case of every single slider for instance needs to do a [netsend] to some other abstraction that only does DSP? or have two separate patches altogether, one for UI and one for DSP? sorry, i'm really vague on this.

So, my main pain point with the current channelstrip: I set up 16 "LFOs" to "breathe" the volume slider up and down (the slider also changes color as it moves from 0 to 1). When more than about 4 of these LFO counters are cycling from 0...1 on the channelstrips, all sliders stop updating. The threshold might be 4, 8 or 12, but by the time i try to get all 16 looper volumes cycling from 0...1, they have stopped (my "breather" can be set anywhere from "min-max every 2 seconds" to "min-max every 60 seconds"). That makes me think the UI is not as optimized as it could be.

i keep hearing that ELSE library supposedly has some larger crossmatrix/channelstrip that i could use, but i have not looked at it.

so ideally i'd prefer to take the channelstrip even further, i.e. have all effect sends be modulatable (well, that word where the effects have LFO modulation) so i could have even larger more complex things going on where the channelstrips that receive audio from loopers, are sent to specific sends like reverbs, delays and so on. this kind of larger modulation on all parameters would be really useful.

the way the channelstrip is built is that the dwL and dwR go to a separate [diskwrite~] abstraction which i use to record audio. the "p" (and p1-p8) defines that any channelstrip that has p enabled, is sending to all the other loopers - so in that case, any audio that goes to a channelstrip, can be sampled into the 64kb loopers (16 of them).

also i have the channelstrip labels (i.e. volume slider name) dynamically written to, so i can always have them easily understandable / informative.

here's the "init state". i use bunches and bunches of loadbang -> message -> senders to get them to a specific state.

one thing i've been toying around as an idea is to incorporate highpass and lowpass filters to the channelstrips, and EQ. but i haven't figured out how to solve the EQ issue so that the single band peak EQ could be incorporated into such a small formfactor ( the width is only 38 and height is 189 so it's hard to conceive how an EQ could be usable there).

i also have for the 16 channelstrip looper instances, BCR2000 + BCF2000 volume slider control and so on. but i'm willing to take the whole setup "to the dock" and rewrite it, if i could be sure that it will be lighter on the cpu..

so yeah, i do only have a vague notion of "maybe it could be better, i'm not sure?".. but i've not yet seen much netsend + netreceive stuff so i'm not entirely familiar how it could be done and how much i need to rewrite (i.e. does everything need to be netsend+netreceived with a complete split of DSP and UI to reap the benefits, or can i just try it with the 16 loopers and see what happens?)
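To make the UI/DSP split a bit more concrete: [netsend] and [netreceive] exchange FUDI messages, i.e. space-separated atoms terminated by a semicolon. Below is a minimal Python sketch of what a UI-side process could send for one slider change. The selector `vol`, the port 3000, and the helper names `fudi_message` and `send_to_dsp` are hypothetical, and the sketch assumes a TCP [netreceive 3000] in the DSP instance.

```python
import socket


def fudi_message(*atoms):
    """Encode atoms as one FUDI message: space-separated, ';'-terminated."""
    parts = []
    for atom in atoms:
        if isinstance(atom, float):
            parts.append(f"{atom:g}")  # compact float text, e.g. 0.5
        else:
            parts.append(str(atom))
    return (" ".join(parts) + ";\n").encode("ascii")


def send_to_dsp(messages, host="127.0.0.1", port=3000):
    """Send pre-encoded FUDI messages to a [netreceive <port>] (TCP assumed)."""
    with socket.create_connection((host, port)) as conn:
        for message in messages:
            conn.sendall(message)


# One message per changed slider: selector, channel index, value.
example = fudi_message("vol", 3, 0.5)
```

On the DSP side such a message would typically go through something like [route vol] to reach the right channel, so only the controls that cross the boundary need a sender. This suggests the split can be tried on the 16 loopers first.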

Collection of Pd internal messages, dynamic patching

An explanation of [donecanvasdialog( for canvas properties, including and most important graph-on-parent:

@Maelstorm wrote:

You can use [donecanvasdialog( to change the gop properties. When you right-click on a patch and choose Properties, all of that stuff is contained in the [donecanvasdialog( message. The format is this:

[donecanvasdialog <x-units> <y-units> <gop> <x-from> <y-from> <x-to> <y-to> <x-size> <y-size> <x-margin> <y-margin>(

The gop argument is 0 = gop off; 1 = gop on, show arguments; and 2 = gop on, hide arguments.
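As an illustration of the argument order above, here is a small Python sketch that assembles the message text from named fields. The helper name `donecanvasdialog` and the example values are hypothetical; only the field order and the three gop values come from the description above.

```python
def donecanvasdialog(x_units, y_units, gop, x_from, y_from, x_to, y_to,
                     x_size, y_size, x_margin, y_margin):
    """Build the text of a donecanvasdialog message in the order given above."""
    if gop not in (0, 1, 2):
        raise ValueError("gop must be 0 (off), 1 (show args) or 2 (hide args)")
    fields = (x_units, y_units, gop, x_from, y_from, x_to, y_to,
              x_size, y_size, x_margin, y_margin)
    return "donecanvasdialog " + " ".join(str(field) for field in fields)


# Arbitrary example: GOP on with arguments hidden, a 100 x 60 pixel window.
message = donecanvasdialog(1, -1, 2, 0, 0, 1, 1, 100, 60, 0, 0)
```

The resulting string is what would go inside the message box (or be sent to the target canvas).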

And there is a phenomenal patch-demo reposted by @whale-av

https://forum.pdpatchrepo.info/topic/5746/change-graph-on-parent-dimentions-from-inside/2

thx

Copying data from one buffer array to another buffer array

@emviveros said:

Seems like maxlib/arraycopy have a special implementation for copy entire arrays.

I think it depends on the number of elements being copied.

maxlib/arraycopy accepts start/size parameters with every copy request -- so it has to validate all the parameters for every request (https://github.com/electrickery/pd-maxlib/blob/master/src/arraycopy.c#L81-L166). This is overhead. The copying itself is just a simple for loop, nothing to see here.

[array get] / [array set], AFAICS, copy twice: get copies into a list, and set copies the list into the target.

So we could estimate the cost of arraycopy as: let t1 = time required to validate the request, and t2 = time required to copy one item, then t = num_trials * (t1 + (num_items * t2)) -- from this, we would expect that performance would degrade for large num_trials and small num_items, and improve the other way around.

And we could estimate the get/set cost as num_trials * 2 * t2 * num_items. Because both approaches use a simple for loop to copy the data, we can assume t2 is roughly the same in both cases. (Although... the Pd source de-allocates the list pointer after sending it out the [array get] outlet so I wonder if the outlet itself does another copy! No time to look for that right now.)

If you have a smaller number of trials but a much larger data set, then the for loop cost is large. Get/set incurs the for cost twice, while arraycopy does it once = arraycopy is better.

In the tests with 100000 trials but copying 5 or 10 items, the for cost is negligible but it seems that arraycopy's validation code ends up costing more.
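The two estimates can be written down directly. This is only a sketch of the cost model described above; the values t1 = 50 and t2 = 1 (arbitrary time units) are made up for illustration and are not measurements.

```python
def arraycopy_cost(num_trials, num_items, t1, t2):
    """Validate once per request (t1), then copy each item once (t2)."""
    return num_trials * (t1 + num_items * t2)


def get_set_cost(num_trials, num_items, t2):
    """No validation term, but every item is copied twice (get, then set)."""
    return num_trials * 2 * t2 * num_items


def break_even_items(t1, t2):
    """arraycopy wins once num_items exceeds t1 / t2 (from t1 + n*t2 < 2*n*t2)."""
    return t1 / t2


T1, T2 = 50, 1  # hypothetical costs, arbitrary units

# Many trials, tiny copies: validation dominates, get/set is cheaper.
small = (arraycopy_cost(100000, 5, T1, T2), get_set_cost(100000, 5, T2))

# Few trials, large copies: the double copy dominates, arraycopy is cheaper.
large = (arraycopy_cost(100, 100000, T1, T2), get_set_cost(100, 100000, T2))
```

Under this model the crossover depends only on the ratio t1 / t2, not on the number of trials.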

hjh

samplerate~ delayed update

@seb-harmonik.ar said:

@alexandros in one case you're telling pd to start DSP

In the other pd is telling you that it has indeed been started

"telling pd to start DSP" = [s pd]

But here, the message is coming out of [r pd] -- so it isn't telling pd to start DSP. (Don't get confused by the "; pd dsp 1" -- to demonstrate the issue, it is of course necessary to start DSP. But the troublesome chain begins with an [r].)

@alexandros said:

So this:

[r pd] | [route dsp] | [sel 1]

gives different results than this?

[r pd-dsp-started]

I guess this should be fixed as well? Shouldn't it?

Not necessarily.

"dsp 1" / "dsp 0," as far as I can see, only reflect the state of the DSP switch.

The pd-dsp-started "bang" is sent not only when starting DSP, but also any time that the sample rate might have changed -- for example, creating or destroying a block~ object. That is, they correctly recognized that "dsp 1" isn't enough. If you have an abstraction that depends on the sample rate, and it has a [block~] where oversampling is controlled by an inlet, then it may be necessary to reinitialize when the oversampling factor changes.

It turns out that this is documented, quite well actually! I missed it at first because [samplerate~] seems like such a basic object, why would you need to check the help?

hjh

Autogenerate onparent gui from abstraction patch

@FFW donecanvas.txt

You can use [donecanvasdialog( to change the gop properties. When you right-click on a patch and choose Properties, all of that stuff is contained in the [donecanvasdialog( message. The format is this:

[donecanvasdialog <x-units> <y-units> <gop> <x-from> <y-from> <x-to> <y-to> <x-size> <y-size> <x-margin> <y-margin>(

The <gop> argument is 0 = gop off; 1 = gop on, show arguments; and 2 = gop on, hide arguments.

You can [send] the message to the abstraction just like you would with dynamic patching. If it's an abstraction, I'd recommend using [namecanvas] so you can give it a unique $0 name. Also, you should immediately follow it with a [dirty 0( message, otherwise you'll get some annoying "do you want to save" dialogs popping up:

[donecanvasdialog <args>, dirty 0( [namecanvas $0-myabs]

|

[send $0-myabs]

VVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVVV

AND [coords( can be more easily used than donecanvasdialog for graphs.

https://puredata.info/docs/developer/PdFileFormat

But you will still need to place the guis that you want to show through the gop window within its range.

I can't see how that could be automated...... but most things are possible in Pd one way or another.

Maybe using [find( [findagain( messages to the abstraction followed by an edit+move, but then the parent would need to know the names of the guis required.

The Python parse method might work better for that?
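A rough Python sketch of that parsing idea: read the abstraction's .pd text, pick out the top-level GUI objects, and compute the rectangle the GOP window would have to cover. It only knows hsl, vsl, tgl and bng, it assumes their size arguments come first after the class name (as in the files Pd saves), and the function name `gui_bounding_box` is made up. Labels, number boxes and nested GOPs are ignored.

```python
import re

# Pixel size (width, height) taken from the creation arguments.
# Only these four GUI classes are handled in this sketch.
GUI_SIZE = {
    "hsl": lambda args: (int(args[0]), int(args[1])),
    "vsl": lambda args: (int(args[0]), int(args[1])),
    "tgl": lambda args: (int(args[0]), int(args[0])),
    "bng": lambda args: (int(args[0]), int(args[0])),
}


def gui_bounding_box(patch_text):
    """Return (left, top, right, bottom) around top-level GUIs, or None."""
    boxes = []
    depth = 0  # 1 = the patch itself, 2 and up = subpatches
    for record in re.split(r"(?<!\\);", patch_text):  # skip escaped "\;"
        tokens = record.split()
        if len(tokens) < 2:
            continue
        if tokens[:2] == ["#N", "canvas"]:
            depth += 1
        elif tokens[:2] == ["#X", "restore"]:
            depth -= 1
        elif (depth == 1 and tokens[:2] == ["#X", "obj"]
              and len(tokens) > 5 and tokens[4] in GUI_SIZE):
            x, y = int(tokens[2]), int(tokens[3])
            width, height = GUI_SIZE[tokens[4]](tokens[5:])
            boxes.append((x, y, x + width, y + height))
    if not boxes:
        return None
    return (min(b[0] for b in boxes), min(b[1] for b in boxes),
            max(b[2] for b in boxes), max(b[3] for b in boxes))


EXAMPLE_PATCH = """#N canvas 0 50 450 300 12;
#X obj 10 20 vsl 15 128 0 127 0 0 empty empty empty 0 -9 0 10 -262144 -1 -1 0 1;
#X obj 40 20 tgl 15 0 empty empty empty 17 7 0 10 -262144 -1 -1 0 1;
#X obj 100 200 osc~ 440;
"""
box = gui_bounding_box(EXAMPLE_PATCH)
```

The resulting rectangle could then feed the size and margin fields of the [donecanvasdialog( or [coords( message, which avoids moving the GUIs around by hand.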

David.

20 mode pole mixing and morphing filter. Updated

@oid said:

What are you using to measure CPU?

I am just counting on pd's native [cputime] object as you can see in the screenshot. I also have a widget in my task bar that graphs cpu cores and memory usage, but I only use that to tell me when it's time to close some tabs in my browser and whatever programs I have left open. I just realized I can mouse hover the widget and get a reading.

with htop I get ~8% cpu use (6% being pd's DSP) for [lop~] and ~14% for [rpole~].

That is when running the entire morphing filter, right?

Is your computer that ancient or is the CPUs governor not kicking up to the next step with the low demands of your test patch?

Nah. Bought it last Christmas: 3.5 GHz / 4 cores / 16 GB RAM. I just have a lot of stuff going on at the same time (gimp, geogebra, pd, multiple tabs in firefox, various documents).

I tend to test audio stuff with the governor set on performance since most any practical audio patch will kick it up to the highest settings anyways, gives a more accurate result.

I rarely run into problems even with all my excess of open garbage haha. I did have pd freeze on me a couple of times yesterday while experimenting- but that was due to sheer recklessness

s~/r~ throw~/catch~ latency and object creation order

IIRC (sorry if i'm wrong).

Building the DSP chain means doing a topological sort to linearize the graph of the DSP objects.

For that, all the DSP objects of the topmost patch are collected in reverse order of their creation. Then the first node in the collection that has no DSP input is fetched. The graph is explored depth-first (outlet by outlet), appending the DSP method/operation of the connected objects to the DSP chain. For a node to be appended, all of its inlets must already have been visited. Otherwise the algorithm stops and fetches the next outlet, or the next object in the collection, and restarts the exploration from it. That process is done recursively in the subpatches it discovers.
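Here is a simplified Python sketch of the ordering described above, written from this description and not from the Pd or Spaghettis sources. Subpatches and individual inlets/outlets are left out: each connection is just a (source, destination) pair, and the object names in the example are made up.

```python
def build_dsp_chain(creation_order, connections):
    """Linearize a DSP graph: a node runs only after all its inputs have run."""
    outgoing = {node: [] for node in creation_order}
    pending_inputs = {node: 0 for node in creation_order}
    for source, destination in connections:
        outgoing[source].append(destination)
        pending_inputs[destination] += 1

    chain = []
    scheduled = set()

    def schedule(node):
        chain.append(node)
        scheduled.add(node)
        # Depth-first: follow each connection as far as it can go right now.
        for destination in outgoing[node]:
            pending_inputs[destination] -= 1
            if pending_inputs[destination] == 0:
                schedule(destination)
            # Otherwise stop here; another source will resume this branch.

    # Start from nodes without signal input, most recently created first.
    for node in reversed(creation_order):
        if node not in scheduled and pending_inputs[node] == 0:
            schedule(node)
    return chain


order = ["osc", "mul", "dac", "noise"]  # creation order (hypothetical patch)
wires = [("osc", "mul"), ("noise", "mul"), ("mul", "dac")]
chain = build_dsp_chain(order, wires)
```

Objects that are not linked by a signal connection, such as an [s~]/[r~] pair, are ordered only by where the traversal happens to start. In this sketch that depends on creation order.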

Notice that it is not easy to explain (and i don't have it fresh in mind). The stuff in Spaghettis is there. AFAIK it is more or less the same that in Pure Data (linked in previous post).

I can bring details back in my head, if somebody really cares.

You can also watch the beginning of that video of Dave Rowland about Tracktion topological process.

EDIT: The first ten minutes (the rest is not relevant).

WARNING: the way things are done in that talk is NOT how it is done in Pd.

It is just an illustration of the problem needed to be solved!

Introducing Tracktion Graph: A Topological Processing Library for Audio - Dave Rowland - ADC20

@whale-av said:

@jameslo I agree. I did report a bug once, but have never dared ask a question on the list.

The list is the domain of those creating Pd. I often read what they have to say, but I would not dare to show my face.

David.

It is really sad to see experienced users intimidated by developers.

But at the same time i totally understand why (and i still have the same feeling).

Paradigms useful for teaching Pd

@ingox Ha, I guess this highlights the number 0 paradigm for Pd-- always measure first!

It looks to me like message-passing overhead dwarfs the time it takes to copy a list once into [list store]. That single full list-copy operation (a for loop if you look in the source) is still significant-- about 10% of total computation time when testing with a list of 100,000 elements.

However, if you remove all message-passing overhead and just code up [list drip] as a core class, you consistently get an order of magnitude better performance.

So we have:

1. original list-drip: slow, but I can't find the code for it. But I hope it was just copying a list once before iteration, and not on every pass.

2. matju's insane recursive non-copy list-drip: faster than the original implementation

3. [list store] implementation of [list-drip]: looks to be consistently 3 times faster than number 2 above, lending credence to the theory that message-passing overhead is the significant factor in performance. (Well, as long as you aren't copying the entire list on every pass...)

4. Add core class [list drip] to x_list.c and use a for loop to output each element from the incoming vector of atoms without copying anything. I quickly coded this up and saw a consistent order of magnitude performance improvement over the [list store] implementation. (Didn't test with gpointers, which will probably slow down every single implementation listed here.)
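To illustrate the "copying the entire list on every pass" caveat, here is a toy Python comparison. It is not the Pd code and says nothing about message-passing overhead. It only counts how many elements get copied when the remainder of the list is re-copied on each pass, versus a single loop over the original. Both function names are made up.

```python
def drip_with_copies(items):
    """Output elements one by one, re-copying the remainder on every pass."""
    output = []
    elements_copied = 0
    rest = items
    while rest:
        output.append(rest[0])
        rest = rest[1:]               # slicing copies the whole remainder
        elements_copied += len(rest)
    return output, elements_copied


def drip_in_place(items):
    """Output elements with one loop over the original list, no copies."""
    output = []
    for item in items:
        output.append(item)
    return output, 0


copied_output, copied_count = drip_with_copies([1, 2, 3, 4])
plain_output, plain_count = drip_in_place([1, 2, 3, 4])
```

For n elements the copying version moves n(n-1)/2 elements in total, so the cost grows quadratically with list length. That is why a single copy before iterating barely registers while a copy on every pass would.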

I think Matt Barber even had a case where removing a [trigger] object and replacing it with bare wires (and just depending on creation order) generated enough of a performance difference in a complex patch to warrant doing it. (Edit: this may have been in an abstraction, in which case the speedup would be multiplied by the total number of abstractions used...)

It's too bad that message-passing overhead makes that much of a difference. And while I can think of a potential speedup for the case of [trigger], I'm not sure there's a simple way to boost message passing performance across the board.

Few questions about oversampling

Thanks guys for the tips and links. I will look into them to figure out the best trick for getting a cleaner sound with the least CPU usage possible. I've actually understood why my subtractive/additive bandlimited oscillators had some noise/clicking: it has nothing to do with aliasing but with bad signal use in my design, which I could fix easily.

Then, while running my oscs with antialiasing/oversampling, I didn't notice an audible difference with or without it, at least for the subtractive and additive synthesis. For FM synthesis I ran different tests and got a good CPU use/antialiasing trade-off when oversampling two times. To get the best performance possible I could apply the antialiasing method only to my FM osc and not to my bandlimited oscillators.

I've also tested increasing my block values and the results are interesting, though I've heard that doing so increases latency. Since I want to make a patch meant for live performance, it could become an issue if I rely on that to lower my CPU use. I might still find a solution to the aliasing by using a low-pass filter, which could be a good alternative to the antialiasing method I used.
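For a rough idea of the latency at stake, here is a back-of-the-envelope Python sketch. It assumes the extra delay is about one block's duration (block size divided by sample rate) and a 44100 Hz sample rate. The actual figure depends on the audio settings and on how the reblocking is set up.

```python
def block_latency_ms(block_size, sample_rate=44100.0):
    """Duration of one block in milliseconds (block_size / sample_rate)."""
    return 1000.0 * block_size / sample_rate


default_block = block_latency_ms(64)    # Pd's default block size
large_block = block_latency_ms(1024)    # a much larger block
```

Under these assumptions 64 samples is roughly 1.5 ms while 1024 samples is roughly 23 ms, which starts to matter for live playing.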

@gmoon I've used pd~ once and I don't know if I implemented it poorly or if the object isn't yet ready to make good use of multiple cores, but when I used it pd~ multiplied the CPU use of the patch I was working on by four. From my experience I wouldn't recommend pd~ for CPU optimization, but maybe there's someone out there who knows how to use it properly and has successfully divided their sound processing within pd.