-

ddw_music

posted in technical issues

External loading is one of those things where there are a hundred ways to mess it up.

After struggling with it for a while myself, I came up with a few practices that remove some variables, thus remove some risk.

- Only install externals in the "pd externals install directory" (found in the preferences panel). Do not ever install externals in any other location. Deken (IIRC) downloads externals here, so that's fine.

- Actually, on my system, Gem is an exception since I'm using the Ubuntu package instead of installing from deken. But to avoid the types of complications that I ran into before, I symlinked Gem into my pd externals install location: under Documents/Pd/externals/, there is a Gem directory, but it's a link pointing to /usr/local/lib/pd/extra/Gem. So I access it through the externals install directory.

- Pd "path" should list only the externals install directory -- my path list is "/home/xxx/Documents/Pd/externals" and nothing else. So I always know where to start looking.

- Use [declare -path xxx] to add library paths, and "-lib yyy" to init libraries like Gem.

- Isn't that a pain to have to do that in every patch? Well, not really... and the benefit is that you can search the patch for any declare objects used in the main patch file or abstractions to quickly get an inventory of which externals need to be transferred.
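The inventory idea in the last point can be sketched in a few lines of Python. This is only an illustration, not part of Pd: it assumes that [declare] objects appear in the saved patch text either as `#X declare ...;` or as `#X obj <x> <y> declare ...;`, and the name `find_declares` is made up for this example.

```python
import re

# Assumed text forms of a [declare] object in a saved .pd file:
#   #X declare -path foo -lib Gem;
#   #X obj 50 50 declare -path foo -lib Gem;
DECLARE_RE = re.compile(r"#X (?:obj -?\d+ -?\d+ )?declare\s+([^;]*);")


def find_declares(patch_text):
    """Return a sorted list of (flag, value) pairs, e.g. ('-lib', 'Gem')."""
    found = set()
    for match in DECLARE_RE.finditer(patch_text):
        tokens = match.group(1).split()
        # Flags and values alternate: -path xxx -lib yyy
        for flag, value in zip(tokens[0::2], tokens[1::2]):
            found.add((flag, value))
    return sorted(found)


# Hypothetical patch text, used only to exercise the function.
example = """#N canvas 0 0 450 300 12;
#X declare -path mylib -lib Gem;
#X obj 30 30 declare -path mylib -lib Gem;
#X obj 30 80 osc~ 440;
"""
print(find_declares(example))
```

Run over the main patch and each abstraction file, this would give one list of the paths and libraries that have to travel with the project.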

If you follow some streamlined practices for external management, then it removes a lot of speculation and worry. Recompiling? No... Or, "Mac is doing things to make the folder not findable" -- it's a user folder under Documents; if Mac is hiding folders under Documents without the user's permission, that would be pretty bad -- so that's a concrete example showing how you benefit from putting externals in a consistent location that is expected to be under your control, not the system's control.

My "rules" might seem too strict but I arrived at this because being haphazard about external installation caused a ton of headaches for me early on. Those problems don't exist for me anymore.

hjh

-

ddw_music

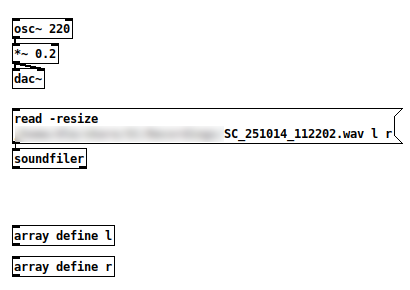

posted in technical issues

Well, if you load a large audio file while sound is playing, you can get dropouts then too.

That's a 97.3 MB file -- "audio I/O error." Only vanilla objects in the patch. Sending that read message does glitch the audio.

So this isn't "a Gem issue."

Since Pd is single-threaded and the control message layer runs within the audio loop, heavy activities in the control message layer can cause audio processing to be late. Reading a hundred MB of audio?

Opening a window and preparing it for graphics rendering?
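To make the timing argument concrete, here is a toy model in Python. It is not Pd's scheduler: it simply assumes one thread that does control work and then DSP for each block, a 64-sample block at 44.1 kHz (about 1.45 ms), and a made-up 25 ms output buffer. All names and numbers are illustrative assumptions.

```python
def count_late_blocks(control_ms, block_ms=1.45, dsp_ms=0.3, buffer_ms=25.0):
    """Count blocks that a single thread finishes after the hardware needs them.

    control_ms[i] is the control-layer work (in ms) done just before block i.
    Block i is due at buffer_ms + i * block_ms.
    """
    clock = 0.0
    late = 0
    for i, control in enumerate(control_ms):
        # The thread does not start block i before its scheduled tick.
        clock = max(clock, i * block_ms)
        # Same thread: control messages first, then the DSP for this block.
        clock += control + dsp_ms
        if clock > buffer_ms + i * block_ms:
            late += 1
    return late


quiet = [0.0] * 1000
stall = list(quiet)
stall[100] = 500.0  # hypothetical 500 ms of file reading in the control layer

print(count_late_blocks(quiet))
print(count_late_blocks(stall))
```

In this model a single long control task makes not just one block late but every block after it until the thread has caught up, which is the kind of audible gap described above.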

AFAIK the available solutions are: A/ structure your environment so that all heavy initialization takes place before you start performing -- i.e., start Gem before your show, not during. Or B/ Put Gem in a different Pd process (which doesn't have to be [pd~] -- you could also run a second, completely independent Pd instance and use OSC to communicate between them). (Smart-alecky suggestion: Or C/ Move your audio production over to SuperCollider, whose audio engine shunts heavy loading off to lower-priority threads and whose control layer runs in a separate process, so it doesn't suffer this kind of dropout quite so easily.)

"Except that dropout when you create and destroy. Wish that could be changed" -- sure, but the issue is baked into Pd's fundamental design, and would require a radical redesign (of Pd) to fix properly.

hjh

-

ddw_music

posted in technical issues

@jamcultur said:

It looks like the feature request on github that @oid mentioned would fix the problem in a much cleaner way.

I would not be too optimistic about that feature request -- its current state seems to be: OP: "hey, this would be nice"; dev: "here are some reasons why this won't work"; OP: "oh come on, it would be nice"; dev: "here's another couple reasons why it won't work" ... which is kind of the same form as the thread here.

Well, the github issue ends with "let me try it in an external" but my prediction is that this effort will quickly run into trouble. It's not an easy problem.

hjh

-

ddw_music

posted in technical issues

... whereby, a reliable solution would be that an abstraction using another abstraction needs to initialize the sub-abstraction by sending messages to it. That is:

- Main patch loads abstraction xyz

- xyz loads abstraction abc

- abc initializes itself by [savestate] using the defaults saved in xyz.pd

- xyz gets data from [savestate]

- xyz, as part of its init, sends messages to the [abc] instance to override the earlier init

Then xyz.pd is responsible for sending the right data to the right instances, avoiding ambiguity.

hjh

-

ddw_music

posted in technical issues

@jamcultur said:

If that abstraction is imbedded within another abstraction, the state isn't saved and restored properly. This seems like a bug to me.

Whether it's a bug or not, I don't know, but it certainly is a data structure design problem, and I don't think it has an easy solution without radically redesigning the pd patch file structure.

When you use an abstraction in the top level of a patch, and that abstraction contains a [savestate], then the saved file includes two instructions for that abstraction, for instance:

    #X obj 568 309 fader~ helpSrcA;
    #A saved helpSrcA -18.5355 0 helpMain 0;

"#X obj" is an instruction to load the "fader~" abstraction from disk. "#A saved" contains the list that will be sent to [savestate] for initialization.

The scenario here, then, is that "fader~" loads an inner-level abstraction from disk, and this inner-level abstraction itself has a [savestate]. So somewhere there needs to be a unique "saved" instruction that may be different from the default "saved" message in the abstraction file.

You can't save a "different from the abstraction file" saved-list in the abstraction file itself, for obvious reasons.

If you assume (as this thread seems to assume) that every [savestate] all the way down the chain should put a "saved" line into the top level file, how do you find the target for each of these "saved" lines?

If my "xyz" abstraction contains 4 references to "abc.pd" and "abc.pd" has a [savestate], then (per the assumption) there should be 1 "saved" line for xyz (let's label it s1) and 4 of them for abc (s2 - s5). S1's target is easy, but for s2-s5, you have 4 data lists and 4 targets. How to match them up? A naive solution might be to go by file order, but saving a pd patch can sometimes change the order in which objects are written out. If you modify the xyz abstraction in this way, this will not automatically reorder the saved lines in the top level file -- after that point, then, when you load your top level file, you will find the wrong data associated with the inner-level abstraction instances.
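Here is a toy Python model of that mismatch. It does not reflect how Pd actually stores or loads anything; the instance names and values are invented, and `match_by_order` only stands in for the naive "go by file order" idea.

```python
def match_by_order(saved_lists, instances):
    """Naively pair the k-th saved list with the k-th instance in file order."""
    return dict(zip(instances, saved_lists))


# Four hypothetical [abc] instances inside xyz.pd, in the order they were written.
order_when_saved = ["abc_gain", "abc_pan", "abc_cutoff", "abc_mix"]
saved = [[-18.5], [0.5], [1200.0], [0.3]]  # s2-s5, stored in the top-level file

before = match_by_order(saved, order_when_saved)

# Later, xyz.pd is edited and re-saved, and the objects come out in a new order.
# The top-level file still holds s2-s5 in the old order.
order_after_edit = ["abc_pan", "abc_gain", "abc_cutoff", "abc_mix"]
after = match_by_order(saved, order_after_edit)

print(before["abc_gain"])  # [-18.5]
print(after["abc_gain"])   # [0.5], the value that was meant for abc_pan
```

Nothing in the top-level file changed, yet two instances silently swapped their data.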

If Miller Puckette were to change the file structure so that each object has an explicit ID to which "saved" can refer, this still wouldn't solve the problem because, upon saving "xyz.pd," there is no way to seek out every pd patch that depends on xyz and redo the "saved" IDs.

It could perhaps be solved if pd copied all of the objects from abstractions into the top level file when saving, but then, if you fix a bug in an abstraction, the top level file won't pick up the change.

I think Puckette looked at the complexity of this problem, and decided that the best solution was to limit [savestate] to one level of abstraction.

Intuitively, you would think "of course this should work," but when you really look at how to implement it, it's very difficult or maybe even impossible.

hjh

-

ddw_music

posted in output~

Short clip -- perpetual live-audio granular time stretching, inspired by Shepard tones. An afternoon to build in SuperCollider, but then I found PluginCollider's automation features to be not quite mature. So, today, rebuilt in PlugData (which was a long way 'round just to get proper DAW automation, but we do what we must).

hjh

-

ddw_music

posted in technical issues

Most devices expect a clock-start message (250), and then the 24ppq series of 248 clock ticks, and 252 to stop.

Here's a patch I had made a while back, to test a MIDI-clock-in class I hacked up in SuperCollider. [pd runclock] has all the MIDI logic. You can ignore the OSC control and just click the toggle at the top. Set BPM at right.
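As a rough sketch of that byte sequence and its timing (this is Python for illustration, not the contents of the patch; the function names are made up), at 24 ticks per quarter note the interval between ticks is 60 / (BPM * 24) seconds:

```python
MIDI_START = 250  # 0xFA
MIDI_CLOCK = 248  # 0xF8
MIDI_STOP = 252   # 0xFC
PPQ = 24          # clock ticks per quarter note


def tick_interval_seconds(bpm):
    """Time between two consecutive clock ticks at the given tempo."""
    return 60.0 / (bpm * PPQ)


def clock_bytes(beats):
    """Status bytes for: start, 24 ticks per beat, stop."""
    return [MIDI_START] + [MIDI_CLOCK] * (beats * PPQ) + [MIDI_STOP]


print(tick_interval_seconds(120))  # about 0.0208 s between ticks at 120 BPM
print(len(clock_bytes(1)))         # 26: start + 24 ticks + stop
```

A real sender would emit one tick per interval from a steady timer rather than building the whole list up front.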

It uses pddp/ezoutput~ for an audio metronome; you can just delete that bit, if you don't need to hear a metronome.

hjh

-

ddw_music

posted in technical issues

@witteruis said:

i get an inlet: expected 'float' but got 'bang' error.

One problem is the connection from [sel] to the right inlet of [spigot]. This right inlet must be 0 to close it, or nonzero to open it. "Bang" doesn't mean anything to it.

You'll have to use the [sel] outputs to trigger message boxes with 0s and 1s, or work out a different kind of modulo logic.

hjh

-

ddw_music

posted in technical issues

@playinmyblues said:

In my op, I provide what I used to install it but I think that was just for Ruby.

Actually not:

    $ apt show gem
    ... skipped some stuff...
    Description: Graphics Environment for Multimedia - Pure Data library

So it's definitely gem for pd.

I could not find the library anywhere on my Raspberry Pi...

I always forget this and I always have to do a google search for "linux apt list installed files," but:

    $ dpkg -L gem
    ... long list of files, including a lot of them like this:
    /usr/lib/pd/extra/Gem/GEMglBegin-help.pd
    /usr/lib/pd/extra/Gem/GLdefine-help.pd
    /usr/lib/pd/extra/Gem/Gem-meta.pd
    /usr/lib/pd/extra/Gem/Gem.pd_linux

... where I pasted these specifically because the last one, Gem.pd_linux, is the actual library that gets loaded when you `[declare -lib Gem]`.

Then you can infer that `/usr/lib/pd/extra/Gem` is the location to which the package is installed. (The point being that even in a command-line environment, there are ways to find out what was installed where. You might have to do some searching to find the commands. BTW I'm not using rpi now but its OS is based on debian, so these commands "should" be OK.)

As I see it, there are just a few possibilities:

- (1) Maybe `sudo apt install gem` didn't install it properly. That seems unlikely (but, worth it to `apt show gem` to check the package metadata).
- (2) Or, maybe `sudo apt install gem` did install it properly. That divides into a couple of sub-cases:
  - (2a) Maybe Pd can't find the package. If that's the case, then what I would do is: go to the pd path preferences and make sure `/usr/lib/pd/extra` is in the list. Then [declare -lib Gem] should find it.
  - (2b) Or maybe the package is incompatible with RPi. In that case, everything would look right environmentally but the package simply wouldn't load.

For 2a, everybody -- everybody -- gets tripped up on pd external loading. For me, the best strategy has been to keep the Pd "path" preferences as simple as possible -- `/home/your_user_name/Documents/Pd/externals` for user-specific externals, and maybe add `/usr/lib/pd/extra` for systemwide packages -- and nothing else. Then the Gem/ root folder would go directly into one of these, e.g. `/usr/lib/pd/extra/Gem`. I rigorously avoid putting externals in any other locations.

Then, use a [declare] in the patch. [declare -lib Gem] tries `path ++ "/Gem"` for each of the entries in your path preferences. If you have kept things in standardized locations, this will work.

Maybe try this with a different external package first, one that is RPi compatible, to make sure that you understand the mechanics. (I've seen a lot of threads where people were haphazard about external installation and got tied up in knots. It's really worth some time to get it right, to be certain that this case can be ruled out.)
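The `path ++ "/Gem"` lookup can be pictured with a short Python sketch. This is a simplified model of the idea, not Pd's actual loader (which, as far as I know, also tries other locations and file extensions); `find_library` and the `.pd_linux` default are assumptions for the example.

```python
import os
import tempfile


def find_library(search_paths, lib_name, extension=".pd_linux"):
    """Return the first existing <path>/<lib>/<lib><ext>, or None."""
    for base in search_paths:
        candidate = os.path.join(base, lib_name, lib_name + extension)
        if os.path.isfile(candidate):
            return candidate
    return None


# Self-contained demo: a temporary directory stands in for /usr/lib/pd/extra.
with tempfile.TemporaryDirectory() as fake_extra:
    os.makedirs(os.path.join(fake_extra, "Gem"))
    open(os.path.join(fake_extra, "Gem", "Gem.pd_linux"), "w").close()

    found = find_library(["/nonexistent/externals", fake_extra], "Gem")
    missing = find_library(["/nonexistent/externals"], "Gem")
    print(found is not None, missing)
```

With only one or two entries in the path list, there are only one or two candidates to check by hand when something fails to load.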

For 2b, if there is no pre-compiled package available for RPi, the only alternative is to compile it yourself, unfortunately. The `./configure` script should tell you if that's supported for your machine or not.

sudo installs gem for you

Well, not exactly. `sudo` is "superuser do," which runs a command with elevated privileges so that, for instance, files can be installed in system executable locations that are normally write-protected. `sudo` itself doesn't install anything. `sudo apt install gem` would install Gem. `apt` is the package manager -- this is the component that actually installs it.

I mean, now I see what you were trying to say, but there were enough pieces left out of your comment that the meaning was unclear.

hjh

-

ddw_music

posted in technical issues

@willblackhurst said:

I only ever got gem to work from sudo

With all due respect, but... whaaa? That's not how Gem runs.

If you install a VST plugin, you don't run it at the command line. It will never work that way. Gem is a Pd plugin. It can't run without a Pd host. Sudo isn't a Pd host.

the cia people dont even do anything someone else installs it for them

I'm at some pains to understand the relevance, but, oh-kay.

hjh