I remember someone posting a link to a site that had this compiled executable labelled as an "experimental release", but I can't find it now. Maybe it was on an IEM page? If you know, please tell me where I could download it from.

-

Pd compiled for double-precision floats and Windows

-

yes

12 years ago:

[PD] why does PD round numbers? (in tables, in messageboxes, etc)

https://lists.puredata.info/pipermail/pd-list/2012-04/095892.html

-

I wouldn't treat Pd as a 'proper language'

-

@porres Why not? Or do you just mean it is not safe to expect what is generally safe to expect in programming languages?

-


@porres said:

I also did, and I think we can raise a discussion on the list on why can't we have the best precision possible for both single and double

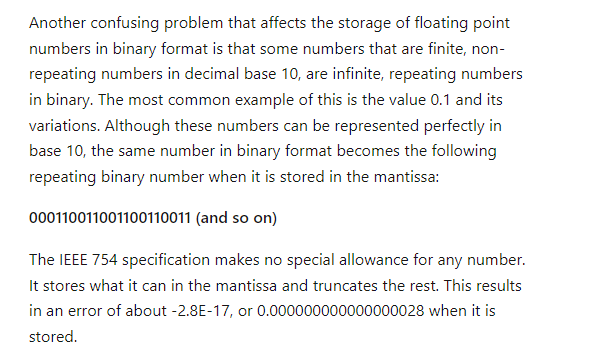

FWIW: Some years ago, SuperCollider changed double-to-string rendering to 17 digits of precision (sc language uses double precision, while audio is single precision), and... users freaked out because suddenly e.g. 1/10 would print out as 0.10000000000000001. "But it's not exact, where are those extra digits coming from" -- many many user complaints, until we reverted that change and now print 14 digits instead.

That is, "best precision" may not be the same as maximum precision.

Though I'd agree that clamping doubles to single precision in object boxes seems a pointless restriction.

hjh

-

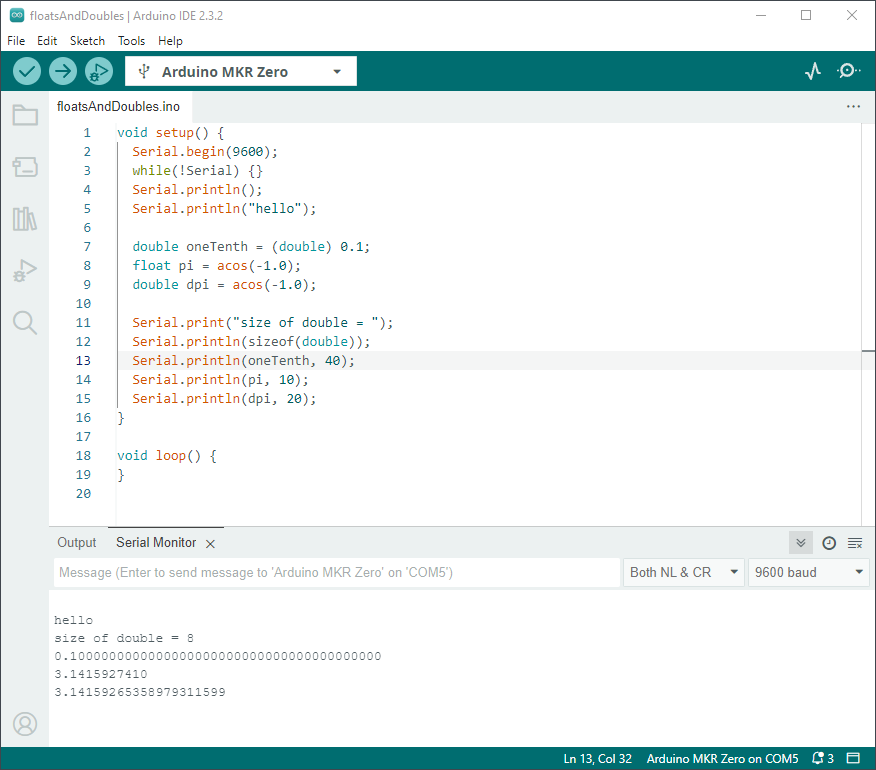

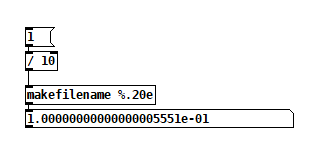

@ddw_music I love that story but am scratching my head over the 1/10 example you gave. Here's a test I made in Arduino c++:

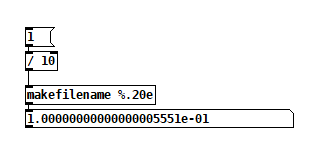

I went out 40 digits and didn't see anything unexpected. Was that example you gave just a metaphor for the issue, or is my test naive?

Getting back to how Pd seems to differ from other programming languages, I'm going to hazard a guess and say that Pd hasn't separated the value of a float from its display/storage format. In my Arduino code I declare values, but it's not until the print statements that I specify their display formats. It's similar in Java and C#. I wonder if Pd could do something similar without upsetting users or making it too unfriendly? Maybe number boxes and arrays could have an extra property that is a numeric format string? Or set messages for message boxes could end in a numeric format string? Format strings shouldn't be too unfamiliar to those who've used makefilename. And if the format string is missing, then Pd defaults to its current single-precision truncation strategy?

PS I hope my attempt at humor didn't discourage @porres from responding to @oid's question. I'm sure he would have something more meaningful to contribute.

Edit: hmm, but here's Pd64.

Yet another edit: I found this in Microsoft Excel help, but it suggests the value should be slightly less, not more, than 0.1. Plus, Excel shows 0.1 exactly.

Yet another edit edit: disregard my comment about expecting it to be less; I didn't account for normalization of the mantissa. Carry on.

-

@jameslo said:

@ddw_music I love that story but am scratching my head over the 1/10 example you gave. Here's a test I made in Arduino c++: ... I went out 40 digits and didn't see anything unexpected. Was that example you gave just a metaphor for the issue, or is my test naive?

Not a metaphor at all:

```
[16, 17, 40].do { |prec| "% digits: %\n".postf(prec, 0.1.asStringPrec(prec)) };
```

```
16 digits: 0.1
17 digits: 0.10000000000000001
40 digits: 0.1000000000000000055511151231257827021182
```

As for Arduino, the float datatype reference says "Unlike other platforms, where you can get more precision by using a double (e.g. up to 15 digits), on the Arduino, double is the same size as float" -- so my guess here is that Serial places a limit on the number of digits it will try to render, and then fills the rest with zeros.

I went out 40 digits and didn't see anything unexpected.

Seeing zeros all the way out to 40 digits is unexpected! Arduino's output here is more comforting to end-users (which might be why they did that), but it isn't accurate.

Considering that Arduino calculates "double" using single precision, the output should deviate from the mathematically true value even earlier:

```
// 0.1.as32Bits = single precision but as an integer
// Float.from32Bits = double precision but based on the 32 bit float
Float.from32Bits(0.1.as32Bits).asStringPrec(40)
-> 0.100000001490116119384765625
```

The most reasonable conclusion I can draw is that Arduino is gussying up the output to reduce the number of "what the xxx is it printing" questions on their forum. That should not be taken as a standard against which other software libraries may be judged.

Edit: hmm, but here's Pd64.

Pd64 is doing it right, and Arduino is not.

Getting back to how Pd seems to differ from other programming languages, I'm going to hazard a guess and say that Pd hasn't separated the value of a float from its display/storage format.

The value must be stored in standard single/double precision format. You need the CPU to be able to take advantage of floating point instructions.

It's rather that Pd has to render the arguments as text, and this part isn't syncing up with the "double" compiler switch.

PS I hope my attempt at humor didn't discourage @porres from responding to @oid's question. I'm sure he would have something more meaningful to contribute.

Of patchers as programming languages... well, I got a lot of opinions about that. Another time. For now, just to say, classical algorithms are much harder to express in patchers because patchers are missing a few key features of programming languages.

hjh

-

@ddw_music This doesn't invalidate your larger point, but RE double precision floating point on Arduino, that reference needs updating. I used a MKR Zero because it supports 8-byte doubles. You can see in my code that line 12 reports that the size of a double is 8 bytes, and that line 15 generates 16 correct digits of pi.

For fun I tested C# (which agrees with SC and Pd64) and Java (which agrees with Arduino), so I'm not quite ready to join the "Arduino is wrong" team. I also can't coax Excel into the former camp, but that doesn't mean that it can't be done, because I found several examples of how to surface that base-2/base-10 mismatch, e.g. (43.1 - 43.2) + 1.

My apologies to the folks who came here to read about Pd

Edit: Both Java and Arduino display non-zero digits past the 7th digit when 0.1 is stored as a single-precision float, so I find it hard to believe that there's special-purpose code to suppress what I'm gonna refer to from now on as "SuperCollider panic"

-

@jameslo said:

@ddw_music This doesn't invalidate your larger point, but RE double precision floating point on Arduino, that reference needs updating.

Ok.

For fun I tested c# (which agrees with SC and Pd64) and Java (which agrees with Arduino) so I'm not quite ready to join the "Arduino is wrong" team.

At the end of the day, 0.1 stored as any precision binary fraction doesn't actually equal 0.1, because the denominator of 1/10 includes a prime factor (5) that is not a prime factor of the base (2). If you ask for a decimal string for this imperfect binary representation and you ask for more (decimal) digits than are actually stored, then the trailing digits are by definition fake. They're not real.

"I find it hard to believe that there's special-purpose code to suppress..." but that is exactly what it is: the float-to-string function is supplying junk data, in a way that gives you an illusion of precision. Those zeros (the ones past the precision limit) are all fake. They don't exist in the binary number, so they must be manufactured by the "-to-string" function.

The standard C library way has the advantage of reminding the user that the trailing digits are garbage.

hjh

-

@ddw_music So in the case of C#, Pd64 and SC, are the non-zero numbers past the precision limit real?

-

@jameslo

https://en.wikipedia.org/wiki/Floating-point_arithmetic#Representable_numbers,_conversion_and_rounding

"[...] Any rational with a denominator that has a prime factor other than 2 will have an infinite binary expansion. This means that numbers that appear to be short and exact when written in decimal format may need to be approximated when converted to binary floating-point. For example, the decimal number 0.1 is not representable in binary floating-point of any finite precision; the exact binary representation would have a "1100" sequence continuing endlessly:

e = −4; s = 1100110011001100110011001100110011...,

where, as previously, s is the significand and e is the exponent.

When rounded to 24 bits this becomes

e = −4; s = 110011001100110011001101,

which is actually 0.100000001490116119384765625 in decimal. [...]"

And as has been said here about SC and Arduino, and on the mailing list about Max or JSON: Pd is not the only user-friendly (scripting/patching) language/environment that has had to deal with this.

Although backward compatibility is the most precious thing, and long-term maintenance would become more complicated if Pd single and double differed in such an elementary part, my vote goes to more Pd64 development, if I had a voice. But for now, it seems like there are several easy experimental improvements already doable when self-compiling Pd64!?

%.14lg mentioned by @katjav

https://lists.puredata.info/pipermail/pd-list/2012-04/095940.html

or that

http://sourceforge.net/tracker/?func=detail&aid=2952880&group_id=55736&atid=478072

Also, we could have a look (for %.14lg) in the code of Katja's Pd-double; Pd-Spaghettis is double, too (development stopped; I never tried it).

@jancsika Is Purr-Data double now? https://forum.pdpatchrepo.info/topic/11494/purr-data-double-precision I don't know if or how they care about printing and saving.

-

@jameslo said:

@ddw_music So in the case of c#, Pd64 and SC, are the non-zero numbers past the precision limit real?

lacuna beat me to the punch, but I already wrote some stuff up from a slightly different perspective. Maybe this will fill in some gaps.

In short: Yes, those "extra digits" accurately reflect the number that's being stored.

Sticking with single precision... you get 1 sign bit, 8 exponent bits, and 23 mantissa bits. That still gives 24 bits of precision in the mantissa: in scientific notation there's one nonzero digit to the left of the point, and in binary the only nonzero digit is 1, so that leading 1 is implied and doesn't have to be encoded.

Binary long division: 1 over 1010 (1010 = decimal 8+2 = decimal 10).

```
        0.00011001100....
      __________
1010 ) 1.0000         - 16/10
         1010
         ----
          1100        - remainder 6, then add a digit = 12/10
          1010
          ----
          00100       - remainder 2, then add a digit = 4/10
           1000       - 8/10
          10000       - 16/10, repeating from here
```

= 1.10011001100110011001100 * 2^(-4)

The float encoding has a finite number of digits -- therefore it must be a rational number. Moving the binary point 23 places to the right, and compensating in the exponent:

110011001100110011001100 * 2^(-27)

= 0xCCCCCC / 2^27

= 13421772 / 134217728

Just to be really pedantic, I went ahead and coded step-by-step decimal long division in SC, and I get:

```
~longDiv.(13421772, 134217728, maxIter: 50);
-> 0.0999999940395355224609375
```

...which is below the IEEE result. That's because I just naively truncated the mantissa -- I think IEEE's algorithm (when populating the float in the first place, or when dividing) recognizes that the next bit would be 1, and so rounds up:

```
~longDiv.(13421773, 134217728, maxIter: 50);
-> 0.100000001490116119384765625
```

...matching lacuna's post exactly.

Division by large powers of two, in decimal, produces a lot of decimal digits below the point -- but it will always terminate.

hjh

-

@lacuna @ddw_music Thank you both for great explanations, and sorry to have made you write and research so much when "yes, it's the actual stored value in decimal, look it up in Wikipedia" would've shut me up. I'll take this up on the forums of those other programs, now with a much stronger understanding.

-

@jameslo I'm not a native speaker, no offense intended.

I was just hoping we would overcome those (non-)issues by making courageous decisions or finding some better workaround/patch/fix.

Some day, I am going to try the mentioned ones. I am learning, too, thank you all!!!

-

@lacuna Oh man! In no way was offense taken! I hereby grant everyone the authority to call me out when they think I'm being cranky! I try to use lots of smiley emojis because I learned a long time ago that the way I and my friends talk is probably more snarky and sarcastic than is customary in other parts of the world. To us, it's all just good-natured silliness, us trying to make each other laugh.

The discussion I've been having on the Arduino forum has clarified my thinking a lot, but it's also made clear how the gaps in my education make this a topic that needles a part of my brain. It doesn't help that I'm in between music gigs.

-

okok, wasn't sure if my "as being said" sounded arrogant... alright!

On the question of how to calculate exactly: as far as I understand (or don't), fixed-point arithmetic can be exact but has no headroom.

-

@lacuna said:

Fixed point arithmetic can be exact...

You and I are on the same wavelength. Someone on the Arduino forum gave me a link to the Arduino printFloat() routine, so I rewrote it in Q4x60 fixed point (i.e. 4 bits of integer, 60 bits of fraction). It now prints out those excess digits correctly. So the issue with their library routine is that it uses doubles to calculate what to print for doubles, which just happens to print zeroes forever past the tenths place in the case of 0.1 (but not for other values, like 1.1). It's a simplistic algorithm due to resource constraints, i.e. why waste limited resources on correctly displaying digits beyond the precision limit? Someone else on the Arduino forum linked to a 15-page academic paper on how hard the fp print problem is -- I'm only up to page 4.