snapshotting and restoring the state of a [phasor~] exactly

@jameslo Here is a very different approach (which seems to work): suspend DSP processing in the sub-patch, then restart it when you want to repeat the [phasor~] sequence. It should be accurate, but it could do with more thorough testing by graphing the output externally! phasor-store.pd

In 32-bit Pd the restored value seems accurate only to about 1/1000, but in 64-bit Pd to 1/10000 or better.

This method does not restore the master [phasor~], so you would need to switch to using the slave [phasor~] when the sequence is restored. It is also single-use.

For now I cannot work out how the same accuracy could be saved and recalled at a future start of the patch, apart from saving the [phasor~] output to an [array].

David.

snapshotting and restoring the state of a [phasor~] exactly

@lacuna said:

Don't know about phasor's right inlet, but I guess for more precision on the outside of objects we'd have to use 'Pd double precision'.

Yes, I confirmed that my test patch runs without issue under Pd64. Unfortunately, my real patch doesn't.

Also remember @ddw_music's approach, which differs from [phasor~]:

I think that "different approach" is a different issue--I'm not multiplying its output to index into a large table. I'm just using it as an oscillator/LFO. Are you suggesting that there's a way to build an oscillator with [rpole~] that is as frequency-accurate as [phasor~] but without the single vs double precision issue?

On one sample late: it is [snapshot~] that is early, not [phasor~]:

...

I would call this a bug in [snapshot~] !%§$*(

I don't understand your conclusion about the timing of [snapshot~]. Here's how I would analyze your demonstration patch: the top bang is processed in-between audio blocks. Therefore, [tabplay~] and [fexpr~] run in the following audio block. [snapshot~] is different though, because it's a bridge between control rate and audio rate--like bang, it also runs between audio blocks. Therefore, the best it can do (without having to predict the future) is to return the last sample of the previous audio block. So it's not surprising for [fexpr~] to have to reach back into the previous audio block to get the same value as [snapshot~]. If [snapshot~] were to return the first sample of the following audio block, it couldn't pass that value to control rate code until that audio block's processing is complete. Do you see my point?
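The timing argument above can be sketched as a toy model. This is a hypothetical Python stand-in, not Pd's scheduler code. It assumes 64-sample blocks, a ramp signal whose value equals its sample index, and a control-rate bang that arrives between two blocks. The helper name `bang_between_blocks` is made up for illustration.

```python
BLOCK = 64  # Pd's default block size

def bang_between_blocks(n_blocks: int, bang_after_block: int):
    """Return (value [snapshot~] reports, first sample index of audio the bang starts)."""
    computed = []            # ramp signal: each sample's value is its own index
    snapshot_value = None
    triggered_start = None
    for b in range(n_blocks):
        start = b * BLOCK
        computed.extend(range(start, start + BLOCK))  # one DSP block
        if b == bang_after_block:
            # Control messages run here, between blocks: [snapshot~] can only
            # report the newest sample that has already been computed...
            snapshot_value = computed[-1]
            # ...while [tabplay~] / [fexpr~] work started by the bang runs next block.
            triggered_start = len(computed)
    return snapshot_value, triggered_start

snap, start = bang_between_blocks(n_blocks=4, bang_after_block=1)
print(snap, start)  # 127 128
```

In this model the snapshot is always the sample immediately before the first one the triggered audio objects see. That is why an [fexpr~] has to reach back one sample to match it.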

Also, I'm not saying [phasor~] is early, I'm just saying its right inlet isn't a mechanism for restoring its state. It's just for what the documentation says it's for: to reset its phase to a given value.

snapshotting and restoring the state of a [phasor~] exactly

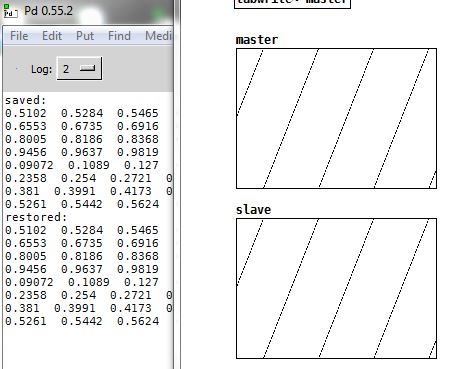

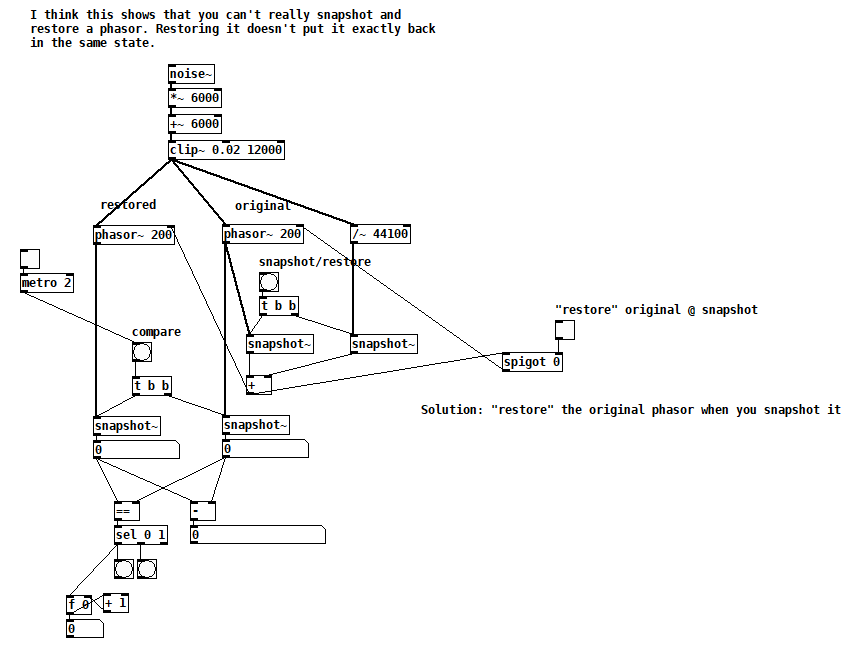

Suppose you want to capture the state of a [phasor~] in order to return to it later, i.e. you want it to output the exact same sequence of samples as it did from the moment you captured its state. As a first attempt, you might [snapshot~] the [phasor~]'s output and then send that value to the [phasor~]'s right inlet sometime later to restore it. Of course you also want to capture the state of its frequency inlet (and record it if it's changing). But there are two subtleties to consider. Firstly, the value you write to the right inlet is the next value that the [phasor~] will output, but when you [snapshot~]ed it, the next value it output was a little greater, greater by the amount of the last frequency divided by the current sample rate. So really, this greater value (call it "snap++") is the one you should be writing to the right inlet.
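The same idea can be written as a formula. Here $f$ is the frequency at the moment of the snapshot and $f_s$ is the sample rate; both symbols are introduced only for illustration. The value to write to the right inlet would then be:

$$
\mathrm{snap}_{++} = \left(\mathrm{snap} + \frac{f}{f_s}\right) - \left\lfloor \mathrm{snap} + \frac{f}{f_s} \right\rfloor
$$

The floor term wraps the sum back into [0, 1), as [phasor~] itself does. For example, at 441 Hz and a 44100 Hz sample rate the increment $f/f_s$ is 0.01.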

The second subtlety has to do with the limits of Pd's single precision floats. Internally, [phasor~] is accumulating into a double precision float, so in a way, the right inlet overwrites the state of the phasor with something that can only approximate its state. The only solution I could find is to immediately write snap++ to the right inlet of the running [phasor~], so that the current and all future runs output the exact same sequence of samples. This might not be acceptable in your application if you are reliant on [phasor~]'s double precision accumulation because you'd be disrupting it in exchange for repeatability.
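A small Python model can make both subtleties concrete. It is a hypothetical, simplified stand-in for [phasor~], not Pd's C code. It keeps the phase in double precision and rounds each output to a 32-bit float, and the right inlet receives a 32-bit float, as in 32-bit Pd. Pd's fixed-point wrapping trick is not modelled, and the 440 Hz frequency and 1000-sample run are arbitrary choices.

```python
import struct

def f32(x: float) -> float:
    """Round a Python float (a double) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

class PhasorModel:
    """Hypothetical model: double-precision phase, single-precision output."""

    def __init__(self, freq: float, sr: float = 44100.0):
        self.inc = f32(freq) / sr    # per-sample increment, kept in double
        self.phase = 0.0

    def tick(self) -> float:
        out = f32(self.phase)                        # output rounded to float32
        self.phase = (self.phase + self.inc) % 1.0   # state stays in double
        return out

    def run(self, n: int) -> list:
        return [self.tick() for _ in range(n)]

    def set_phase(self, value: float) -> None:
        """Right inlet: the message arrives as a 32-bit float."""
        self.phase = f32(value) % 1.0

FREQ, SR = 440.0, 44100.0

def advanced(n: int = 1000):
    """A fresh phasor run for n samples, plus the value [snapshot~] would report."""
    p = PhasorModel(FREQ, SR)
    return p, p.run(n)[-1]

# What the undisturbed phasor does next: the sequence we want to reproduce.
p, snap = advanced()
reference = p.run(64)
snap_pp = f32((snap + f32(FREQ) / SR) % 1.0)  # "snap++", computed at message precision

# Restoring with snap itself repeats the last value (one sample late).
naive = PhasorModel(FREQ, SR)
naive.set_phase(snap)
naive_out = naive.run(64)

# Restoring with snap++ is close, but the double-precision state was rounded away.
restored = PhasorModel(FREQ, SR)
restored.set_phase(snap_pp)
restored_out = restored.run(64)

# Workaround: also write snap++ into the running phasor immediately, so the
# live run and every later restore start from the identical state.
live, _ = advanced()
live.set_phase(snap_pp)
live_out = live.run(64)
```

Under these assumptions, `naive_out[0]` equals `snap`. `restored_out` differs from `reference` by at most float32-sized amounts, and `live_out` matches `restored_out` exactly. This is the repeatability-for-accuracy trade described above.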

Here's a test patch that demonstrates the issue:

phasor snapshot restore test.pd

Looking for advice on using phasor~ to randomize tabread4~ playback

@nicnut said:

There is a number box labeled "transpose." The first step is to put a value in there, 1 being the original pitch, .5 is an octave down, 2 is an octave up, etc.

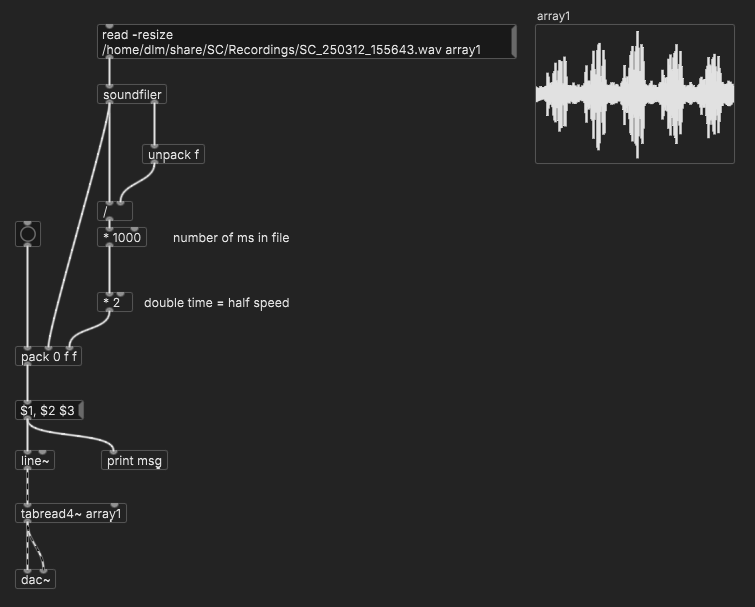

Here, you'd need to calculate the phasor frequency corresponding to normal playback speed, which is 1 / dur, where dur is the soundfile duration in seconds. Soundfile duration = soundfile size in samples / soundfile sample rate, so the phasor~ frequency can be expressed as soundfile sample rate / soundfile size in samples. Then you multiply this by a transpose factor, ok.

But I don't see you accounting for the soundfile's size or sample rate, so you have no guarantee of actually hitting normal playback speed.
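Here is a minimal sketch of that calculation, assuming you already have the soundfile's sample rate and length in frames from reading the file. The helper names are hypothetical, not Pd objects.

```python
def phasor_freq(file_sr: float, file_frames: int, transpose: float = 1.0) -> float:
    """phasor~ frequency that plays the whole file once per cycle, times a
    transpose factor. Normal speed is 1 / duration = file_sr / file_frames."""
    duration = file_frames / file_sr   # soundfile duration in seconds
    return transpose / duration

def playback_speed(freq: float, file_sr: float, file_frames: int) -> float:
    """Resulting playback speed (1 = original pitch) for a given phasor~ frequency."""
    return freq * file_frames / file_sr

f = phasor_freq(48000, 480000, transpose=2.0)   # 10 s file, an octave up
print(f, playback_speed(0.5 * f, 48000, 480000))
```

Speed depends only on frequency times duration. Halving the frequency of a transposed-up phasor brings the speed, and therefore the pitch, back to 1, as described above.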

After that you can change the frequency of the phasor in the other number box labeled "change phasor frequency without changing pitch."

If you change the phasor frequency here, you will also change the transposition.

This way, I can make the phasor frequency, say .5 and the transposition 2,

Making the eventual playback speed 1 (assuming it was 1 to begin with), undoing the transposition.

which I don't think I can do using line~ with tabread4~.

The bad news is, you can't do this with phasor either.

hjh

Looking for advice on using phasor~ to randomize tabread4~ playback

@nicnut said:

Yes line~ would be better, but one thing I am doing is also playing the file for longer periods of time by lowering the phasor~ frequency, and doing some math to transpose the pitch of the playback, which I would like to keep. With line~ I don't know how to separate the pitch and the playback speed.

If I understand you right, separating pitch and playback speed would require pitch shifting...?

With phasor~, you can play the audio faster or slower, and the pitch will be higher or lower accordingly.

With line~, you can play the audio faster or slower, with the same result.

To take a concrete example -- if you want to play 0-10 seconds in the audio file, you'd use a phasor~ frequency of 0.1 and map the phasor's 0-1 range onto 0 .. (samplerate*10) samples. If instead, the phasor~ frequency were 0.05, then it would play 10 seconds of audio within 20 seconds = half speed, one octave down.

With line~, if you send it messages "0, $1 10000" where $1 = samplerate*10, then it would play the same 10 seconds' worth of audio, in 10 seconds.

To change the rate, you'd only need to change the amount of time: 10000 / 0.5 (half speed) = 20000. 10 seconds of audio, over 20 seconds -- exactly the same as the phasor~ 0.05 result.

frequency = 1 / time, time = 1 / frequency. Whatever you multiply phasor~ frequency by, just divide line~ time by the same amount.
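The reciprocal relationship can be checked numerically. This is a small sketch using hypothetical helper names, covering a 10-second segment at several playback rates.

```python
def phasor_freq_for(seconds: float, rate: float = 1.0) -> float:
    """phasor~ frequency that sweeps `seconds` of audio at playback `rate`."""
    return rate / seconds

def line_time_ms_for(seconds: float, rate: float = 1.0) -> float:
    """line~ ramp time (ms) that covers the same audio at the same rate."""
    return seconds * 1000.0 / rate

for rate in (1.0, 0.5, 2.0):
    # multiplying the phasor~ frequency by `rate` divides the line~ time by `rate`
    print(rate, phasor_freq_for(10.0, rate), line_time_ms_for(10.0, rate))
```

At half speed this gives 0.05 Hz for [phasor~] and 20000 ms for [line~], the same figures as the worked example above.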

(line~ doesn't support changing rate in the middle though. There's another possible trick for that but perhaps not simpler than phasor~.)

hjh

Buffer delay loop

@DesignDefault Welcome to the Forum...!

Sorry, this will be a lot for a newbie.

You will see in the video @ricky has linked to that the [phasor~] object controls the speed at which the array is being read.

So you will need to increase the number that it receives at its left inlet in order to increase the speed.

It is an audio rate inlet, so you will need [sig~] to convert a control rate 1 to a signal rate 1.

1 will play at normal speed, 2 at double that speed etc.

Unfortunately, you will need to "catch" a moment in the [phasor~] loop using [edge~], and then increase the value going to [sig~] by whatever amount you want each time [edge~] outputs a bang.

Catching the exact moment that it loops can be awkward, and you might well need to add or subtract a value from the output of [phasor~] before sending it into [edge~].
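As an offline sketch of the logic, here is a phasor that speeds up by a fixed step each time it wraps. The downward jump in the output stands in for the [edge~] bang. Assumptions: wraps are detected at sample accuracy, whereas [edge~] reports at control rate, so in Pd the increase would land up to a block later. The toy sample rate of 64 keeps the arithmetic exact, and `accelerating_loop` is a hypothetical name.

```python
def accelerating_loop(base_freq: float, step: float, sr: float, n: int):
    """Run a phasor whose frequency rises by `step` each time it wraps.
    Returns the sample indices of the wraps and the final frequency."""
    phase, freq, prev = 0.0, base_freq, 0.0
    wraps = []
    for i in range(n):
        out = phase
        if out < prev:          # downward jump: the loop has wrapped
            freq += step        # what the [edge~] bang would do to [sig~]
            wraps.append(i)
        prev = out
        phase = (phase + freq / sr) % 1.0
    return wraps, freq

wraps, final_freq = accelerating_loop(base_freq=1.0, step=1.0, sr=64.0, n=100)
print(wraps, final_freq)  # [64, 96] 3.0
```

The first cycle takes 64 samples and the second only 32, because the frequency doubled at the wrap.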

A more reliable method would be to use a [metro] to increase the [sig~] with the metro rate set by the value from [timer].

Also, before playback, extra data points should be added to the array. This only needs to be done once, after the array has been filled.

That will stop clicks as [phasor~] wraps back to the beginning of the array.

https://forum.pdpatchrepo.info/topic/14301/add-delete-guard-points-of-an-array-for-4-point-interpolation-of-tabread4-ect

That will add 3 data points to the array, allowing [tabread4~] to correctly interpolate the samples at wrap around.

For that to work properly, you should then add 3 ([+ 3]) to the total number of samples after the [* 44.1].

Also, if you are running Pd at 48000Hz the [* 44.1] should be changed to [* 48] for the initial playback speed to be correct.
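A sketch of the size arithmetic and one possible guard-point layout follows. The layout (a copy of the last sample before the start, and the first two samples after the end) is an assumption about what 4-point interpolation needs at the wrap. The abstraction in the linked thread may do it differently, and both helper names are hypothetical.

```python
def loop_frames(ms: float, sr: float = 44100.0, guard: int = 3) -> int:
    """Array size for a loop of `ms` milliseconds: ms * sr / 1000
    (the [* 44.1] step at 44.1 kHz) plus the guard points."""
    return int(round(ms * sr / 1000.0)) + guard

def add_guard_points(x: list) -> list:
    """Assumed wrap-around layout: last sample in front, first two at the end."""
    return [x[-1]] + list(x) + [x[0], x[1]]

padded = add_guard_points([10, 20, 30, 40])
print(loop_frames(1000), loop_frames(1000, sr=48000.0), padded)
# 44103 48003 [40, 10, 20, 30, 40, 10, 20]
```

With this layout the original samples sit at indices 1..N of the padded array. The phasor's 0..1 range would then be mapped onto 1..N+1.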

David.

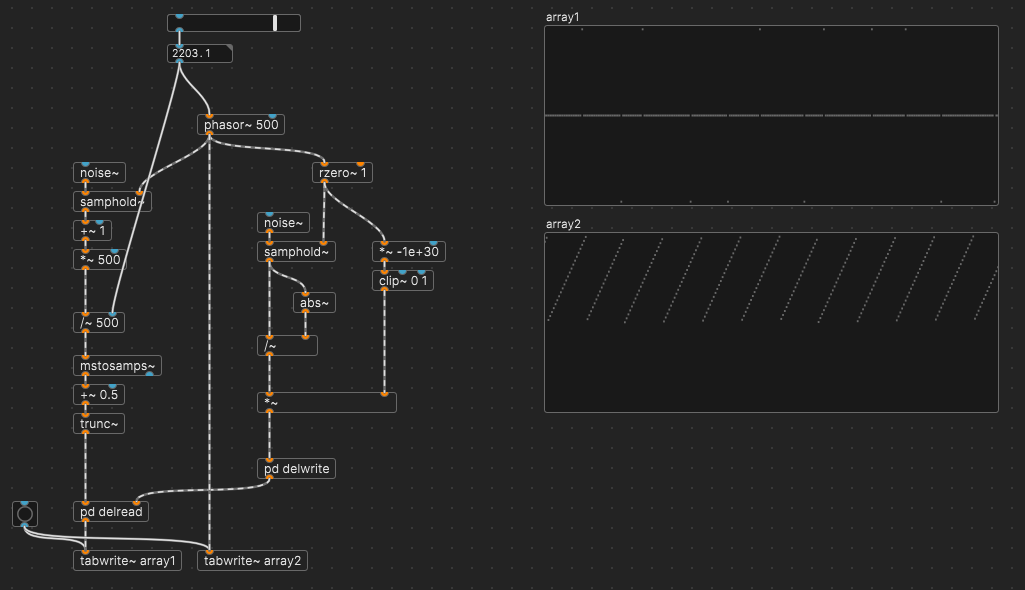

looking for velvet noise generator

@porres said:

to never miss a period, but I also think this is a job for a C code.

To atone for my earlier SC jibe, here's a delay-based approach. (Also, indeed you're right -- I hadn't read the thread carefully enough. Dust / Dust2 don't implement exactly "one pulse per regular time interval, randomly placed within each interval.")

Uses else's del~ because delread~ requires a message-based delay time, which can't update fast enough, and delread4~ interpolates, which smears the impulses. With del~, I could at least round the delay time to an integer number of samples, and AFAICS it then doesn't interpolate.

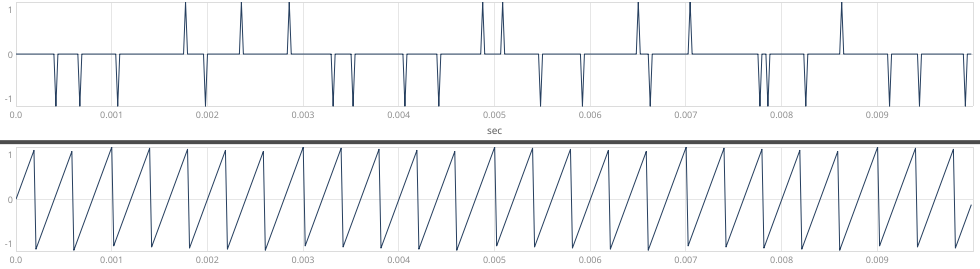

It looks to me in the graphs like there is exactly one nonzero sample per phasor~ cycle.
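For comparison with the graphs, here is a minimal offline sketch of the same pattern in Python: one ±1 impulse per period, at a random position inside each period. Assumptions: the period is rounded down to a whole number of samples, and `velvet_noise` is a hypothetical helper, not an object in Pd or else.

```python
import random

def velvet_noise(density: float, sr: float, n: int, seed: int = 0):
    """One +/-1 impulse per period, at a random sample inside each period."""
    rng = random.Random(seed)
    period = int(sr // density)          # samples per period (rounded down)
    out = [0.0] * n
    for start in range(0, n - period + 1, period):
        offset = rng.randrange(period)   # 0 .. period-1, like delaying by up to 1/density minus one sample
        out[start + offset] = rng.choice((-1.0, 1.0))
    return out, period

noise, period = velvet_noise(density=2450, sr=44100, n=900)
```

Each 18-sample period of `noise` then contains exactly one nonzero sample, which is the property the graphs show.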

hjh

PS FWIW -- one nice convenience in SC is that the non-interpolating DelayN doesn't require the delay time to be rounded:

    (
    a = { |density = 2500|
        // var pulse = Impulse.ar(density);
        // for similarity to Pd graphs, I'll phasor it
        // but the Impulse is sufficient for the subsequent logic
        var phasor = LFSaw.ar(density);
        var pulse = HPZ1.ar(phasor) < 0;
        var delayTime = TRand.ar(0, density.reciprocal - SampleDur.ir, pulse);
        var rand = TRand.ar(-1, 1, pulse).sign;
        [DelayN.ar(rand * pulse, 0.2, delayTime), phasor]
    }.plot;
    )

Sync Audio with OSC messages

@earlyjp There might well be a better way than the two options that I can think of straight away.

You could play the audio file from disk, and send a bang into [delay] objects as you start playback, with the delays set to trigger the messages at the correct time.

I am not sure how long a [delay] you can create, though, because of the precision limit on the numbers Pd can store. You might need to use [timer] banged by a [metro].

Or as you say you could load the file into RAM using [soundfiler] and play the track in the array using [phasor~] and trigger the messages as the output of [phasor~] reaches certain values.

With [phasor~], though, the sample will loop.

To play just once you could use [line~] or [vline~]

You would need to catch the values from [phasor~], [line~] or [vline~] using [snapshot~].

[<=] followed by [once] would be a good way to catch them, as catching the exact value would probably be unreliable. once.pd

The same will be true if you use [timer] as the [metro] will not make [timer] output every value.
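The [<=] plus [once] idea amounts to firing each cue the first time the polled position reaches or passes it. Here is a sketch with a hypothetical `fire_cues` helper. The positions stand for whatever [snapshot~] or [timer] happens to report on each [metro] tick.

```python
def fire_cues(positions, cues):
    """Fire each cue once, as soon as a polled position reaches or passes it.
    `cues` is a list of (position, message) pairs sorted by position."""
    fired = []
    next_cue = 0
    for pos in positions:
        # like [<=] gating a [once]: every cue at or before `pos` fires, then never again
        while next_cue < len(cues) and cues[next_cue][0] <= pos:
            fired.append((pos, cues[next_cue][1]))
            next_cue += 1
    return fired

polled = [0, 1024, 2048, 3072]  # sample positions seen on each metro tick
cues = [(1500, "a"), (2000, "b"), (3072, "c")]
print(fire_cues(polled, cues))  # [(2048, 'a'), (2048, 'b'), (3072, 'c')]
```

Position 1500 is never polled exactly, but cue "a" still fires, one tick late. That lateness is the price of polling at control rate.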

Again, number precision for catching the value could be a problem with long audio files.
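To put numbers on that, the sketch below measures the gap between neighbouring 32-bit floats at sample positions one minute, ten minutes and one hour into a 44.1 kHz file. It assumes positions are handled as single-precision floats, as in 32-bit Pd. The helper names are hypothetical.

```python
import struct

def f32(x: float) -> float:
    """Round a double to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def f32_spacing(x: float) -> float:
    """Gap between the 32-bit float nearest to x (x > 0) and the next one up."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits + 1))[0] - f32(x)

SR = 44100
for minutes in (1, 10, 60):
    pos = float(SR * 60 * minutes)  # sample index this far into the file
    print(f"{minutes:>3} min: neighbouring float32 positions are {f32_spacing(pos)} samples apart")
```

One hour in, a float position can only land on every 16th sample, so exact-value catching becomes impossible well before that.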

Help for the second method: https://forum.pdpatchrepo.info/topic/9151/for-phasor-and-vline-based-samplers-how-can-we-be-sure-all-samples-are-being-played

The [soundfiler] method will use much more cpu if that is a problem.

If there is a playback object that outputs its index, then use that, but I have not seen one.

David.

simple polyphonic synth - clone the central phasor or not?

@manuels That's clever, thanks! I especially like how you get the slope of the input phasor (compensating for that downward jump every cycle) and how you construct the output phasor. But I think there are two issues with it. Firstly, a sync will reset the phase of the output phasor at an arbitrary point unless the output phasor frequency is an exact multiple of the input frequency. Secondly, without sync, I think the output phasor may drift WRT the input phasor due to floating point roundoff error. Agree?

Backing out to look at the big picture again, i.e. Brendan's question, this means that it couldn't be used to make either a "hardsync" synthesizer (disclaimer: I don't really know what that is) or a "phasor~-synchronized system", assuming that both require a precise phase relationship between the input and output phasors and that the output phasor doesn't contain discontinuities. What do you think?

90-second limit on audio buffers?

@seb-harmonik.ar said:

internally phasor~ uses something like 32-bit fixed-point. So the comparative error is really introduced when multiplying by the buffer size I guess.

Must be.

Also looking at what I think is the Phasor code, it looks to me like it outputs 64-bit doubles.

SC UGens are free to use doubles internally, but the interconnection buffers are single precision, so we do run into trouble at that 24 bit mantissa limit.

I think the difference is that the output of Phasor is already scaled and output as a 64-bit value before being converted to 32-bit...

I think it's a bit more than that. When the phase increment is 1 buffer frame per output sample, then there is no inaccuracy at all, because integer values are never repeating fractions, and up to 2^24, are not subject to truncation (2^24 - 1 is ok but 2^24 + 1 is not). It avoids the problem of scaling inaccuracy by not scaling at all -- which may be what you meant, just being clear about it. rpole's similar results are because my example is integrating a stream of 1's, so everything is integer valued.
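To make the scaling point concrete, here is a small comparison for a 90-second buffer at 44.1 kHz (3,969,000 frames). It assumes the phasor's 0..1 phase is held as a 32-bit float before being multiplied by the buffer size. This models the Pd situation, not SC's Phasor, which outputs the scaled value directly.

```python
import struct

def f32(x: float) -> float:
    """Round a double to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

N = 90 * 44100  # frames in a 90-second buffer at 44.1 kHz

def scaled_index(k: int) -> float:
    """Frame index from a 0..1 phase stored as float32, then scaled by N."""
    return f32(k / N) * N

def counter_index(k: int) -> float:
    """Frame index from an accumulator adding 1.0 per sample: after k steps it
    holds k, which float32 stores exactly while k < 2**24."""
    return f32(float(k))

tail = range(N - 1000, N)  # the last 1000 frames, where the phase is close to 1
worst_scaled = max(abs(scaled_index(k) - k) for k in tail)
worst_counter = max(abs(counter_index(k) - k) for k in tail)
print(worst_scaled, worst_counter)  # scaled error is a fraction of a frame; counter error is 0
```

Near the end of the buffer, the rounded phase is off by up to about a tenth of a frame once scaled. The integer counter lands on every frame exactly.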

It struck me as interesting how the correct observation "buffer playback by scaling phasor~ runs into trouble quickly" slips into a generalized caution. Agreed that the phasor~ way isn't sufficient for many use cases; perhaps a new object would be useful, or the accumulator technique could become a more prominent part of Pd culture.

hjh