Le Grand Incendie de Londres

Hi guys, here is my new solo project: an interaction between synthesized sounds (Karplus-Strong) and piezo feedback. The feedback sounds are actually produced via FFT cross-synthesis between the feedback itself and the synthesized sounds. The idea is to give the synthesized sounds an "organic" quality. I'll be touring with this project, so contact me if you're interested!
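
For readers who have not met Karplus-Strong before, here is a minimal Python sketch of the basic plucked-string algorithm. It is not the patch used in the project (that one is not shown in this post); the function name `karplus_strong`, the damping value and the sample rate are assumptions chosen only for illustration.

```python
import random
from collections import deque


def karplus_strong(frequency_hz, duration_s, sample_rate=44100, damping=0.996, seed=0):
    """Return a list of samples for one plucked-string note (illustrative sketch)."""
    rng = random.Random(seed)
    # Delay-line length in samples sets the pitch of the string.
    period = max(2, int(sample_rate / frequency_hz))
    # Fill the delay line with a noise burst: this is the "pluck".
    delay_line = deque(rng.uniform(-1.0, 1.0) for _ in range(period))
    samples = []
    for _ in range(int(duration_s * sample_rate)):
        first = delay_line.popleft()
        # Average two neighbouring samples (a simple low-pass) and damp slightly,
        # then feed the result back into the end of the delay line.
        new_sample = damping * 0.5 * (first + delay_line[0])
        delay_line.append(new_sample)
        samples.append(first)
    return samples


note = karplus_strong(220.0, 0.5)
```

The averaging step removes high frequencies a little more on every pass through the delay line, which is why the tone starts bright and decays like a plucked string.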

Lissa Executable / ofxOfelia compile error (Solved)

Here is the Executable: https://we.tl/guYbiuftrX

Lissa.exe is the executable, and the Pd patch is in the path /data/pd. Pressing the left mouse button on the ofelia window changes the pattern; the right button changes the texture and the sound length. + and - change the number of edges. Audio is also working (but no MIDI). For sound synthesis I borrowed and modified this synth: https://patchstorage.com/additive-synthesis/

I like the sound, but I am thinking about changing it or making it slower without slowing down the animation.

@cuinjune thanks for ofxOfelia, nice work. I think now I understand the concept.

have fun

Spectral convolution

Hi everyone,

Here is a patch I developed for a specific project, but I think it's quite interesting in itself to get strange soundscapes and so I thought I'd share it.

The idea developed from this spectral delay patch, with the difference that the selected frequency bands of the input signal, rather than being delayed, are sent to two convolution reverbs (which can be set to work in series or parallel). With the right kind of impulse responses, and with the ability to select which part of the spectrum is being convolved, the results can be very interesting, though hard to predict most of the time.

The patch can save presets, which include all settings, the frequency band tables and the impulse responses.

spectral.convolution1.0.zip

(requires zexy and bsaylor externals.)

Here is a recording I made while working on it (with the addition of a pitched delay on the output)

16 parameters for 1 voice, continued...

@whale-av hey, so I made this patch. It doesn't have abstractions (I haven't practiced them yet), but would this be considered additive synthesis? It uses 5 sine waves at different frequencies that start at the same volume but end differently... additive synthesis.zip

For additive synthesis, each sine must be at a different frequency and volume, right? So I could be making glissandi in pitch and volume and it would still be considered additive synthesis?
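
To make the idea concrete, here is a small Python sketch of what the post describes: five sine partials at different frequencies that start at the same volume and end at different volumes, summed into one signal. It is not a translation of the attached patch; the function name `additive_voice`, the frequencies and the amplitude values are assumptions picked for illustration.

```python
import math


def additive_voice(partials, duration_s, sample_rate=44100):
    """Sum sine partials; each partial is (freq_hz, start_amp, end_amp)."""
    n = int(duration_s * sample_rate)
    out = []
    for i in range(n):
        t = i / sample_rate
        # Linear ramp from 0 to 1 over the note, used to fade each amplitude.
        ramp = i / (n - 1) if n > 1 else 0.0
        sample = 0.0
        for freq, start_amp, end_amp in partials:
            amp = start_amp + (end_amp - start_amp) * ramp
            sample += amp * math.sin(2 * math.pi * freq * t)
        out.append(sample)
    return out


# Five partials: same starting volume, different ending volumes (assumed values).
partials = [
    (220.0, 0.2, 0.20),
    (440.0, 0.2, 0.10),
    (660.0, 0.2, 0.05),
    (880.0, 0.2, 0.02),
    (1100.0, 0.2, 0.00),
]
signal = additive_voice(partials, 0.1)
```

Summing sines is what makes it additive; whether the frequencies and volumes stay fixed or glide over time only changes how each partial is controlled.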

The Harmonizer: Communal Synthesizer via Wifi-LAN and Mobmuplat

The Harmonizer

The Harmonizer is a single or multi-player mini-moog synthesizer played over a shared LAN.

(credits: The original "minimoog" patch is used by permission from Jaime E. Oliver La Rosa at the New York University Music Department and NYU Waverly Labs (Spring 2014) and can be found at: http://nyu-waverlylabs.org/wp-content/uploads/2014/01/minimoog.zip)

One or more players can play the instrument with each player contributing to one or more copies of the synthesizer (via the app installed on each handheld) depending on whether they opt to play "player 1" or "player 2".

By default, all users are "player 1", so any changes made in their app (e.g. changing a parameter, playing a note, etc.) go to all other players playing "player 1".

If a user is "player 2", then their notes, controls, mod-wheel etc. are all still routed to the network, i.e. to all "player 1"'s, but they hear no sound on their own machine.

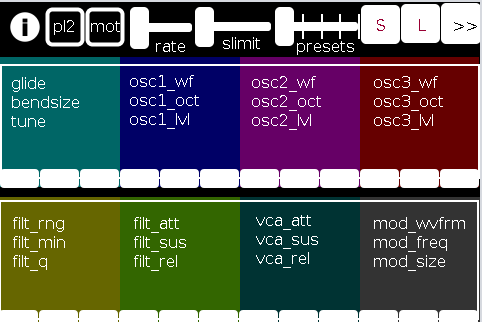

There are 2 pages in The Harmonizer. (See screenshots below.)

PAGE 1:

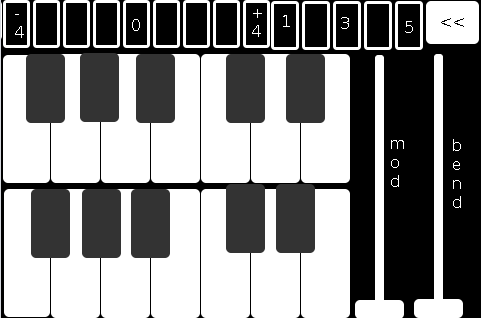

PAGE 2:

The first page of the app contains all controls operating on a (more or less) "meta"-level for the player: in the following order (reading top-left to bottom-right):

pl2: if selected (toggled) the user is choosing to play "player 2"

mot(ion): triggers system motion controls of the osc1,2&3 levels (volume) based on the accelerometer inside the smartphone (i.e as you twist and turn the handheld in your hand the 3 oscs' volumes change)

rate: how frequently should the handheld update its accelerometer data

slimit: by how much should the app slow down sending the (continuous) accelerometer data over the network

presets: from 1 to 5 preset "save-slots" to record and reload the Grid 1 and Grid 2 settings that are currently active

S: save the current Grid1 and Grid2 selections to the current "save slot"

L: load the currently selected preset into both Grids

">>": go to the next page (page 2 has the reverse, a "<<" button)

Grid 1: the settings, in 4 banks of 3 parameters each, which are labeled top-down equating to left-right

Grid 2: the same as Grid 1, but with a different set of parameters

The second page comprises:

the 2-octave keyboard (lower notes on top),

a 9-button octave grid (which can go either up or down 4 octaves),

a quick-preset grid which loads one of the currently saved 5 presets

the "<<" button mentioned above, and

both a mod and pitch-bend wheel (as labeled).

SETUP:

All players install Mobmuplat;

Receive The Harmonizer (in the form of a .zip file, either via download or through email, etc.)

When on your smartphone, click on the zip file, for example, as an attachment in an email.

Both Android and iPhone will recognize the zip file (unless you have previously set a default behavior for .zip files) and ask if you would like to open it in Mobmuplat. Do so.

When you open Mobmuplat, you will be presented with a list of names. On Android, click the 3 dots in the top right of the window and, in the settings window, click "Network". On an iPhone, click "Network" just below the name list;

On the Network tab, click "LANDINI".

Switch "LANDINI" from "off" to "on".

(this will allow you to send your control data over your local area network with anyone else who is on that same LAN).

From that window, click "Documents".

You will be presented again, with the previous list of names.

Scroll down to "TheHarmonizer" and click on it.

The app will open to Page 1 as described and shown in the image above.

Enjoy with or without Friends, Loved Ones, or just folks who want to know what you mean "is possible" with Pure Data!

Theories of Thought on the Matter

My opinion is:

While competition could begin over "who controls" the song, in not too great a deal of time, players will see first hand, that it is better (at least in this case) to work together than against one another.

If any form of competition emerges in the game, for instance loading a preset when another player was working on a tune or musical idea, the overall playability and gratitude-level will wane.

However, on the other hand, if players see the many, many ways one can constructively collaborate I think the rewards will be far more measurable than the costs, for instance, one player plays notes while the other player plays the controls.

p.s. my thinking is:

since you can play solo: it will be fun to create cool presets when alone, then throw them into the mix once you start to play together. (Has sort of a card-collecting feel.)

Afterward:

This was just too easy Not to do.

It conjoins many aspects of Pure Data that I have been working on lately, both logistical and procedural, into a single whole. (Afterward: I did this app a long time ago, but for some reason am only now thinking to share it.)

I think it does both quite well, as well as offering the user an opportunity to consider or perhaps even wonder: "What is 'possible'?"

Always share. Life is just too damn short not to.

Love only.

-svanya

early questions on abstractions and polyphony

Hallo team

I am currently dissecting a very nice spectral delay external --

https://guitarextended.wordpress.com/2012/02/07/spectral-delay-effect-for-guitar-with-pure-data/ -- and I would like to know if any PD veterans here can point me to a transparent and comprehensive tutorial on using abstractions in PD, and parsing Array data in a polyphonic context.

I am asking because I am a little unclear about how/when an abstraction becomes 'polyphonic' in PD. I can pass single data streams to a lower level abstraction, yet I cannot see how PD 'knows' if a given abstraction is polyphonic, requiring a $0 prefix, parsing etc.

(Coming from Max, I expect to declare voice-numbers, voice-targets, pack/unpack etc)

Pointers very much welcomed friends (PD-vanilla, with cyclone and zexy libraries)

Brendan

ps, I have been here -- http://www.pd-tutorial.com/english/ch03s08.html -- as part of my studies, but it lacks a transparent discussion on polyphony, voice-targeting and Array data parsing

Ewolverine 4 U

New version 6.2

- added TARGET DRIVE

(you need [timbreID] !)

- changed designations of control assemblies to fit better with their functionality

- improved GUI functions for mouse control

- added display that shows the distance of evolved sounds to the target sample

Now you can use Ewolverine to re-synthesize a sample with any synth you own. Of course not every synth is able to convincingly synthesize any sound, but you can now get as close as possible with it. Just loop back the audio of your DAW into PureData using a virtual audio cable and send MIDI from PureData to your DAW using a virtual MIDI cable and let Ewolverine do the rest!

Re-synthesizing samples with Ewolverine can take a VERY long time and I can't guarantee that it works well, but as it is a free patch, see it as a bonus!

EWOLVERINE v.6.2 by Henry Dalcke.pd

BEST POSSIBLE BREEDING FUNCTIONALITY NOW ENSURED!

Plans:

- storage for user SPLICER pattern

- 4 more random sounds per set (A/B) to select from (to save time)

- discontinuous MIDI messaging interrupted by assignment switching CC events to enable breeding especially for FMheaven VST

- possibility to interpolate between new random population sounds to smoothly re-direct the modwheel-morphing path while morphing

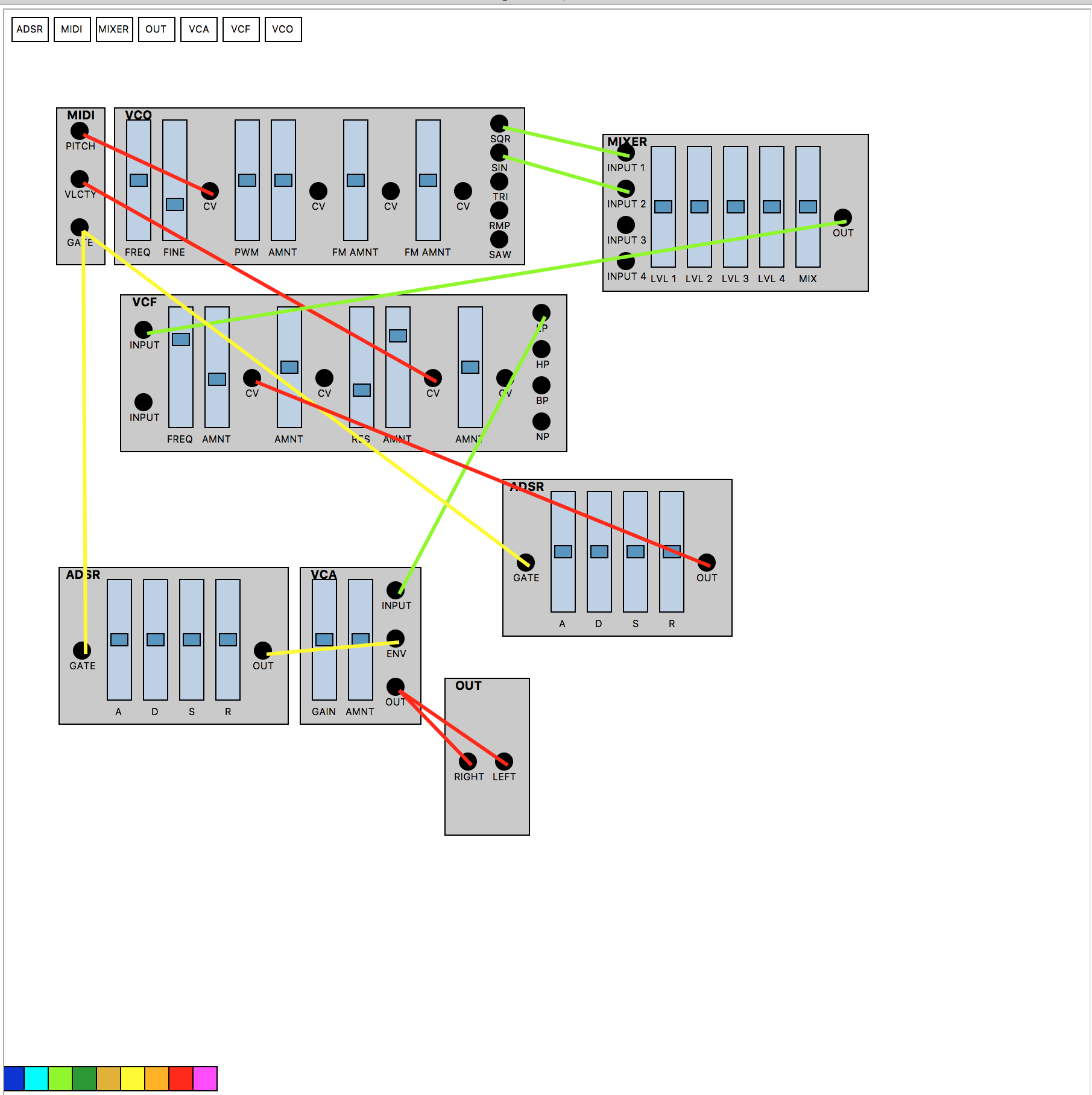

PdModular

So I'm working on a project right now and I'm interested in getting some input on it, as I'd like to open source it when I finish. It's a similar idea to the Nord Modular but implemented in PD, Python and C. I will try to keep this up to date as the project continues, and if there is interest I would be happy to upload some of the code to git.

Parts:

Python GUI for building synthesizer utilizing pyata and tkinter

PD objects for each module(VCO, VCA, ENV, MIX, VCF, MIDI, OUTPUT)

Raspberry Pi cluster (1 conductor and 4 voices) connected via an ethernet switch, with the voices run through a passive mixer

C program for converting midi to OSC messages and sending them to each voice for polyphony as well as uploading your patch of choice

The main idea is that you build a synthesizer patch in the GUI, which runs on the conductor, and it is converted to a PD patch. This patch is then chosen on the conductor Raspberry Pi when in play mode and is distributed to the 4 voice Raspberry Pis. All Pis are connected through a switch. The signal from each voice is sent through a passive 4-channel stereo mixer, which feeds a speaker or headphones. A MIDI controller is plugged into the conductor via USB, and its knobs/sliders can be mapped to slider objects within the patch.
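
To illustrate the polyphony part (one conductor handing notes to 4 voices), here is a rough Python sketch of a voice allocator. The project's actual converter is a C program that is not shown here, so everything below is an assumption: the class name `VoiceAllocator`, the `/voice/<n>/note` address pattern and the oldest-note stealing rule are made up for illustration, and nothing is sent over a network.

```python
class VoiceAllocator:
    """Assign incoming MIDI notes to a fixed number of voices (hypothetical sketch)."""

    def __init__(self, voice_count=4):
        self.voice_count = voice_count
        self.active = {}  # note number -> voice index, in order of arrival

    def note_on(self, note, velocity):
        if note in self.active:
            voice = self.active[note]  # retrigger on the same voice
        else:
            busy = set(self.active.values())
            free = [v for v in range(self.voice_count) if v not in busy]
            if free:
                voice = free[0]
            else:
                # All voices busy: steal the one playing the oldest note.
                oldest_note = next(iter(self.active))  # dicts keep insertion order
                voice = self.active.pop(oldest_note)
            self.active[note] = voice
        # An OSC-style (address, note, velocity) tuple; the address is an assumption.
        return (f"/voice/{voice}/note", note, velocity)

    def note_off(self, note):
        voice = self.active.pop(note, None)
        if voice is None:
            return None  # note was never started or was already stolen
        return (f"/voice/{voice}/note", note, 0)


allocator = VoiceAllocator(voice_count=4)
messages = [allocator.note_on(note, 100) for note in (60, 64, 67, 71)]
```

With four voices, a fifth simultaneous note has to take over a voice that is already sounding; stealing the oldest note is one common choice, not necessarily what the project does.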

Adding Modules:

A module consists of a PD object and a Python array that represents the inputs and outputs and generates the module object within the application. This will allow for the simple addition of new modules, as long as they adhere to the input and output specifications. I hope to add substantially more modules once I have the minimal set needed to build a basic synthesizer.
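
As a sketch of how such a module description could drive patch generation, the Python below keeps a small table of inputs and outputs per module and writes the text of a Pd patch from it. The project's real data layout is not shown in this post, so `MODULE_SPECS`, `build_patch`, the port names and the lowercase abstraction names (`vco`, `vca`, `output`) are all assumptions; only the `#N canvas`, `#X obj` and `#X connect` line shapes follow the Pd file format.

```python
# Hypothetical module table: each module lists its named inputs and outputs.
MODULE_SPECS = {
    "VCO": {"inputs": ["pitch"], "outputs": ["audio"]},
    "VCA": {"inputs": ["audio", "gain"], "outputs": ["audio"]},
    "OUTPUT": {"inputs": ["audio"], "outputs": []},
}


def build_patch(modules, connections):
    """Return Pd patch text for a list of module names and (src, outlet, dst, inlet) tuples."""
    lines = ["#N canvas 0 50 450 300 12;"]
    for index, name in enumerate(modules):
        if name not in MODULE_SPECS:
            raise KeyError(f"unknown module: {name}")
        # One object box per module, stacked vertically; abstraction name is assumed.
        lines.append(f"#X obj 30 {30 + 40 * index} {name.lower()};")
    for src, outlet, dst, inlet in connections:
        src_spec = MODULE_SPECS[modules[src]]
        dst_spec = MODULE_SPECS[modules[dst]]
        # Reject connections that do not match the declared inputs and outputs.
        if outlet >= len(src_spec["outputs"]) or inlet >= len(dst_spec["inputs"]):
            raise ValueError(f"invalid connection: {src}:{outlet} -> {dst}:{inlet}")
        lines.append(f"#X connect {src} {outlet} {dst} {inlet};")
    return "\n".join(lines)


patch_text = build_patch(["VCO", "VCA", "OUTPUT"], [(0, 0, 1, 0), (1, 0, 2, 0)])
```

Checking every connection against the declared ports is what would let new modules be added safely: a module that declares its inputs and outputs correctly cannot be wired to a port it does not have.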

Would people be interested in something like this at all?

help synthesis

@alexandros

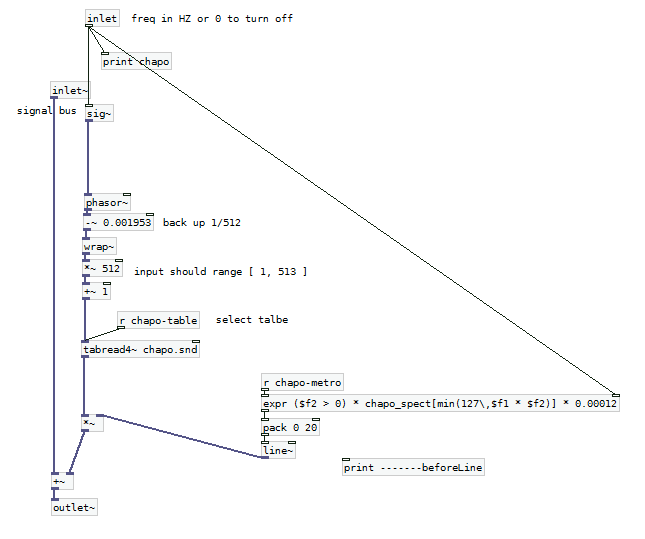

[expr] does read the 'chapo_spect' table, but always at index 127,

we agree (I said it does not read the table because it only reads one value, whereas the table has 128 values... so there is a mistake somewhere).

edit: the synthesis is supposed to be one voice of "28 voices of additive synthesis with spectral envelope applied to each partial"...
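
To show why a lookup that is stuck at index 127 matters, here is a small Python sketch of a spectral envelope applied per partial. It is not the algorithm from the Jupiter patch, which I have not decoded; the function name `partial_amplitudes`, the linear frequency-to-index mapping and the example table are assumptions for illustration only.

```python
def partial_amplitudes(fundamental_hz, partial_count, envelope_table, max_freq_hz=22050.0):
    """Look up one amplitude per partial from a spectral envelope table (sketch)."""
    last = len(envelope_table) - 1
    amps = []
    for k in range(1, partial_count + 1):
        freq = k * fundamental_hz
        # Assumed mapping: 0 Hz -> index 0, max_freq_hz -> last index.
        index = int(freq / max_freq_hz * last)
        # Clamp out-of-range indices to the ends of the table.
        index = max(0, min(last, index))
        amps.append(envelope_table[index])
    return amps


# A 128-point table that falls linearly from 1.0 to 0.0 (example data only).
table = [1.0 - i / 127 for i in range(128)]
amps = partial_amplitudes(110.0, 8, table)

# If the index computation always lands on the last entry, every partial
# gets the same amplitude and the envelope has no shaping effect.
stuck = partial_amplitudes(30000.0, 8, table)
```

If every read ends up clamped to the last index, one likely cause is that the value driving the index is far larger than the table size, for example a frequency in Hz used directly as an index.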

@whale-av : strange !

I will ask on the mailing list for information on the intended synthesis

thanks again

help synthesis

Hello,

let me try again (I removed all the details).

I have some problems with the synthesis named "chapo" inside the Jupiter patch.

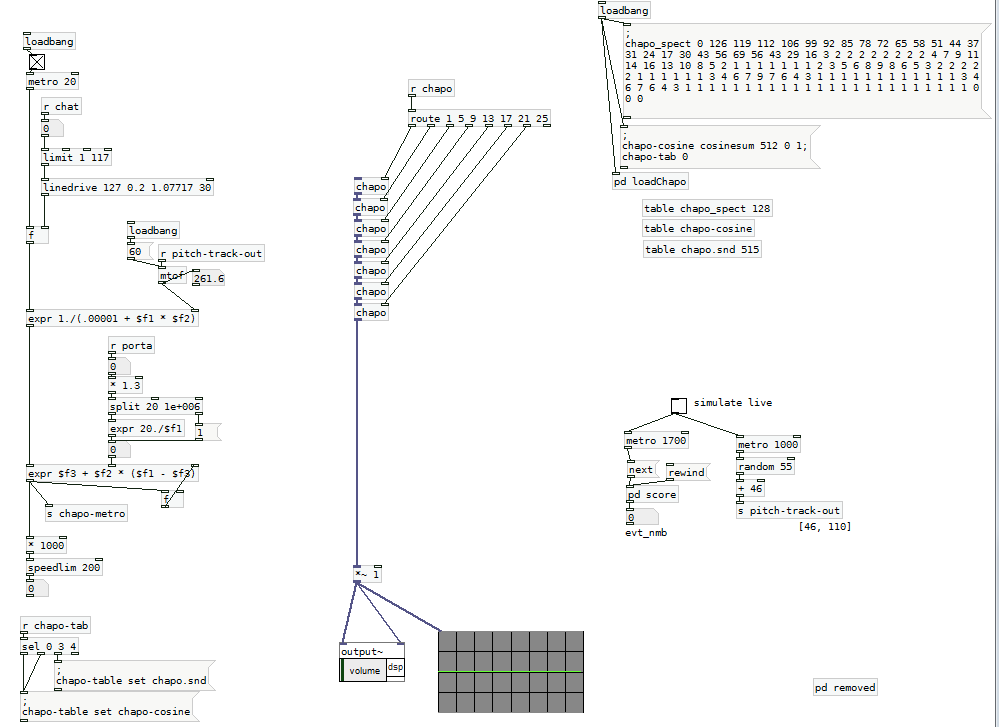

- capture of the main window ( a copy)

- capture of the abstraction [chapo]

I don't get the algorithm on the left side of the main window,

nor the synthesis. I see additive synthesis, but there is this table named 'chapo_spect'...

I copied this chapo part with the dependencies here:

http://www2.univ-mlv.fr/~larrieu/jupiter/share-chapo.zip

(a text file describes some problems; I have no sound)

the original Jupiter patch is here (PDRP project): http://musicweb.ucsd.edu/~mpuckette/pdrp/latest/

edit: it's possible that an error is present inside the source patch... and so in my copy

thank you,