Why doesn't Pure Data allow other audio and video applications to run in parallel in Linux?

@ddw_music

I made some changes in QjackCtl and now it works!

Below are my steps to achieve this:

- Install pulseaudio-module-jack: aptitude install pulseaudio-module-jack

- add to "/etc/pulse/default.pa" the following text: "load-module module-jack-sink

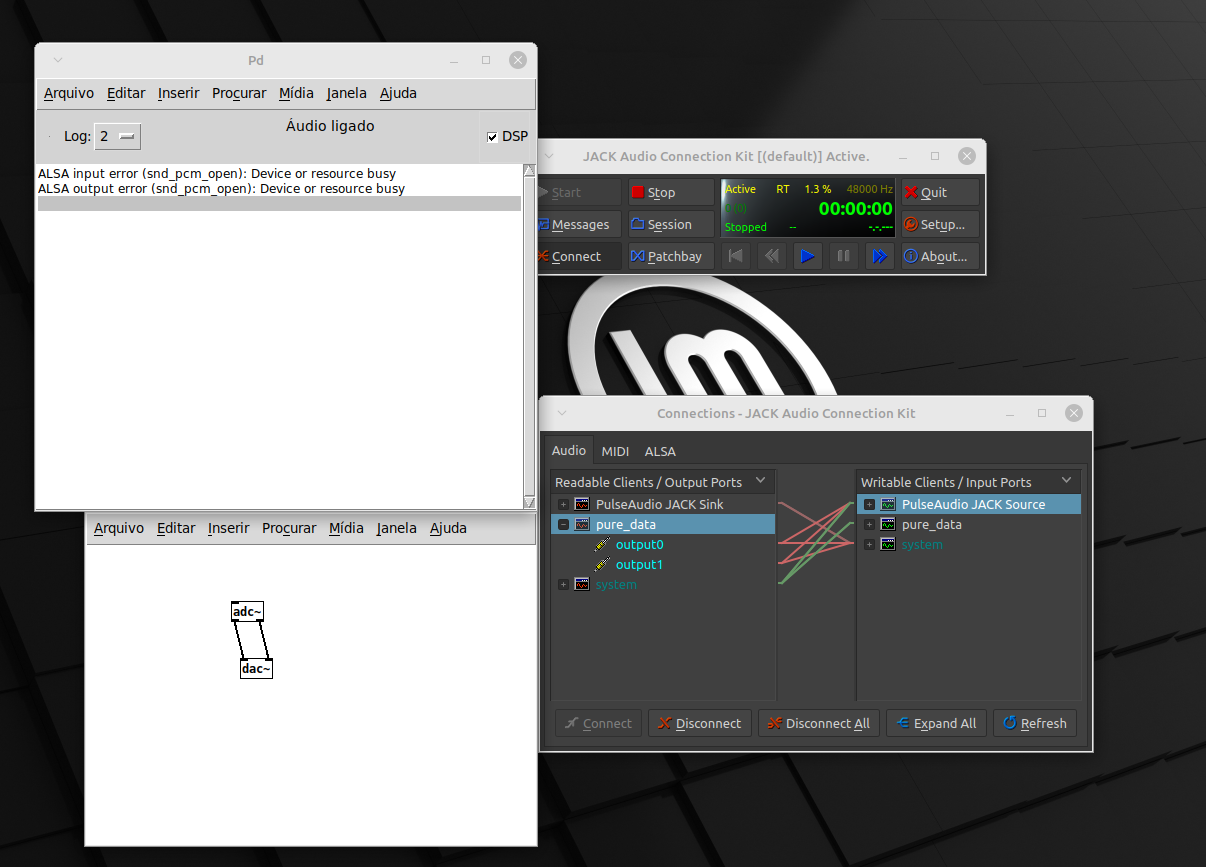

load-module module-jack-source" - Put Pure Data and QjackCTL with this setup -->

photo

photo

Now you can use Pure Data alongside other music and video applications, like YouTube, Twitch or VLC.

GRM plug-in called “Shuffling” for the granulation for Max in Pd?

@raynovich said:

And yeah, I kind of want to know more how it works. . .

Hm, well, let me propose an analogy. Analog synthesis is fairly standard: bandlimited waveforms, there are x number of ways to generate those, y number of filter implementations etc. But many of the oscillators and filters in, say, VCV Rack have a distinctive sound, because of the specific analog-emulation techniques and nonlinearities used per module. You can understand analog synthesis but that isn't enough to emulate a specific Rack module in Pd.

Re: Shuffling, I finally found this one sentence description: "Shuffling takes random sample fragments of variable dimensions from the last three seconds of the incoming sound and modulates its playback density and pitch" -- that's a granular delay.

A granular delay is fairly straightforward to implement in Pd: [delwrite~] is the grain source. Each grain is generated from [delread4~] where you can randomly choose the delay time, or sweep the delay time linearly to change the pitch. That will take care of "random sample fragments," "last three seconds," and "modulates... pitch" (you modulate playback density by controlling the rate at which grains are produced vs the duration of each grain -- normally I set an overlap parameter and grains-per-second, so that grain dur = overlap / grain_freq).
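The per-grain arithmetic described above can be sketched in Python. This is only an illustration under stated assumptions, not the Shuffling algorithm and not a Pd patch: the function name `grain_params`, its parameters, and the uniform random choice of delay time are all hypothetical. It uses the relation grain dur = overlap / grain_freq, and the fact that reading a delay line at playback rate `rate` means the delay time moves by `(1 - rate) * dur` over the life of the grain.

```python
import random


def grain_params(overlap, grains_per_sec, rate=1.0, buffer_sec=3.0, rng=random):
    """Return (dur, start_delay, end_delay) in seconds for one grain.

    All names here are illustrative. `rate` is the playback speed
    (1.0 = original pitch, 2.0 = an octave up).
    """
    # grain dur = overlap / grain_freq
    dur = overlap / grains_per_sec
    # With delay d(t) = d0 + (1 - rate) * t, the read position advances
    # at `rate`, so the delay time sweeps by this amount during the grain.
    sweep = (1.0 - rate) * dur
    # Keep both ends of the sweep inside the delay buffer [0, buffer_sec].
    lowest = max(0.0, -sweep)
    highest = min(buffer_sec, buffer_sec - sweep)
    if lowest > highest:
        raise ValueError("grain sweep does not fit in the delay buffer")
    start = rng.uniform(lowest, highest)
    return dur, start, start + sweep


if __name__ == "__main__":
    dur, start, end = grain_params(overlap=4, grains_per_sec=20, rate=2.0)
    print(f"dur={dur:.3f}s  delay sweeps {start:.3f}s -> {end:.3f}s")
```

With an overlap of 4 and 20 grains per second, each grain lasts 0.2 s. In a patch, the start and end delay times would presumably drive a ramp into [delread4~], with a window applied over the same duration.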

"... of variable dimensions" doesn't provide any useful technical detail.

But what isn't covered in the overview description of a granular delay is the precise connection between the Shuffling plug-in parameters and the audio processing. Since GRM Tools are closed-source, you would have to get hold of the plug-in and do a lot of tests (but if you have the plug-in, then just [vstplugin~] and done), or guess and you would end up with an effect that's somewhat like Shuffling, but maybe not exactly what the composer specified.

I'll send a grain-delay template a bit later, hang on.

hjh

[lincurve] based on SuperCollider's lincurve mapping

Here's a Pd-vanilla version of a linear-to-curved range mapping formula from SuperCollider. Published at https://github.com/jamshark70/hjh-abs . There are message-rate and signal-rate versions.

(Helpfile typo has been fixed but I won't bother to upload a new screenshot.)

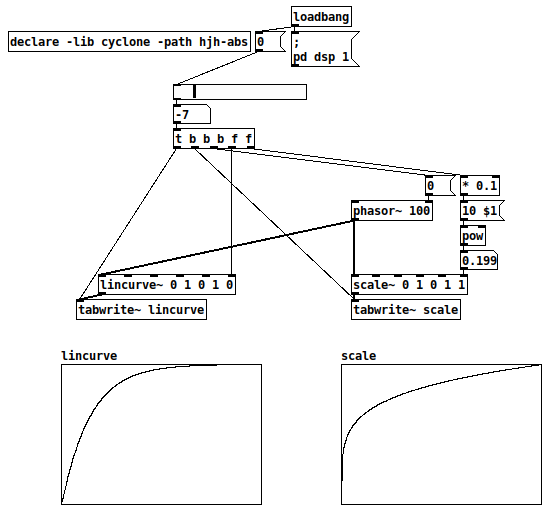

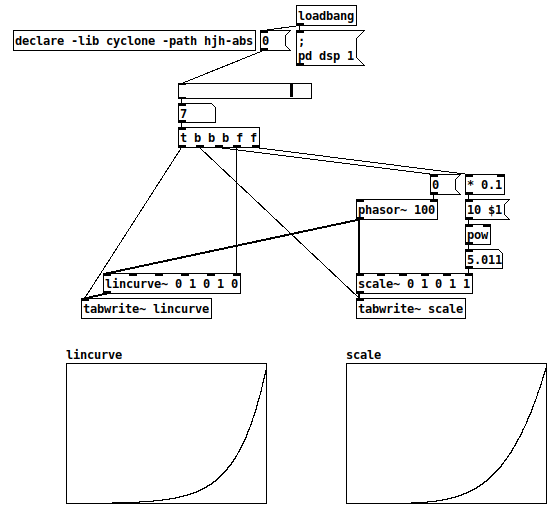

This is, of course, close to cyclone's [scale]. It's close -- the symmetry is different (help file says "better" but actually that's a matter of taste, or situational need). For reciprocal values of [scale]'s "exponential" factor, the respective curves seem to be a reflection around the diagonal (x <--> y); for negated values of [lincurve]'s curve factor, one curve is a 180-degree rotation of the other (x --> -x and y --> -y). Compare -7 vs +7 lincurve against 0.2 vs 5.0 scale:

-7 curve, ~0.2 exp:

+7 curve, ~5.0 exp (lincurve and scale are very very close here!):
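For readers who want to check the symmetry numerically, here is a minimal Python sketch of the mapping. It follows the lincurve formula as it appears in SuperCollider's class library to the best of my knowledge; it is not taken from the Pd abstraction, and the function signature and the input clipping (which assumes in_min < in_max) are assumptions made for illustration.

```python
import math


def lincurve(x, in_min=0.0, in_max=1.0, out_min=0.0, out_max=1.0, curve=-4.0):
    """Map x from a linear input range onto a curved output range."""
    # Clip to the input range (assumes in_min < in_max).
    x = min(max(x, in_min), in_max)
    scaled = (x - in_min) / (in_max - in_min)
    # A near-zero curve factor degenerates to a plain linear mapping.
    if abs(curve) < 0.001:
        return out_min + scaled * (out_max - out_min)
    grow = math.exp(curve)
    a = (out_max - out_min) / (1.0 - grow)
    b = out_min + a
    return b - a * grow ** scaled


if __name__ == "__main__":
    # Negating the curve factor rotates the curve 180 degrees:
    # on a bipolar range, f(-x, curve=-7) equals -f(x, curve=+7).
    for x in (-1.0, -0.5, 0.0, 0.3, 1.0):
        up = lincurve(x, -1, 1, -1, 1, curve=7)
        down = lincurve(-x, -1, 1, -1, 1, curve=-7)
        print(f"x={x:+.1f}  +7: {up:+.4f}  -7 at -x: {down:+.4f}")
```

The two printed columns should be negatives of each other, which is the rotation (x --> -x and y --> -y) described above.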

hjh

Wet-Dry Mix In Amplitude Modulation

Wet-Dry AM.pd

Hey everyone,

I'm experimenting with trying to get a clean fade between the dry carrier sound and the wet modulated sound when using AM but am running into a couple problems.

- When I make the modulating signal into a unipolar waveform, I can get a clean transition between the dry sound and modulated tremolo sound, but at faster modulation speeds the carrier can be heard with the other 2 frequency bands

- When I use the bipolar waveform, the transition between the dry signal and the tremolo signal is sloppy and the rate begins to sound like it doubles

I'm wondering why the carrier sound is present when modulating at high speeds with the unipolar waveform but not with the bipolar waveform? And I'm also curious why the tremolo speed feels like it doubles in its rate when crossfading with the bipolar waveform?

Ideally, I would like to have the best of both worlds in the patch where it crossfades cleanly into a full tremolo, but also where the carrier signal doesn't exist when the modulation is fully wet. Not sure if videos work here, but I'll try and post one: Amplitude Modulation.mp4
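The two cases described above can be checked numerically with a small Python sketch. It does not reproduce the attached patch: it assumes the crossfade is `(1 - wet) * dry + wet * (dry * mod)`, that the unipolar modulator is `0.5 + 0.5 * cos`, and the helper names `am` and `partial_amp` are made up for illustration. The frequencies are chosen so that each component lands exactly on a DFT bin.

```python
import math


def partial_amp(signal, freq_hz, sr):
    """Amplitude of one sinusoidal component, from a single-bin DFT."""
    n = len(signal)
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / sr) for i, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / sr) for i, s in enumerate(signal))
    return 2.0 * math.hypot(re, im) / n


def am(carrier_hz, mod_hz, wet, bipolar, sr=1000, n=1000):
    """Assumed crossfade: (1 - wet) * dry + wet * (dry * mod)."""
    out = []
    for i in range(n):
        t = i / sr
        dry = math.cos(2 * math.pi * carrier_hz * t)
        mod = math.cos(2 * math.pi * mod_hz * t)
        if not bipolar:
            mod = 0.5 + 0.5 * mod  # unipolar: range 0..1
        out.append((1.0 - wet) * dry + wet * dry * mod)
    return out


if __name__ == "__main__":
    for bipolar in (False, True):
        sig = am(100, 10, wet=1.0, bipolar=bipolar)
        amps = [partial_amp(sig, f, 1000) for f in (90, 100, 110)]
        print("bipolar" if bipolar else "unipolar", [round(a, 3) for a in amps])
```

Under these assumptions, the unipolar modulator's constant 0.5 offset passes the carrier through at half amplitude next to the two sidebands, while the bipolar modulator leaves only the sidebands. The sketch also suggests why the bipolar crossfade seems to double in rate: at wet = 0.5 the overall gain is `0.5 + 0.5 * mod`, identical to the fully wet unipolar case, and beyond that point the gain swings negative, so the loudness envelope folds over and peaks twice per modulator cycle.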

sinesum cannot indicate phase shift?

there is no easy way to do a phase shift with sinesum

Correct-ish. You can shift any partial by 180 degrees by multiplying its amplitude by -1, I suppose.

One could make a patch that could do a phase shift of certain partials and then combine the two or three or more arrays

Yes. The solution is in this thread: https://forum.pdpatchrepo.info/topic/3506/phase-timing-help-basic

Alternatively, if you need phase control of each partial you could stack a bunch of [cos~] modules driven by the same phasor like this:

[phasor~]

|

[*~ n] (where n is partial#)

|

[+~ p] (where p is phase)

|

[cos~]

|

[*~ a] (where a is amplitude (duh))
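The same stack can be written out in Python as a rough sketch. It assumes, as in Pd, that [cos~] takes its input in cycles (so p = 0.5 is a 180-degree shift); the function name `partial_stack` and the tuple layout are made up for illustration.

```python
import math


def partial_stack(phase, partials):
    """Sum of partials driven by one shared phasor value.

    phase: the phasor output, 0..1 over one cycle.
    partials: list of (n, p, a) = (partial number, phase offset in
    cycles, amplitude), mirroring [*~ n], [+~ p], [cos~], [*~ a].
    """
    return sum(a * math.cos(2 * math.pi * (n * phase + p)) for n, p, a in partials)


if __name__ == "__main__":
    # Shifting a partial by half a cycle matches negating its amplitude.
    for ph in (0.0, 0.1, 0.37):
        shifted = partial_stack(ph, [(3, 0.5, 1.0)])
        negated = partial_stack(ph, [(3, 0.0, -1.0)])
        print(f"phase={ph:.2f}  shifted={shifted:+.6f}  negated={negated:+.6f}")
```

The printed pairs agree, which is the 180-degree case mentioned earlier; any other value of p gives a phase shift that a sign flip alone cannot produce.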

Trying to reproduce a sound with Pd

@jameslo Analog components all have tolerances. +-10% is not uncommon for pots, so in a worst-case scenario any of the knobs on the two modules could be 20% off from each other, and all the other components in the module could push them even further apart. Generally, circuits are designed to minimize the effects of component tolerances, and tight-tolerance parts will be used in critical locations like frequency, but amplitude and PW are not critical locations; our ears will not notice small differences there. On top of that, components age and their values change, so two 30-year-old modules can be very different despite once being identical. Things like phase and frequency are not constants in the analog world: things drift, and oscillators which share a power source tend to sync when they get close. Most have to be very close in frequency to sync this way, so the beat frequency will be well under 1 Hz, but in certain situations you can hear it if you listen closely. When trying to copy a patch from the analog world into the digital one, knobs can only be used as a rough guide; you need to use your ears.

When you distorted the wave, you added harmonics and changed the strengths of the existing harmonics; only harmonics of equal strength but opposite phase will cancel fully. You most likely did have cancellation, just not full cancellation (it depends on how you distorted it). Set both oscillators to the same frequency and opposite phase, listen to the distorted one, and then add in the undistorted oscillator: you should hear a decrease in harmonic content from the cancellation. This will be most apparent with low to moderate levels of distortion. The more distortion, the more harmonics you are adding and the less the two waves have in common, so less gets canceled.

Edit: didn't quite finish that last sentence.
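A small Python sketch of the partial-cancellation point, under loud assumptions: the distortion here is a tanh waveshaper with a drive of 2, chosen purely for illustration since the original distortion is not specified, and `harmonic_amp` is a hypothetical helper that measures one harmonic over a single period.

```python
import math

N = 512  # samples in one period


def harmonic_amp(wave, k):
    """Amplitude of harmonic k of a one-period waveform (single-bin DFT)."""
    re = sum(s * math.cos(2 * math.pi * k * i / N) for i, s in enumerate(wave))
    im = sum(s * math.sin(2 * math.pi * k * i / N) for i, s in enumerate(wave))
    return 2.0 * math.hypot(re, im) / N


clean = [math.sin(2 * math.pi * i / N) for i in range(N)]
# Assumed distortion: tanh waveshaping, which adds odd harmonics.
distorted = [math.tanh(2.0 * s) for s in clean]
# Adding the undistorted oscillator in opposite phase is a subtraction.
mixed = [d - c for d, c in zip(distorted, clean)]

if __name__ == "__main__":
    for k in (1, 3, 5):
        print(k, round(harmonic_amp(distorted, k), 4), round(harmonic_amp(mixed, k), 4))
```

In this sketch the fundamental drops sharply in the mix, while harmonics 3 and 5 are untouched because the undistorted sine has nothing at those frequencies to cancel them.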

Is absolute phase audible?

@jameslo No time to check out your patch at the moment unfortunately..... maybe later..... looks interesting....

So this post might be total rubbish.

Absolute phase has some sort of meaning in analog audio because without equalisation a positive pressure at the microphone will produce a positive pressure at the speaker (unless it is wired out of phase) at exactly the same time... (not exactly true, as the electrical signal travels at close to the speed of light rather than instantaneously...... but for us humans it holds true).

In the digital domain that cannot be true as there will be latency..... which means a phase shift..... which means the relationship no longer holds in time....... but it is just a delay that you can only hear if you are also listening to the original signal......... although the output is no longer technically in "absolute phase".

In the air it gets much more complicated..... conical speaker diaphragms...... reflections.... humidity.. pressure.....temperature...... wind...... comb filtering with stereo or if you really want to mess it up 7:1 surround..... and two ears placed where exactly......

Those elements and more produce phase differences that our brains are highly sensitive to..... it's an evolutionary life saver.

So you will hear phase changes but if the phase is fixed there is no reference for your brain to access.

If you put a jack socket in your neck and feed your signal directly to your brain then you will be certain that you hear no artefacts.

So you hear changes..... but once the audio wave becomes "fixed" again in time you will "just" hear that wave.

If the gate changes the shape of the wave then I think you should be able to hear that though....

But the brain has trouble with tones because we are slow to recognise pitch..... from the Pd/doc/Manual......... cognition.zip ....... change_perception.pd is a good one.......

Probably.

I think.

David

Is absolute phase audible?

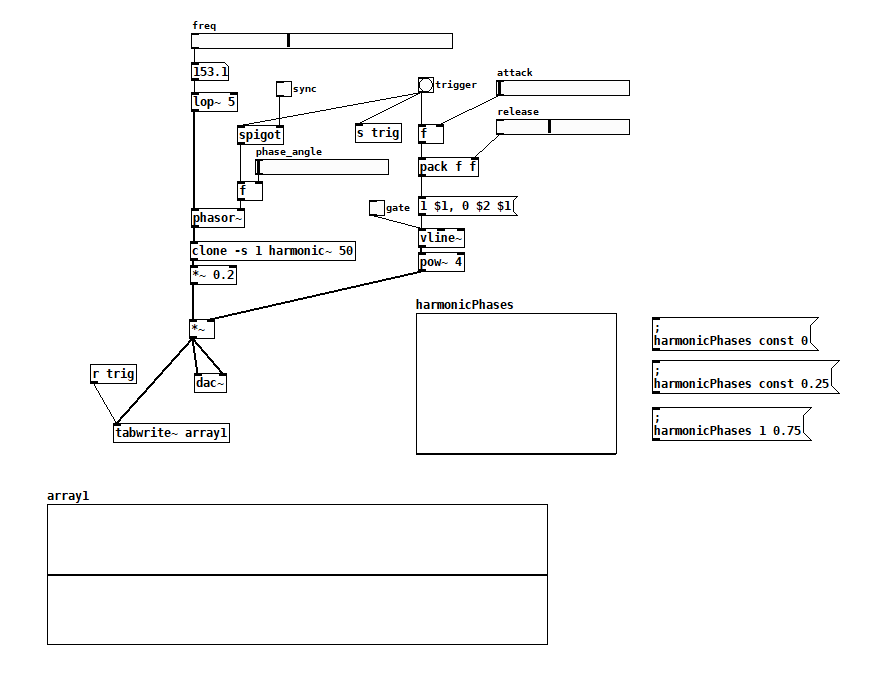

My friend, who is a much more experienced synthesist than I am, was telling me how important it was to consider whether the oscillators were synchronized with the gate (and if so, at what phase angle) because it affects the time-domain shape of the attack. He also said that his FFT-based spectrum analyzer showed no change even as the attack shape changed, but that you could still hear the difference.

I was doubtful because my understanding has always been that the ear can’t hear absolute phase and so that’s why, in additive synthesis, even if you randomize the phase of the harmonics, things should still sound the same. It’s particularly striking with squares and ramps; get the phase of even one harmonic wrong, and it looks like something completely different on a scope.

So I wrote a test in Pd, and while I don’t hear any differences in attack, I do hear differences in tone as the phases of the harmonics change. If you’re interested, try my test for yourself. I’m approximating a ramp using 50 harmonics, and you can control the phase of each harmonic using the phaseTable. Trigger the AR envelope with and without sync and see if you can hear a difference. Next, open up the gate so you get a steady tone and play with the phaseTable. You’ll hear the timbre change subtly, especially when changing the lower harmonics. Now I’m not sure I could tell you what phase I’m hearing in a double-blind test, but I do hear a difference. So am I wrong that the ear can’t hear absolute phase? And if I’m not wrong, why do I hear a difference? It sounds different on different speakers/headphones/earbuds so I’m guessing it has to do with asymmetries between the positive and negative excursions of the diaphragm?
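The scope-versus-spectrum point can be illustrated outside Pd with a short Python sketch. It is not a port of the attached patches: the 1/k harmonic amplitudes, the seed, and the helper names `ramp` and `harmonic_amp` are assumptions made for the example.

```python
import math
import random

N = 256  # samples in one period
H = 50   # number of harmonics


def ramp(phases):
    """One period of an H-harmonic ramp; phases[k-1] is in cycles."""
    return [
        sum(math.sin(2 * math.pi * (k * i / N + phases[k - 1])) / k
            for k in range(1, H + 1))
        for i in range(N)
    ]


def harmonic_amp(wave, k):
    """Amplitude of harmonic k of a one-period waveform (single-bin DFT)."""
    re = sum(s * math.cos(2 * math.pi * k * i / N) for i, s in enumerate(wave))
    im = sum(s * math.sin(2 * math.pi * k * i / N) for i, s in enumerate(wave))
    return 2.0 * math.hypot(re, im) / N


rng = random.Random(0)
aligned = ramp([0.0] * H)
scrambled = ramp([rng.random() for _ in range(H)])

if __name__ == "__main__":
    print("peak, aligned:  ", round(max(abs(s) for s in aligned), 3))
    print("peak, scrambled:", round(max(abs(s) for s in scrambled), 3))
    for k in (1, 2, 10, 50):
        print(k, round(harmonic_amp(aligned, k), 6), round(harmonic_amp(scrambled, k), 6))
```

The two waveforms differ sample by sample, yet every harmonic has the same magnitude in both, which is consistent with a magnitude-only spectrum analyzer showing no change. Whether the difference is audible is a separate question that this sketch does not address.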

additiveSaw~.pd

harmonic~.pd

Routing different signals to clone instances

@whale-av Thank you very much for your reply! In my case I need to route the signal outputs of each cloned object inside one module to other cloned objects inside another module. What I mean is that the first module receives signals from outside of it. I don’t need to route signals between instances of cloned objects inside the module; what I need is to take multiple signals coming from different instances of cloned objects inside one module, send them outside, and then send them to cloned instances inside another module. Hope I managed to explain well enough. Thanks a lot!

Routing different signals to clone instances

@djaleksei You can route separately to specific modules within [clone] by including the clone number in the [send~] and [receive~] names.

You do that using $ in the name.

Clone 1 will assign the value of 1 to $1...... clone 2 the value 2...... etc.

So if you use [receive~ $1-modulation] then each cloned module can receive separately.

If each osc module is to be paired with each modulation module then it is a simple task...... putting [send~ $1-modulation] in the modulation cloned abstraction will have fmmod1 send to osc1, fmmod2 send to osc2 etc.

You can give [clone] more arguments..... giving you the possibility to use $2, $3 etc. for more complexity...... i.e. if you one day add another bank of cloned oscillators to your patch.......

https://forum.pdpatchrepo.info/topic/9774/pure-data-noob/4

David.