Purr Data GSoC and Dictionaries in Pd

0.12 ms on my machine for the 5000th key, 0.033 ms for the 3rd key.

That's close to a fourfold difference (0.12 / 0.033 ≈ 3.6).

Imagine a table full of fortune cookies, each with one of 100 different people's names on it. You're asked to retrieve them for those people, and your only method is to check the cookies one by one. The person whose cookie is at the front of the table is lucky: if they need to leave in a hurry, you'll find their cookie immediately. The person whose cookie is at the far end is unlucky: you'll probably have to search through nearly all the cookies before you find theirs.

Now hang 26 chains from the edge of the table and label them with the letters A-Z. Hang each cookie on the chain whose letter matches the first letter of the name on it.

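Now you'll take a little more time to find the cookie that was previously sitting at the near edge of the table, since you first have to pick the right chain. But on average, retrieving cookies will be much faster: you only search one chain, so if the names are spread evenly over the 26 chains, the average search is roughly 26 times shorter.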

However, in the worst possible case everybody's name begins with the letter "D", and you're back where you started. So you need a better rule than the first letter of the name, one that brings the worst-case retrieval time closer to the average retrieval time. You'd prefer that "Dan" and "Dane" end up on different chains, for example.

The simple hashing algorithm in the gensym function, which registers Pd symbols, seems to do a decent job of spreading symbols across all the available slots in the table. As long as the algorithm that maps a name to a slot is quick to run, this brings the worst-case lookup time close to the average-case lookup time, and that's good enough for a soft real-time system.

If the table has 1000 slots and the time to turn a key into an index is negligible, then the only real expense is searching through the items hanging from the chain at that index. In your example of 5000 items, each chain would hold only about five items on average. So the average retrieval time will be much closer to 0.033 ms, and the worst case is unlikely to be much more than that.
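A minimal C sketch of the chained table from the fortune-cookie analogy, for anyone who wants to poke at the numbers. This is not Pd's actual `gensym` code: the djb2 hash, the 1000-slot size and the `ht_*` helper names are assumptions chosen to match the example above.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define NSLOTS 1000 /* assumed table size, as in the example above */

typedef struct entry {
    char *key;
    int value;
    struct entry *next; /* next link on this slot's chain */
} entry;

typedef struct {
    entry *slots[NSLOTS]; /* zero-initialise before use */
} hashtable;

/* djb2 string hash (an assumption, not necessarily gensym's exact
   function): cheap to compute, and it tends to spread near-identical
   names such as "Dan" and "Dane" across different slots. */
static unsigned long hash_str(const char *s)
{
    unsigned long h = 5381;
    while (*s)
        h = h * 33 + (unsigned char)*s++;
    return h;
}

/* Look up a key: hash once, then walk only that slot's chain. */
static entry *ht_find(const hashtable *t, const char *key)
{
    for (entry *e = t->slots[hash_str(key) % NSLOTS]; e; e = e->next)
        if (strcmp(e->key, key) == 0)
            return e;
    return NULL;
}

/* Insert or update a key (freeing is omitted to keep the sketch short). */
static void ht_put(hashtable *t, const char *key, int value)
{
    entry *e = ht_find(t, key);
    if (e) {
        e->value = value;
        return;
    }
    size_t slot = hash_str(key) % NSLOTS;
    e = malloc(sizeof *e);
    assert(e != NULL);
    e->key = malloc(strlen(key) + 1);
    assert(e->key != NULL);
    strcpy(e->key, key);
    e->value = value;
    e->next = t->slots[slot]; /* push onto the front of the chain */
    t->slots[slot] = e;
}

/* Longest chain = worst-case number of string comparisons per lookup. */
static size_t ht_longest_chain(const hashtable *t)
{
    size_t longest = 0;
    for (size_t i = 0; i < NSLOTS; i++) {
        size_t n = 0;
        for (const entry *e = t->slots[i]; e; e = e->next)
            n++;
        if (n > longest)
            longest = n;
    }
    return longest;
}
```

Filling it with 5000 keys (for example `snprintf`-generated names like `key0`..`key4999`) puts five entries per slot on average, so a lookup compares a handful of strings instead of scanning up to 5000; `ht_longest_chain` shows how far the worst case drifts from that average.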

Can Pd busy wait?

@jancsika said:

You might want to start looking at the source for these questions.

I know, I know... I can't understand my hesitance.

That's the intended behavior. I thought @jameslo was complaining that his patch froze the GUI indefinitely.

Yeah, while the busy wait is happening, I don't care that the GUI doesn't update. But I expected it to update once the wait was done, and I thought that if there happened to be N busy waits in some message evaluation tree, the GUI would update after each one. I don't think that's how GUI updates are scheduled, though. I rewrote the quicksort to remove the slowMotionInstantReplay and insert a busy wait every time two table elements are swapped. It looks like the array display isn't updated until the entire sort completes, whereas I was hoping to see each pair swap in sequence.

quicksort with busy wait.pd

(This isn't really the behavior I reported earlier when I said it "freezes the UI". In that case the table would only be partially sorted, but I haven't taken the time to reproduce it, mostly because I really don't think this approach is right in Pd.)

Edit: yeah, I can see now that I wrote "blocking the UI" previously, but I think that might have been the wrong way to describe what I was seeing. Sorry.
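The behaviour described above (the array only redraws after the whole sort) is what you'd expect if a message and everything it triggers run to completion before control returns to the part of the scheduler that services the GUI. Below is a toy C model of that assumption, not Pd's scheduler code; `request_redraw`, `scheduler_idle` and the spin count are hypothetical. Every swap asks for a redraw, but the redraw can only happen once the sort returns.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical GUI bookkeeping: handlers only *request* a redraw; the
   drawing itself happens later, when control is back in the scheduler. */
static int gui_dirty = 0;
static int redraw_count = 0;
static int drawn_first = -1; /* a[0] as of the last redraw */

static void request_redraw(void) { gui_dirty = 1; }

/* Stand-in for the point where the scheduler services the GUI. */
static void scheduler_idle(const int *a)
{
    if (gui_dirty) {
        redraw_count++;
        drawn_first = a[0];
        gui_dirty = 0;
    }
}

/* Burns CPU time but never hands control back to the scheduler. */
static void busy_wait(long spins)
{
    volatile long n = spins;
    while (n > 0)
        n--;
}

static void swap_and_wait(int *a, long i, long j)
{
    int tmp = a[i];
    a[i] = a[j];
    a[j] = tmp;
    request_redraw();
    busy_wait(100000);
}

/* Lomuto-partition quicksort on a[lo..hi] (inclusive bounds). */
static void quicksort(int *a, long lo, long hi)
{
    if (lo >= hi)
        return;
    int pivot = a[hi];
    long i = lo;
    for (long j = lo; j < hi; j++)
        if (a[j] < pivot)
            swap_and_wait(a, i++, j);
    swap_and_wait(a, i, hi);
    quicksort(a, lo, i - 1);
    quicksort(a, i + 1, hi);
}

/* One incoming message: the whole sort runs inside it, and only then
   does control return to the scheduler, which redraws at most once. */
static void handle_sort_message(int *a, long n)
{
    assert(n > 0);
    quicksort(a, 0, n - 1);
    scheduler_idle(a);
}
```

However many swaps happen, `redraw_count` ends at 1 and the drawn state is the sorted one. To watch each swap, one option is to spread the work across scheduler ticks (say, one swap per [delay] or [metro] tick) rather than stalling inside a single message.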

JASS, Just Another Synth...Sort-of, codename: Gemini (UPDATED: esp with midi fixes)

JASS, Just Another Synth...Sort-of, codename: Gemini (UPDATED TO V-1.0.1)

jass-v1.0.1( esp with midi fixes).zip

1.0.1-CHANGES:

- Fixed issues with midi routing, re the mode selector (mentioned below)

- Upgraded the midi mode "fetch" abstraction to be less granular

- Fix (for midi) so changing cc["14","15","16"] to "rnd" outputs a random wave (It has always done this for non-midi.)

- Added a midi-mode-tester.pd (connect Pd's midi out to Pd's midi in to use it)

- Upgrade: cc-56 and cc-58 can now change pbend-cc and mod-cc in all modes

- Update: this readme

INFO: Values being set to 0 on the initial cc changes is to be expected (given midi).

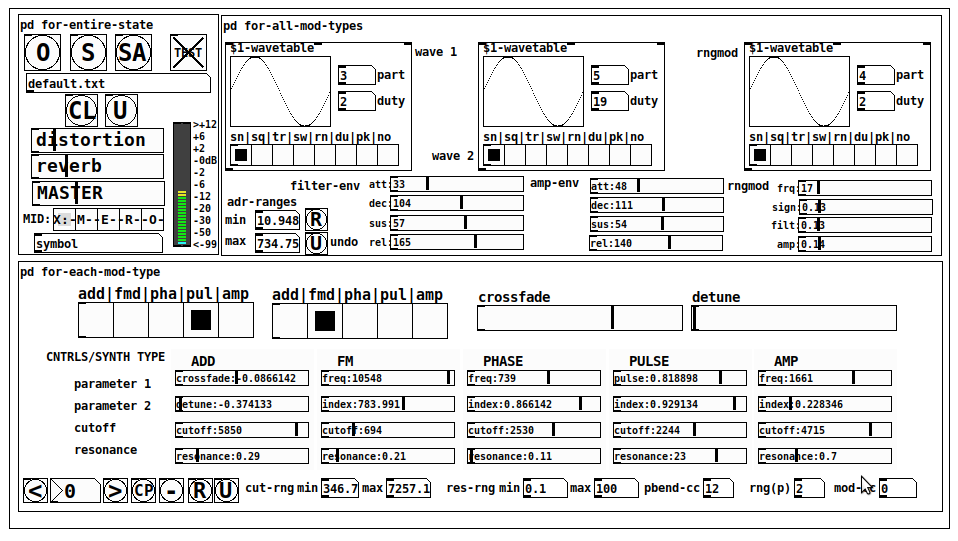

JASS is a clone-based, three-wavetable, 16-voice polyphonic, dual-channel synth.

With...

- The first two wavetables, combined in 1 of 5 possible ways per channel, with the two channels then added together. Examples: additive + frequency modulation, phase + pulse modulation, pulse + amplitude modulation, fm + fm, etc.

- The third wavetable is a ring modulator, embedded inside each mod type

- 8 wave types, including a random with a settable number of partials and a square with a settable dutycycle

- A vcf~ filter embedded inside each modulation type

- Settable attack-decay-release, cutoff, and resonance ranges; changing a range immediately and globally recalculates all relevant values

- Four parameters per mod type: p1, p2, cutoff, and resonance

- State saving, both at the global level (wavetables, env, etc.) and for multiple "substates" of for-each-mod-type settings

- Distortion, reverb

- Midi input, paying special attention to 8-knob USB midi controllers (see below for details)

- zexy limiters on each channel, after the distortion and just before dac~

Instructions

Requires: zexy

for-entire-state

- O: Open preset. "default.txt" is loaded by...default

- S: Save preset (all values, including the multiple substates). (Note: I have not included any presets besides the default, which has 5 substates.)

- SA: Save as

- TEST: A sample player

- symbol: The filename of the currently loaded preset

- CL: Clear, sets all but a few values to 0

- U: Undo CL

- distortion, reverb, MASTER: operate on the total output, just before the limiter.

- MIDI: each selection corresponds to a pgmin value of 123, 124, 125, 126, 127 respectively (see below for more information)

    - X: Default midi config, cc[1,7,8-64] available

    - M: Modulators; cc[10-17] routed to the ch1 & ch2 p1, p2, cutoff, and q controls

    - E: Envelopes; cc[10-17] routed to the filter- and amp-env controls

    - R: Ranges; cc[10-17] routed to adr-min/max, cutoff min/max, resonance min/max, distortion, and reverb

    - O: Other; cc[10-17] routed to the rngmod controls, the 3 wavetypes, and crossfade

    - symbol: you may enter 8 cc#'s here to replace the default [10-17] above to suit your midi controller's knob layout; these settings are saved to file upon entry

- vu: for total out to dac~

for-all-mod-types

- /wavetable:

    - graph: displays the chosen wavetype

    - part: partials, the number of partials to use for the "rn" wavetype; the resulting random sinesum is saved with the preset

    - duty: duty cycle for the "du" wavetype

    - type: sin | square | triangle | saw | random | duty | pink (pink noise: a random sinesum with 128 partials; it is not saved with the preset) | noise (a random sinesum with 2051 partials, also not saved)

- filter-env: (self-explanatory)

- amp-env: (self-explanatory)

- rngmod: self-explanatory, except that "sig" applies to the modulated signal just before it goes into the vcf~

- adr-range: min,max[0-10000]; changing these values immediately recalculates all filter- and amp-env values, scaled to the new range

- R: randomizes all for-all-mod-types values, excluding wavetype "noise"; remember that you must S or SA the preset to save the results

- U: Undoes R

for-each-mod-type

- mod-type-1: (In all cases, wavetable1 is the carrier and wavetable2 is the modulator); additive | frequency | phase | pulse | amplitude modulation

- mod-type-2: Same as above; mod-type-2 may be the same type as mod-type-1

- crossfade: Between ch1 and ch2

- detune: Applied to the midi pitch going into ch2

- for-each-clone-type controls:

    - p1, p2: (self-explanatory)

    - cutoff, resonance: (self-explanatory)

- navigation: Cycles through the saved substates of for-each-mod-type settings (note: they are lines on the end of a [text])

- CP: Copy the current settings, i.e. add a line to the end of the [text] identical to the current substate

- -: Delete the current substate

- R: Randomize the substate settings (all but a few)

- U: Undo R

- cut-rng: min,max[0-20000] As adr-range above, this immediately recalculates all cutoff values

- res-rng: min,max[0-100], same as previously but for q

- pbend: cc,rng: the pitchwheel may be assigned to a control by setting this to a value >7 (see midi table below for possibilities); rng is in midi pitches (+/- the value you enter)

- mod-cc: the mod-wheel may be assigned to a control [7..64] by setting this value
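The range controls above (adr-range, cut-rng, res-rng) recalculate existing values when min/max change, and pbend maps the wheel to ± a number of midi pitches. One way to get that behaviour, sketched here as an assumption rather than JASS's actual patch logic, is to keep each parameter as a normalised 0..1 position and map it through the current range whenever either changes. The function names are hypothetical, and `bend` is assumed to be 0..16383 with 8192 as centre, which is what Pd vanilla's [bendin] outputs; check what your Pd flavour sends.

```c
#include <assert.h>

/* Map a stored 0..1 position onto the current [min, max] range.
   Changing min or max and re-running this rescales every value. */
static double rescale(double norm, double min, double max)
{
    assert(norm >= 0.0 && norm <= 1.0);
    return min + norm * (max - min);
}

/* Convert a 14-bit pitch-bend value (assumed 0..16383, centre 8192)
   into an offset in midi pitches, limited to +/- range. */
static double pbend_offset(int bend, double range)
{
    assert(bend >= 0 && bend <= 16383);
    return (bend - 8192) / 8192.0 * range;
}
```

With this mapping a full upward bend reaches 8191/8192 of the range, the usual small asymmetry of 14-bit pitch bend.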

midi-implementation

| name | value | description |
|---|---|---|
| sysex | not supported | |
| pgmin | 123, 124, 125, 126, 127 | sets the midi mode |
| notein | 0-127 | |
| bendin | | pbend-cc = 7: pitch bend; otherwise routed to the cc# chosen from the tables below |
| touch | not supported | |
| polytouch | not supported | |

cc - basic (for all midi-configs)

| cc | name | description |
|---|---|---|
| 1 | mod-wheel | assignable |
| 7 | volume | master |

cc - "X" mode/pgmin=123

| cc | parameter |
|---|---|
| 8 | wavetype1 |
| 9 | partials 1 |
| 10 | duty 1 |
| 11 | wavetype2 |
| 12 | partials 2 |
| 13 | duty 2 |
| 14 | wavetype3 |
| 15 | partials 3 |
| 16 | duty 3 |
| 17 | filter-att |
| 18 | filter-dec |
| 19 | filter-sus |
| 20 | filter-rel |
| 21 | amp-att |
| 22 | amp-dec |
| 23 | amp-sus |
| 24 | amp-rel |
| 25 | rngmod-freq |
| 26 | rngmod-sig |
| 27 | rngmod-filt |
| 28 | rngmod-amp |
| 29 | distortion |
| 30 | reverb |
| 31 | master |
| 32 | mod-type 1 |
| 33 | mod-type 2 |
| 34 | crossfade |
| 35 | detune |
| 36 | p1-1 |
| 37 | p2-1 |
| 38 | cutoff-1 |
| 39 | q-1 |
| 40 | p1-2 |
| 41 | p2-2 |
| 42 | cutoff-2 |
| 43 | q-2 |
| 44 | p1-3 |
| 45 | p2-3 |
| 46 | cutoff-3 |
| 47 | q-3 |
| 48 | p1-4 |
| 49 | p2-4 |
| 50 | cutoff-4 |
| 51 | q-4 |
| 52 | p1-5 |
| 53 | p2-5 |
| 54 | cutoff-5 |
| 55 | q-5 |
| 56 | pbend-cc |
| 57 | pbend-rng |
| 58 | mod-cc |
| 59 | adr-rng-min |
| 60 | adr-rng-max |
| 61 | cut-rng-min |
| 62 | cut-rng-max |
| 63 | res-rng-min |
| 64 | res-rng-max |

cc - Modes M, E, R, O

JASS is designed so that a single knob can serve multiple purposes without overwriting the previously stored value as soon as you turn it, which matters especially for 8-knob controllers.

For instance, in mode M (pgm=124) your ccs control the parameters listed below. When you switch modes, the same knob then changes the values for that mode.

To take over a value, turn the knob until it reaches the previously stored value for that mode and knob.

Once it reaches that value, the knob begins to change the current value.
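The knob behaviour described above is often called "pickup" or "soft takeover". A minimal C sketch of the rule as described, assuming raw 0..127 cc values and one stored value per knob per mode; the struct and function names are hypothetical, and the crossing check is an addition, since a fast-turned knob can skip over the exact stored value.

```c
#include <assert.h>
#include <stdbool.h>

/* One knob's stored value for one mode (M, E, R or O). */
typedef struct {
    int value;      /* value currently held by this mode, 0..127 */
    bool picked_up; /* true once the knob has reached `value` */
    int last_cc;    /* previous raw cc while not picked up; -1 = none */
} pickup;

/* Call when switching into this mode: the physical knob is
   probably somewhere else, so stop following it for now. */
static void pickup_enter_mode(pickup *p)
{
    p->picked_up = false;
    p->last_cc = -1;
}

/* Feed one incoming cc value. Returns true if the knob now controls
   the value (and `value` was set to cc), false if the cc was ignored. */
static bool pickup_feed(pickup *p, int cc)
{
    assert(cc >= 0 && cc <= 127);
    if (!p->picked_up) {
        bool hit = (cc == p->value);
        /* crossing check: a fast turn can skip the exact value */
        bool crossed = p->last_cc >= 0 &&
            ((p->last_cc < p->value && cc > p->value) ||
             (p->last_cc > p->value && cc < p->value));
        p->last_cc = cc;
        if (!hit && !crossed)
            return false;
        p->picked_up = true;
    }
    p->value = cc;
    return true;
}
```

For example, with 64 stored for a mode, turning the knob from 10 up to 40 changes nothing; the move from 40 to 70 crosses 64, and from then on the knob sets the value directly.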

cc - Modes M, E, R, O assignments

Here [10..17] stands for the midi cc#'s you enter in the MIDI symbol field (as mentioned above) to match your particular midi controller.

| cc# | M (pgm=124) | E (pgm=125) | R (pgm=126) | O (pgm=127) |
|---|---|---|---|---|
| 10 | ch1:p1 | filter-env:att | adr-rng-min | rngmod:freq |
| 11 | ch1:p2 | filter-env:dec | adr-rng-max | rngmod:sig |
| 12 | ch1:cutoff | filter-env:sus | cut-rng-min | rngmod:filter |
| 13 | ch1:q | filter-env:rel | cut-rng-max | rngmod:amp |
| 14 | ch2:p1 | amp-env:att | res-rng-min | wavetype1 |
| 15 | ch2:p2 | amp-env:dec | res-rng-max | wavetype2 |
| 16 | ch2:cutoff | amp-env:sus | distortion | wavetype3 |
| 17 | ch2:q | amp-env:rel | reverb | crossfade |

In closing

If you have anywhere close to as much fun using, experimenting with, or trying out this patch as I had making it, I will consider it a success.

While it was an arduous learning curve (it's the first synth I ever built), it has been an enormous pleasure to listen to as I worked on it, sounding better and better with each pass.

Rather than say too much, I will say this:

Enjoy. May it bring a smile to your face.

Peace through love of creating and sharing.

Sincerely,

Scott

3-op FM synth with mod matrix

@RandLaneFly Hi, I'll do my best to give you some replies:

- yes, offset just adds a fixed frequency in hertz regardless of the ratio or the note played. It's useful if you want to use an operator as an LFO;

- I used clip because the patch can receive values via messages from the parent patch; the clip keeps out unwanted values, whatever you send to it. It has to do with the GUI of the patch rather than with the synth itself;

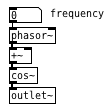

- here is pphasor~.pd: it's just a phasor with sample-accurate phase reset, so the operators can be synced accurately. I adapted it from an old patch I found, but to be honest I can't remember what I changed. It only replaces phasor~, so you'd still need cos~ afterwards;

- about wrap~ and cos~: normally, when you do phase modulation with phasor~ and cos~, you don't need wrap~, because cos~ already wraps whatever phase it receives, even if it's outside the 0..1 range. In this case, instead of cos~, I used a table to store the waveform, for two reasons: 1) I wanted to be able to change the resolution of the sine, and 2) I wanted to be able to use waveforms other than a sine wave. That said, if your phase (after being scrambled around by all the modulators acting on it) has to address a table, it needs to stay within the range of that table; otherwise you would be addressing points outside the table (which would result in silence). This is why the phase is wrap~ped to the 0..1 range and then multiplied by the length of the table in samples (4096 in this case);

- I don't understand what you mean by:

@RandLaneFly said:

But I take it after that it's sending out the phases to the tables, what is the purpose of the line being formed and sent into the right inlet?

The tabread4~ object is the output waveform of the specific operator you're looking at. Afterwards it's multiplied by the envelope (received on the right inlet of the *~ object), and then it's sent both to the output (i.e. what you hear) and to the phase tables.

- If by "the math in the table patch" you mean the fexpr~ object, that is exactly the same as found in the thread I mentioned in the first post. It averages the last two samples in order to filter very high, harsh frequencies in the feedback path. It's possible that FM8 uses a different filter, which would result in a different sound, but I have no way of knowing that.

I'm afraid the only resource I used for making this mess of a patch was that single thread about feedback, which I then expanded for multiple operators. I very much like how it sounds, but it's incredibly expensive with polyphony: on my computer 3 operators seem to be the limit. I wanted to try six but never managed.

My program segfaults when trying to use PD Extra patches

Hi there,

I've been playing around with libpd for the last few months, trying to make a little app that does audio synthesis from some computer vision algorithms. The program is now complete. The only thing is, it's a little CPU-intensive and I'd like to make it more efficient.

Basically, what I'm trying to do now is incorporate the [pd~] object in pd-extra to take advantage of parallelism. I'm writing my code in C++ right now and compiling it on a Linux machine running Ubuntu Studio.

I set up my program and Makefile in a way similar to the pdtest_freeverb c++ example. After some wrangling, everything compiles fine. But when I try to run it,

```
Segmentation fault (core dumped)
```

Boo. At this point I try compiling pdtest_freeverb itself. It compiles fine (although I have to add a #include line in main.cpp for usleep). When I run it,

```
error: signal outlet connect to nonsignal inlet (ignored)
verbose(4): ... you might be able to track this down from the Find menu.
Segmentation fault (core dumped)
```

So then I try rebuilding libpd. I delete the old one with make clobber and then make with UTIL=true and EXTRA=true. Still no dice. I delete the whole libpd folder from my hard drive and clone it again from GitHub. I build libpd with a bunch of different combinations of options. I check out a couple of different versions of libpd and do the same. Nothing seems to work; I'm still getting segfaults.

So, I am at a bit of a loss here. I don't know why these segfaults are happening. Any help would be ever so appreciated as I have been stuck on this for a while now.

Here is the output of gdb's `backtrace full`, in case it helps:

pdtest_freeverb:

```
#0 0x000055555557ad2c in freeverb_dsp (x=0x5555557d1820, sp=<optimized out>)
    at src/externals/freeverb~.c:550
No locals.
#1 0x00007ffff7a966b3 in ugen_doit () from ../../../libs/libpd.so
No symbol table info available.
#2 0x00007ffff7a96bd3 in ugen_doit () from ../../../libs/libpd.so
No symbol table info available.
#3 0x00007ffff7a96bd3 in ugen_doit () from ../../../libs/libpd.so
No symbol table info available.
#4 0x00007ffff7a96bd3 in ugen_doit () from ../../../libs/libpd.so
No symbol table info available.
#5 0x00007ffff7a985ef in ugen_done_graph () from ../../../libs/libpd.so
No symbol table info available.
#6 0x00007ffff7aa5797 in canvas_dodsp () from ../../../libs/libpd.so
No symbol table info available.
#7 0x00007ffff7aa58cf in canvas_resume_dsp () from ../../../libs/libpd.so
No symbol table info available.
#8 0x00007ffff7aa6ad2 in glob_evalfile () from ../../../libs/libpd.so
No symbol table info available.
#9 0x00007ffff7b6d876 in libpd_openfile () from ../../../libs/libpd.so
No symbol table info available.
#10 0x000055555556e085 in pd::PdBase::openPatch (path="./pd", patch="test.pd",
    this=0x555555783358 <lpd>) at ../../../cpp/PdBase.hpp:140
        handle = <optimized out>
        dollarZero = <optimized out>
        handle = <optimized out>
        dollarZero = <optimized out>
#11 initAudio () at src/main.cpp:55
        bufferFrames = 128
        patch = {_handle = 0x7fffffffdd50, _dollarZero = 6, _dollarZeroStr = "", _filename = "",
          _path = ".\000\000\000\000\000\000\000hcyUUU\000\000\020\254M\367\377\177\000\000\000\275\271\270\254\335\\X\000\000\000\000\000\000\000\000`cyUUU\000\000`3xUUU\000\000\000\000\000\000\000\000\000\000@cyUUU\000\000P xUUU\000\000\000\000\000\000\000\000\000\000\070eVUUU\000\000\006\000\000\000\377\000\000\000\000\aN\367\377\177\000\000\200\aN\367\377\177\000\000\000\004N\367\377\177\000\000\300\002N\367\377\177\000\000\000\275\271\270\254\335\\X\000\000\000\000%\000\000\000(F\230\366\377\177\000\000\360\332UUUU\000\000\000\275\271\270\254\335\\X\004\000\000\000\000\000\000\000h\000\000\000\000\000\000\000\004\000\000\000\000\000\000\000"...}
        parameters = {deviceId = 6, nChannels = 0, firstChannel = 3099180288}
        options = {flags = 4294958528, numberOfBuffers = 32767,
          streamName = <error reading variable: Cannot create a lazy string with address 0x0, and a non-zero length.>, priority = -8728}
#12 0x000055555555af09 in main (argc=<optimized out>, argv=<optimized out>) at src/main.cpp:85
No locals.
```

Thanks again for any help!

3-op FM synth with mod matrix

@zxcvbs Hi, yes I realise the patch is very counterintuitive, I'll do my best to try and explain what's going on. Let's forget about polyphony and consider one voice.

The PMops abstraction is the single voice; inside it are the three operators (PMop, both badly named, sorry) and the tables subpatch. The basic structure of each operator could be reduced to a simple phase-modulation chain (phasor~ into +~ into cos~),

except that the +~ object receives its phase not from a fixed modulator but from a table. Each operator has its own table, so operator 1 receives its phase from tabreceive~ $0-phase1, which is connected to +~.

At the same time (to populate those tables), the output from cos~ of each operator is sent to all tables; how much of the signal goes to each is decided in the modulation matrix.

I chose this arrangement because it lets each operator modulate the phase of any operator, including itself (feedback). As explained in the first post, feedback has to be done with the operator running at block~ 1 in order to sound good, but if an operator receives its feedback every sample (block~ 1) while the phase from the other modulators only arrives every 64 samples (the default block size), the result sounds bad. This is why I used this mess of tables: to get an FM8-style modulation matrix.

If you want to implement hard-coded algorithms, the same thing can be done without tables, patching each algorithm in its own abstraction or subpatch and switch between them as @whale-av suggested in your thread. Plus I see none of your algorithms have feedback, so they don't even need to run at block~ 1, and therefore the approach with tables is really not necessary.

Hope this clarifies things a bit.

ofelia: deque class

@Cuinjune I tried to create a video frame table ("circular video buffer").

It is possible to read (draw) video from the table but somehow only the current frame is stored at every position of the table.

Here is an example patch. "frames" is the frame table. "N" is the table size. At the left is the texture from the table, at the right the original video. Do you know what I do wrong?

I also tried ofPixels instead of ofTexture with the same result.

I also tried storing several images (JPGs) in the frame buffer instead of video, and that does seem to work, probably because each image has its own source.

```lua
if type(window) ~= "userdata" then;
    window = ofWindow();
end;

local canvas = pdCanvas(this);
local clock = pdClock(this, "setup");
local videoPlayer = ofVideoPlayer();
local outputList = {};
local frames = {};
local N = 150;

function ofelia.bang();
    ofWindow.addListener("setup", this);
    ofWindow.addListener("update", this);
    ofWindow.addListener("draw", this);
    ofWindow.addListener("exit", this);
    window:setPosition(50, 100);
    window:setSize(800 + 40, 600 + 40);
    if type(window) ~= "userdata" then;
        window = ofWindow();
    end;

    window:create();
    if ofWindow.exists then;
        clock:delay(0);
    end;
end;

function ofelia.free();
    window:destroy();
    ofWindow.removeListener("setup", this);
    ofWindow.removeListener("update", this);
    ofWindow.removeListener("draw", this);
    ofWindow.removeListener("exit", this);
end;

function ofelia.setup();
    ofSetWindowTitle("Video Player");
    ofBackground(0, 0, 0, 255);
end;

function ofelia.moviefile(path);
    videoPlayer:load(path);
    videoPlayer:setLoopState(OF_LOOP_NORMAL);
    videoPlayer:setPaused(true);
end;

function ofelia.setSpeed(f);
    videoPlayer:setSpeed(f / 100);
end;

function ofelia.play();
    videoPlayer:play();
end;

function ofelia.stop();
    videoPlayer:setFrame(0);
    videoPlayer:setPaused(true);
end;

function ofelia.setPaused(f);
    if f == 1 then pause = true;
    else pause = false;
    end;
    videoPlayer:setPaused(pause);
end;

function ofelia.setFrame(f);
    videoPlayer:setFrame(f);
end;

function ofelia.setPosition(f);
    videoPlayer:setPosition(f);
end;

function ofelia.setVolume(f);
    videoPlayer:setVolume(f / 100);
end;

function ofelia.update();
    videoPlayer:update();
    if videoPlayer:isFrameNew() then;
        table.insert(frames, 1, videoPlayer:getTexture());
        if #frames > N then;
            table.remove(frames, N + 1);
        end;
    end;
end;

function ofelia.draw();
    videoPlayer:draw(420, 20, 400, 600);
    if #frames == N then;
        frames[ofelia.frame]:draw(20, 20, 400, 600);
    end;
    outputList[1] = videoPlayer:getPosition();
    outputList[2] = videoPlayer:getCurrentFrame();
    outputList[3] = videoPlayer:getTotalNumFrames();
    return outputList;
end;

function ofelia.exit();
    videoPlayer:close();
end;
```
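One possible explanation for every slot showing the current frame (an assumption, not confirmed in this thread) is that `getTexture()`, and likewise the player's pixels, hand back a reference to the player's single internal buffer, so the table ends up holding N references to the same object that the player keeps overwriting. The usual remedy is to copy each frame into storage owned by its slot; in the Lua code that would mean allocating a separate texture or pixel object per slot and copying into it, rather than storing the object the player returns. The C sketch below shows the copying version as a plain ring buffer; the tiny frame size and all names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define FRAME_BYTES 16 /* tiny stand-in for width * height * channels */

typedef struct {
    unsigned char *slots; /* n frames of FRAME_BYTES each, owned by us */
    size_t n;             /* capacity in frames */
    size_t head;          /* slot index of the newest frame */
    size_t count;         /* frames stored so far, up to n */
} frame_ring;

static int ring_init(frame_ring *r, size_t n)
{
    assert(n > 0);
    r->slots = calloc(n, FRAME_BYTES);
    r->n = n;
    r->head = 0;
    r->count = 0;
    return r->slots != NULL;
}

/* Copy the frame's bytes into the next slot, overwriting the oldest
   frame when full. Storing the `frame` pointer instead would make every
   slot alias the player's one reused buffer. */
static void ring_push(frame_ring *r, const unsigned char *frame)
{
    r->head = (r->head + 1) % r->n;
    memcpy(r->slots + r->head * FRAME_BYTES, frame, FRAME_BYTES);
    if (r->count < r->n)
        r->count++;
}

/* age 0 = newest frame, age 1 = the one before it, and so on. */
static const unsigned char *ring_get(const frame_ring *r, size_t age)
{
    assert(age < r->count);
    return r->slots + ((r->head + r->n - age) % r->n) * FRAME_BYTES;
}

static void ring_free(frame_ring *r)
{
    free(r->slots);
    r->slots = NULL;
}
```

Pushing the same reused buffer five times with different contents into a 3-slot ring leaves the three most recent, distinct frames, which is the behaviour the video table is meant to have.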

Web Audio Conference 2019 - 2nd Call for Submissions & Keynotes

Apologies for cross-postings

Fifth Annual Web Audio Conference - 2nd Call for Submissions

The fifth Web Audio Conference (WAC) will be held 4-6 December, 2019 at the Norwegian University of Science and Technology (NTNU) in Trondheim, Norway. WAC is an international conference dedicated to web audio technologies and applications. The conference addresses academic research, artistic research, development, design, evaluation and standards concerned with emerging audio-related web technologies such as Web Audio API, Web RTC, WebSockets and Javascript. The conference welcomes web developers, music technologists, computer musicians, application designers, industry engineers, R&D scientists, academic researchers, artists, students and people interested in the fields of web development, music technology, computer music, audio applications and web standards. The previous Web Audio Conferences were held in 2015 at IRCAM and Mozilla in Paris, in 2016 at Georgia Tech in Atlanta, in 2017 at the Centre for Digital Music, Queen Mary University of London in London, and in 2018 at TU Berlin in Berlin.

The internet has become much more than a simple storage and delivery network for audio files, as modern web browsers on desktop and mobile devices bring new user experiences and interaction opportunities. New and emerging web technologies and standards now allow applications to create and manipulate sound in real-time at near-native speeds, enabling the creation of a new generation of web-based applications that mimic the capabilities of desktop software while leveraging unique opportunities afforded by the web in areas such as social collaboration, user experience, cloud computing, and portability. The Web Audio Conference focuses on innovative work by artists, researchers, students, and engineers in industry and academia, highlighting new standards, tools, APIs, and practices as well as innovative web audio applications for musical performance, education, research, collaboration, and production, with an emphasis on bringing more diversity into audio.

Keynote Speakers

We are pleased to announce our two keynote speakers: Rebekah Wilson (independent researcher, technologist, composer, co-founder and technology director for Chicago’s Source Elements) and Norbert Schnell (professor of Music Design at the Digital Media Faculty at the Furtwangen University).

More info available at: https://www.ntnu.edu/wac2019/keynotes

Theme and Topics

The theme for the fifth edition of the Web Audio Conference is Diversity in Web Audio. We particularly encourage submissions focusing on inclusive computing, cultural computing, postcolonial computing, and collaborative and participatory interfaces across the web in the context of generation, production, distribution, consumption and delivery of audio material that especially promote diversity and inclusion.

Further areas of interest include:

- Web Audio API, Web MIDI, Web RTC and other existing or emerging web standards for audio and music.

- Development tools, practices, and strategies of web audio applications.

- Innovative audio-based web applications.

- Web-based music composition, production, delivery, and experience.

- Client-side audio engines and audio processing/rendering (real-time or non real-time).

- Cloud/HPC for music production and live performances.

- Audio data and metadata formats and network delivery.

- Server-side audio processing and client access.

- Frameworks for audio synthesis, processing, and transformation.

- Web-based audio visualization and/or sonification.

- Multimedia integration.

- Web-based live coding and collaborative environments for audio and music generation.

- Web standards and use of standards within audio-based web projects.

- Hardware and tangible interfaces and human-computer interaction in web applications.

- Codecs and standards for remote audio transmission.

- Any other innovative work related to web audio that does not fall into the above categories.

Submission Tracks

We welcome submissions in the following tracks: papers, talks, posters, demos, performances, and artworks. All submissions will be single-blind peer reviewed. The conference proceedings, which will include both papers (for papers and posters) and extended abstracts (for talks, demos, performances, and artworks), will be published open-access online with Creative Commons attribution, and with an ISSN number. A selection of the best papers, as determined by a specialized jury, will be offered the opportunity to publish an extended version in the Journal of the Audio Engineering Society.

Papers: Submit a 4-6 page paper to be given as an oral presentation.

Talks: Submit a 1-2 page extended abstract to be given as an oral presentation.

Posters: Submit a 2-4 page paper to be presented at a poster session.

Demos: Submit a work to be presented at a hands-on demo session. Demo submissions should consist of a 1-2 page extended abstract including diagrams or images, and a complete list of technical requirements (including anything expected to be provided by the conference organizers).

Performances: Submit a performance making creative use of web-based audio applications. Performances can include elements such as audience device participation and collaboration, web-based interfaces, Web MIDI, WebSockets, and/or other imaginative approaches to web technology. Submissions must include a title, a 1-2 page description of the performance, links to audio/video/image documentation of the work, a complete list of technical requirements (including anything expected to be provided by conference organizers), and names and one-paragraph biographies of all performers.

Artworks: Submit a sonic web artwork or interactive application which makes significant use of web audio standards such as Web Audio API or Web MIDI in conjunction with other technologies such as HTML5 graphics, WebGL, and Virtual Reality frameworks. Works must be suitable for presentation on a computer kiosk with headphones. They will be featured at the conference venue throughout the conference and on the conference web site. Submissions must include a title, 1-2 page description of the work, a link to access the work, and names and one-paragraph biographies of the authors.

Tutorials: If you are interested in running a tutorial session at the conference, please contact the organizers directly.

Important Dates

March 26, 2019: Open call for submissions starts.

June 16, 2019: Submissions deadline.

September 2, 2019: Notification of acceptances and rejections.

September 15, 2019: Early-bird registration deadline.

October 6, 2019: Camera ready submission and presenter registration deadline.

December 4-6, 2019: The conference.

At least one author of each accepted submission must register for and attend the conference in order to present their work. A limited number of diversity tickets will be available.

Templates and Submission System

Templates and information about the submission system are available on the official conference website: https://www.ntnu.edu/wac2019

Best wishes,

The WAC 2019 Committee

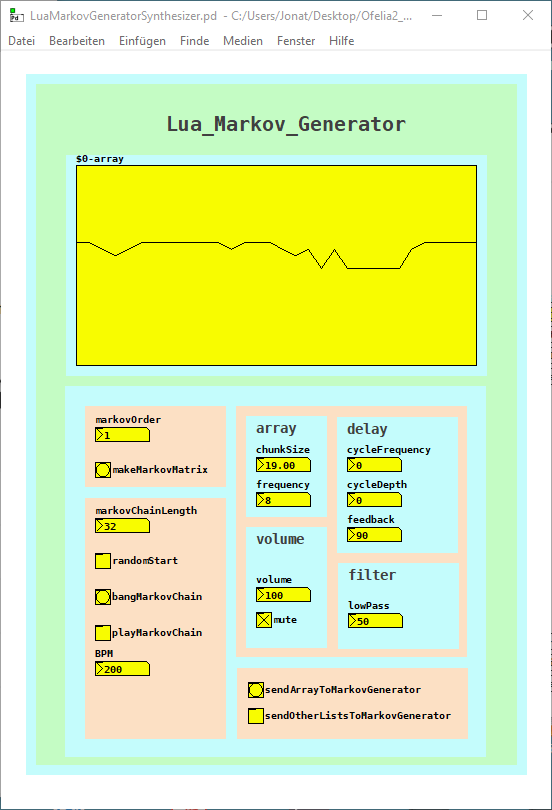

Lua / Ofelia Markov Generator Patch / Abstraction

I finished the Ofelia / Lua Markov Generator abstraction / patch.

The markov generator is part of two patches but can easily be used as an abstraction.

I want to use it for pattern variations of a sequencer for example.

It just needs a Pure Data list as input and outputs a markov chain of variable order and length.

Alternatively, draw into the array and submit it to the markov generator.
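The generator described above takes a list, learns which value follows each run of `order` values (wrapping around the end of the list, as the Lua code further down does by appending the first values to the end), and then walks those transitions with weighted random choices. A compact C sketch of that idea for integer lists; the size limit, the LCG random source and all names are assumptions, and instead of building a dictionary it rescans the list at every step, which is fine for short sequencer patterns.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_ORDER 16 /* assumed upper limit for the window size */

static unsigned long long rng_state = 1;

/* 64-bit LCG; any uniform random source would do here. */
static unsigned long long rng_next(void)
{
    rng_state = rng_state * 6364136223846793005ULL + 1442695040888963407ULL;
    return rng_state >> 33;
}

/* True if the `order` values starting at data[i] (wrapping around the
   end of the list) equal the current window. */
static int state_matches(const int *data, size_t len, size_t i,
                         const int *win, size_t order)
{
    for (size_t k = 0; k < order; k++)
        if (data[(i + k) % len] != win[k])
            return 0;
    return 1;
}

/* Write out_len generated values, starting from the state at data[start].
   Each occurrence of the current state in the input is one candidate, so
   a uniform pick among occurrences equals a pick weighted by counts. */
static size_t markov_generate(const int *data, size_t len, size_t order,
                              size_t start, int *out, size_t out_len)
{
    int win[MAX_ORDER];
    assert(len > 0 && order > 0 && order <= MAX_ORDER && start < len);
    for (size_t k = 0; k < order; k++)
        win[k] = data[(start + k) % len];
    for (size_t n = 0; n < out_len; n++) {
        size_t matches = 0;
        for (size_t i = 0; i < len; i++)
            matches += (size_t)state_matches(data, len, i, win, order);
        if (matches == 0)
            return n; /* guard only: wrap-around always leaves a match */
        size_t pick = (size_t)(rng_next() % matches);
        int next = 0;
        for (size_t i = 0; i < len; i++) {
            if (state_matches(data, len, i, win, order)) {
                if (pick == 0) {
                    next = data[(i + order) % len];
                    break;
                }
                pick--;
            }
        }
        out[n] = next;
        for (size_t k = 0; k + 1 < order; k++) /* slide the window */
            win[k] = win[k + 1];
        win[order - 1] = next;
    }
    return out_len;
}
```

With the input {1, 2, 3} and order 1, every state has exactly one successor, so the output is the deterministic cycle 2, 3, 1, 2, 3, 1; randomness only appears once the same state is followed by different values somewhere in the list.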

The first patch is an experiment trying to create interesting sounds with the markov algorithm.

In addition, I used the variable delay from the Pure Data help files:

LuaMarkovGeneratorSynthesizer.pd

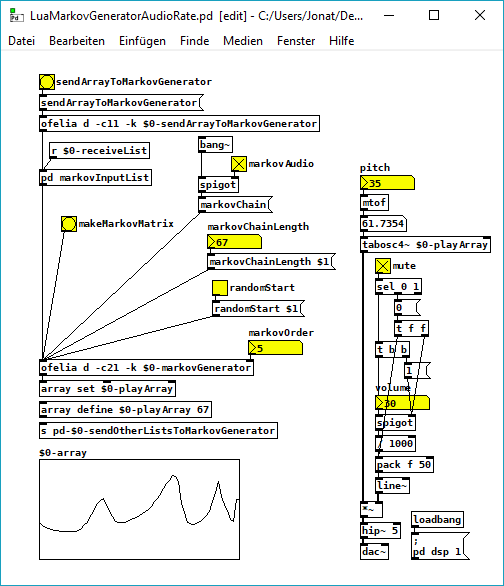

The second patch creates markov chains at audio rate; it is quite CPU-heavy but works up to the 10th markov order.

It is quite noisy, but I was curious how it would sound:

LuaMarkovGeneratorAudioRate.pd

And here is the Lua code.

The core of the code is adapted from this python code: https://eli.thegreenplace.net/2018/elegant-python-code-for-a-markov-chain-text-generator/

There are a few things I do not really understand yet, but it finally works without errors (it was not always easy):

```lua
-- LUA MARKOV GENERATOR;
function ofelia.list(fv);
;
  math.randomseed(os.time() - os.clock() * 1000);
;
  print("LUA MARKOV GENERATOR");
  local markovOrder = fv[1];
  print("Markov Order: ", math.floor(markovOrder));
;
  -- make dictionary (missing keys get a fresh value from the factory);
;
  local function defaultdict(default_value_factory);
    local t = {};
    local metatable = {};
    metatable.__index = function(t, key);
      if not rawget(t, key) then;
        rawset(t, key, default_value_factory(key));
      end;
      return rawget(t, key);
    end;
    return setmetatable(t, metatable);
  end;
;
  -- make markov matrix;
;
  local model = defaultdict(function() return {} end);
  local data = {};
  for i = 1, #ofelia.markovInputList do;
    data[i] = ofelia.markovInputList[i];
  end;
  print("Data Size: ", #ofelia.markovInputList);
  -- append the first markovOrder values so the chain can wrap around;
  for i = 1, markovOrder do;
    table.insert(data, data[i]);
  end;
  for i = 1, #data - markovOrder do;
    local state = table.concat({table.unpack(data, i, i + markovOrder - 1)}, "-");
    -- named nextValue so the built-in next() is not shadowed;
    local nextValue = data[i + markovOrder];
    model[state][nextValue] = (model[state][nextValue] or 0) + 1;
  end;
;
  -- make tables from dict;
;
  local keyTbl = {};
  local nexTbl = {};
  local prbTbl = {};
  for key, value in pairs(model) do;
    for k, v in pairs(value) do;
      table.insert(keyTbl, key);
      table.insert(nexTbl, k);
      table.insert(prbTbl, v);
    end;
  end;
;
  print("Key: ", table.unpack(keyTbl));
  print("Nex: ", table.unpack(nexTbl));
  print("Prb: ", table.unpack(prbTbl));
;
  print("Make a Markov Chain...");
;
  function ofelia.markovChain();
;
    -- make start key (first key or a random one);
;
    local startKey = 1;
    if ofelia.randomStart == 1 then;
      startKey = math.random(#keyTbl);
    end;
;
    local markovString = keyTbl[startKey];
    local out = {};
    for match in string.gmatch(markovString, "[^-]+") do;
      table.insert(out, match);
    end;
;
    -- make markov chain;
;
    for i = 1, ofelia.markovChainLength do;
;
      -- weighted random choices;
;
      local choices = {};
      local weights = {};
      for j = 1, #keyTbl do;
        if markovString == keyTbl[j] then;
          table.insert(choices, nexTbl[j]);
          table.insert(weights, prbTbl[j]);
        end;
      end;
;
      -- print ("choices:", table.unpack(choices));
      -- print ("weights:", table.unpack(weights));
;
      local totalWeight = 0;
      for _, weight in ipairs(weights) do;
        totalWeight = totalWeight + weight;
      end;
      -- local so it does not leak into the global namespace;
      local rand = math.random() * totalWeight;
      local choice = nil;
      for k, weight in ipairs(weights) do;
        if rand < weight then;
          choice = choices[k];
          break;
        else;
          rand = rand - weight;
        end;
      end;
;
      if math.type(choice) == "integer" then;
        choice = choice * (1.0);
      end;
;
      table.insert(out, choice);
      local lastStep = {table.unpack(out, #out - (markovOrder - 1), #out)};
      markovString = table.concat(lastStep, "-");
    end;
;
    -- drop the start key and return only the generated values;
    return {table.unpack(out, markovOrder + 1, #out)};
  end;
end;
```
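The sampling loop above collects the successors of the current state by scanning all of `keyTbl` on every step. Because `model` is already a Lua table keyed by state, each step could instead be a single hash lookup. Below is a minimal sketch of that variant for plain Lua 5.3 or later, not the ofelia patch itself: the function names `learn`, `pick` and `generate` are hypothetical, the trailing `;` used in the ofelia version is left out, and the chain always starts from the first `order` input values instead of an optional random key.

```lua
-- Hypothetical helpers (plain Lua 5.3+); not part of the ofelia patch.

-- learn(): count which value follows each state of `order` values,
-- wrapping around the end of the input like the ofelia version does.
local function learn(input, order)
  local data = {table.unpack(input)}
  for i = 1, order do data[#data + 1] = input[i] end
  local model = {}
  for i = 1, #data - order do
    local state = table.concat(data, "-", i, i + order - 1)
    local successors = model[state] or {}
    model[state] = successors
    local nextValue = data[i + order]
    successors[nextValue] = (successors[nextValue] or 0) + 1
  end
  return model
end

-- pick(): weighted random choice over a {value = count} table.
local function pick(successors)
  local total = 0
  for _, count in pairs(successors) do total = total + count end
  local r = math.random() * total
  local last
  for value, count in pairs(successors) do
    if r < count then return value end
    r = r - count
    last = value
  end
  return last -- guard against floating-point rounding
end

-- generate(): one table lookup per step instead of scanning every key.
local function generate(input, order, length)
  local model = learn(input, order)
  local window = {table.unpack(input, 1, order)}
  local out = {}
  for _ = 1, length do
    local value = pick(model[table.concat(window, "-")])
    out[#out + 1] = value
    table.remove(window, 1)
    window[#window + 1] = value
  end
  return out
end

print(table.concat(generate({1, 2, 3}, 1, 5), " ")) -- prints 2 3 1 2 3
```

With the input `{1, 2, 3}` and order 1 each state has exactly one successor, so the example output is deterministic. With real input, the counts stored in `model` act as the weights in the same way as `prbTbl` does in the patch.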

Lua Markov Generator

I built a Lua Markov generator, inspired by this Python code, with the idea of using it with Pure Data / ofelia: https://eli.thegreenplace.net/2018/elegant-python-code-for-a-markov-chain-text-generator/

The code finally works fine in the Eclipse Lua IDE and in this IDE: https://studio.zerobrane.com/, but somehow not yet in Pure Data / ofelia.

Here is the (not yet working) patch: ofelia_markov.pd

And here is the Lua code: markov_pd.lua

```lua
math.randomseed(os.time()- os.clock() * 1000);
-- make dictionary;
function defaultdict(default_value_factory);
  local t = {};
  local metatable = {};
  metatable.__index = function(t, key);
    if not rawget(t, key) then;
      rawset(t, key, default_value_factory(key));
    end;
    return rawget(t, key);
  end;
  return setmetatable(t, metatable);
end;
;
;
-- make markov matrix;
print('Learning model...')
;
STATE_LEN = 3;
print ("markov order: " , STATE_LEN)
model = defaultdict(function() return {} end)
data = "00001111010100700111101010000005000700111111177111111";
datasize = #data;
print("data: ", data);
print("datasize: ", #data);
data = data .. data:sub(1, STATE_LEN);
print("altered data: ", data);
print("altered datasize: ", #data);
for i = 1, (#data - STATE_LEN) do;
  state = data:sub(i, i + STATE_LEN-1);
  -- print("state: ", state)
  local next = data:sub(i + STATE_LEN, i + STATE_LEN);
  -- print("next: ", next);
  model[state][next] = (model[state][next] or 0)+1;
end;
;
;
-- make markov chain;
print('Sampling...');
;
local keyTbl = {};
local nexTbl = {};
local prbTbl = {};
for key, value in pairs(model) do;
  for k, v in pairs(value) do;
    table.insert(keyTbl, key);
    table.insert(nexTbl, k);
    table.insert(prbTbl, v);
  end;
end;
print ("keyTbl: ", table.unpack(keyTbl));
print ("nexTbl: ", table.unpack(nexTbl));
print ("prbTbl: ", table.unpack(prbTbl));
;
;
-- make random key;
local randomKey = keyTbl[math.random(#keyTbl)];
state = randomKey;
print("RandomKey: ", randomKey);
;
-- make table from random key;
local str = state;
local stateTable = {};
for i = 1, #str do;
  stateTable[i] = str:sub(i, i);
end;
;
out = stateTable;
print ("random key as table: ", table.unpack(out));
;
-- make markov chain;
for i = 1, datasize do;
;
  -- weighted random choices;
  local choices = {};
  local weights = {};
  for j = 1, #keyTbl do;
    if state == keyTbl[j] then;
      table.insert(choices, nexTbl[j]);
      table.insert(weights, prbTbl[j]);
    end;
  end;
  -- print ("choices:",table.unpack(choices));
  -- print ("weights:",table.unpack(weights));
;
  local totalWeight = 0;
  for _, weight in pairs(weights) do;
    totalWeight = totalWeight + weight;
  end;
  rand = math.random() * totalWeight;
  local choice = nil;
  for i, weight in pairs(weights) do;
    if rand < weight then;
      choice = choices[i];
      choice = choice:sub(1,1);
      break;
    else;
      rand = rand - weight;
    end;
  end;
;
  table.insert(out, choice);
  state = string.sub(state, 2, #state) .. out[#out];
  -- print("choice", choice);
  -- print ("state", state);
end;
;
print("markov chain: ", table.concat(out));
```

Why does Pure Data / ofelia seem to interpret the nexTbl values as functions when they are strings?

This is part of what the Pure Data console prints:

```
nexTbl: function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30 function: 0000000003B9BF30
ofelia: [string "package.preload['#d41b70'] = nil package.load..."]:93: attempt to index a function value (local 'choice')
```

And this is the output from the Lua IDE:

```
Program 'lua.exe' started in 'C:\Users\Jonat\Downloads\ZeroBraneStudio\myprograms' (pid: 220).
Learning model...
markov order: 1
data: 00001111010100700111101010000005000700111111177111111
datasize: 53
altered data: 000011110101007001111010100000050007001111111771111110
altered datasize: 54
Sampling...
keyTbl: 5 7 7 7 1 1 1 0 0 0 0
nexTbl: 0 0 1 7 7 1 0 5 7 1 0
prbTbl: 1 2 1 1 1 17 7 1 2 7 13
RandomKey: 1
random key as table: 1
markov chain: 111111000077701111100070001100000001100017011171111117
Program completed in 0.06 seconds (pid: 220).
```
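A possible explanation, offered as a hypothesis rather than a confirmed diagnosis: the only line in the learning loop that does not end in `;` is the comment `-- print("state: ", state)`. If ofelia starts a new Lua line only at a `;` (which would explain why the working version earlier on this page ends every line, comments included, with one), that comment runs on into `local next = data:sub(...)` and swallows it. `model[state][next]` then uses Lua's built-in `next` function as the key, which fits both symptoms: every `nexTbl` entry prints as the same `function: 0000000003B9BF30`, and `choice:sub(1,1)` fails with "attempt to index a function value". The sketch below reproduces the effect in plain Lua 5.3+ by compiling the joined and the separated lines with `load`; the strings are a hand-built reconstruction, not what ofelia literally generates.

```lua
-- Assumption: inside a Pd box ofelia starts a new Lua line only at ";"
-- so a comment without ";" runs on into the next statement.
-- Both strings rebuild the affected lines of markov_pd.lua that way.
local joinedLines = '-- print("state: ", state) local next = data:sub(2, 2);'
local separateLines = '-- print("state: ", state);\nlocal next = data:sub(2, 2);'

-- Compile a tiny chunk around the given lines and return its model table.
local function buildModel(lines)
  local chunk = 'local data = "01";\n'
    .. 'local model = {};\n'
    .. lines .. '\n'
    .. 'model[next] = 1;\n'
    .. 'return model;'
  return assert(load(chunk))()
end

-- Return the single key stored in a table.
local function onlyKey(t)
  for k in pairs(t) do
    return k
  end
end

local brokenKey = onlyKey(buildModel(joinedLines))
local fixedKey = onlyKey(buildModel(separateLines))

-- The commented-out declaration leaves `next` bound to the built-in function.
print(type(brokenKey), brokenKey == next) -- prints "function" and "true"
print(type(fixedKey), fixedKey)           -- prints "string" and "1"
```

If this is the cause, ending that comment with `;` (or deleting it) should be enough. Renaming the variable to something like `nextChar` would also help, since an accidentally commented-out declaration then fails loudly with an error about a nil table index instead of silently storing a function.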