Rich Synthesis Tutorial series

We've put up a series of beyond-the-basics synthesis tutorials on our YouTube page.

So far we have:

- beyond sine tones

- envelopes

- polyphony with [clone]

- acid filters

- note sequences

- neat FM

- synth strings

- plucked strings

- wavetable oscillators

The patches and some other bits are up on our site:

https://reallyusefulplugins.tumblr.com/richsynthesis

Feedback/requests/suggestions welcome!

Web Audio Conference 2019 - 2nd Call for Submissions & Keynotes

Apologies for cross-postings

Fifth Annual Web Audio Conference - 2nd Call for Submissions

The fifth Web Audio Conference (WAC) will be held 4-6 December, 2019 at the Norwegian University of Science and Technology (NTNU) in Trondheim, Norway. WAC is an international conference dedicated to web audio technologies and applications. The conference addresses academic research, artistic research, development, design, evaluation and standards concerned with emerging audio-related web technologies such as Web Audio API, WebRTC, WebSockets and JavaScript. The conference welcomes web developers, music technologists, computer musicians, application designers, industry engineers, R&D scientists, academic researchers, artists, students and people interested in the fields of web development, music technology, computer music, audio applications and web standards. The previous Web Audio Conferences were held in 2015 at IRCAM and Mozilla in Paris, in 2016 at Georgia Tech in Atlanta, in 2017 at the Centre for Digital Music, Queen Mary University of London in London, and in 2018 at TU Berlin in Berlin.

The internet has become much more than a simple storage and delivery network for audio files, as modern web browsers on desktop and mobile devices bring new user experiences and interaction opportunities. New and emerging web technologies and standards now allow applications to create and manipulate sound in real-time at near-native speeds, enabling the creation of a new generation of web-based applications that mimic the capabilities of desktop software while leveraging unique opportunities afforded by the web in areas such as social collaboration, user experience, cloud computing, and portability. The Web Audio Conference focuses on innovative work by artists, researchers, students, and engineers in industry and academia, highlighting new standards, tools, APIs, and practices as well as innovative web audio applications for musical performance, education, research, collaboration, and production, with an emphasis on bringing more diversity into audio.

Keynote Speakers

We are pleased to announce our two keynote speakers: Rebekah Wilson (independent researcher, technologist, composer, co-founder and technology director for Chicago’s Source Elements) and Norbert Schnell (professor of Music Design at the Digital Media Faculty at the Furtwangen University).

More info available at: https://www.ntnu.edu/wac2019/keynotes

Theme and Topics

The theme for the fifth edition of the Web Audio Conference is Diversity in Web Audio. We particularly encourage submissions focusing on inclusive computing, cultural computing, postcolonial computing, and collaborative and participatory interfaces across the web in the context of generation, production, distribution, consumption and delivery of audio material that especially promote diversity and inclusion.

Further areas of interest include:

- Web Audio API, Web MIDI, WebRTC and other existing or emerging web standards for audio and music.

- Development tools, practices, and strategies of web audio applications.

- Innovative audio-based web applications.

- Web-based music composition, production, delivery, and experience.

- Client-side audio engines and audio processing/rendering (real-time or non real-time).

- Cloud/HPC for music production and live performances.

- Audio data and metadata formats and network delivery.

- Server-side audio processing and client access.

- Frameworks for audio synthesis, processing, and transformation.

- Web-based audio visualization and/or sonification.

- Multimedia integration.

- Web-based live coding and collaborative environments for audio and music generation.

- Web standards and use of standards within audio-based web projects.

- Hardware and tangible interfaces and human-computer interaction in web applications.

- Codecs and standards for remote audio transmission.

- Any other innovative work related to web audio that does not fall into the above categories.

Submission Tracks

We welcome submissions in the following tracks: papers, talks, posters, demos, performances, and artworks. All submissions will be single-blind peer reviewed. The conference proceedings, which will include both papers (for papers and posters) and extended abstracts (for talks, demos, performances, and artworks), will be published open-access online with Creative Commons attribution, and with an ISSN. A selection of the best papers, as determined by a specialized jury, will be offered the opportunity to publish an extended version in the Journal of the Audio Engineering Society.

Papers: Submit a 4-6 page paper to be given as an oral presentation.

Talks: Submit a 1-2 page extended abstract to be given as an oral presentation.

Posters: Submit a 2-4 page paper to be presented at a poster session.

Demos: Submit a work to be presented at a hands-on demo session. Demo submissions should consist of a 1-2 page extended abstract including diagrams or images, and a complete list of technical requirements (including anything expected to be provided by the conference organizers).

Performances: Submit a performance making creative use of web-based audio applications. Performances can include elements such as audience device participation and collaboration, web-based interfaces, Web MIDI, WebSockets, and/or other imaginative approaches to web technology. Submissions must include a title, a 1-2 page description of the performance, links to audio/video/image documentation of the work, a complete list of technical requirements (including anything expected to be provided by conference organizers), and names and one-paragraph biographies of all performers.

Artworks: Submit a sonic web artwork or interactive application which makes significant use of web audio standards such as Web Audio API or Web MIDI in conjunction with other technologies such as HTML5 graphics, WebGL, and Virtual Reality frameworks. Works must be suitable for presentation on a computer kiosk with headphones. They will be featured at the conference venue throughout the conference and on the conference web site. Submissions must include a title, 1-2 page description of the work, a link to access the work, and names and one-paragraph biographies of the authors.

Tutorials: If you are interested in running a tutorial session at the conference, please contact the organizers directly.

Important Dates

March 26, 2019: Open call for submissions starts.

June 16, 2019: Submissions deadline.

September 2, 2019: Notification of acceptances and rejections.

September 15, 2019: Early-bird registration deadline.

October 6, 2019: Camera ready submission and presenter registration deadline.

December 4-6, 2019: The conference.

At least one author of each accepted submission must register for and attend the conference in order to present their work. A limited number of diversity tickets will be available.

Templates and Submission System

Templates and information about the submission system are available on the official conference website: https://www.ntnu.edu/wac2019

Best wishes,

The WAC 2019 Committee

[gme~] / [gmes~] - Game Music Emu

Allows you to play various game music formats, including:

AY - ZX Spectrum/Amstrad CPC

GBS - Nintendo Game Boy

GYM - Sega Genesis/Mega Drive

HES - NEC TurboGrafx-16/PC Engine

KSS - MSX Home Computer/other Z80 systems (doesn't support FM sound)

NSF/NSFE - Nintendo NES/Famicom (with VRC 6, Namco 106, and FME-7 sound)

SAP - Atari systems using POKEY sound chip

SPC - Super Nintendo/Super Famicom

VGM/VGZ - Sega Master System/Mark III, Sega Genesis/Mega Drive, BBC Micro

The externals use the game-music-emu library, which can be found here: https://bitbucket.org/mpyne/game-music-emu/wiki/Home

[gme~] has 2 outlets for left and right audio channels, while [gmes~] is a multi-channel variant that has 16 outlets for 8 voices, times 2 for stereo.

[gmes~] only works for certain emulator types that have implemented a special class called Classic_Emu. These types include AY, GBS, HES, KSS, NSF, SAP, and sometimes VGM. You can try loading other formats into [gmes~] but most likely all you'll get is a very sped-up version of the song and the voices will not be separated into their individual channels. Under Linux, [gmes~] doesn't appear to work even for those file types.

Luckily, there's a workaround which involves creating multiple instances of [gme~] and dedicating each one to a specific voice/channel. I've included an example of how that works in the zip file.

Methods

- [ info ( - Posts game and song info, and the track number in the case of multi-track formats.

  - This currently does not include .rsn files, though I plan to make that possible in the future. Since .rsn is essentially a .rar file, you'll need to first extract the .spc's and open them individually.

- [ path ( - Posts the file's full path.

- [ read $1 ( - Reads the file at the given path.

  - To get gme~ to play music, start by reading in a file, then send either a bang or a number indicating a specific track.

- [ goto $1 ( - Seeks to a time in the track, in milliseconds.

  - Still very buggy. It works well for some formats and not so well for others. My guess is it has something to do with emulators that implement Classic_Emu.

- [ tempo $1 ( - Sets the tempo.

  - 0.5 is half speed, while 2 is double. It caps at 4, though I might eventually remove or increase the cap if it's safe to do so.

- [ track $1 ( - Sets the track without playing it.

  - Sending a float to gme~ will cause that track number to start playing if a file has been loaded.

- [ mute $1 ... ( - Mutes the channels specified.

  - Can be either one value or a list of values.

- [ solo $1 ... ( - Mutes all channels except the ones specified.

  - It toggles between solo and unmute-all if it's the same channel(s) being solo'd.

- [ mask ($1) ( - Sets the muting mask directly, or posts its current state if no argument is given.

  - Muting actually works by reading an integer and interpreting each bit as an on/off switch for a channel.

  - -1 mutes all channels, 0 unmutes all channels, and 5 mutes the 1st and 3rd channels, since 5 in binary is 0101.

- [ stop ( - Stops playback.

  - Start playback again by sending a bang or "play" message, or a float value.

- [ play | bang ( - Starts playback or pauses/unpauses when playback has already started, or restarts playback if it has been stopped.

  - "play" is just an alias for bang in the event that it needs to be interpreted as its own unique message.
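To make the muting mask concrete, here is a minimal Python sketch of the bit arithmetic described above. It is not the external's actual code: the function names are made up for illustration, channels are assumed to be numbered from 1 (matching the "1st and 3rd channels" example), and the default of 8 voices is an assumption borrowed from the [gmes~] outlet count.

```python
def channels_to_mask(channels):
    """Return an integer whose set bits mark the given 1-based channels as muted."""
    mask = 0
    for ch in channels:
        if ch < 1:
            raise ValueError("channels are numbered from 1")
        mask |= 1 << (ch - 1)  # channel 1 is bit 0, channel 2 is bit 1, ...
    return mask


def mask_to_channels(mask, voice_count=8):
    """List the 1-based channels a mask mutes, inspecting voice_count bits (assumed 8)."""
    return [ch for ch in range(1, voice_count + 1) if mask & (1 << (ch - 1))]


def solo_mask(channels):
    """Return a mask that mutes every channel except the given ones."""
    return ~channels_to_mask(channels)


print(channels_to_mask([1, 3]))        # 5, i.e. binary 0101
print(mask_to_channels(-1))            # every channel is muted
print(mask_to_channels(solo_mask([2])))  # everything except channel 2
```

A value produced this way could be sent with the mask message, for example [ mask 5 ( to mute channels 1 and 3.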

Creation args

Both externals optionally take a list of numbers, indicating the channels that should be played, while the rest get muted. If there are no creation arguments, all channels will play normally.

Note: included in the zip are libgme.* files. These files, depending on which OS you're running, might need to accompany the externals. In the case of Windows, libgme.dll almost definitely needs to accompany gme(s)~.dll

Also, gme can read m3u's, but not directly. When you read a file like .nsf, gme will look for a file that has the exact same name but with the extension m3u, then use that file to determine track names and in which order the tracks are to be played.
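The playlist lookup rule above can be sketched in a couple of lines of Python. This only illustrates the naming convention (same name, m3u extension); the function name and the example file name are hypothetical, and how gme treats upper-case extensions is not covered here.

```python
from pathlib import Path


def companion_m3u(music_file):
    """Return the path that shares music_file's name but ends in .m3u."""
    return Path(music_file).with_suffix(".m3u")


# Hypothetical example file: the playlist is expected next to the music file.
print(companion_m3u("music/example.nsf"))
```

So if you want track names for an .nsf, the m3u has to sit in the same folder and match the file name exactly apart from the extension.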

configure pd to listen to my midi controller from the command line (rpi)

Some more details, in case anyone else is able to jump in on this... I'm running PD 0.47.1 headless on my Raspberry Pi, on the latest Raspbian Stretch Lite.

similar to @francis666, if I run sudo pd -nogui -listdev I get the following list:

audio input devices:

- bcm2835 ALSA (hardware)

- bcm2835 ALSA (plug-in)

- Teensy MIDI (hardware)

- Teensy MIDI (plug-in)

audio output devices:

- bcm2835 ALSA (hardware)

- bcm2835 ALSA (plug-in)

- Teensy MIDI (hardware)

- Teensy MIDI (plug-in)

API number 1

no midi input devices found

no midi output devices found

I'm hoping to use Teensy MIDI as a USB midi device. If I run aconnect I can see the MIDI input coming in so it's definitely working, it's just not getting recognised by PD. I've tried a number of different startup flag combinations, but there's something fishy about the "no midi input devices found" message that I'm getting there. What's going on? Any ideas? I've definitely done this a number of times in the past, just using the command sudo pd -nogui -midiindev 1 midwest.pd but that now doesn't seem to work.

The main recent change has been updating to Stretch and PD .47 from .46, is there any way that could be causing the problem?

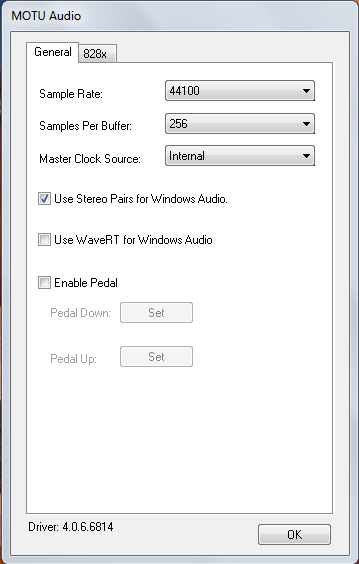

Audio Settings for multichannel with MOTU 828 mk3

Hi Matthieu,

I see your post is a little bit old but I'm experiencing the exact same problem now with my setup.

I'm using a Windows 7 machine with a MOTU 828x sound card connected via USB to the PC and Pd 0.48.1 vanilla.

Here's what I've done:

- I've checked the "Use Stereo Pairs for Windows Audio" inside the "MOTU Audio Console";

- opened PD and selected "standard MMIO" as driver from the "Media" menu;

- now here's the list of outputs as it appears from the drop-down menu of "Media/Audio Settings.../Output device":

- MOTU Analog 3-4

- Loudspeakers (devide High ...

- MOTU Main-Out 1-2

- MOTU ADAT optical A 3-4

- Digital Output MOTU Audio

- MOTU ADAT optical A 1-2

- MOTU Analog 1-2

- MOTU ADAT optical A 7-8

- MOTU ADAT optical B 3-4

- MOTU Analog 7-8

- MOTU Analog 5-6

- Digital Output

- MOTU SPDIF 1-2

- MOTU ADAT optical B 1-2

- MOTU ADAT optical A 5-6

- MOTU Phones 1-2

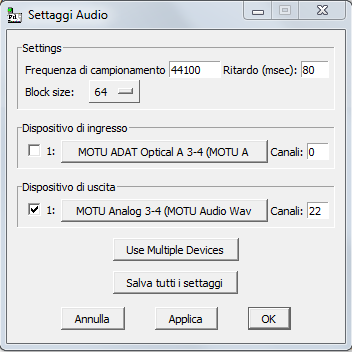

As you can see, this list is pretty messed up: logically consecutive output channels are not listed in order. I would like to have 8 analog outputs from my MOTU, so I selected the first item on the list (MOTU Analog 3-4) and then specified a total of 22 channels.

I'm obliged to set 22 as the total number of output channels because MOTU Analog 5-6 is the last analog item in my list. Because each item in the list represents a pair of channels, this item (the 11th) corresponds to logical channels 21 and 22.

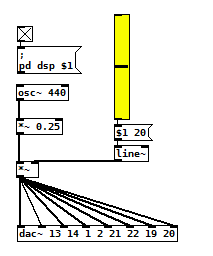

- Then I created the dac object this way:

[dac~ 13 14 1 2 21 22 19 20]

Here's an image

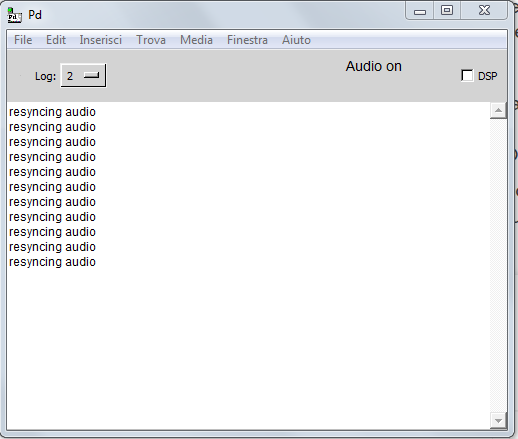
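The channel numbers in that [dac~] follow from the position of each stereo pair in the device list: the entry at position i (counting from 1) covers logical channels 2i-1 and 2i. A small Python sketch of that arithmetic, using the list exactly as posted above (the helper name is made up for illustration, and this does not talk to Pd in any way):

```python
def pair_channels(device_list, wanted):
    """Return the logical channel numbers for each wanted stereo pair, in order.

    The entry at 1-based position i in device_list covers channels 2*i - 1 and 2*i.
    """
    channels = []
    for name in wanted:
        position = device_list.index(name) + 1
        channels.extend([2 * position - 1, 2 * position])
    return channels


# Output device list in the order shown in the drop-down menu.
devices = [
    "MOTU Analog 3-4",
    "Loudspeakers (devide High ...",
    "MOTU Main-Out 1-2",
    "MOTU ADAT optical A 3-4",
    "Digital Output MOTU Audio",
    "MOTU ADAT optical A 1-2",
    "MOTU Analog 1-2",
    "MOTU ADAT optical A 7-8",
    "MOTU ADAT optical B 3-4",
    "MOTU Analog 7-8",
    "MOTU Analog 5-6",
    "Digital Output",
    "MOTU SPDIF 1-2",
    "MOTU ADAT optical B 1-2",
    "MOTU ADAT optical A 5-6",
    "MOTU Phones 1-2",
]

wanted = ["MOTU Analog 1-2", "MOTU Analog 3-4", "MOTU Analog 5-6", "MOTU Analog 7-8"]
dac_args = pair_channels(devices, wanted)
print("[dac~ " + " ".join(str(c) for c in dac_args) + "]")
# prints: [dac~ 13 14 1 2 21 22 19 20]
print("channels needed:", max(dac_args))
# prints: channels needed: 22
```

Since the list order changes between restarts, the same calculation would have to be redone against the new order each time.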

This way I'm able to hear sound on all analog outputs of the MOTU, even though I'm experiencing various 'clicks' and a series of "resyncing audio" messages inside the PD console...

I confess, this method is the only way I'm able to make this setup work, but it seems pretty messy and not intuitive at all.

What seems even worse is that the analog audio outputs inside the device list seem to change their order at each computer restart, so every time I have to start again from scratch.

- Is there some easier solution to this problem?

- Maybe a preference file I can create for PD to load at each startup containing all these settings?

- Or maybe there is a way to programmatically select the correct "analog outputs" from the device list in my patch (even though string parsing doesn't seem so easy in PD to me)?

- Would launching PD from console, maybe from an ad-hoc script, solve the problem?

Thank you so much for your support

M

ofelia v1.0.6 released

Hi, ofelia v1.0.6 is now available.

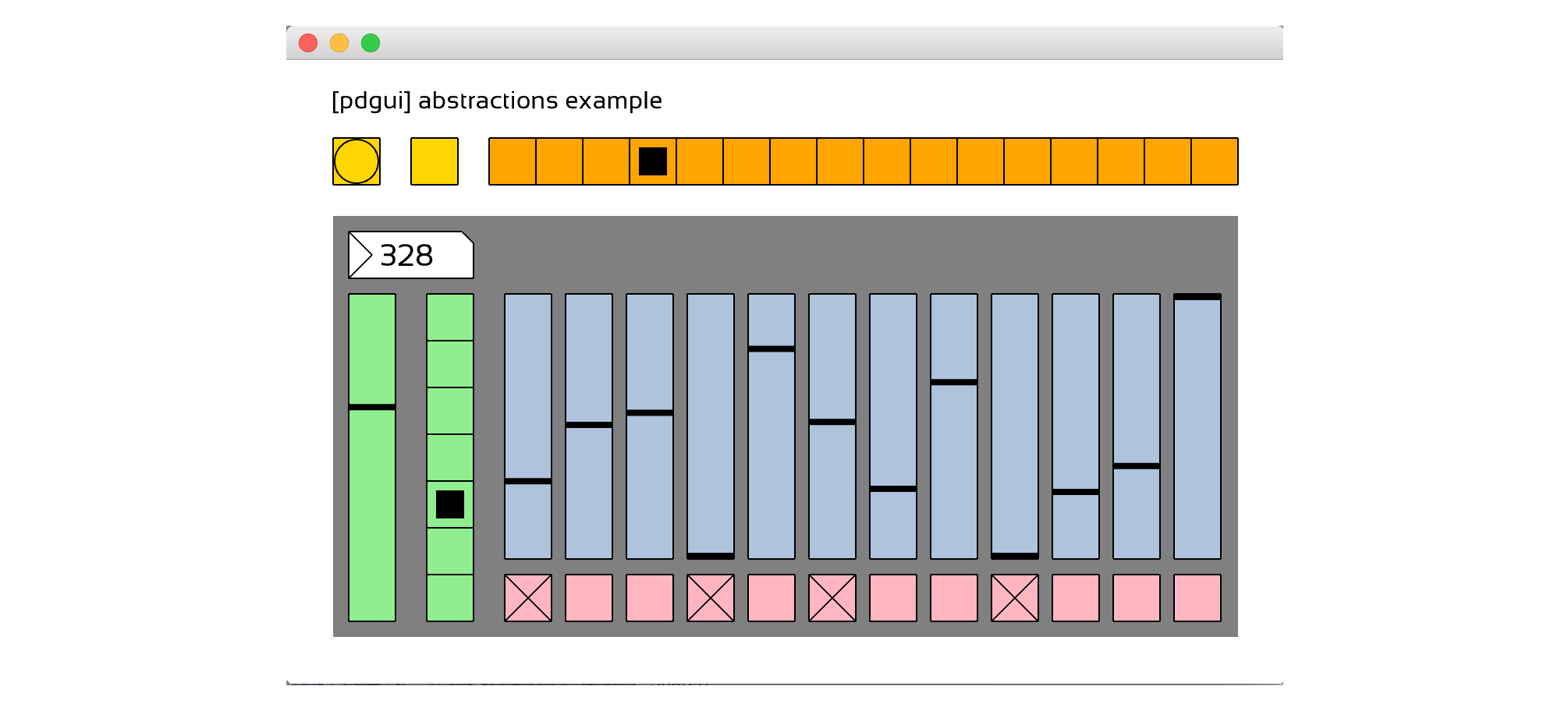

This version includes [pdgui] abstractions which emulate pd's built-in GUI.

Please try out "ofelia/examples/gui/pdguiExample.pd".

More GUI abstractions will be added in the near future.

You can also customize the look/behavior of the GUI if you know the basics of ofelia.

Changes:

- [ofCreateFbo] auto MSAA scaling is disabled

- fixed bug for mesh editor and getter objects

- [ofReceive], [ofValue] can change name dynamically

- float inlet is removed from [ofGetCanvasName], [ofGetDollarZero], [ofGetDollarArgs], [ofGetPatchDirectory] as it's problematic when used in cloned abstraction

- [ofGetPos], [ofGetScale] are renamed to [ofGetWindowPos], [ofGetWindowScale]

- [ofGetTranslate], [ofGetRotate], [ofGetScale] are added

- [pdgui] abstractions are added to the "examples/gui" directory

- [ofMap] has 5th argument which enables/disables clamping

- [ofGetElapsedTime], [ofGetLastFrameTime] return time in seconds

- [ofGetElapsedTimeMillis], [ofGetLastFrameTimeMillis] are added
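For anyone unsure what the clamping option on a range-mapping object means in practice, here is a rough Python sketch of linear range mapping with optional clamping. It is a generic illustration, not ofelia's implementation; the function name, argument order and the handling of an empty input range are all assumptions.

```python
def map_range(value, in_min, in_max, out_min, out_max, clamp=False):
    """Linearly map value from [in_min, in_max] to [out_min, out_max]."""
    if in_min == in_max:
        return out_min  # assumed fallback to avoid dividing by zero
    out = (value - in_min) / (in_max - in_min) * (out_max - out_min) + out_min
    if clamp:
        # Keep the result inside the output range, whichever way round it is.
        low, high = min(out_min, out_max), max(out_min, out_max)
        out = max(low, min(high, out))
    return out


print(map_range(15, 0, 10, 0, 100))              # 150.0, overshoots the range
print(map_range(15, 0, 10, 0, 100, clamp=True))  # 100, held at the upper bound
```

Without clamping, inputs outside the input range simply extrapolate; with clamping they stick to the nearest end of the output range.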

Upcoming features:

- More GUI abstractions

- GLSL shader loader

- Video player and grabber

More info about ofelia: https://github.com/cuinjune/ofxOfelia

Cheers

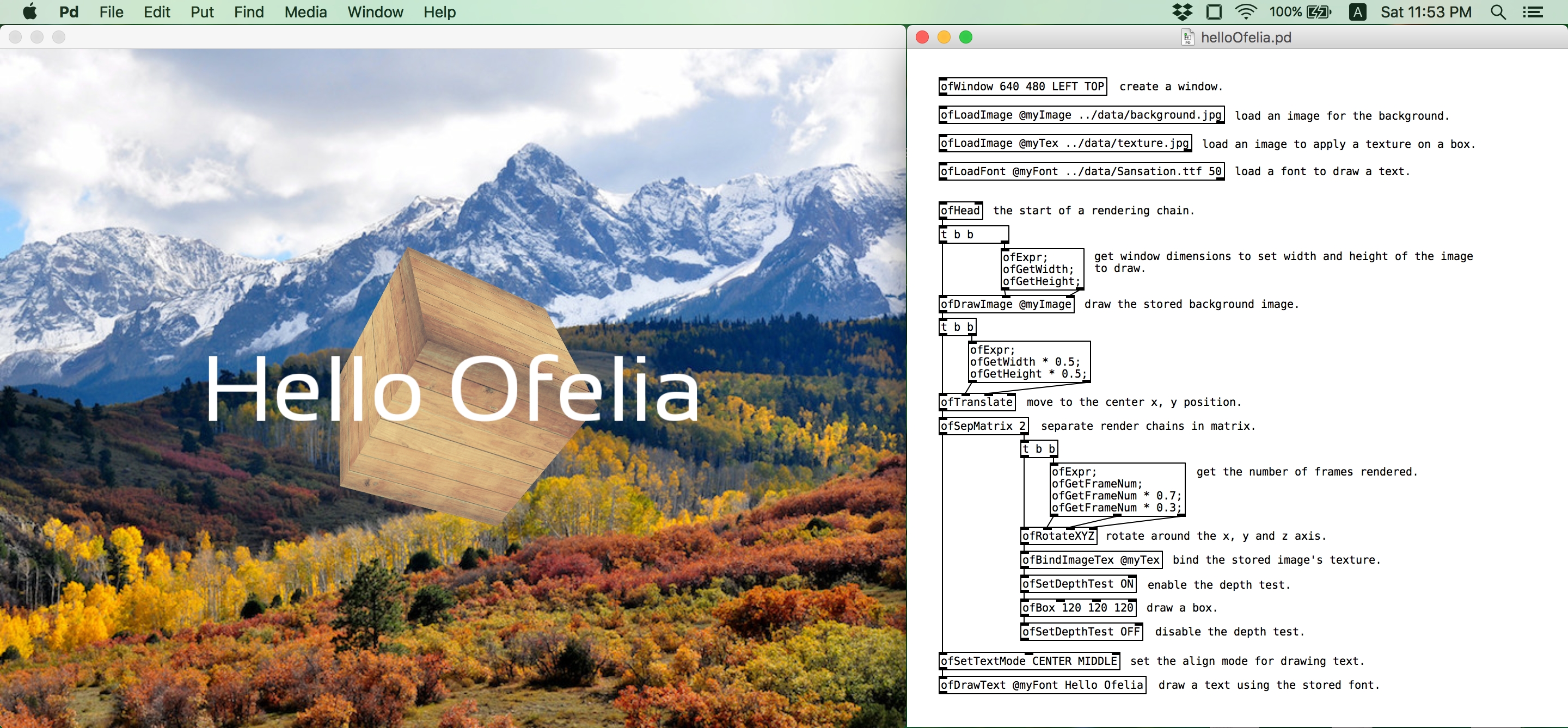

ofelia : Pd external library written with openFrameworks

Hey guys. I'm happy to announce my first Pd external library, ofelia. https://github.com/cuinjune/ofxOfelia

ofelia is a Pure Data(aka Pd) external library written with openFrameworks for creating cross-platform multimedia applications.

The library consists of over 400 objects covering most features of openFrameworks core API, designed to ease the development of multimedia applications such as visual arts, musical apps and interactive games.

And thanks to the real-time nature of Pure Data, one can make changes and see the result immediately without having to compile.

The library includes the following features:

- interactive output window

- various getters and event listeners

- 2d/3d shapes drawing

- image and font loading

- camera, lighting, material

- framebuffer object

- various data types (vec3f, color..)

- various utilities to speed up development (new expr, counter..)

- bandlimited oscillators and resonant filters

The library is currently available to be used under macOS, Linux(64bit) and Windows. It will also be available on Raspberry Pi soon.

Furthermore, the patch made with ofelia and Pd vanilla objects can easily be converted to a standalone application.

You can share the application with a wide range of audiences including non-Pd users and mobile device users.

Installation

- Make sure you have Pd installed on your desktop.

- Start Pd and go to Help->Find externals, then search for ofelia.

- Select the proper version of ofelia for your system to download and install.

Explore

- Try the example patches inside the ofelia/examples directory.

- Open ofelia/help-intro.pd to see the list of built-in objects in ofelia.

- Open the help files to learn more about each object.

- Create something cool and share it with other people.

I hope you like it. 🙂

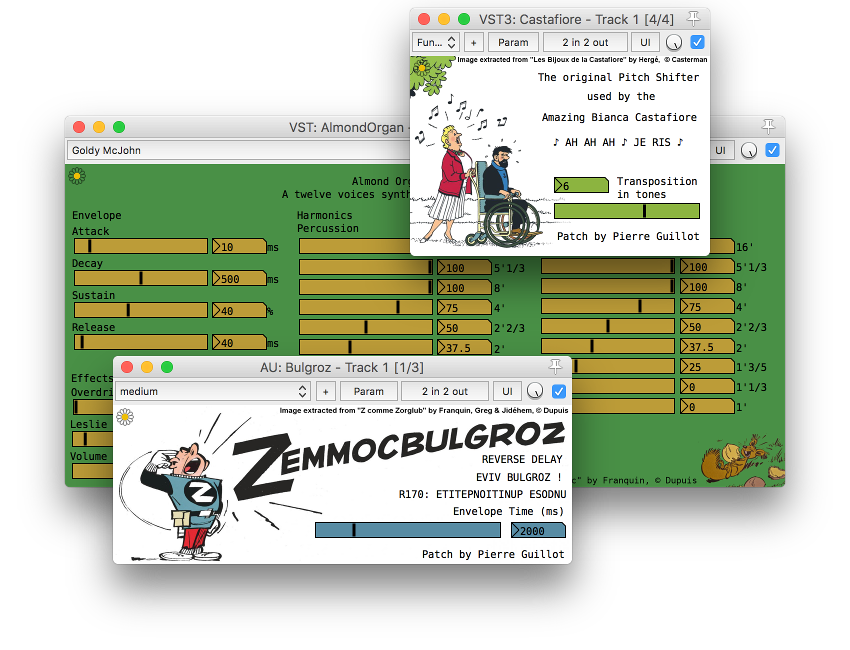

Camomile v1.0.1 - An audio plugin with Pure Data embedded

The brand new Camomile release (1.0.1) is available!

Camomile is a plugin with Pure Data embedded that lets you load and control patches inside a digital audio workstation. The plugin is available in the VST2, VST3 and Audio Unit formats for Linux, Windows and macOS. Downloads and information are on the website: https://github.com/pierreguillot/camomile/wiki.

Please read the documentation carefully. Feedback is more than welcome!

For feature requests and bug reports, please use the issue section on the GitHub repository if you can:

https://github.com/pierreguillot/camomile/issues.

An overview of the main changes:

v1.0.0

- Use libpd instead of my personal wrapper.

- Use TLS approach of Pd to manage thread concurrency issues.

- Use a text file to define the properties of the plugins.

- Generate plugins with the patches included.

- Separate the GUI and the parameters' definitions.

v1.0.1

- Fix thread concurrency issue that occurred when selecting a program (#77).

- Fix stack overflow issue due to concurrent access to the Pd's stack counter (#69).

- Update documentation for VST2/VST3/AU generation on MacOS to display the name of the plugins in Ableton (#75).

- Improve the whole documentation (#72) and start "How to Create Patches" (#73).

- Add more warning when there are extra arguments in parameters' methods.

- Add support for "openpanel" and "savepanel" methods.

- Update examples Bulgroz, AlmondOrgan, Castafiore, MiniMock.

- Start/Add support for patch description in the text file (#74).

- Start/Add support for patch credits in the text file (#74).

Further information on the previous versions and the changes on this topic.

Foot Pedal Behaviours Abstraction

Thanks for your thoughts...great to get feedback from your very much wider perspective.

RE Bug...bit of a background

It was as you said...but more the point that pd was trying to take exclusive control of the audio i/o and seemed to create a collision...as soon as I unticked the io...it all worked...thanks again.

Your patch seems to work great...good to see a clean up and understand a little deeper.

The help file for input_event still opens so I think the dll is kaput...sadly as there is no other way to undo via midi in Live. Not sure what to do there. Could pd execute an autoit script or dll? That way you could use a direct send to window rather than needing focus?

I'm obviously trying to set up a performance rig like a hardware box, whereby I basically plug in, turn on and play (I have the performance DAW set up on a dedicated Surface Pro 3 that doesn't do anything else).

I use the setup in a couple of modes:

A. Midi Guitarist

B. Percussionist/atmospheres etc

with Live as the centre hub.

USB Hardware

- Floor board is always present (looking for some way of installing better switches on the rig kontrol 3 if anyone has any pointers or maybe arduino/pd/bluetooth would be amazing)

- UR44 IO always present

- Fishman Triple Play always present

- Optional Axiom Controller Keyboard or Percussion controller eg KMI Boppad

In boot sequence (using vbs/autoit script to facilitate auto start up);

- loopMidi - To setup internal patching

- Midiox - To take care of different usb hardware being plugged in via vbs as I have had a lot of trouble trying to do this in pd and eventually gave up...although it would be a lot simpler. Can interrogate usb devices plugged in etc

- Pd patch

- Ableton Live

So if there are any resources really detailing the auto connection of midi io in Pd, that would be brilliant...would like to remove the need for midiox.

The idea of using an Arduino to hardwire this into a new foot controller sounds amazing, but I just don't have the time to learn more atm... if anyone out there is interested, I'd be willing to donate towards it.

Purr Data 2.3.1

Purr Data 2.3.1 is now available:

https://github.com/jonwwilkes/purr-data/releases/tag/2.3.1

Bug fixes in 2.3.1:

- fixed some display bugs in GUI

- fixed display bug with preset_hub

- fixed undefined behavior on x64 systems with the dollar arg parser

- strengthen the testing system

New in 2.3.0:

- added first draft of an external test suite to make sure a majority of external classes can load and instantiate correctly. Previously, the CI runners could register a "successful" build even if build errors kept one or more libraries from building correctly. Now there is a minimum number of objects that must instantiate in the tests or the build will fail. We can build on this to guarantee an exact number of creators for each library, making it much easier (and less risky) to improve the build system.

- ported tof/imagebang and allow it to instantiate with no arguments

- added "arch", "platform", and "libdir" methods to [pdinfo] and update help patch

- default loader now uses the "hexmunge" code to find filenames with hex encodings that accommodate object names with special characters

Bugfixes in 2.3.0:

- fixed up external arg types, use static declarations to protect against namespace pollution, fix allocation errors, fix crashers, header problems, fix buggy aliases for externals, fix some makefiles, fix various buffer overflows

- added missing help files for some external aliases (don't think they are used, but they are required by the Makefile)

- handle special case of trailing "/" or "/~" in legacy external alias classnames

- updated zexy, markex, iemmatrix alias files to use the simplified hexmunger

- removed external hexloader loader from default loaded libs now that we have rudimentary hexloading in the main loader

- removed arbitrary limit of 128 characters for classnames that are absolute paths

- fixed harmgen interface to use proper A_GIMME args

- fixed invalid reads in iemmatrix

- bump lyonpotpourri to head to fix missing object name argument

- make [declare -lib] handle absolute paths (and namespace-prefixed paths) consistent with object boxes

- removed references to helpers in fluid~ that got removed

- allow iemlib, unauthorized, lyonpotpourri iem_spec2, bin_ambi, iem_ambi, iem_adaptifilt and mrpeach objects to instantiate without arguments. Try to set sane defaults for these situations, while also outputting a warning. (Might consider changing the warning to an error...)

- allow class_addcreator to register an additional creator with the namespace prefix if one was used

- triage iem16's lack of shared lib with statically declared copy/pasta functions

- remove a bunch of state files from iem16 that somehow got added to the repo at the outset

- remove unnecessary pd files from fluid~

- make all cyclone classes instantiate when no arguments are provided

- switch lyonpotpourri submodule to gitlab mirror. This is needed to make some quick fixes found by the test system (which we can later request to merge upstream)

Report issues here: