udpsend and receive

@toddak That's a good summary... as the Germans would say... "alles klar" (all clear)...

I would recommend a separate router because it will be much easier to set up.

If for any reason it doesn't work you can borrow a spare almost immediately anywhere in the world.

Many of them (like the Linksys wrt54 series) have two aerials (diversity, more likely to get the message) and you can even plug in better aerials if you wish.

You can place it centrally in your performance space, doubling the possible size of the space.

It also means less work for the Pi that you had planned to use as the router.

Also, it might be easier to set up security so that your audience cannot access your network during the show.

Yes, it's a big shame about the screens. It's a lot easier (especially when you set up for a performance) to get into them from your laptop via VNC, a network share or PuTTY (for Windows)... and easier when setting up at home as well. You can even do a remote reboot before a show if you are feeling a bit uncertain.

With VNC you can have all four of your Pi desktops on your laptop screen for control and monitoring. I would recommend setting up VNC on them anyway, for the day when you cannot get through the audience to adjust a Pi. You will need to use the X11 desktop, or you will see a different desktop on your laptop from the one on your touch screen, which can be very confusing.

Through a network share you can copy/paste your patches between your laptop and the Pis.

Through a remote terminal you can reboot / change your config files etc.

Yes, I see no reason not to switch to Jessie. Then I would put Pd-extended on the Pis, even though the extended project is not likely to evolve. There is just more stuff to play with without building externals.

OSC is great for what you want to do, so, as Alexandros said, Vanilla will be OK now that OSC has been added.

For them all to receive at the same time you could broadcast to the network, so they all receive the same message, or send to each Pi individually. For your current project broadcast is fine... but if you might develop a more complex system (very likely, I think) it is best to talk to them individually from the start, through their individual IP addresses.
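A sketch of the two options in plain Python (not Pd), assuming four Pis at hypothetical addresses 192.168.1.101-104 on a 192.168.1.x network, all listening on a hypothetical port 9000. Broadcasting sends one datagram that every Pi listening on that port receives; unicast sends one copy per Pi, which later lets you send different messages to individual Pis:

```python
import socket

# Hypothetical values: replace with your Pis' real IPs, your LAN's broadcast address and port.
PI_ADDRESSES = ["192.168.1.101", "192.168.1.102", "192.168.1.103", "192.168.1.104"]
BROADCAST_ADDRESS = "192.168.1.255"
OSC_PORT = 9000


def destinations(broadcast, port=OSC_PORT, addresses=PI_ADDRESSES,
                 broadcast_address=BROADCAST_ADDRESS):
    """Return the (ip, port) pairs a message should go to."""
    if broadcast:
        return [(broadcast_address, port)]  # one datagram, every Pi on the subnet hears it
    return [(ip, port) for ip in addresses]  # one datagram per Pi


def send_to_all(payload, broadcast=False, port=OSC_PORT, addresses=PI_ADDRESSES):
    """Send payload (e.g. an OSC packet) over UDP; returns the number of datagrams sent."""
    dests = destinations(broadcast, port, addresses)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        if broadcast:
            # Sending to a broadcast address must be enabled explicitly on the socket.
            sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        for dest in dests:
            sock.sendto(payload, dest)
    return len(dests)
```

Starting with the per-Pi list costs nothing extra now, and switching to broadcast is only a flag.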

OSC messages are sent over UDP, not TCP, so the sender has no idea whether the message has been received. I have never had a problem starting tracks, even on my system where there can be up to 64 musicians all adjusting their monitoring at the same time (a huge flood of OSC messages). If you ever find that a Pi does not play, you could set your patch to send the message two or three times in a row. The difference in start time between Pis would still be less than the delays in the room that you will hear depending on where you are sitting (with the same track on every Pi).
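Sending the same message two or three times can be sketched like this. The receiver-side guard is one possible refinement, not something described above: without it, each copy that arrives would simply retrigger playback. `send_repeated`, `StartGuard` and the timing values are hypothetical:

```python
import time


def send_repeated(send, payload, copies=3, gap=0.005):
    """Call send(payload) `copies` times, `gap` seconds apart (UDP gives no delivery guarantee)."""
    for i in range(copies):
        send(payload)
        if i < copies - 1:
            time.sleep(gap)


class StartGuard:
    """Receiver side: act on the first copy that arrives, ignore repeats within `window` seconds."""

    def __init__(self, window=0.5):
        self.window = window
        self._last_accepted = None

    def accept(self, now):
        if self._last_accepted is not None and now - self._last_accepted < self.window:
            return False  # a duplicate of a message we already acted on
        self._last_accepted = now
        return True
```

If the first copy is lost, the second one is accepted a few milliseconds later, which is still well inside the room-delay margin mentioned above.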

I don't know the OSC objects in vanilla.

In extended the easy objects for OSC are:

To receive: [dumpOSC xxxx], where xxxx is the port number.

To send: [packOSC], feeding its output to [udpsend].

So with extended you would be using [udpsend].
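The bytes that [packOSC] hands to [udpsend] follow the OSC 1.0 message layout: a padded address string, a padded type-tag string, then the arguments. A minimal Python sketch of that layout (int32, float32 and string arguments only) can help when checking what arrives on the wire; it is an illustration, not packOSC's code:

```python
import struct


def _osc_pad(data: bytes) -> bytes:
    """NUL-terminate and pad to a multiple of 4 bytes, as OSC requires."""
    return data + b"\x00" * (4 - len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit int
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_pad(arg.encode("utf-8"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _osc_pad(address.encode("utf-8")) + _osc_pad(type_tags.encode("ascii")) + payload


packet = osc_message("/play", 1)  # hypothetical address
print(len(packet), packet)
```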

David.


@whale-av

No problem with all the questions. I have been working in solitude on this project with a lot of unknowns, so a lot of trial and error (previously I had only used Max/MSP in laptop sound installations and performance scenarios).

Actually, no backup cards, but I did use the one that I hadn't completely set up yet, so getting it back to where it was isn't that hard. And to be honest I'm used to resetting these things; it's been quite a steep learning curve with lots not working.

The manual I found for Pure Data (http://en.flossmanuals.net/pure-data/network-data/send-and-receive/) suggested the netsend / netreceive objects, so I just took that for granted. At the moment, in its simplest form, I would just like the same bang to be sent to all RPis simultaneously, so that playback start could be synchronised. I assume for this to happen they could all just listen on the same port and receive the same bang?

I was planning on one of the four RPis being the router as well as an interface. Is this a bad idea? To be honest, getting one to be a router was a huge battle, so if I could just use a router that would be easier. Conveniently, those routers you suggested run off 12 V, and my system runs off that, so it would be easy to put in the mix. Would the signal be stronger/reach further using a router as opposed to an RPi?

No, not planning on streaming audio. My thought was that this might be a headache and take up a bit of CPU. But in all honesty it's not needed for the project. The main forms of playback would literally be a mono file loaded into a Pd patch with synchronised playback, or a Pd patch as an instrument (i.e. a sampler/synth type of interface) loaded onto each Pi. In either case, they would be controlled via OSC. The instrument version might be controlled by different iPads/OSC devices.

Too late, I have already bought the screens. Part of the decision was that there is so much that could go wrong in this scenario that, with a touch screen added, I could easily troubleshoot in the field. Also, for the instrument patch, the instrument could just be on the screen, which would be great.

The original touch screens, small 2"-ish ones, wouldn't work with Jessie; that's the short answer. However, what I wanted was to be able to use the HDMI out to mirror the screen, so that I could actually see what I was programming. I didn't know when purchasing them that the HDMI out is disabled once they are installed, and it's a headache to get them to output to both the screen and HDMI. So I figured the 7" screens would easily allow me to program the device in my studio without the need for extra monitors, and could easily be used in the field.

So, I'm pretty sure I can now change to Jessie, as the 7" screens seem easier to work with. The only thing that wouldn't work is the tutorial I have for turning an RPi into a router. But if that will make things complicated (which, reading between the lines, it might?), then I think powering an external router might be the easier option.

I think armel will cause headaches in the long run; it makes sense to stay with the newer version, and switching to Jessie at this point in time would make sense.

Epic reply. Thoughts welcome!!


@toddak Ah!......... so I am sorry toddak, but I have a lot of questions......

So you still want to use the touch screens, and as you have a few Pi's you have backup cards so you have managed to get back to where you were before the update disaster? I hope so as that would make me feel much better!

You do not really want to use netsend and netreceive but in fact OSC objects (so you don't need MrPeach....... and a later vanilla will be ok as Alexandros suggested)? However, extended has many useful objects!

You are using an extra Pi as a router and you want to use [netsend] and [netreceive] on that?

I would think you would be better off with a dedicated router. I just bought another wrt54 on ebay for 99 uk pence.

Are you planning to stream audio to these 4 Pi's (in which case you will need extended) or are you just sending osc messages from them, or just receiving osc messages so as to start/stop playback?

I have not managed to make audio streaming to a Pi work reliably yet without occasional dropouts, and the sound will not work well at all unless you give Pd root privileges............. so remember.... for later...

```
sudo chmod 4755 /usr/bin/pd-extended
```
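In `4755`, the leading `4` is the set-user-ID bit and `755` is rwxr-xr-x. Assuming /usr/bin/pd-extended is owned by root (usual for files under /usr/bin), Pd then runs with root's effective user ID. A small Python sketch to confirm the bit is actually set after the chmod (the path is taken from the command above):

```python
import os
import stat

PD_BINARY = "/usr/bin/pd-extended"


def has_setuid(mode: int) -> bool:
    """True if the set-user-ID bit (the leading 4 in 4755) is set."""
    return bool(mode & stat.S_ISUID)


def check_binary(path: str = PD_BINARY) -> str:
    """Describe the permissions and owner of `path`."""
    st = os.stat(path)
    flag = "set" if has_setuid(st.st_mode) else "NOT set"
    return f"{stat.filemode(st.st_mode)} setuid {flag}, owned by root: {st.st_uid == 0}"
```

After the chmod, `check_binary()` should report `-rwsr-xr-x`; the `s` in place of the owner's `x` is the setuid bit.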

For audio on a Pi it should be run headless, so you should drop your touch screens in that case.

If you are using the Pi's with touch screens just to send osc messages you would be better off with some £40 android 7" tablets running TouchOSC (one licence for all of the android devices you own).

If they are receiving OSC to control their local playback, then why do they have touch screens? Is it the touch screens that would not work with Jessie, or some other screen?

Jessie is not very different from Wheezy (it is not a huge update) but it is exclusively armhf. If the touch screens are needed and will not work with Jessie then you are stuck with the current wheezy that you have installed.

If you need to stay with armel, then on one of your working (armel) Pis you should try this version of extended, http://puredata.info/downloads/pd-extended-rpi, and install PulseAudio and the fonts (manually or with apt-get) first. You can find a lot of information here: http://puredata.info/docs/raspberry-pi/?searchterm=raspberry

But if you have an Rpi B2 (or anything other than an A or a Zero) you should really be running an armhf distribution.

David.

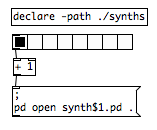

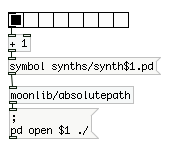

pd open

Hi David, I am also David.

moonlib doesn't come with Pd-vanilla, but I am willing to get Pd-extended working (I'm actually prototyping a patch that will run on a Raspberry Pi). I definitely want to share these patches when I'm done, so I am using relative paths: I want them to run in different environments, with different file structures, with everything relative to the main patch.

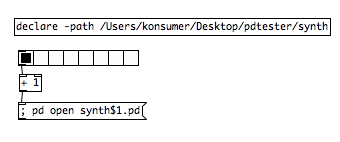

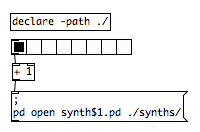

If I declare /synths wouldn't that be an absolute location (at the root of my filesystem?)

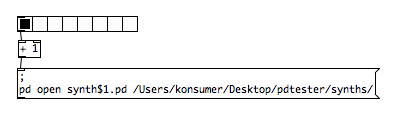

open messages require 2 params, the second of which is the path. If you leave it off you will get this error:

Bad arguments for message 'open' to object 'pd'
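Since the second argument of `open` is the directory, one way to avoid hard-coding it is to build that directory from the main patch's own folder plus a relative part. A Python sketch of the message text you would want to end up with, assuming you already know the main patch's directory (how to obtain it inside Pd is not covered here); `open_message` and the example paths are hypothetical:

```python
import posixpath


def open_message(patch_file: str, main_patch_dir: str, relative_dir: str) -> str:
    """Build '; pd open <file> <dir>' with <dir> resolved against the main patch's folder.

    Note: a path containing spaces would be split into several atoms by Pd.
    """
    if posixpath.isabs(relative_dir):
        # A leading '/' (as in '/synths') points at the filesystem root, not the patch folder.
        raise ValueError("expected a relative directory such as 'synths'")
    directory = posixpath.normpath(posixpath.join(main_patch_dir, relative_dir))
    return f"; pd open {patch_file} {directory}"


print(open_message("synth.pd", "/home/pi/patches", "synths"))
```

The same function also resolves `..`, so `"../shared"` from `/home/pi/patches` becomes `/home/pi/shared`.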

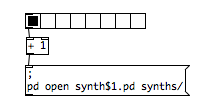

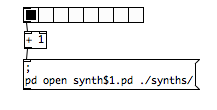

I have tried it several ways, and none of them work:

(I get the Bad Argument error)

With all of these I get Device not configured:

These work, but are not ideal:

I'd prefer not hardcoding an absolute path.

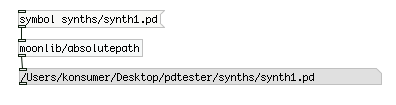

moonlib/absolutepath doesn't seem to work with a directory name, only a file. That means this works:

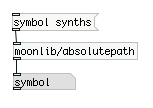

But not this (the format I actually need for path):

This works, but really I'd just prefer a solution that works in Pd-vanilla:

TouchOSC \> PureData \> Cubase SX3, and vice versa (iPad)

So, tonight have sussed 2 way comms from TouchOSC on my iPad to CubaseSX3, and thought I'd share this for those who were struggling with getting their DAW to talk back to the TouchOSC App. These patches translate OSC to MIDI, and MIDI back to OSC.

Basically, when talking back to OSC your patch needs to use:

[ctrlin midictrllr# midichan] > [/ 127] > [send /osc/controller $1] > [sendOSC] > [connect the.ipa.ddr.ess port]

So, in PD:

[ctrlin 30 1] listens to MIDI controller 30 on MIDI channel 1 and gets a value between 0 and 127. This value is divided by 127, as TouchOSC expects a value between 0 and 1. We then specify the OSC controller to send it to and the result of the maths ($1), and send the complete OSC packet to the specified IP address and port.
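The same chain expressed as a Python sketch, to make the filtering and scaling explicit. The OSC address `/1/fader1` is a hypothetical stand-in for `/osc/controller`, and the function only computes the address/value pair; it does not send anything:

```python
# Mirrors [ctrlin 30 1] -> [/ 127] -> [send /1/fader1 $1]; the address is hypothetical.
WATCHED_CONTROLLER = 30
WATCHED_CHANNEL = 1
OSC_ADDRESS = "/1/fader1"


def handle_cc(controller, channel, value):
    """Return (osc_address, 0..1 value) for the watched controller, else None."""
    if (controller, channel) != (WATCHED_CONTROLLER, WATCHED_CHANNEL):
        return None  # [ctrlin 30 1] only passes controller 30 on channel 1
    if not 0 <= value <= 127:
        raise ValueError("MIDI controller values are 0..127")
    return OSC_ADDRESS, value / 127  # TouchOSC expects 0..1


print(handle_cc(30, 1, 127))
```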

This can be seen in LogicPad.vst-2-osc.pd

All files can be found in http://www.minimotoscene.co.uk/touchosc/TouchOSC.zip (24Mb)

This archive contains:

MidiYoke (configure via Windows > Start > Control Panel)

PD-Extended

touchosc-default-layouts (just in case you don't have LogicPad)

Cubase SX3 GenericRemote XML file (to import).

Cubase SX3 project file.

2x pd files... osc-2-vst, and vst-2-osc. Open both together.

In Pd > MIDI settings, set its MIDI input and MIDI output to different channels (e.g. output to 3, input to 2). In Cubase > Device Settings > Generic Remote, set input to 3 and output to 2.

Only PAGE ONE of the LogicPad TouchOSC layout has been done in the vst-2-osc file.

Am working on the rest and will update once complete.

As the layout was designed for Logic, some functions don't work as expected, but most do, or have been remapped to do something else. Will have a look at those once I've gotten the rest of the PD patch completed.

Patches possibly not done in most efficient method... sorry. This is a case of function over form, but if anyone wants to tweak and share a more efficient way of doing it then that would be appreciated!

Hope this helps some of you...

PD, TouchOSC, Traktor, lots of problems =\[

I don't have a clear enough picture of how your setup is configured to determine where the problem might be, so I will ask a bunch of questions...

1) Midi mapping problem

TouchOSC on the iPad is configured and communicating with Pd on Windows, yes?

Are you using mrpeach OSC objects in Pd?

Did you also install MIDI Yoke as well as MIDI-OX?

Is there another MIDI controller that could be overriding the MIDI sent from Pd to Traktor?

Are you using MIDI-OX to watch the MIDI traffic to Traktor in real time?

I don't use TouchOSC (I use MRMR), but you mentioned that the Pd patch is being generated? Can you post the patch that it's generating?

2) Midi Feedback

It sounds like you want the communication to be bidirectional; this is not as simple as MIDI feedback. Remember, TouchOSC communicates using OSC, while Traktor accepts and sends MIDI. So what you have to do is have Traktor output the MIDI back to Pd, and have Pd send the OSC to TouchOSC to update the sliders.
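The two directions can be sketched as one mapping table read both ways, which keeps the OSC-to-MIDI and MIDI-to-OSC halves consistent. This is plain Python with hypothetical addresses and CC numbers, not a generated TouchOSC/Traktor mapping:

```python
# Hypothetical mapping between TouchOSC control addresses and MIDI CC numbers.
OSC_TO_CC = {"/1/fader1": 30, "/1/fader2": 31, "/1/toggle1": 40}
CC_TO_OSC = {cc: address for address, cc in OSC_TO_CC.items()}


def from_touchosc(address, value):
    """TouchOSC -> Pd -> Traktor: an OSC value 0..1 becomes a MIDI CC value 0..127."""
    cc = OSC_TO_CC.get(address)
    if cc is None:
        return None  # control not mapped
    return cc, max(0, min(127, round(value * 127)))


def from_traktor(cc, value):
    """Traktor -> Pd -> TouchOSC: a MIDI CC value 0..127 becomes an OSC value 0..1,
    which moves the matching control on the iPad."""
    address = CC_TO_OSC.get(cc)
    if address is None:
        return None
    return address, value / 127
```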

I would seriously consider building your PD patch by hand and not using the generator. You'll learn more that way and will be able to customize it better to your needs.

Workshop: Xth Sense - Biophysical generation and control of music

April 6, 7, 8 2011

11:00-19:00

Xth Sense – biophysical generation and control of music

@NK

Elsenstr. 52/

2.Hinterhaus Etage 2

12059 Berlin Neukölln

FULL PROGRAM: http://www.nkprojekt.de/xth-sense-%E2%80%93-biophysical-generation-and-control-of-music/

~ What

The workshop offers a hands-on experience and both theoretical and practical training in gestural control of music and bodily musical performance, deploying the brand-new biosensing technology Xth Sense.

Developed by the workshop teacher Marco Donnarumma within a research project at The University of Edinburgh, Xth Sense is a framework for the application of muscle sounds to the biophysical generation and control of music. It consists of a low-cost, DIY biosensing wearable device and Open Source software (based on Pure Data) for capture, analysis and audio processing of biological sounds of the body.

Muscle sounds are captured in real time and used both as sonic source material and control values for sound effects, enabling the performer to control music simply with his body and kinetic energy. Forget your mice, MIDI controllers, you will not even need to look at your laptop anymore.

The Xth Sense biosensor was designed to be easily implemented by anyone; no previous experience in electronics is required.

The applications of the Xth Sense technology are manifold: from complex gestural control of samples and audio synthesis, through biophysical generation of music and sounds, to kinetic control of real time digital processing of traditional musical instruments, and more.

~ How

Firstly, participants will be introduced to the Xth Sense Technology by its author and led through the assembling of their own biosensing wearable hardware using the materials provided.

Next, they will become proficient with the Xth Sense software framework: all the features of the framework will be unleashed through practical exercises.

Theoretical background on the state of the art of gestural control of music and new musical instruments will be developed by means of an audiovisual review and participatory critical analysis of relevant projects selected by the instructor.

Finally, participants will combine hardware and software to implement a solo or group performance to be presented during the closing event. At the end of the workshop, participants will be free to keep the Xth Sense biosensors they built and the related software for their own use.

~ Prospective participants

The workshop is open to anyone passionate about sound and music. Musical background and education do not matter as long as you are ready to challenge your usual perspective on musical performance. Composers, producers, sound designers, musicians and field recordists are all welcome to join our team for an innovative and highly creative experience. No previous experience in electronics or programming is required; however, participants should be familiar with digital music creation.

Participation is limited to 10 candidates.

Preregistration is required and can be done by sending an email to info@nkprojekt.de

Requirements and further info

Participants need to provide their own headphones, soundcards and laptops with Pd-extended already installed.

Musicians interested in augmenting their favourite musical instrument by means of body gestures are encouraged to bring their instrument along. More information about the Xth Sense and a video of a live performance can be viewed on-line at

http://res.marcodonnarumma.com/projects/xth-sense/

http://marcodonnarumma.com/works/music-for-flesh-ii/

http://marcodonnarumma.com/teaching/

Dates

6-7-8 April, 11.00-19.00 daily (6-hour sessions + 1-hour break)

Fee

EUR 90 including materials (EUR 15).

Contact

Marco Donnarumma

m[at]marcodonnarumma.com

http://marcodonnarumma.com

Problems going fullscreen on external projector

I got a response from the creator of GridFlow on the mailing list, which I'll reprint here in case it can help people:

> I've made a simple video mixer in GridFlow (9.13) and I want to send the output to an external projector. I've set up my computer so that I can drag windows to the second screen, but when I attempt to go fullscreen using [#out sdl] and then pressing Esc, it goes fullscreen on both screens.

Sounds like the kind of problem that can't be solved in GridFlow. It's an SDL issue.

> If I use an [#out window] object, which I believe is an X11 window, there's no way (that I can find) to go fullscreen, so I'm stuck with an 800x600 window on a 1366x768 screen. I did try a [#scale_to (768 1366)] object to emulate fullscreen, but the scaling causes a massive drop in framerate.

Changing the screen resolution is a sure way to avoid even having to scale.

[#scale_to] is a simple abstraction not meant to be fast or fancy. It would be a good thing to make a much better software scaler for GridFlow (interpolating and faster), but it wouldn't be as fast as a hardware-accelerated scaler, or simply changing the screen resolution.

Changing screen resolution also gives you the best image quality when using a CRT, whereas on an LCD it just means you're getting the LCD monitor to do the scaling for you.

> Can anyone suggest how to go fullscreen on only one screen, how to go fullscreen using an X11 window, or some other way to do this?

There is the "border" method for removing the border, the "move" method to put a window wherever you want (compute window coordinates to match the way the mouse travels from one screen to the other).
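Computing where the second screen starts is simple arithmetic if the screens sit side by side. A Python sketch, assuming a left-to-right layout with top edges aligned and hypothetical resolutions (laptop 1366x768, projector 1024x768); it only computes the numbers you would feed to the window's "move" method and does not reproduce GridFlow's message syntax:

```python
def screen_geometry(screens, index):
    """Return (x, y, width, height) covering screens[index].

    screens: list of (width, height) tuples, assumed to sit side by side,
    left to right, with their top edges aligned.
    """
    if not 0 <= index < len(screens):
        raise IndexError("no such screen")
    x = sum(width for width, _ in screens[:index])  # total width of the screens to the left
    width, height = screens[index]
    return x, 0, width, height


# Hypothetical setup: laptop on the left, projector on the right.
laptop, projector = (1366, 768), (1024, 768)
print(screen_geometry([laptop, projector], 1))
```

A borderless window moved to that position and sized to the projector's resolution covers only the projector.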

Wrong order of operation..

Hey,

Please save this as a .pd file:

```
#N canvas 283 218 450 300 10;
#X obj 150 162 print~ a;
#X obj 211 162 print~ b;
#X obj 110 130 bng 15 250 50 0 empty empty empty 17 7 0 10 -262144 -1 -1;
#X obj 211 138 +~;
#X obj 150 94 osc~ 440;
#X obj 211 94 osc~ 440;
#X obj 271 94 osc~ 440;
#X connect 2 0 1 0;
#X connect 2 0 0 0;
#X connect 3 0 1 0;
#X connect 4 0 0 0;
#X connect 5 0 3 0;
#X connect 6 0 3 1;
```

It should show a simple patch with three [osc~], one [+~] and two [print~ a/b] to analyze the whole thing. Each [osc~] is the start of an audio path!

(The order in which you connect the [bng] button to the [print~]s is unimportant, since all control data (from control objects) is computed before, or rather between, audio cycles.)

Now to get this clear:

1) Delete and recreate the leftmost [osc~] "A". Hit the bang button and watch the console: it should first read "a: ..." then "b: ...".

2) Now do the same with the second [osc~] "B" in the middle. Hit the bang and watch the console: first "a: ..." then "b: ...".

3) Once more: delete and recreate the third [osc~] "C". Now the console should read first "b: ..." then "a: ...".

To 1): the last (most recently) created [osc~] is "A", so its audio path, let's call it "pA", is processed first. So [print~ a] is processed first, then [print~ b].

To 2): the last [osc~] is now "B". The order from first (oldest) to last (most recent) [osc~] is now C, A, B.

So first "B" and "pB" are processed, then "A" and "pA", then "C" and "pC". But "pB" stops at the [+~], because the [+~] waits for its second input ("C") before it can output anything. Since "C" still comes after "A", the console again prints "a: ..." first, then "b: ...".

To 3): the last [osc~] is "C", so the creation order is A, B, C. First "C" and "pC" are processed, then "B" and "pB", which lets the [+~] output a signal to [print~ b]. Then "A" and "pA" are processed, including [print~ a]. Result: first "b: ..." then "a: ...".

This works for "cables" too. Just connect one single [osc~ ] to both (or more) [print~]'s. Hit bang and watch the console, then change the order you connect the [osc~] to the [print~]'s..

That's why the example works if you just recreate the first object in an audio path: that makes the path be processed first. And that's why I'd like to see some numbers (according to the order of creation) on the objects and cables, to show the order of processing.
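The rule described above (paths are started from the most recently created [osc~] backwards, and each path is followed until an object that still waits for another input) can be modelled in a few lines of Python. This is a sketch of the post's explanation, not Pd's actual scheduler code, and it leaves out the [bng], which only sends control messages:

```python
def dsp_order(creation_order, edges):
    """Model of the processing order described in the post (not Pd's source).

    creation_order: signal objects, oldest first.
    edges: maps an object to the objects its signal outlet feeds, in connection order.
    """
    inputs = {name: 0 for name in creation_order}
    for dests in edges.values():
        for dest in dests:
            inputs[dest] += 1
    waiting = dict(inputs)  # inputs still missing for each object
    order = []

    def visit(name):
        order.append(name)
        for dest in edges.get(name, []):
            waiting[dest] -= 1
            if waiting[dest] == 0:  # all inputs ready, so process it now
                visit(dest)

    # Objects without signal inputs start their paths, newest first.
    for name in reversed(creation_order):
        if inputs[name] == 0:
            visit(name)
    return order


EDGES = {"A": ["print_a"], "B": ["plus"], "C": ["plus"], "plus": ["print_b"]}


def printed(order):
    return [name for name in order if name.startswith("print")]


# Experiment 3: C was recreated last, so the creation order ends with A, B, C.
print(printed(dsp_order(["print_a", "print_b", "plus", "A", "B", "C"], EDGES)))  # ['print_b', 'print_a']
```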

But anyways, I hope this helps.

And please comment if there is something wrong.

Multitouch display - HID?

I'm not so sure about getting the coordinates of the touch points. Why do you want the coordinates? What's your OS?

The only thing I can tell you is that a single-touch device is interpreted by the OS as a mouse... and that's hella useful. I don't know anything about multi-touch. Perhaps the OS is okay with simply interpreting it as "two mice." I think the problem is a question of it knowing that these are two distinct events: it needs extra info about one point being held down while the "other" is active. I just performed a test on my single-touch device: if I hold down one point on the screen and then tap another, it will jump to the new point and stay there until it detects movement someplace else (as with a Synaptics laptop touchpad). That said, as far as the computer is concerned, it registered both points, so if you really do only need the raw data, you might be able to get by with a single-touch device. Say you have different active spaces on the screen, like four Grid objects, one in each corner. You could touch them practically simultaneously and you would have four distinct coordinate outputs, with a single-touch device. You would run into a problem if you wanted both inputs to move simultaneously and needed continuous output; even then you may be able to come up with some kind of interpolation.

You can see my recent giddy post about my single-touch device using the Grid object - I hope that Acer has all of the drivers and lists compatibility online.

If you don't need multi-touch, get an Elo; they are really cheap! As a matter of fact, you could probably get two or three Elos for the same price, and THEN you have multi-touch.

- J.P.