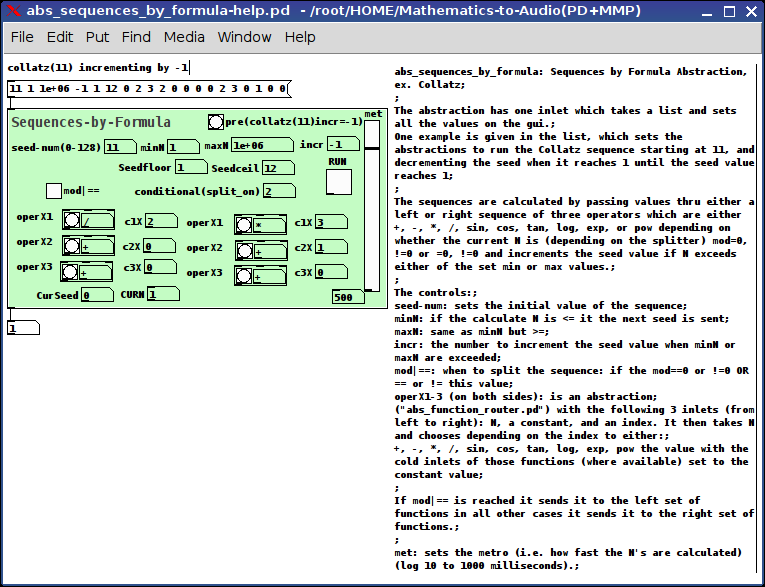

abs_sequences_by_formula: Sequences by Formula Abstraction, ex. Collatz

abs_sequences_by_formula-help.pd

abs_sequences_by_formula.pd (required)

abs_function_router.pd (required)

Really I was just curious to see if I could do this.

As to practical purpose: unknown (though I do think it's cool to stack sequences with a function router, and it might come in handy for folks building sequencers).

The abstraction has one inlet which takes a list and sets all the values on the gui.

One example is given in the list, which sets the abstraction to run the Collatz sequence starting at 11, and decrementing the seed when it reaches 1 until the seed value reaches 1.

The sequences are calculated by passing values through either a left or a right chain of three operators, each of which is one of +, -, *, /, sin, cos, tan, log, exp, or pow. Which chain is used depends on the splitter: either N mod the splitter value == 0 vs. != 0, or N == the splitter value vs. != it. The seed value is incremented if N passes either the set min or max value.
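For anyone who wants to check the numbers outside Pd, here is a minimal Python sketch of what the Collatz example should produce. It is not a port of the abstraction: the function name and parameters are made up for illustration, and the two chains are hard-coded to the textbook Collatz rules (halve on mod 2 == 0, otherwise multiply by 3 and add 1) rather than read from the preset list.

```python
def collatz_runs(seed=11, incr=-1, min_n=1, mod=2):
    """Return one list of N values per seed (hypothetical helper, not part of the patch)."""
    if incr >= 0:
        raise ValueError("this sketch only handles a decrementing seed")
    runs = []
    while seed > min_n:
        n = seed
        run = [n]
        while n > min_n:
            if n % mod == 0:
                n = n // 2       # "left" chain: the mod == 0 branch
            else:
                n = n * 3 + 1    # "right" chain: everything else
            run.append(n)
        runs.append(run)
        seed += incr             # N reached minN, so move on to the next seed
    return runs


if __name__ == "__main__":
    for run in collatz_runs():
        print(run)
```

Each inner list is one pass of the sequence; the outer loop plays the role of the seed-num/incr controls.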

The controls:

seed-num: sets the initial value of the sequence;

minN: if the calculated N is <= this value, the next seed is sent;

maxN: same as minN but >=;

incr: the number to increment the seed value when minN or maxN are exceeded;

mod|==: when to split the sequence: either on N mod this value == 0 vs. != 0, or on N == this value vs. != this value;

operX1-3 (on both sides): each is an abstraction ("abs_function_router.pd") with the following 3 inlets (from left to right): N, a constant, and an index. Depending on the index, it applies one of +, -, *, /, sin, cos, tan, log, exp, or pow to N, with the cold inlet of that function (where available) set to the constant value;

If the mod|== condition is met, N is sent to the left set of functions; in all other cases it is sent to the right set of functions.

met: sets the metro (i.e. how fast the N's are calculated) (log 10 to 1000 milliseconds).

Footnote:

My intention here was to make a tool available which might allow us as pd users to show non-pd users what we mean when we say "Mathematics is 'Musical'".

Ciao!

Have fun. Let me know if you need any help.

Peace.

Scott

mrpeach on Raspberry

Hi there,

Thanks for taking the time. I am getting a little bit frustrated here, so I guess it’s best to ask the community:

How do I get MrPeach to work on 0.47.1? I am using a Raspberry Pi 3 (running Linux version 4.9.20-v7+) and Pure Data 0.47.1.

I installed mrpeach using the “find externals” functionality. Of course I edited the path to “/home/pi/pd-externals” and created a “mrpeach” entry in the startup preferences.

Some background information: I want my Python code to “communicate” with pd but actually I do not care how they do this. OSC-Messages seem to be the easiest solution.

Well, seemed. I am a total beginner to pd.

Help much appreciated

Daniel

By the way here is the log:

tried ./osc/routeOSC.l_arm and failed

tried ./osc/routeOSC.pd_linux and failed

tried ./osc/routeOSC/routeOSC.l_arm and failed

tried ./osc/routeOSC/routeOSC.pd_linux and failed

tried ./osc/routeOSC.pd and failed

tried ./osc/routeOSC.pat and failed

tried ./osc/routeOSC/osc/routeOSC.pd and failed

tried /home/pi/pd-externals/osc/routeOSC.l_arm and failed

tried /home/pi/pd-externals/osc/routeOSC.pd_linux and succeeded

tried ./mrpeach.l_arm and failed

tried ./mrpeach.pd_linux and failed

tried ./mrpeach/mrpeach.l_arm and failed

tried ./mrpeach/mrpeach.pd_linux and failed

tried ./mrpeach.pd and failed

tried ./mrpeach.pat and failed

tried ./mrpeach/mrpeach.pd and failed

tried /home/pi/pd-externals/mrpeach.l_arm and failed

tried /home/pi/pd-externals/mrpeach.pd_linux and failed

tried /home/pi/pd-externals/mrpeach/mrpeach.l_arm and failed

tried /home/pi/pd-externals/mrpeach/mrpeach.pd_linux and failed

tried /home/pi/pd-externals/mrpeach.pd and failed

tried /home/pi/pd-externals/mrpeach.pat and failed

tried /home/pi/pd-externals/mrpeach/mrpeach.pd and failed

mrpeach: can't load library

Parsing OSC format udp list - [SOLVED]

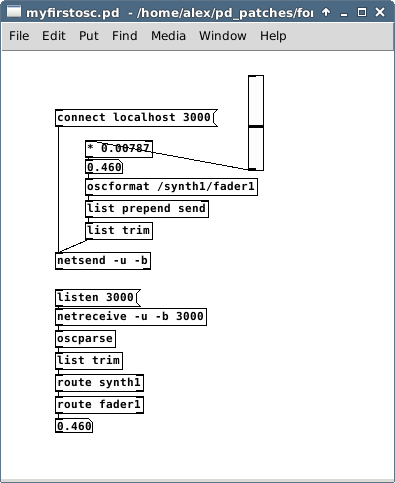

Your patch used only a UDP connection, with no OSC formatting in the messages sent. Even though you typed an OSC-style address in a message, that's not OSC format. OSC format is bytes that are not so human-readable. You need to incorporate the native OSC objects that come with vanilla, [oscformat] and [oscparse].

Here's a screenshot that does what you probably want (note that the OSC address in [oscformat] can omit the forward slashes, but since this is a standard OSC-style address, I kept them there):
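To make the "bytes that are not so human-readable" point concrete, here is a rough Python sketch that builds an OSC 1.0 message by hand with only the standard library. The helper names, the address `/test`, and the host/port in the usage note are placeholders, and it only covers int, float and string arguments, so treat it as an illustration rather than a full encoder.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an address plus int/float/str arguments as one OSC message."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)   # big-endian 32-bit float
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)   # big-endian 32-bit int
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_pad(address.encode("ascii")) + osc_pad(tags.encode("ascii")) + payload


def send_udp(packet: bytes, host: str, port: int) -> None:
    """Send one packet over UDP (host and port are whatever your patch listens on)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Something like `send_udp(osc_message("/test", 1), "127.0.0.1", 9000)` should then arrive at a vanilla [netreceive -u -b 9000] feeding [oscparse], assuming that port is the one the patch opens. Printing the packet shows the padding nulls and the `,i` type tag that a plain text message over UDP does not have.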

Ardour 5.5 and Midi-Off Signals (or the lack thereof)

@Ibilata It seems that it was an Ardour problem back in 2013........ https://community.ardour.org/node/7595...... still not fixed?

Can Ardour send osc when feedback is on, or only when it receives an osc message? You could mute / unmute your synth with osc if it does.

http://manual.ardour.org/using-control-surfaces/controlling-ardour-with-osc/feedback-in-osc/

I don't use it..... but someone who does might respond......

David.

TouchOSC \> PureData \> Cubase SX3, and vice versa (iPad)

So, tonight have sussed 2 way comms from TouchOSC on my iPad to CubaseSX3, and thought I'd share this for those who were struggling with getting their DAW to talk back to the TouchOSC App. These patches translate OSC to MIDI, and MIDI back to OSC.

Basically, when talking back to OSC your patch needs to use:

[ctrlin midictrllr# midichan] > [/127] > [send /osc/controller $1] > [sendOSC] > [connect the.ipa.ddr.ess port]

So, in PD:

[ctrlin 30 1] listens to MIDI Controller 30 on MIDI Channel 1, and gets a value between 0 & 127. This value is divided by 127, as TouchOSC expects a value between 0 & 1. We then specify the OSC controller to send it to and the result of the maths ($1), and send the complete OSC packet to the specified IPAddress and Port.

This can be seen in LogicPad.vst-2-osc.pd
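The scaling step on its own, as a small Python sketch for anyone doing the same translation in a script instead of a patch. The function names are made up here, and the clamping is an extra safety assumption that the [/ 127] object in the patch does not do.

```python
def cc_to_osc(value: int) -> float:
    """Map a MIDI controller value (0..127) to the 0..1 range TouchOSC expects."""
    return max(0, min(127, value)) / 127


def osc_to_cc(value: float) -> int:
    """Map a 0..1 OSC fader value back to a MIDI controller value (0..127)."""
    return round(max(0.0, min(1.0, value)) * 127)
```

The second function is the opposite direction (OSC to MIDI), which is what the osc-2-vst patch has to do.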

All files can be found in http://www.minimotoscene.co.uk/touchosc/TouchOSC.zip (24Mb)

This archive contains:

MidiYoke (configure via Windows > Start > Control Panel)

PD-Extended

touchosc-default-layouts (just in case you don't have LogicPad)

Cubase SX3 GenericRemote XML file (to import).

Cubase SX3 project file.

2x pd files... osc-2-vst, and vst-2-osc. Open both together.

In PD > Midi settings, set its Midi Input and Midi Output to different channels (eg: Output to 3, Input to 2). In Cubase > Device Settings > Generic Remote, set Input to 3, and output to 2.

Only PAGE ONE of the LogicPad TouchOSC layout has been done in the vst-2-osc file.

Am working on the rest and will update once complete.

As the layout was designed for Logic, some functions don't work as expected, but most do, or have been remapped to do something else. Will have a look at those once I've gotten the rest of the PD patch completed.

Patches possibly not done in most efficient method... sorry. This is a case of function over form, but if anyone wants to tweak and share a more efficient way of doing it then that would be appreciated!

Hope this helps some of you...

PD, TouchOSC, Traktor, lots of problems =\[

I don't have a clear enough picture of how your setup is configured to determine where the problem might be, so I will ask a bunch of questions...

1) Midi mapping problem

Touch OSC on the iPad is configured and communicating with PD on windows, yes?

Are you using mrpeach OSC objects in PD?

Did you also install Midi Yoke as well as Midi OX?

Is there another midi controller that could be overriding the midi sent from PD to Traktor?

Are you using Midi OX to watch the midi traffic to Traktor in realtime?

I don't use Touch OSC (using MRMR) but you mentioned that the PD patch is being generated? Can you post the patch that it's generating?

2) Midi Feedback

It sounds like you want the communication to be bidirectional; this is not as simple as midi feedback. Remember that Touch OSC communicates using OSC, while Traktor accepts and sends Midi. So what you have to do is have Traktor output the midi back to PD and have PD send the OSC to Touch OSC to update the sliders.

I would seriously consider building your PD patch by hand and not using the generator. You'll learn more that way and will be able to customize it better to your needs.

OSC sequencer revisited (Ardour, AlgoScore, IanniX, ?)

I'm looking for a timeline-based software where:

- I can draw (and ideally, record) multichannel automation,

- said automation can then be sent out as OSC data,

- its playback position (and ideally, speed) can be controlled by OSC, MTC or in some other programmable way.

From this page: http://www.ardour.org/osc_control

I see Ardour can be controlled by OSC, and I suppose it can handle automation, but I can't find any mention of whether it can send out OSC data.

IanniX always looked too artsy to me, so if anyone has experimented with it, I'd like to know if it's possible to be precise to a degree where I want it to eg linearly output values from 0.1 to 0.569 over 0.6 seconds?

Anyone familiar with AlgoScore or 'Timeline OSC Sequencer'? I just found these while writing this post - shame on me

BTW, I posted in this section because this is I/O stuff, and I need it to control arduino, video etc, although that's not obvious from the post.... If there is a more appropriate section, please move it at will.

Wrong order of operation..

Hey,

plz save this as a *.pd file:

#N canvas 283 218 450 300 10;

#X obj 150 162 print~ a;

#X obj 211 162 print~ b;

#X obj 110 130 bng 15 250 50 0 empty empty empty 17 7 0 10 -262144

-1 -1;

#X obj 211 138 +~;

#X obj 150 94 osc~ 440;

#X obj 211 94 osc~ 440;

#X obj 271 94 osc~ 440;

#X connect 2 0 1 0;

#X connect 2 0 0 0;

#X connect 3 0 1 0;

#X connect 4 0 0 0;

#X connect 5 0 3 0;

#X connect 6 0 3 1;

It should show a simple patch with 3 [osc~], 1 [+~] and 2 [print~ a/b] to analyze the whole thing. Each [osc~] is the start of an audio-line/ -path!!

(The order in which you connect the [Bng]-button to the [print~]'s is unimportant since all data (from data-objects) is computed before, or rather between, each audio-cycle.)

Now to get this clear:

1) Delete & recreate the leftmost [osc~] "A". Hit the bang-button. Watch the console, it first should read "a: ..." then "b: ..."

2) Now do the same with the second [osc~] "B" in the middle... Hit bang & watch the console: first "a: ..." then "b: ..."

3) And once more: del & recreate the third [osc~] "C" and so on. Now the console should read first "b: ..." then "a: ..." !

to "1)": the last (most recent) [osc~] created is "A", so its audio-path, let's call it "pA", is run first. So [print~ a] is processed first, then [print~ b].

to "2)": last [osc~] is "B". Now we have an order from first (oldest) to last (most recent) [osc~] : C,A,B !!

So now first "B" & "pB", then "A" & "pA", then "C" & "pC" is processed. But "pB" ends at the [+~], because the [+~] waits for the 2nd input ("C") until it can put out something. Since "C" still comes after "A" you again get printed first "a: ..." then "b: ..." in the console!

to "3)": last [osc~] is "C", so the order is like "A,B,C". So at first "C" & "pC" is processed, then "B" & "pB", this makes [+~] put out a signal to [print~ b]. Then "A" & "pA" is processed and the [print~ a] as well. -> first "b: ..." then "a: ..."

This works for "cables" too. Just connect one single [osc~ ] to both (or more) [print~]'s. Hit bang and watch the console, then change the order you connect the [osc~] to the [print~]'s..

That's why the example works if you just recreate the 1st object in an audio-path so that it is processed first. And that's why I'd like to have some numbers (according to the order of creation) at the objects and cables to determine the order of processing.
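The three cases can be replayed with a toy model in Python. This only encodes the rule as described in this post (newest [osc~] first, and an object runs once all of its signal inputs have been fed); it is not taken from Pd's source, and the names are invented for the sketch.

```python
# Signal connections from the example patch (source -> destinations).
EDGES = {
    "A": ["print_a"],
    "B": ["plus"],
    "C": ["plus"],
    "plus": ["print_b"],
}


def print_order(creation_order, edges=EDGES):
    """Return the order in which the print~ objects run.

    creation_order lists the osc~ objects from oldest to newest.
    """
    # Count how many signal inputs each downstream object is waiting for.
    waiting = {}
    for dests in edges.values():
        for dest in dests:
            waiting[dest] = waiting.get(dest, 0) + 1

    order = []

    def run(node):
        order.append(node)
        for dest in edges.get(node, []):
            waiting[dest] -= 1
            if waiting[dest] == 0:   # all inputs fed, so it can run now
                run(dest)

    # Newest osc~ first, following the audio-path as far as it can go.
    for source in reversed(creation_order):
        run(source)
    return [n for n in order if n.startswith("print_")]


if __name__ == "__main__":
    print(print_order(["B", "C", "A"]))   # case 1: A recreated last
    print(print_order(["C", "A", "B"]))   # case 2: B recreated last
    print(print_order(["A", "B", "C"]))   # case 3: C recreated last
```

Only in the third case does the [+~] get both inputs before "A" is reached, which is why the prints swap.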

But anyways, I hope this helps.

And plz comment if there is something wrong.

What gives a sequencer its flavor?

A fantastically helpful reply. I had been making a patch that manufactures sequencer grids, which I might finish, though it's more of a cool idea than a practical necessity. While I was making it I did have many many thoughts about what would be cool, and I came up with a really big one, which I will share when I've finished a version of it. It presents one solution to the randomness/inhumanity problem.

Yeah I was considering using color changes with the toggles. I am pondering allowing for color-based velocity for each note, or maybe using an "accent" system that loudens accented notes by a certain modifiable percentage. I was also considering using structures to make a sequencer, but I don't want it to be really processor heavy. I might also purchase Max at some point to get another perspective on this live machine I'm building.

I do like the idea of weighting different sounds, timewise, maybe having some sort of changeable lead/delay param for each sequencer lane. Of course I am making pretty straightforward beat music, so anything weighted too heavily will have to be for texture and color rather than the core of the beat itself. I am also curious about the different ways to shuffle sequences, as with the MPC having a particularly pleasing shuffle algorithm. I guess I'm interested in rhythmic algorithms.

Anyway, I'm gonna just build as many different kinds of sequencers as I can think of, put them in a big patch, then have a MIDI router that allows me to output the sequences as note, CC, or other types of messages, then control various Pd and Logic synths from there. My true mission is to design a performance instrument that allows for tons of flexibility (independent sequence lengths, or being able to switch the output of a given sequence element), and with a very special twist. I can barely contain myself.

I think the ability to output any kind of message is one of the true strengths of Pd when compared with Logic. If I want to generate movement with a synth's params, I have to use that synth's internal mod matrix (if it has one), or I have to use automation which exists in a GUI element separated from any note information. I don't want to go digging into the nested windows of my sequencer program to be able to quickly program automation.

This conversation has me really excited. Thanks for the valuable input. And who are you, by the way?

D

What gives a sequencer its flavor?

i find, one of the good things to do, is to play round with a normal sequencer, and when you get a thought like "oh man, i wish i could just turn off the second half of the sequence every now and then", write that down, and then include that function in your own sequencer.

also, if you're designing a sequencer using pd's gui, those toggle buttons are absolute crap. but you can make them better with a bit of tweaking. instead of having just the little X show up in the middle of the toggle box, send a message like this:

[r $0-toggle-1(

|

- <- whatever number you put in here, is the colour

|

[color $1 $1 $1(

|

that way, the whole box gets turned on and off with a click. makes it much easier to see what's happening.

also, one of the biggest strengths with sequencing in pd is the ability to randomise, either randomly or intelligently. for example, if you are making a random drum pattern, then you probably want more notes to fall on 0,4,8, 12 than on 5, 7, 11....etc , so you build a patch that does that with weighted randoms and then you get reasonably cool sequences right off the bat that can be tweaked manually to work well.
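a quick sketch of the weighted-random idea in Python, just to show the logic (in pd you would build the same thing from [random] and [moses] or similar). the function names and the probabilities 0.9 / 0.2 are made-up starting points, not values from any existing patch.

```python
import random


def default_weights(steps=16, strong=0.9, weak=0.2):
    """Hit probability per step: high on 0, 4, 8, 12, low everywhere else."""
    return [strong if i % 4 == 0 else weak for i in range(steps)]


def weighted_pattern(weights, rng=None):
    """Return a list of 0/1 hits, one per step, drawn from the given probabilities."""
    rng = rng or random.Random()
    return [1 if rng.random() < w else 0 for w in weights]


if __name__ == "__main__":
    print(weighted_pattern(default_weights()))
```

run it a few times and most patterns keep the downbeats, which is the "reasonably cool right off the bat" part; the manual tweaking comes after.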

just thinking of your idea about 'colouring' sequencers so that they lead or delay notes, and i think a cool way to do that would be to actually colour the individual sounds. so, for example, with a typical bass drum and hihat thing, you probably want the bass drum to be pretty steady, so you could assign it a colouration factor of 0.2 or something. but the hihat should be allowed to roam a bit, so maybe give it a 0.4.
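one way to read that colouration factor, sketched in Python: treat it as the largest fraction of a step that a hit is allowed to drift early or late. that interpretation, the function name and the 125 ms step length are all assumptions for the example.

```python
import random


def coloured_times(step_times, factor, step_len, rng=None):
    """Shift each hit time by a random amount of up to +/- factor * step_len."""
    rng = rng or random.Random()
    return [t + rng.uniform(-factor, factor) * step_len for t in step_times]


if __name__ == "__main__":
    grid = [i * 125.0 for i in range(8)]        # 8 steps of 125 ms each
    print(coloured_times(grid, 0.2, 125.0))     # bass drum: fairly steady
    print(coloured_times(grid, 0.4, 125.0))     # hihat: allowed to roam more
```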

but yeah, i think one of the best things about pd, is that you can program in all sorts of bends and tweaks that regular sequencers don't have. especially randomising stuff, makes it easy to try a heap of sequences very quickly. only problem i find with this type of method, is that it does tend to lead to the sounds becoming very erratic very quickly. with traditional sequencers, it takes a while to get all the notes in there, and you're more likely to just let it loop for a while and keep the same groove. but once you start getting into highspeed loop prototypes with randomization, you can switch between heaps of different sounds really quickly. this is really cool and definitely has advantages, but on the other hand, music is also something which has to come from the soul, so the jitterbug randomness does often mess things up too much. it's a bit of a balancing act, maybe. but if you can program the randomising stuff to work well and generally hit on good results, then that is better.

not sure if i answered much of the original question, but good to have a think about these things. cheers