How much is a signal aliasing?

@lacuna Thanks, that's really interesting. I don't think I solved this using FFTs, because I didn't understand how to add the bins to get a meaningful measure of signal strength (among many other things). Looking over the code I wrote, I can see it's gonna take a while before I understand what I was trying to do, but in the meantime maybe we can discuss your test and possibly poke holes in my original assumptions.

In my limited experience the filters in Pd do strange things when set to frequencies outside of the musical pitch range, so I would wonder if lp10_bess~ works properly at Nyquist. But I really don't know either way. And what does it mean to low pass filter a signal at Nyquist, since the filter itself is subject to the Nyquist limit? Or maybe you have other reasons to do so?

I'm still not convinced that comparing a signal with its upsampled version is meaningful. How do we know that the upper harmonics in the upsampled version are present and aliasing at the normal sample rate? My understanding is that upsampling just gives us more frequency headroom in which to filter out frequencies above Nyquist. But for measurement I wonder whether we should be instead passing the normal signal to an upsampled subpatch and then measuring how much energy is above 22050. Then again, that signal would look like a stepped signal in the upsampled environment, and that seems like it would have a lot of additional high frequency energy, so maybe that's a terrible idea.
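One way to put a number on "how much is it aliasing" without summing FFT bins is to do it analytically. This is a different technique from the FFT and upsampling comparisons discussed here. The Python sketch below assumes an ideal sawtooth (e.g. the ramp a naive [phasor~] samples) whose k-th harmonic has amplitude 1/k, so energy 1/k². It reports the share of that energy lying above Nyquist, which a naive oscillator folds back, and where the first aliased harmonics land. The harmonic model, the 2000-harmonic cutoff and the function names are assumptions for illustration. A plain sine below Nyquist scores zero by construction, since it has no harmonics to fold.

```python
def folded_frequency(f, fs):
    """Frequency (Hz) at which a component at f Hz shows up after sampling at fs Hz."""
    return abs(f - fs * round(f / fs))


def sawtooth_alias_report(f0, fs=44100.0, max_harmonic=2000):
    """Assumes an ideal sawtooth whose k-th harmonic has amplitude 1/k (energy 1/k^2),
    truncated at max_harmonic. Returns the share of that energy lying above Nyquist
    and a list of (harmonic number, folded frequency) pairs for those harmonics."""
    nyquist = fs / 2
    total = above = 0.0
    aliased = []
    for k in range(1, max_harmonic + 1):
        energy = 1.0 / k**2
        total += energy
        if k * f0 > nyquist:
            above += energy
            aliased.append((k, folded_frequency(k * f0, fs)))
    return above / total, aliased


share, aliased = sawtooth_alias_report(1000)
print(f"{share:.1%} of the harmonic energy folds back")
print(aliased[:3])  # first few harmonics above Nyquist and where they land
```

Weighting by energy is one choice among several; a perceptual measure would also care about where the folded components land relative to the wanted harmonics.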

I'm obviously more confused now than when I originally asked the question.

That said, I can't believe that there is an audible aliasing problem with plain sine waves. If you want I'd be happy to write you a double-blind listening test based on the Lady Tasting Tea experiment. Let me know and we'll work out what it should contain.
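For reference, the arithmetic behind the Lady Tasting Tea design is a hypergeometric tail. With 8 presentations, 4 of each kind, and the listener required to pick exactly 4 as the "target" kind, guessing gets all 4 right with probability 1/C(8,4) = 1/70, about 1.4%. A minimal Python sketch, with the trial counts as assumed parameters (in a listening test the "target" might be, say, the version suspected of aliasing):

```python
from math import comb


def tea_test_p_value(n_trials=8, n_target=4, n_correct=4):
    """Chance that pure guessing gets at least n_correct right when the listener
    must label exactly n_target of n_trials presentations as the 'target' version
    (hypergeometric tail, as in Fisher's tea-tasting design)."""
    total = comb(n_trials, n_target)
    hits = sum(
        comb(n_target, k) * comb(n_trials - n_target, n_target - k)
        for k in range(n_correct, n_target + 1)
    )
    return hits / total


print(tea_test_p_value())             # all 4 right by luck
print(tea_test_p_value(n_correct=3))  # at least 3 of 4 right by luck
```

Getting 3 of 4 right happens by luck 17 times in 70, so only a perfect score is convincing with this many trials; more presentations would tighten that.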

But assuming your method is valid, maybe you should try fft-split~ from Audiolab instead of the IEM filters. If I can understand my old code then maybe I'll have something more constructive to contribute later.

s~/r~ throw~/catch~ latency and object creation order

I searched through many of the s~/r~ throw~/catch~ hops in my own code for mistakes based on what I've learned, and it looks like ~50% of all my non-local audio connections carry modulation signals that originate from control rate objects, so those aren't affected much. Only a few programs would have been broken had the non-local connections not matched, but because things were created in signal-flow order where it mattered, there was a consistent (and often unnecessary) 1 block delay everywhere. Many of those patches were later converted to use tabsend~/tabreceive~ under a small block size, and a few were converted to use delay lines. I was burned by the creation order side effect of delwrite~/delread~ once, but it wouldn't have happened had I not taken a lazy shortcut. Hilariously, I also found one test patch that I used to declare definitively, once and for all, that s~/r~ always introduces a 1 block delay if the sort order isn't controlled using the G05 technique. To paraphrase Agent K in the movie Men in Black, imagine what I'll "know" tomorrow!

So as a practical matter, I doubt coders are getting tripped up by this frequently. If you're like me, you tend to code in signal-flow order, so you are mostly just introducing latency unnecessarily. As for advice, I agree with @Nicolas-Danet: use local audio connections wherever it matters and never mind the clutter. Even my worst spider web isn't so bad. Subpatches and abstractions can help hide the mess.

But when things are broken and showtime is looming, you'd be foolish not to use what you know, especially when you can always go back after curtain calls and adjust things to satisfy the style police. Here is a summary of the sort rules as best as I've been able to figure them out so far:

- A patch orders its constituent audio subpatches and abstractions, but has no influence over their internal ordering. Consequently, these rules are applied starting at the top level patch and recurse into each subpatch and abstraction.

- Audio chain tributaries and independent audio chains are executed in reverse order of their head's creation, except for those created by [clone], which are executed in the order of their creation (i.e. ascending clone index order).

- Audio branches that start from fanout connections are executed in reverse order of their start connection's creation.

- Whenever the rules conflict, the rule that places the tilde object the latest in line takes precedence.

These sort rules can affect your patch because unless s~ and throw~ run before their corresponding r~ and catch~, the latter will have to wait a block to access the data. Likewise, delread~ will have a minimum delay of 1 block when it runs before its delwrite~.
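The buffering argument can be made concrete with a toy model. This is a Python sketch, not Pd's actual scheduler: it assumes the second rule above (independent chains run in reverse order of their heads' creation, an empirical observation rather than documented behavior), ignores the [clone] exception, and models s~/r~ as a single shared 64-sample buffer. The function names are hypothetical.

```python
BLOCK = 64  # Pd's default block size


def chain_order(heads_in_creation_order):
    """Empirical rule from the list above (not documented Pd behavior):
    independent chains run in reverse order of their heads' creation."""
    return list(reversed(heads_in_creation_order))


def send_receive_delay(receiver_runs_first, n_blocks=4):
    """Toy model: push an impulse through an s~/r~-style shared buffer and
    return how many samples late it reaches the receiver."""
    shared = [0.0] * BLOCK  # what s~ last wrote and r~ will read
    received = []
    for b in range(n_blocks):
        block = [1.0 if b == 0 and i == 0 else 0.0 for i in range(BLOCK)]
        if receiver_runs_first:
            received.extend(shared)  # r~ copies the previous tick's data
            shared = block           # then s~ stores this tick's block
        else:
            shared = block           # s~ stores this tick's block first
            received.extend(shared)  # r~ sees it in the same tick
    return received.index(1.0)


# The [phasor~ 1] chain feeds [s~ next]; its head was created before [r~ next].
order = chain_order(["phasor~ 1", "r~ next"])
print(order, send_receive_delay(receiver_runs_first=order[0] == "r~ next"))
```

With [phasor~ 1] created before [r~ next], the rule puts the r~ chain first and the model predicts a 64-sample delay, which is what the creation-order tests in the original s~/r~ post measured.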

Finally, I thought of this technique if you're absolutely certain you are going to get burned (or are just paranoid): wrap all s~, r~, throw~, catch~, delwrite~, delread~, and audio chain heads in their own subpatches. I noticed that if you modify the contents of a subpatch, it doesn't change its sort order in the containing patch, so you can add test code (e.g. [sig~ <uniqueNr>]->[tabsend~ <sharedArray>]) to subpatch pairs and check their execution order without changing it. If the order is wrong, then you cut and repaste the appropriate subpatches or audio connections according to the rules above until it isn't. That should take 15 mins, not days.

Threshold~ limited?

I want to build a patch that acts like the gate input of the Eurorack "Maths" module, with dedicated rise and fall times applied at the beginning and at the end of a gate signal, respectively.

I.e. a square wave will get kind of a trapezoidal signal form with specific rise and fall times.

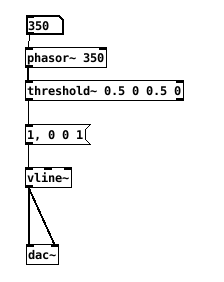
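The rise/fall behavior described here is essentially a slew limiter with separate up and down rates. A minimal per-sample sketch in Python, assuming linear ramps and a 0..1 gate; the real Maths module's response curves are not modeled, and `rise_fall` is a hypothetical name.

```python
def rise_fall(gate, rise_ms, fall_ms, fs=44100.0):
    """Per-sample slew limiter: a 0 -> 1 step takes rise_ms to ramp up and a
    1 -> 0 step takes fall_ms to ramp down, so a square gate becomes a trapezoid."""
    up = 1.0 / max(1.0, rise_ms * fs / 1000.0)    # largest rise per sample
    down = 1.0 / max(1.0, fall_ms * fs / 1000.0)  # largest fall per sample
    y = 0.0
    out = []
    for target in gate:
        if target > y:
            y = min(target, y + up)
        elif target < y:
            y = max(target, y - down)
        out.append(y)
    return out


# 20 samples high, 30 low, at a toy rate of 1 kHz: 10 ms rise, 20 ms fall
shape = rise_fall([1.0] * 20 + [0.0] * 30, rise_ms=10, fall_ms=20, fs=1000.0)
print([round(v, 2) for v in shape])
```

Because it runs on every sample, this approach doesn't need a control-rate bang at each edge at all.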

For testing purposes I made the following simple patch, where the phasor's frequency can be changed. The threshold~ sends a bang, and the vline~ synthesizes a short 1 ms square pulse.

But there seems to be an upper frequency limit of around 350 Hz (at least on my computer): I can't hear a tone at any higher pitch, and at lower frequencies there is a kind of FM sound.

My question is: what prevents the patch from generating bangs faster than about 350 Hz? And what would be an alternative patch that outputs one bang at the rising edge of a signal and a second bang at the falling edge (like threshold~ does)?
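A possible explanation, hedged because it rests on my reading of how [threshold~] works: it appears to register at most one state change per DSP block. A full cycle needs a rise and a fall, so at least two 64-sample blocks, which at 44.1 kHz caps the rate at 44100 / 128 ≈ 344.5 Hz, close to the ~350 Hz observed. The same block quantization would also make the bang timing jitter, which may be the FM-like sound at lower frequencies. The Python toy below compares a detector limited to one change per block with one that checks every sample; it ignores [threshold~]'s hysteresis and dead-time parameters, and all names are hypothetical.

```python
BLOCK = 64


def square(freq, n, fs=44100.0):
    """Naive 0/1 square wave, like a [phasor~] compared against 0.5."""
    return [1.0 if (freq * i / fs) % 1.0 < 0.5 else 0.0 for i in range(n)]


def per_sample_edges(x, thresh=0.5):
    """Rising and falling crossing indices, checked on every sample."""
    rising, falling = [], []
    prev = x[0] >= thresh
    for i in range(1, len(x)):
        cur = x[i] >= thresh
        if cur and not prev:
            rising.append(i)
        elif prev and not cur:
            falling.append(i)
        prev = cur
    return rising, falling


def block_limited_rising_count(x, thresh=0.5, block=BLOCK):
    """Toy detector that may change state at most once per block, which is the
    assumed limitation of [threshold~] (hysteresis and dead times ignored)."""
    state = x[0] >= thresh
    count = 0
    for start in range(0, len(x), block):
        for v in x[start:start + block]:
            if (v >= thresh) != state:
                state = not state
                if state:       # count only low -> high changes
                    count += 1
                break           # no further changes allowed in this block
    return count


fs = 44100.0
print("max full cycles per second:", fs / (2 * BLOCK))
for f in (100, 1000):
    sig = square(f, int(fs))
    print(f, len(per_sample_edges(sig)[0]), block_limited_rising_count(sig))
```

At 100 Hz both detectors agree; at 1000 Hz the block-limited one tops out around 345 rising edges per second. The per-sample version is the shape of the alternative asked about: compare each sample with the previous one and report each low-to-high and high-to-low crossing, which in Pd would presumably mean staying in the signal domain rather than converting to bangs.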

s~/r~ throw~/catch~ latency and object creation order

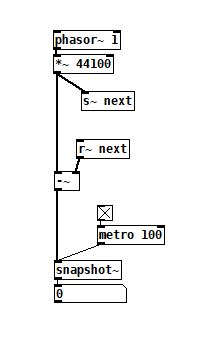

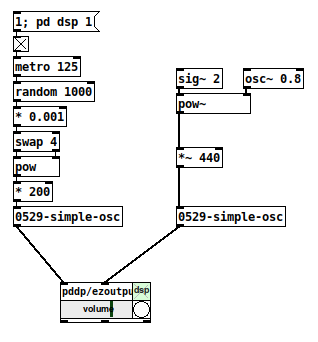

For a topic on matrix mixers by @lacuna I created a patch with audio paths that included a s~/r~ hop as well as a throw~/catch~ hop, fully expecting each hop to contribute a 1 block delay. To my surprise, there was no delay. It reminded me of another topic where @seb-harmonik.ar had investigated how object creation order affects the tilde object sort order, which in turn determines whether there is a 1 block delay or not. Object creation order even appears to affect the minimum delay you can get with a delay line. So I decided to do a deep dive into a small example to try to understand it.

Here's my test patch: s~r~NoLatency.pd

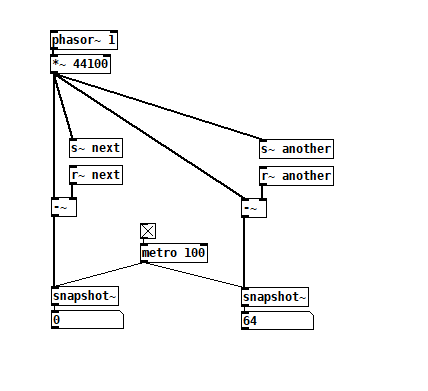

The s~/r~ hop produces either a 64 sample delay, or none at all, depending on the order that the objects are created. Here's an example that combines both: s~r~DifferingLatencies.pd

That's pretty kooky! On the one hand, it's probably good practice to avoid invisible properties like object creation order to get a particular delay, just as one should avoid using control connection creation order to get a particular execution order and use triggers instead. On the other hand, if you're not considering object creation order, you can't know what delay you will get without measuring it because there's no explicit sort order control. Well...technically there is one and it's described in G05.execution.order, but it defeats the purpose of having a non-local signal connection because it requires a local signal connection. Freeze dried water: just add water.

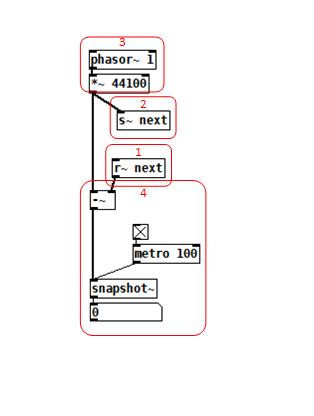

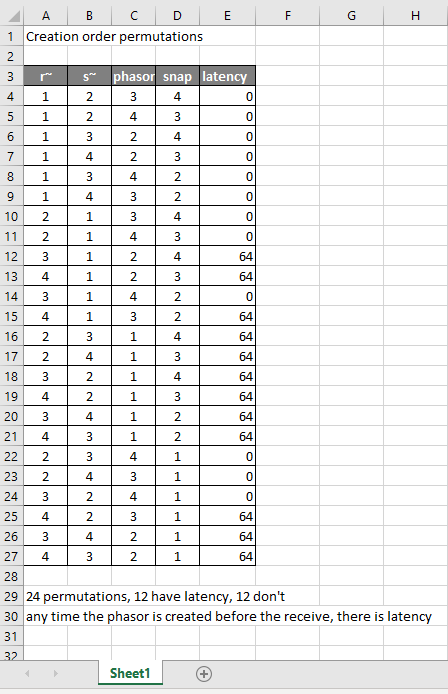

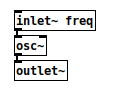

To reduce the number of cases I had to test, I grouped the objects into 4 subsets and permuted their creation orders:

The order labeled in the diagram has no latency and is one that I just stumbled on, but I wanted to know what part of it is significant, so I tested all 24 permutations. (Methodology note: you can see the object creation order if you open the .pd file in a text editor. The lines that begin with "#X obj" list the objects in the order they were created.)
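Since the creation order is just file order, listing it can be automated. A minimal Python sketch, assuming the usual .pd text format where each record ends with an unescaped `;` and may wrap across lines. It flattens subpatch contents and leaves out subpatch boxes themselves (`#X restore`) as well as messages, atoms and comments. `objects_in_creation_order` is a hypothetical helper, not part of any Pd tool.

```python
import re


def objects_in_creation_order(pd_text):
    """Return the '#X obj' boxes of a .pd file in file order, i.e. creation order.
    Subpatch contents are flattened in; messages, atoms and comments are skipped."""
    # A record ends with an unescaped ';' plus a line break (or end of file);
    # Pd wraps long records over several lines, so split on that, not on lines.
    records = re.split(r'(?<!\\);\s*(?:\n|$)', pd_text)
    objects = []
    for rec in records:
        tokens = rec.split()
        if len(tokens) >= 5 and tokens[:2] == ['#X', 'obj']:
            text = ' '.join(tokens[4:])  # skip '#X obj x y'
            objects.append(re.sub(r',\s*f\s+\d+$', '', text))  # drop ", f <width>"
    return objects


example = """#N canvas 0 50 450 300 12;
#X obj 30 40 phasor~ 1;
#X obj 30 80 *~ 44100;
#X obj 30 120 s~ next;
#X obj 200 40 r~ next;
#X msg 10 10 bang;
#X connect 0 0 1 0;
"""
for rank, name in enumerate(objects_in_creation_order(example), start=1):
    print(rank, name)
# With a real file: objects_in_creation_order(open("s~r~NoLatency.pd").read())
```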

It appears that any time the phasor group is created before the r~, there is latency. Nothing else matters. Why would that be? To test if it's the whole audio chain feeding s~ that has to be created before, or only certain objects in that group, I took the first permutation with latency and cut/pasted [phasor~ 1] and [*~ 44100] in turn to push the object's creation order to the end. Only pushing [phasor~ 1] creation to the end made the delay go away, so maybe it's because that object is the head of the audio chain?
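The observed rule can be checked against the full table of measurements by enumerating the 24 orders and flagging the ones the rule predicts to have latency. A Python sketch; the group labels are hypothetical stand-ins for the four subsets in the diagram.

```python
from itertools import permutations

# Hypothetical group labels; the actual four groups are the ones in the diagram.
GROUPS = ("phasor group", "s~ group", "r~ group", "output group")


def predicts_latency(order):
    """Observed rule: a block of latency whenever the phasor group is created
    before the r~ group, regardless of where the other two groups go."""
    return order.index("phasor group") < order.index("r~ group")


orders = list(permutations(GROUPS))
with_latency = [o for o in orders if predicts_latency(o)]
print(len(orders), "orders,", len(with_latency), "predicted to have latency")
```

Under the rule, exactly half of the orders should show latency; any measured order that disagrees would falsify it.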

I also tested a few of these permutations using throw~/catch~ and got the same results. And I looked to see if connection creation order mattered but I couldn't find one that did and gave up after a while because there were too many cases to test.

So here's what I think is going on. Both [r~ next] and [phasor~ 1] are the heads of their respective locally connected audio chains, which join at [-~]. Pd has to decide which chain to process first, and I assume that it has to choose the [phasor~ 1] chain in order for the data buffered by [s~ next] to be immediately available to [r~ next]. But why it should choose the [phasor~ 1] chain to go first if its head is created later seems arbitrary to me. Can anyone confirm these observations and conjectures? Is this behavior documented anywhere? Can I count on this behavior moving forward? If so, what does good coding practice look like, both when we want the delay and when we don't?

Mono / stereo detection

aha, so they take messages when you name them?

[quote]What would not work is to patch a control and a signal in simultaneously: the signal will overwrite the control.[/quote]

haha, in max it is like that:

most externals take signals and messages at the signal inlets.

if you connect a signal and a number to math objects like +~, the number would multiply the signal value.

if you want to connect signals and messages to an abstraction, you have to use something like [route start stop int float] inside the abstraction - the signal will come out of the "does not match" output of [route].

to complete the magic, you can pack signals into named connections using [prepend stereoL] [prepend stereoR] [prepend modulation1] [prepend modulation2] and then you can send all 4 signals through one connection into the subpatch.

you can also mix different types of connections that way.

...

regarding the problem of the OP:

in max i made myself abstractions to enhance the function of inlets and outlets, into which you can send a [metro 3000] via s/r to find out which inlets are connected and which are not.

in theory one could do that with audio, too: add a pulse train of +1000. to a stream, and later decode it with modulo 1000.
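A minimal Python sketch of that flag idea, with one caveat: decoding with a plain modulo only works for non-negative samples, since (-0.25 + 1000) mod 1000 is 999.75. This version therefore subtracts the offset wherever it detects it. It assumes the audio stays well within ±500. In Pd, where samples are normally 32-bit floats, adding 1000 also costs resolution, because float spacing near 1000 is about 6e-5. `add_marker` and `strip_marker` are hypothetical names.

```python
OFFSET = 1000.0


def add_marker(block, period=64, offset=OFFSET):
    """Add a large offset to every `period`-th sample as a 'connected' flag."""
    return [v + offset if i % period == 0 else v for i, v in enumerate(block)]


def strip_marker(block, offset=OFFSET):
    """Remove the offset wherever it is present and report whether any was seen.
    Assumes the payload stays well inside +/- offset / 2."""
    seen = False
    out = []
    for v in block:
        if v > offset / 2:
            out.append(v - offset)
            seen = True
        else:
            out.append(v)
    return out, seen


signal = [0.5, -0.25, 0.0, -1.0] * 32
decoded, connected = strip_marker(add_marker(signal))
print(connected, decoded[:4])
```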

Mono / stereo detection

@Obineg said:

one reason why i find it "strange" is that it causes an inconsistency between the externals paradigm and the patch paradigm, since you can't have inlets in your abstractions that take both signals and messages.

In fact, you can connect a control to a signal-rate inlet~.

This abstraction is kind of dumb, but it makes the point:

Then, here, the left-hand side patches random numbers (generated in the control domain) into the slot defined by the signal-rate [inlet~]. And, the right-hand side patches a signal-rate LFO. They both work.

What would not work is to patch a control and a signal in simultaneously: the signal will overwrite the control.

about your example... no idea how that same implementation would perform in pd? but in max, if you'd cut off a signal object from DSP, for example by shutting down a subcircuit, the last value of the now stopped signal would remain in the input buffer of all connected objects.

It appears that, when you connect nothing to [inlet~], it already holds its last value and doesn't reset. You can test that, in the sample patch above, by deleting the signal connection into the right-hand side instance of the 0529 abstraction. If it went to 0, then the right channel would be silent. Instead, it holds its frequency.

So it seems it already works as you specify. (That's different from what whale-av said -- which is understandable -- as others point out, Pd help patches lost some key details so it's easy to get confused about this kind of fine print.)

This also means that there really is no reliable way to tell if something is connected or not. You can specify an initial value for the [inlet~], but if you connect something and then delete the connection, this initial value will be lost and not reset.

hjh

Mono / stereo detection

@Obineg said:

i would have found it more logical for inlet~ to be activated only when you connect something to it, since patching requires a recompile anyway.

as it is, some abstraction designs create a lot of signal connections for nothing.

A counterexample:

Let's say you're making a mid-side encoder abstraction: two signal inlet~'s (left and right), and a [+~] and [-~]. The left inlet~ goes into the left inlets of the two math operators; the right inlet~ goes into the operators' right inlets. So the adder produces the mid signal, and the difference operator produces the side.

Now you put the abstraction into another patch, and connect a signal to the abstraction instance's right inlet.

By your logic, the operators' left inlets should be deactivated. But the operators still need to calculate. (Passthrough isn't an option because, in this contrived example, the [-~] side signal should be phase inverted relative to the mid signal -- the [-~] must be doing 0 - R.) To calculate, the operators need two inputs.
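A minimal Python sketch of that encoder shows why the operators still have work to do when only the right inlet is connected: mid becomes R and side becomes -R. A never-connected inlet is modeled as a stream of zeros, which is an assumption for illustration, and `ms_encode` is a hypothetical name.

```python
def ms_encode(left, right):
    """Mid/side as described: mid = L + R, side = L - R, per sample."""
    mid = [l + r for l, r in zip(left, right)]
    side = [l - r for l, r in zip(left, right)]
    return mid, side


right = [0.5, -0.25]
left = [0.0] * len(right)  # unconnected left inlet modeled as zeros
mid, side = ms_encode(left, right)
print(mid, side)  # side is the phase-inverted right channel
```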

At this point, software designers would have to weigh the CPU cost of passing zeros (arguably this is likely to be minimal) against the added complexity of maintaining active vs inactive connection states. Considering that it's easy to underestimate the maintenance impact of code complexity, it actually is a legit decision to accept a small CPU cost in exchange for more straightforward logic.

I don't find it strange at all.

hjh

Per-sample processing? (for Karplus-Strong)

@jameslo a lop in the feedback path is something I tried but I only found it useful to shape the spectrum rather than smooth out the signal to the dac. I ended up with some crazy sets of filters and it's great fun. When I do key-tracked lops I like to put the cutoff either right on the fundamental or maybe at 2x, 3x, depending on how steep the rolloff is. Thanks for the pointer, I'll have a look!

@Booberg Yes, that occurred to me and in effect I'm doing more or less that. Filtering the feedback chain like @jameslo says is also useful in containing unstable signals. However, in this case I'm not dealing with an unstable signal per se. I think the problem really is that the excitation signal has too harsh of an attack and the delay being too long makes the repeats noticeable as almost-separate events. A partial solution at this point is to either smooth the attack of the exciter or low-pass the output signal.

In general, though, I was wondering how using [gen~] manages to smooth things out without requiring filtering, and it seems the sample-level processing (as opposed to block-level) has something to do with it.
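For comparison, here is the classic Karplus-Strong loop computed one sample at a time in Python. It is a sketch of the textbook algorithm, not of anything [gen~] does internally: a noise burst recirculates through a delay of about fs/freq samples, and a two-point average in the loop acts as the lowpass that smooths and decays the tone. Per-sample processing lets that delay be any integer length, whereas in Pd a delwrite~/delread~ feedback loop can't be shorter than one block (64 samples by default, which caps the pitch near 689 Hz at 44.1 kHz) unless it runs in a subpatch with a smaller [block~]. Parameter names and defaults are assumptions.

```python
import random


def karplus_strong(freq, duration_s, fs=44100, decay=0.996, seed=0):
    """Classic Karplus-Strong pluck computed one sample at a time.
    Returns the output samples and the loop length in samples."""
    n = max(2, round(fs / freq))  # loop length; can be far shorter than a block
    rng = random.Random(seed)
    line = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # noise burst excitation
    out = []
    idx = 0
    for _ in range(int(duration_s * fs)):
        current = line[idx]
        nxt = line[(idx + 1) % n]
        out.append(current)
        # two-point average in the loop: the lowpass that smooths and decays
        # the tone (it also shifts the loop length by half a sample)
        line[idx] = decay * 0.5 * (current + nxt)
        idx = (idx + 1) % n
    return out, n


pluck, loop_len = karplus_strong(1000, 0.5)
print(loop_len, len(pluck))
```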

Starting a Pure Data Wiki (Database/Examples Collection)

Another sample from the work-in-progress ...

https://docs.google.com/document/d/1tzS2KU8x31JXoUxmkEr5WikJvxcrgHa4C0vM8LBw49Q

I thought it would be worth having a category for these more self-contained collections

Modular Systems in Pd

ACRE

https://git.iem.at/pd/acre

ARGOPd

http://gerard.paresys.free.fr/ARGOPd/

Automatonism

https://www.automatonism.com/

Context

https://github.com/LGoodacre/context-sequencer

https://contextsequencer.wordpress.com/

DENSO

https://www.d-e-n-s-o.org/pure

DIY

http://pdpatchrepo.info/patches/patch/76

Kraken - Virtual Guitar Effects Pedal Board

https://forum.pdpatchrepo.info/topic/11999/kraken-a-low-cpu-compact-highly-versatile-guitar-effects-stompbox

LWM Rack

http://lwmmusic.com/software-lwmrack.html

La Malinette

http://malinette.info/en/#home

Open toolkit for hardware and software interactive art-making systems

Mandril Boxes

http://musa.poperbu.net/index.php/puredata-mainmenu-50#mandril

Metastudio

http://www.sharktracks.co.uk/html/software.html

Muselectron Studio

http://www.muselectron.it/MuselectronStudio/Studio_index.html

NetPd

http://www.netpd.org

NoxSiren

https://forum.pdpatchrepo.info/topic/13122/noxsiren-modular-synthesizer-system-v10

Pd Modular Synthesizer

https://github.com/chrisbeckstrom/pd_modular_synth

Pd-Modular

https://github.com/emssej/pd-modular

PdRacks

https://github.com/jyg/pdr

Proceso

https://patchstorage.com/proceso/

Universal Polyphonic Player (UPP)

https://grrrr.org/research/software/upp/

https://github.com/grrrr/upp

VVD - Virtual (Virtual) Devices

http://www.martin-brinkmann.de/pd/vvd.zip

“a virtual modular-rack” from http://www.martin-brinkmann.de/pd-patches.html

XODULAR

https://patchstorage.com/xodular/

A previous incarnation of Automatonism

Any others that should be in there?

Routing different signals to clone instances

Hello all!

I’m new to this forum, but I’ve been working with Pd for a while. Currently I’m programming a polyphonic synth in Pd with FM capabilities, and I’m facing a problem.

I have created a module that contains one oscillator, a low-pass filter and an ADSR envelope generator. Its output is an audio signal. It also has several inputs, one of which is a signal input for frequency modulation: it receives a signal from outside and uses it to modulate the frequency of the oscillator inside the module. I use [clone] to create multiple instances of the same module to make it polyphonic.

Then I tried to route a signal from another cloned module to the FM input of the first module, and the obvious occurred: when I play only one note, the FM works as it’s supposed to. But when I play multiple notes, it sounds terrible, because the audio signals of all the notes from the second module go to the FM input of the first module and are then routed to all of its cloned instances.

What I need is to take only the signal corresponding to one note and route it to the same note of the first module. Is there any way to achieve this? I understand that a signal goes equally to all instances of a cloned object, but is it possible to get separate signals from all instances of the second module and route them separately to the corresponding instances of the first module?
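A small Python model of the two routings may help pin down the problem. With one shared connection, every voice's FM input gets the sum of all modulators, which matches per-voice routing only when a single voice is playing. Per-voice routing hands voice k only modulator k. The modulators and function names here are hypothetical. In Pd terms, per-voice routing means giving each instance its own path, for example by keeping both modules inside the same cloned abstraction, or by using per-instance send names built from the instance number that [clone] passes to each instance as $1 (subject to the s~/r~ sort-order latency discussed in the s~/r~ posts).

```python
import math


def modulators(freqs, n, fs=44100.0):
    """One sine modulator per voice (stand-ins for the second module's voices)."""
    return [[math.sin(2 * math.pi * f * i / fs) for i in range(n)] for f in freqs]


def fm_inputs(mods, per_voice):
    """What each voice of the first module receives at its FM input.
    per_voice=False: one shared connection, so every voice gets the sum of all
    modulators. per_voice=True: voice k gets only modulator k."""
    if per_voice:
        return [list(m) for m in mods]
    bus = [sum(samples) for samples in zip(*mods)]
    return [list(bus) for _ in mods]


mods = modulators([220.0, 330.0, 440.0], n=256)
shared = fm_inputs(mods, per_voice=False)
routed = fm_inputs(mods, per_voice=True)
print(shared[0][10], routed[0][10])
```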