ALSA output error (snd\_pcm\_open) Device or resource busy

Sorry to necro this thread, but I finally found out how to run PureData under Pulseaudio (which otherwise results in "ALSA output error (snd_pcm_open): Device or resource busy").

First of all, run:

pd -alsa -listdev

PD will start, and in the message window you'll see:

audio input devices:

1. HDA Intel PCH (hardware)

2. HDA Intel PCH (plug-in)

audio output devices:

1. HDA Intel PCH (hardware)

2. HDA Intel PCH (plug-in)

API number 1

no midi input devices found

no midi output devices found

... or something similar.

Now, let's add the pulse ALSA device, and run -listdev again:

pd -alsa -alsaadd pulse -listdev

The output is now:

audio input devices:

1. HDA Intel PCH (hardware)

2. HDA Intel PCH (plug-in)

3. pulse

audio output devices:

1. HDA Intel PCH (hardware)

2. HDA Intel PCH (plug-in)

3. pulse

API number 1

no midi input devices found

no midi output devices found

Notice how the original two ALSA devices have now become three, and the third one is pulse!

Now all we want is to do the following at startup (so, via the command line): set pd to run in ALSA mode, add the pulse ALSA device, and choose the third (3) device (which is to say, pulse) as the audio output device. The command line argument for that last step in pd is -audiooutdev:

pd -alsa -alsaadd pulse -audiooutdev 3 ~/Desktop/mypatch.pd

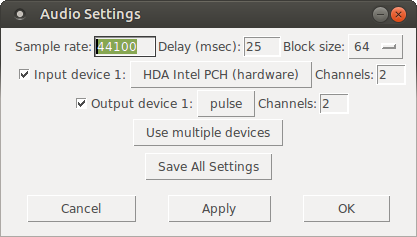

Yup, now when you enable DSP, the patch mypatch.pd should play through Pulseaudio, which means it will play (and mix) with other applications that may be playing sound at the time! You can confirm that the correct output device has been selected from the command line, if you open Media/Audio Settings... once pd starts:

As the screenshot shows, now "Output device 1" is set to "pulse", which is what we needed.
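If you'd rather not count the devices by hand, the steps above can be sketched in Python. This is only a sketch under assumptions: it expects you to paste in the text that `pd -alsa -alsaadd pulse -listdev` shows (it does not capture it from pd itself), and the helper names `find_output_device` and `build_pd_command` are made up for illustration.

```python
import re

# Device listing copied from the post above; paste your own here.
SAMPLE_LISTING = """\
audio input devices:
1. HDA Intel PCH (hardware)
2. HDA Intel PCH (plug-in)
3. pulse
audio output devices:
1. HDA Intel PCH (hardware)
2. HDA Intel PCH (plug-in)
3. pulse
API number 1
no midi input devices found
no midi output devices found
"""


def find_output_device(listing, name="pulse"):
    """Return the 1-based number of the output device called `name`, or None."""
    in_outputs = False
    for raw_line in listing.splitlines():
        line = raw_line.strip()
        if line.startswith("audio output devices"):
            in_outputs = True
            continue
        if line.startswith("audio input devices"):
            in_outputs = False
            continue
        # Device lines look like "3. pulse"
        match = re.match(r"(\d+)\.\s+(.*)", line)
        if in_outputs and match and match.group(2).strip() == name:
            return int(match.group(1))
    return None


def build_pd_command(listing, patch, name="pulse"):
    """Build the argument list for starting pd with `name` as output device."""
    index = find_output_device(listing, name)
    if index is None:
        raise ValueError("no output device named %r in the listing" % name)
    return ["pd", "-alsa", "-alsaadd", name, "-audiooutdev", str(index), patch]


if __name__ == "__main__":
    print(" ".join(build_pd_command(SAMPLE_LISTING, "mypatch.pd")))
```

The resulting list can be handed to `subprocess.run` if you want to launch pd from the script; the device number may differ on your machine, which is the reason for looking it up rather than hard-coding 3.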

Hope this helps someone!

EDIT: I had also made changes to /etc/pulse/default.pa as per https://wiki.archlinux.org/index.php/PulseAudio#ALSA.2Fdmix_without_grabbing_hardware_device beforehand; not sure whether that makes a difference or not (in any case, trying to add dmix as a PD device and play through it doesn't work on my Ubuntu 14.04).

Listing things I plug in... DMX

Hello again,

I have a question. Is there a way to list the things I plug into my computer? Like a print command I can run in Pd that will list all the things currently plugged in?

What I want to do is control some DMX lights with Pd. Someone has very kindly lent me an adapter that goes between my laptop and the lights (USB dongle, plug and play); however, I can't seem to find it on my computer.

I was thinking that there has to be a way to list them all...

Any help is much appreciated,

Toby

Automatically enabling audio and MIDI devices as they're plugged in

@lzr Yes, I had thought of using those tools to automate the setup, and it would be superb if audio at least could be made reliable. But there are other problems, with audio especially. If you automatically select a device you cannot know whether it will run at the samplerate you have set, and especially you cannot know whether it can run with the currently set buffer. I suppose that if you know what device could be plugged in (for your own set-up) then you can allow for that, but a universal patch will be impossible without parsing an on-line database. Even then, there are different drivers for different OSes and numerous possibilities for hardware and software buffering.

I played around for a long time with the possibility. I also could not find a parameter for setting the block size (it can be set from an audio-dialog message, but not by parameters). Here is what I found and built to test what would work for Windows:

audio.zip

Semi-GOOD news for you (maybe): in Windows, when standard (not ASIO) drivers are selected, the [listdevices( message (and the audio settings window) does update as audio devices are plugged/un-plugged (see get_audio_parameters.pd in the zip).

David.

Automatically enabling audio and MIDI devices as they're plugged in

Hi, @whale-av.

I'm not really using aggregate devices at the moment. I'm just plugging and unplugging individual audio and MIDI devices while PD is open: they never appear in PD's dialogs, I always have to restart it. Tried this with a different app: devices appear in a couple of seconds after I plug them in. So this is definitely possible in Mac OS.

As for your problem with the Asio4all drivers, @whale-av, do you always have all of your devices plugged in to USB before starting PD? If that's the case, the patch I'm working on may be of help, since it'll automatically select known devices in PD, by name. I'm not familiar with how Asio4all works, but if your devices have unique distinguishable names, you should be able to automatically select them from a PD patch by using the mediasettings library and sending "midi-dialog" messages to [s pd]

vcf_filter~ clicks

This patch fixes the issue to an extent. There were two different unwanted sounds that I was hearing: 1) a high frequency tambourine-like sound. This went away if I put the filter in a subwindow and upsampled 2x. 2) a pop sound when a large change in filter frequency was made quickly. I created a dual-filter crossfade for this. Each has a switch~ to cut down on CPU, but the vcf_filter~ type which originally used less CPU than the vcf~ now uses more.

Sounds like maybe this isn't an issue for some people, but here it is anyway:

(mp3 with the high frequency sound is included here so it doesn't have to be played in the browser)

LP blending into a BP blending into a HP + filter graph

Hmmmm...I built one like this once...I think.

I don't recall exactly what I did but I think this would work (better):

First things first, decide what controls it. If it's MIDI you're looking at 0-127 control values, better off with Audio control. You can make a 128 point table that uses the curve you like, then read it from [tabread4~] with a MIDI CC or a [line~] for (much) higher resolution.
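To make the table idea concrete, here is a rough Python sketch of a 128-point curve table read at fractional positions. The squared curve and the function names are my own assumptions, and it uses plain linear interpolation to keep things short, whereas [tabread4~] does 4-point interpolation.

```python
def make_curve_table(size=128, exponent=2.0):
    """Build a table of `size` points following x ** exponent over 0..1.

    The exponent is an arbitrary example curve, not something from the post.
    """
    return [(i / (size - 1)) ** exponent for i in range(size)]


def read_table(table, position):
    """Read the table at a fractional position using linear interpolation."""
    last = len(table) - 1
    position = min(max(float(position), 0.0), float(last))
    index = int(position)
    if index >= last:
        return table[last]
    frac = position - index
    return table[index] + frac * (table[index + 1] - table[index])


if __name__ == "__main__":
    table = make_curve_table()
    # A MIDI CC only lands on whole indices; a ramp can land in between.
    print(read_table(table, 64))
    print(read_table(table, 63.5))
```

Driving the read position from a smooth ramp instead of integer CC values is where the extra resolution comes from: the table stays at 128 points, but the values in between are filled in.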

Anyhoo, it sounds like you'll want to take advantage of [moses] to separate the control values into different ranges. Here's a thought:

0-24: controls LPF

24-48: controls LPF & BPF and a crossfade curve between them

48-72: controls BPF

72-96: controls BPF & HPF and a crossfade curve between them

96-127: controls HPF

And now someone will say "but the phase distortion..." Yeah, I'm not phased.

When we say morphing there are much more complex ways of accomplishing this effect than crossfading - but crossfading is simple.
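A rough Python sketch of the zone idea, in case it helps to see the numbers. It maps a 0-127 control value to gains for the three filter outputs using the ranges listed above. The equal-power (sine/cosine) curve is my assumption, since the curve is left open here, and `zone_gains` is a made-up name.

```python
import math


def zone_gains(control):
    """Map a 0-127 control value to (lp, bp, hp) output gains.

    Zones follow the ranges above; inside the two transition zones an
    equal-power crossfade is used (an assumption, any curve would do).
    """
    c = min(max(float(control), 0.0), 127.0)
    if c <= 24:
        return (1.0, 0.0, 0.0)          # LPF only
    if c < 48:
        t = (c - 24.0) / 24.0           # 0..1 across the LPF -> BPF zone
        return (math.cos(t * math.pi / 2), math.sin(t * math.pi / 2), 0.0)
    if c <= 72:
        return (0.0, 1.0, 0.0)          # BPF only
    if c < 96:
        t = (c - 72.0) / 24.0           # 0..1 across the BPF -> HPF zone
        return (0.0, math.cos(t * math.pi / 2), math.sin(t * math.pi / 2))
    return (0.0, 0.0, 1.0)              # HPF only


if __name__ == "__main__":
    for value in (0, 36, 60, 84, 127):
        print(value, zone_gains(value))
```

In a patch the same split would be done with a chain of [moses] objects, with each gain multiplying the output of its filter.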

For visualizing it - well, you know what it does so it's an unnecessary burden on your CPU, but if you don't care about latency you can explore data structures - it'd be difficult to construct. Other alternatives are silly, like using noise and an FFT plot - but you'd be able to do that by hacking apart the resynthesis sample I think, again only if you are using it for production.

Please share, I could use a well thought out filter of this type - it's common in the old Traktor, right?

-J.P.

Stereo Crossfader with adjustable curve

I've been working on building a DJ system and I couldn't find a controllable crossfader abstraction. I found a number of ways to fade from one signal to another over time, but none that could be hooked up to a MIDI or OSC control for easy back-and-forth realtime fading. So I built one.

It's a constant-power crossfade available in stereo and mono varieties (although obviously you can hook multiple crossfaders up to the same control if you need more channels than that). The control input is on a 0 to 1 scale, not a 0 to 127 scale, so be sure and adjust accordingly. It takes a "depth" input, which is roughly analogous to the "sharpness" control on adjustable crossfaders. The default depth is 1, which gives a smooth fade suitable for beat-matching and similar applications. People doing scratching may want to use a depth of 2, 3, 4 or maybe even 5 to get a quicker fade in at the edges and a fatter middle area where both tracks can be heard. This is useful for techniques like transform scratching and variations thereon where it's nice to get a large volume response with little movement.
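For anyone who wants the idea without opening the patch, here is a rough Python sketch of a constant-power crossfade with a depth control. Take it as an illustration only: the abstraction's exact formula isn't written out in this post, so raising each gain to the power 1/depth is just one hypothetical way to get the "quicker at the edges, fatter in the middle" behaviour, and `crossfade_gains` is a made-up name.

```python
import math


def crossfade_gains(position, depth=1.0):
    """Return (gain_a, gain_b) for a crossfader position in 0..1.

    depth == 1 gives a plain constant-power fade (gain_a**2 + gain_b**2 == 1).
    depth > 1 is a hypothetical shaping: each gain is raised to 1/depth, so
    the incoming track comes up faster near the edges and both tracks stay
    louder through the middle (no longer constant power).
    """
    x = min(max(float(position), 0.0), 1.0)
    if depth <= 0:
        raise ValueError("depth must be positive")
    # Using sin() for both sides keeps the end points at exactly 0 and 1.
    base_a = math.sin((1.0 - x) * math.pi / 2)
    base_b = math.sin(x * math.pi / 2)
    return (base_a ** (1.0 / depth), base_b ** (1.0 / depth))


def midi_to_position(value):
    """Scale a 0-127 controller value to the 0-1 range the fader expects."""
    return min(max(value, 0), 127) / 127.0


if __name__ == "__main__":
    for depth in (1, 3):
        print(depth, crossfade_gains(midi_to_position(13), depth))
```

For stereo, the same pair of gains is simply applied to the left and right channel of each track.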

I'll make help patches for them at some point, but if you read the comments, they explain things pretty well.

BECAUSE you guys are MIDI experts, you could well help on this...

Dear Anyone who understands virtual MIDI circuitry

I'm a disabled wannabe composer who has to use a notation package and mouse, because I can't physically play a keyboard. I use Quick Score Elite Level 2 - it doesn't have its own forum - and I'm having one HUGE problem with it that's stopping me from mixing - literally! I can see it IS possible to do what I want with it, I just can't get my outputs and virtual circuitry right.

I've got 2 main multi-sound plug-ins I use with QSE. Sampletank 2.5 with Miroslav Orchestra and Proteus VX. Now if I choose a bunch of sounds from one of them, each sound comes up on its own little stave and slider, complete with places to insert plug-in effects (like EQ and stuff.) So far, so pretty.

So you've got - say - 5 sounds. Each one is on its own stave, so any notes you put on that stave get played by that sound. The staves have controllers so you can control the individual sound's velocity/volume/pan/aftertouch etc. They all work fine. There are also a bunch of spare controller numbers. The documentation with QSE doesn't really go into how you use those. It's a great program but its customer relations need sorting - no forum, Canadian guys who wrote it very rarely answer E-mails in a meaningful way, hence me having to ask this here.

Except the sliders don't DO anything! The only one that does anything is the one the main synth. is on. That's the only one that takes any notice of the effects you use. Which means you're putting the SAME effect on the WHOLE SYNTH, not just on one instrument sound you've chosen from it. Yet the slider the main synth is on looks exactly the same as all the other sliders. The other sliders just slide up and down without changing the output sounds in any way. Neither do any effects plugins you put on the individual sliders change any of the sounds in any way. The only time they work is if you put them on the main slider that the whole synth. is sitting on - and then, of course, the effect's applied to ALL the sounds coming out of that synth, not just the single sound you want to alter.

I DO understand that MIDI isn't sounds, it's instructions to make sounds, but if the slider the whole synth is on works, how do you route the instructions to the other sliders so they accept them, too?

Anyone got any idea WHY the sounds aren't obeying the sliders they're sitting on? Oddly enough, single-shot plug-ins DO obey the sliders perfectly. It's just the multi-sound VSTs whose sounds don't individually want to play ball.

Now when you select a VSTi, you get 2 choices - assign to a track or use All Channels. If you assign it to a track, of course only instructions routed to that track will be picked up by the VSTi. BUT - they only go to the one instrument on that VST channel. So you can then apply effects happily to the sound on Channel One. I can't work out how to route the effects for the instrument on Channel 2 to Channel 2 in the VSTi, and so on. Someone told me on another forum that because I've got everything on All Channels, the effects signals are cancelling each other out, but I can't find out anything about this at the moment.

I know, theoretically, if I had one instance of the whole synth and just used one instrument from each instance, that would work. It does. Thing is, with Sampletank I got Miroslav Orchestra and you can't load PART of Miroslav. It's all or nothing. So if I wanted 12 instruments that way, I'd have to have 12 copies of Miroslav in memory and you just don't get enough memory in a 32 bit PC for that.

To round up. What I'm trying to do is set things up so I can send separate effects - EQ etc - to separate virtual instruments from ONE instance of a multi-sound sampler (Proteus VX or Sampletank.) I know it must be possible because the main synth takes the effects OK, it's just routing them to the individual sounds that's thrown me. I know you get one-shot sound VSTi's, but - no offence to any creators here - the sounds usually ain't that good from them. Besides, all my best sounds are in Miroslav/Proteus VX and I just wanted to be able to create/mix pieces using those.

I'm a REAL NOOOB with all this so if anyone answers - keep it simple. Please! If anyone needs more info to answer this, just ask me what info you need and I'll look it up on the program.

Yours respectfully

ulrichburke

Compiling on osx \#1

hello

i have hard times on osx here...

system: osx (10.6) on a macbook pro

i downloaded pd, checked it out from git and svn, but so far there is no version i compiled which is working

when i compile the checked out version from git-repository, pd compiles, but when i start it i get this error:

2011-02-18 17:21:56.182 pd[72376:903] Error loading /Library/Audio/Plug-Ins/HAL/DVCPROHDAudio.plugin/Contents/MacOS/DVCPROHDAudio: dlopen(/Library/Audio/Plug-Ins/HAL/DVCPROHDAudio.plugin/Contents/MacOS/DVCPROHDAudio, 262): no suitable image found. Did find:

/Library/Audio/Plug-Ins/HAL/DVCPROHDAudio.plugin/Contents/MacOS/DVCPROHDAudio: no matching architecture in universal wrapper

2011-02-18 17:21:56.184 pd[72376:903] Cannot find function pointer NewPlugIn for factory C5A4CE5B-0BB8-11D8-9D75-0003939615B6 in CFBundle/CFPlugIn 0x100600a70 </Library/Audio/Plug-Ins/HAL/DVCPROHDAudio.plugin> (bundle, not loaded)

2011-02-18 17:21:56.189 pd[72376:903] Error loading /Library/Audio/Plug-Ins/HAL/JackRouter.plugin/Contents/MacOS/JackRouter: dlopen(/Library/Audio/Plug-Ins/HAL/JackRouter.plugin/Contents/MacOS/JackRouter, 262): no suitable image found. Did find:

/Library/Audio/Plug-Ins/HAL/JackRouter.plugin/Contents/MacOS/JackRouter: no matching architecture in universal wrapper

2011-02-18 17:21:56.190 pd[72376:903] Cannot find function pointer New_JackRouterPlugIn for factory 7CB18864-927D-48B5-904C-CCFBCFBC7ADD in CFBundle/CFPlugIn 0x100602d20 </Library/Audio/Plug-Ins/HAL/JackRouter.plugin> (bundle, not loaded)

Error in startup script: couldn't read file "5400": no such file or directory

i dont know what to do.

thanks for any help.

ingo