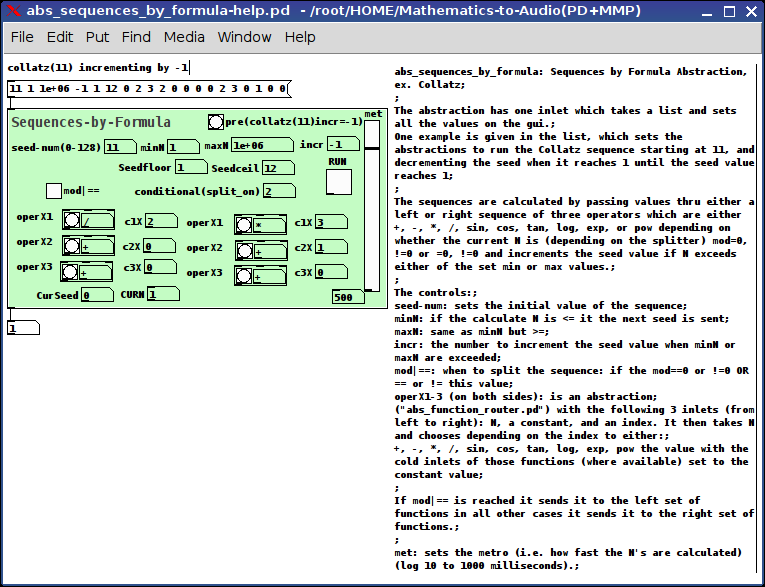

abs_sequences_by_formula: Sequences by Formula Abstraction, ex. Collatz

abs_sequences_by_formula-help.pd

abs_sequences_by_formula.pd (required)

abs_function_router.pd (required)

Really I was just curious to see if I could do this.

As for practical purpose: unknown (though I do think it's cool to stack sequences with a function router, and it might come in handy for folks building sequencers).

The abstraction has one inlet which takes a list and sets all the values on the gui.

One example is given in the list, which sets the abstraction to run the Collatz sequence starting at 11, and decrementing the seed when it reaches 1 until the seed value reaches 1.

The sequences are calculated by passing values through either a left or a right chain of three operators, each of which is one of +, -, *, /, sin, cos, tan, log, exp, or pow. Which chain is used depends on the splitter: either N mod the splitter value is 0 versus not 0, or N equals the splitter value versus does not equal it. The seed value is incremented whenever N exceeds either of the set min or max values.

The controls:

seed-num: sets the initial value of the sequence;

minN: if the calculated N is <= this value, the next seed is sent;

maxN: same as minN, but for N >= this value;

incr: the amount by which the seed value is incremented when minN or maxN is exceeded;

mod|==: when to split the sequence: either on N mod this value being 0 or not 0, OR on N being == or != this value;

operX1-3 (on both sides): each is an instance of an abstraction ("abs_function_router.pd") with 3 inlets (from left to right): N, a constant, and an index. It takes N and, depending on the index, applies one of +, -, *, /, sin, cos, tan, log, exp, or pow, with the cold inlet of that function (where available) set to the constant value;

If the mod|== condition is met, N is sent to the left set of functions; in all other cases it is sent to the right set of functions.

met: sets the metro, i.e. how fast the N's are calculated (log scale, 10 to 1000 milliseconds).
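For readers without Pd at hand, the control flow described above can be sketched in Python. This is only an approximation of the idea, not a port of the patch: the names `OPS`, `apply_chain` and `run_sequences` are made up for illustration, only the "mod" splitter mode is modelled, and the choice of "+ 0" as a do-nothing operator slot is an assumption.

```python
import math

# Operators in the order listed in the post. How the patch numbers
# them internally is not stated, so they are keyed by name here.
OPS = {
    "+": lambda n, c: n + c,
    "-": lambda n, c: n - c,
    "*": lambda n, c: n * c,
    "/": lambda n, c: n / c,
    "sin": lambda n, c: math.sin(n),
    "cos": lambda n, c: math.cos(n),
    "tan": lambda n, c: math.tan(n),
    "log": lambda n, c: math.log(n),
    "exp": lambda n, c: math.exp(n),
    "pow": lambda n, c: n ** c,
}


def apply_chain(n, chain):
    """Pass n through a chain of (operator, constant) pairs."""
    for op, const in chain:
        n = OPS[op](n, const)
    return n


def run_sequences(seed, min_n, max_n, incr, mod, left, right, max_steps=1000):
    """Return one list of N values per seed (splitter in 'mod' mode only)."""
    runs = []
    current = [seed]
    n = seed
    for _ in range(max_steps):
        if n <= min_n or n >= max_n:
            # N left the allowed range: store this run, move to the next seed.
            runs.append(current)
            seed += incr
            if seed <= min_n or seed >= max_n:
                break
            n = seed
            current = [n]
            continue
        chain = left if n % mod == 0 else right
        n = apply_chain(n, chain)
        current.append(n)
    else:
        runs.append(current)  # ran out of steps; keep the partial run
    return runs


# Collatz: even N -> N / 2, odd N -> N * 3 + 1; seed 11, decremented at N == 1.
halve = [("/", 2), ("+", 0), ("+", 0)]
triple_plus_one = [("*", 3), ("+", 1), ("+", 0)]
runs = run_sequences(11, 1, 10000, -1, 2, halve, triple_plus_one)
print(runs[0])
```

Each inner list corresponds to one seed, so the Collatz setting from the example yields one run per seed from 11 down to 2.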

Footnote:

My intention here was to make a tool available which might allow us as pd users to show non-pd users what we mean when we say "Mathematics is 'Musical'".

Ciao!

Have fun. Let me know if you need any help.

Peace.

Scott

Context sequencer v3.0.1

Dear PD community

I am happy to announce the beta release of Context, a powerful new sequencer for PD. Context is a modular sequencer that re-imagines musical compositions as networks. It combines traditional step sequencing and timeline playback with non-linear and algorithmic paradigms, all in a small but advanced GUI.

Unlike most other sequencing software, Context is not an environment. It is a single abstraction which may be replicated and interconnected to create an environment in the form of a network. There are literally endless possibilities in creating Context networks, and the user has a great deal of control over how their composition will function.

From a technical perspective, Context features a lot of things that you don't often see in PD, such as click + drag canvas resizing, dynamic menus, embeddable timelines, and fully automatic state saving. It also boasts its own language, parsed entirely within PD.

Context is a work in progress--there are still lots of bugs in the software and lots of holes in the documentation. However, I have gotten it to a place where I feel it is coherent enough for others to use it, and where it would benefit from wider feedback. I am especially looking for people who can help me with proofreading and bug tracking. Please let me know if you want to join the team! Even if you can't commit to much, pointing out typos or bits of the documentation that are confusing will be very helpful to me.

Some notes on the documentation: I have been putting 90% of my efforts recently into writing the manual, and only 9% into writing the .pd help files (the remaining 1% being sleep). The help files are pretty, but the information in them is not very useful. This will be corrected as soon as I have more time and better perspective. In the meantime, please don't be put off by the confusing help files, and treat the manual as the main resource.

Context is available now at https://github.com/LGoodacre/context-sequencer.

A few other links:

- The debut performance of Context at PDCon16~:
- An explanation of this performance: http://newblankets.org/liam_context/context-patch.webm
- A small demo video:
- My paper from PDCon16~: https://contextsequencer.files.wordpress.com/2016/11/goodacre-context.pdf

Finally, I should say that this project has been my blood, sweat, and tears for the past 18 months, and it would mean a great deal to me to see other people using it. Please share your patches with me! And also share your questions--I will always be happy to respond.

Context only works on PD Vanilla 0.47, and it needs the following externals:

zexy

cyclone

moocow

flatgui

list-abs

iemguts (v 0.2.1 or later)

Hopefully one day it will work on L2Ork and Purr Data, but not yet.

I would like to thank the PD community for their support and inspiration, in particular Joe Deken and the organizers of PDCon16.

New to PD, need help with notes failing to turn off.

I've been working on my first major patch for the past few months. It's a synthesizer based heavily on the tutorial on flossmanuals.net. After finally creating all of the features I wanted, I started to modify the patch to make the synthesizer polyphonic. The catch is that I want to control the patch using the Mad Catz Mustang midi guitar controller. This controller works perfectly fine with the polyphonic synth in the PD help browser as well as with other polyphonic synth patches I've found online, so I do not think the controller has any problem interacting with the [poly] object. The problem is that when I use it with my patch, some notes fail to turn off. It seems to happen most often when I quickly slur from one note to another. I did not have this issue when using the monophonic version of my patch. The amplifier subpatch is definitely receiving noteoff messages, but for some reason, they do not cause the envelope to close.

I think part of the problem has to do with the way the controller sends messages. I use the controller in 'tap mode,' meaning a noteon is sent whenever a fret button is pressed and noteoffs are sent when fret buttons are released. However noteoffs are also sent whenever any fret button is pressed in order to turn off the "open string" (A note can be played without pressing a fret button by striking the string sensors, and the only way to turn it off is by playing a fretted note). This occurs whether or not the open string is playing and I think this may be messing with [poly]'s voice allocation. I'm tempted to just say that the controller can't be worked around, but since I know it works for other patches I'm going crazy trying to fix my patch.

I understand it would be hard to duplicate this behavior without having the controller, but as I said before it works like a dream with other patches. I have tried to imitate the polyphony of these patches as best as I can. I've tried disconnecting and reconnecting objects in every order imaginable and I've tried delaying on and off messages in case they were somehow arriving to the amplifier envelope out of order.

Attached is a simplified version of my polyphonic patch: [full poly help.pd]. I'd really appreciate it if anyone could give it a quick look over just in case there's anything really obvious that I'm just missing.

Thanks so much and have a great day.

EDIT: Please ignore the errors about missing {receive~}s or {catch~}es. The {send~}s and {throw~}s are for some of the features that I removed from this version of the patch for simplicity's sake but I did not remove the {send~}s and {throw~}s.

Yet another Sequencer (Buchla style not including randomizer)

Thanks man. I was just getting ready to edit the thing so I could have multiple instances, and just add a route to the first inlet so that I could make some random note patterns (or not-random note patterns, I guess). It would be sort of like having a structured set of notes with 2 or more sequencers and then changing to another chord or mode; with all that nesting it ought to be pretty nice to jam along with.

I also recorded a 3 hour jam with 2 sequencers last night. I accidentally closed my fractal sequencer sometime during the first hour. I was just getting ready to mess with the synths and get them dialed in to the sound I wanted, after getting all the instruments tuned and the microphone good and grounded, as well as the headphones. The tuning is mostly 22edo or modes of 22edo, since my piano is mostly tuned to a 12of22: white keys porcupine[7] and black keys superpyth[5]. The piano actually needs a good tuning going over, but it was still fun to jam with the synth sequences being perfectly in tune to porcupine15circ, which is what I accidentally named MEANTONE-KILLER. So the synth starts out in 22edo, then porcupine15, then porcupine[7]. The piano is somewhat tuned to 12of22, and all the instruments are fretless, but I have markers on them.

It was mostly just having fun recording, learning some modes, and seeing how this mixed mode tuning works. Porcupine is cool because you can play it in 22 or 15 edo and it sounds great. I even played in 7edo and also some JI over top of it while I was listening to the new lite version of xensynth. Definitely the most fun I've had recording in quite some time.

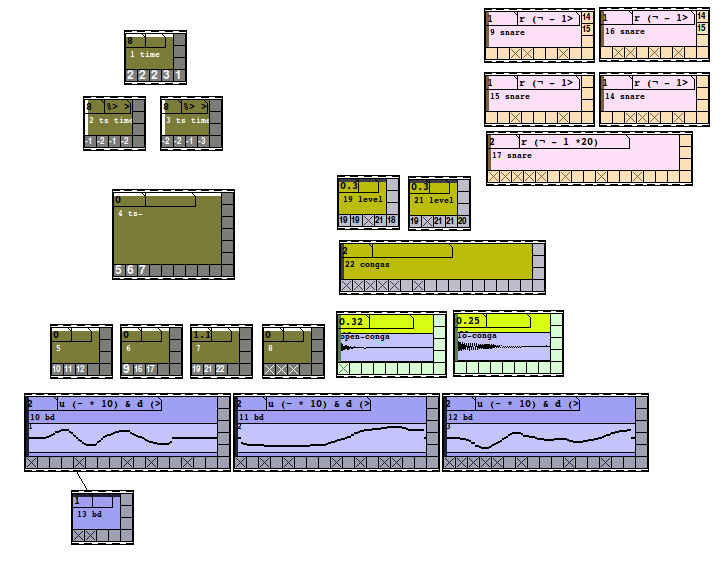

Drum machine sequence editor like micro redrum

Hey

I figured I would share this before it gets any more complicated. It is not a complete drum machine sequencer yet; only the pattern editing is done. I figured it would be good to share it as is so you can add your own drums, triggering, and preset saving.

So far it has a 16 pattern memory with 4 drum channels. The pattern storage goes into 4 arrays - 1 for each drum channel. Each pattern array is 256 bytes? Should be 2 bits but hey.
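The storage layout described here can be illustrated with a small Python sketch. It assumes 16 steps per pattern (256 slots divided by 16 patterns), which the post does not state explicitly, and the function names are invented for the example rather than taken from the patch.

```python
NUM_CHANNELS = 4        # one array per drum channel, as in the post
NUM_PATTERNS = 16       # 16 pattern memory
STEPS_PER_PATTERN = 16  # assumption: 256 slots / 16 patterns


def make_memory():
    """One flat 256-slot array per drum channel, all steps off."""
    return [[0] * (NUM_PATTERNS * STEPS_PER_PATTERN) for _ in range(NUM_CHANNELS)]


def slot(pattern, step):
    """Index of a step inside a channel's flat array."""
    return pattern * STEPS_PER_PATTERN + step


def toggle(memory, channel, pattern, step):
    """Flip one step between 0 and 1 and return its new state."""
    i = slot(pattern, step)
    memory[channel][i] = 1 - memory[channel][i]
    return memory[channel][i]


def read_pattern(memory, channel, pattern):
    """The 16 step values needed to repaint the edit display."""
    start = slot(pattern, 0)
    return memory[channel][start:start + STEPS_PER_PATTERN]


memory = make_memory()
toggle(memory, 0, 3, 4)            # channel 0, pattern 3, step 4 on
print(read_pattern(memory, 0, 3))  # the row to repaint after a pattern select
```

Adding more pattern memory or drum channels then only means changing the constants at the top.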

You should add a loadbang to set the initial values of pattern, BANK, and D_SELECT.

I didn't want to clutter it up for future preset saving.

It ought to be easy to add more pattern memory and more drum channels.

If anyone can figure out a better way, using fewer atoms, to implement the color changing of the toggles or anything else in the patch, I would be glad to know.

I'm about frizzled over a messagebox phenomenon I ran into.

I tried every way, shape, and form possible to get this to work.

It happens when reading the memory and repainting the sequence edit display after a pattern or drum select.

[r onoff]

|

| [r nametosendto]

| |

| | [r color]

| | |

[pack f s f]

\

[$2 $3; (

[$2 color $1 $1 $1;(

That is one messagebox.

A loop cycled through the toggles and that message tried to paint them and set their value but it would only do one depending on which order I changed it to.

If the colors worked, then when I would switch to a new pattern the toggles would change color but not their state of 0 or 1. Some toggles would have to be clicked twice to change state.

I managed to get it working by sending 2 separate messages to a send.

Why doesn't the multi line message work?

Enjoy!

Workshop: Xth Sense - Biophysical generation and control of music

April 6, 7, 8 2011

11:00-19:00

Xth Sense – biophysical generation and control of music

@NK

Elsenstr. 52/

2.Hinterhaus Etage 2

12059 Berlin Neukölln

FULL PROGRAM: http://www.nkprojekt.de/xth-sense-%E2%80%93-biophysical-generation-and-control-of-music/

~ What

The workshop offers a hands-on experience and both theoretical and practical training in gestural control of music and bodily musical performance, deploying the brand-new biosensing technology Xth Sense.

Developed by the workshop teacher Marco Donnarumma within a research project at The University of Edinburgh, Xth Sense is a framework for the application of muscle sounds to the biophysical generation and control of music. It consists of a low cost, DIY biosensing wearable device and an Open Source based software for capture, analysis and audio processing of biological sounds of the body (Pure Data-based).

Muscle sounds are captured in real time and used both as sonic source material and control values for sound effects, enabling the performer to control music simply with his body and kinetic energy. Forget your mice, MIDI controllers, you will not even need to look at your laptop anymore.

The Xth Sense biosensor was designed to be easily implemented by anyone; no previous experience in electronics is required.

The applications of the Xth Sense technology are manifold: from complex gestural control of samples and audio synthesis, through biophysical generation of music and sounds, to kinetic control of real time digital processing of traditional musical instruments, and more.

~ How

Firstly, participants will be introduced to the Xth Sense Technology by its author and led through the assembling of their own biosensing wearable hardware using the materials provided.

Next, they will become proficient with the Xth Sense software framework: all the features of the framework will be unleashed through practical exercises.

Theoretical background on the state of art of gestural control of music and new musical instruments will be developed by means of an audiovisual review and participatory critical analysis of relevant projects selected by the instructor.

Eventually, participants will combine hardware and software to implement a solo or group performance to be presented during the closing event. At the end of the workshop, participants will be free to keep the Xth Sense biosensors they built and the related software for their own use.

~ Prospective participants

The workshop is open to anyone passionate about sound and music. Musical background and education does not matter as long as you are ready to challenge your usual perspective on musical performance. Composers, producers, sound designers, musicians, field recordists are all welcome to join our team for an innovative and highly creative experience. No previous experience in electronics or programming is required, however participants should be familiar with digital music creation.

Participation is limited to 10 candidates.

Preregistration is required and can be done by sending an email to info@nkprojekt.de

Requirements and further info

Participants need to provide their own headphones, soundcards and laptops with Pd-extended already installed.

Musicians interested in augmenting their favourite musical instrument by means of body gestures are encouraged to bring their instrument along. More information about the Xth Sense and a video of a live performance can be viewed on-line at

http://res.marcodonnarumma.com/projects/xth-sense/

http://marcodonnarumma.com/works/music-for-flesh-ii/

http://marcodonnarumma.com/teaching/

Dates

6-7-8 April, 11.00-19.00 daily (6 hours sessions + 1 hour break)

Fee

EUR 90 including materials (EUR 15).

Contact

Marco Donnarumma

m[at]marcodonnarumma.com

http://marcodonnarumma.com

DJ/VJ scratching system

First my story: (you can skip down to END OF STORY if you want)

Ever since I saw Mike Relm go to town with a DVDJ, I've wanted a system where I could scratch and cue video. However, I haven't wanted to spend the $2500 for a DVDJ. As I was researching, I found a number of different systems. I am not a DJ by trade, so to get a system like Traktor or Serato with their video modules plus turntables plus hardware plus a DJ mixer, soon everything gets really expensive. But in looking around, I found the Ms.Pinky system and after a little bit, I found a USB turntable on Woot for $60. So I bought it. It was marketed as a DJ turntable, but I knew that it wasn't really serious since it had a belt drive, but it came with a slip-pad and the USB connection meant that I wouldn't need a preamp. And so I spent the $100 on the Ms.Pinky vinyl plus software license (now only $80). This worked decently, but I had a lot of trouble really getting it totally on point. The relative mode worked well, but sometimes would skip if I scratched too vigorously. The absolute mode I couldn't get to work at all. After reading a little more, I came to the conclusion that my signal from vinyl to computer just wasn't strong enough, so I would need maybe a new needle or maybe a different turntable and I didn't really want to spend the money experimenting. I think that the Ms. Pinky system is probably a very good system with the right equipment, but I don't do this professionally, so I don't want to spend the loot on a system.

Earlier, before I bought Ms.Pinky (about two years ago), I had also looked around for a cheap MIDI USB DJ controller and not found one. Well, about a month ago, I saw the ION Discover DJ controller was on sale at Bed, Bath & Beyond for $50. They sold out before I could get one, but Vann's was selling it for $70, so I decided that that was good enough and bought one. I had planned to try to use it with Ms. Pinky since you can hook up MIDI controllers to it. But it turns out that you can hook up MIDI controllers to every control except the turntable, so that was a no go. If I had Max/MSP/Jitter, I could have changed that, but that's also way expensive. So, how should I scratch? My controller came with DJ'ing software and there's also some freeware, like Mixxx, but none of this has video support. So I look around and find Pure Data and GEM.

And I see lots of questions about scratching, how to do it. And there are even some tutorials and small patches out there, but as I look at them, none of them are quite what I'm looking for. The YouTube tutorial is really problematic because it's no good at all for scratching a song. It can create a scratching sound for a small sample, but it's taking the turntable's speed and using that as the position in the sample. If you did that with a longer song, it wouldn't even sound like a scratch. And then there are some which do work right, but none of them keep track of where you are in the playback. So, whenever you start scratching, you're starting from the beginning of the song or the middle.
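The difference between using the turntable's speed as the position and using it to move the position can be shown with a short Python sketch. This is an illustration of the general idea only, not code from any of the patches mentioned; `scratch_positions` and its parameters are hypothetical names, and speed is assumed to be expressed as a playback rate (1 = normal forward, negative = backwards).

```python
def scratch_positions(start_pos, speeds, block_size, length):
    """Integrate platter speed into a playback position, one block at a time.

    start_pos  -- sample index where normal playback currently is
    speeds     -- one playback rate per audio block (1 = normal, -1 = reverse)
    block_size -- samples per audio block
    length     -- total length of the loaded sound in samples
    """
    pos = start_pos
    positions = []
    for speed in speeds:
        pos += speed * block_size               # speed moves the position ...
        pos = max(0.0, min(pos, length - 1))    # ... and it stays inside the file
        positions.append(pos)
    return positions


# One second into a song: two blocks forward, a quick pull back, then a hold.
print(scratch_positions(44100, [1, 1, -2, 0], 64, 10 * 44100))
```

Because the position is accumulated rather than replaced, the scratch starts from wherever playback already was, and playback can resume from wherever the scratch ends.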

So, I looked at all this and I said, "Hey, I can do this. I've got my spring break coming up. Looking at how easy PD looks and how much other good (if imperfect) work other people have done, I bet that I could build a good system for audio and video scratching within a week." And, I have.

END OF STORY

So that's what I'm presenting to you, my free audio and video scratching system in Pure Data (Pd-extended, really). I use the name DJ Lease Def, so it's the Lease Def DJ system. It's not quite perfect because it loads its samples into tables using soundfiler which means that it has a huge delay when you load a new file during which the whole thing goes silent. I am unhappy about this, but unsure how to fix it. Otherwise, it's pretty nifty. Anyway, rather than be one big patch, it relies on a system of patches which work with each other. Each of the different parts will come in several versions and you can choose which one you want to use and load up the different parts and they should work together correctly. Right now, for most of the parts there's only one version, but I'll be adding others later.

There's a more detailed instruction manual in the .zip file, but the summary is that you load:

the engine (only one version right now): loads the files, does the actual signal processing and playback

one control patch (three versions to choose from currently, two GUI versions and a MIDI version specific to the Ion Discover DJ): is used to do most of the controlling of the engine other than loading files such as scratching, fading, adjusting volume, etc.

zero or one cueing patch (one version, optional): manages the controls for jumping around to different points in songs

zero or one net patch (one version: video playback): does some sort of add-on. Will probably most commonly be used for video. The net patches have to run in a separate instance of Pd-extended and they listen for signals from the engine via local UDP packets. It is set up this way because when the audio and video tried to run in the same instance, I would get periodic little pops, clicks, and other unsmoothnesses. The audio part renders 1000 times per second for maximum fidelity, but the video part only renders like 30 or 60 times per second. Pure Data is not quite smooth enough to handle this in a clever real-time multithreading manner to ensure that they both always get their time slices. But if you put them in separate processes, it all works fine.
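As a rough illustration of the engine-to-net-patch link, here is a Python sketch that sends a Pd-style message over local UDP. Pd's network objects exchange FUDI text by default (atoms separated by spaces, each message ended by a semicolon); the selector `position`, the port number 3000, and both function names are assumptions made for this example, not the symbols documented in the download.

```python
import socket


def fudi(selector, *atoms):
    """Format one FUDI message: space-separated atoms ending in a semicolon."""
    parts = [selector] + [str(atom) for atom in atoms]
    return (" ".join(parts) + ";\n").encode("ascii")


def send_to_net_patch(message, host="127.0.0.1", port=3000):
    """Send one datagram to a listener in another process on this machine."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


# Hypothetical message telling a video patch where the audio playhead is.
message = fudi("position", 44100, 0.5)
print(message)
# send_to_net_patch(message)  # uncomment with a UDP listener on the chosen port
```

UDP is fire-and-forget, so the sender never blocks waiting for the video process, which fits the goal of keeping the audio side smooth.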

So, anyway, it's real scratching beginning exactly where you were in playing the song and when you stop scratching it picks up just where you left off, you can set and jump to cue points, and it does video which will follow right along with both the scratching and cuing. So I'm pretty proud of it. The downsides are that you have to separate the audio and video files, that the audio has to be uncompressed aiff or wav (and that loading a new file pauses everything for like 10 seconds), that for really smooth video when you're scratching or playing backwards you have to encode it with a codec with no inter-frame encoding such as MJPEG, which results in bigger video files (but the playback scratches perfectly as a result).

So anyway, check it out, let me know what you think. If you have any questions or feedback please share. If anyone wants to build control patches for other MIDI hardware, please do and share them with me. I'd be glad to include them in the download. The different patches communicate using send and receive with a standard set of symbols. I've included documentation about what the expected symbols and values are. Also, if anyone wants me to write patches for some piece of hardware that you have, if you can give me one, I'll be glad to do it.

Keith Irwin (DJ Lease Def)

BECAUSE you guys are MIDI experts, you could well help on this...

Dear Anyone who understands virtual MIDI circuitry

I'm a disabled wannabe composer who has to use a notation package and mouse, because I can't physically play a keyboard. I use Quick Score Elite Level 2 - it doesn't have its own forum - and I'm having one HUGE problem with it that's stopping me from mixing - literally! I can see it IS possible to do what I want with it, I just can't get my outputs and virtual circuitry right.

I've got 2 main multi-sound plug-ins I use with QSE. Sampletank 2.5 with Miroslav Orchestra and Proteus VX. Now if I choose a bunch of sounds from one of them, each sound comes up on its own little stave and slider, complete with places to insert plug-in effects (like EQ and stuff.) So far, so pretty.

So you've got - say - 5 sounds. Each one is on its own stave, so any notes you put on that stave get played by that sound. The staves have controllers so you can control the individual sound's velocity/volume/pan/aftertouch etc. They all work fine. There are also a bunch of spare controller numbers. The documentation with QSE doesn't really go into how you use those. It's a great program but its customer relations need sorting - no forum, Canadian guys who wrote it very rarely answer E-mails in a meaningful way, hence me having to ask this here.

Except the sliders don't DO anything! The only one that does anything is the one the main synth. is on. That's the only one that takes any notice of the effects you use. Which means you're putting the SAME effect on the WHOLE SYNTH, not just on one instrument sound you've chosen from it. Yet the slider the main synth is on looks exactly the same as all the other sliders. The other sliders just slide up and down without changing the output sounds in any way. Neither do any effects plugins you put on the individual sliders change any of the sounds in any way. The only time they work is if you put them on the main slider that the whole synth. is sitting on - and then, of course, the effect's applied to ALL the sounds coming out of that synth, not just the single sound you want to alter.

I DO understand that MIDI isn't sounds, it's instructions to make sounds, but if the slider the whole synth is on works, how do you route the instructions to the other sliders so they accept them, too?

Anyone got any idea WHY the sounds aren't obeying the sliders they're sitting on? Oddly enough, single-shot plug-ins DO obey the sliders perfectly. It's just the multi-sound VSTs whose sounds don't individually want to play ball.

Now when you select a VSTi, you get 2 choices - assign to a track or use All Channels. If you assign it to a track, of course only instructions routed to that track will be picked up by the VSTi. BUT - they only go to the one instrument on that VST channel. So you can then apply effects happily to the sound on Channel One. I can't work out how to route the effects for the instrument on Channel 2 to Channel 2 in the VSTi, and so on. Someone told me on another forum that because I've got everything on All Channels, the effects signals are cancelling each other out, but I can't find out anything about this at the moment.

I know, theoretically, if I had one instance of the whole synth and just used one instrument from each instance, that would work. It does. Thing is, with Sampletank I got Miroslav Orchestra and you can't load PART of Miroslav. It's all or nothing. So if I wanted 12 instruments that way, I'd have to have 12 copies of Miroslav in memory and you just don't get enough memory in a 32 bit PC for that.

To round up. What I'm trying to do is set things up so I can send separate effects - EQ etc - to separate virtual instruments from ONE instance of a multi-sound sampler (Proteus VX or Sampletank.) I know it must be possible because the main synth takes the effects OK, it's just routing them to the individual sounds that's thrown me. I know you get one-shot sound VSTi's, but - no offence to any creators here - the sounds usually ain't that good from them. Besides, all my best sounds are in Miroslav/Proteus VX and I just wanted to be able to create/mix pieces using those.

I'm a REAL NOOOB with all this so if anyone answers - keep it simple. Please! If anyone needs more info to answer this, just ask me what info you need and I'll look it up on the program.

Yours respectfully

ulrichburke

What gives a sequencer its flavor?

A fantastically helpful reply. I had been making a patch that manufactures sequencer grids, which I might finish, though it's more of a cool idea than a practical necessity. While I was making it I did have many many thoughts about what would be cool, and I came up with a really big one, which I will share when I've finished a version of it. It presents one solution to the randomness/inhumanity problem.

Yeah I was considering using color changes with the toggles. I am pondering allowing for color-based velocity for each note, or maybe using an "accent" system that loudens accented notes by a certain modifiable percentage. I was also considering using structures to make a sequencer, but I don't want it to be really processor heavy. I might also purchase Max at some point to get another perspective on this live machine I'm building.
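The "accent" idea is simple enough to sketch in a few lines of Python. This is only one possible reading of it: the function name is invented, velocities are assumed to be MIDI-style values capped at 127, and the percentage is applied as a plain multiplier.

```python
def apply_accents(velocities, accents, percent):
    """Raise accented notes by a percentage, capped at MIDI velocity 127."""
    out = []
    for velocity, accented in zip(velocities, accents):
        if accented:
            velocity = velocity * (1 + percent / 100)
        out.append(min(127, round(velocity)))
    return out


# Four notes, three of them accented, with a 25% accent amount.
print(apply_accents([80, 80, 100, 120], [1, 0, 1, 1], 25))
```

Keeping the percentage as a single parameter means one control can make every accent in a lane more or less pronounced.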

I do like the idea of weighting different sounds, timewise, maybe having some sort of changeable lead/delay param for each sequencer lane. Of course I am making pretty straightforward beat music, so anything weighted too heavily will have to be for texture and color rather than the core of the beat itself. I am also curious about the different ways to shuffle sequences, as with the MPC having a particularly pleasing shuffle algorithm. I guess I'm interested in rhythmic algorithms.

Anyway, I'm gonna just build as many different kinds of sequencers as I can think of, put them in a big patch, then have a MIDI router that allows me to output the sequences as note, CC, or other types of messages, then control various Pd and Logic synths from there. My true mission is to design a performance instrument that allows for tons of flexibility (independent sequence lengths, or being able to switch the output of a given sequence element), and with a very special twist. I can barely contain myself.

I think the ability to output any kind of message is one of the true strengths of Pd when compared with Logic. If I want to generate movement with a synth's params, I have to use that synth's internal mod matrix (if it has one), or I have to use automation which exists in a GUI element separated from any note information. I don't want to go digging into the nested windows of my sequencer program to be able to quickly program automation.

This conversation has me really excited. Thanks for the valuable input. And who are you, by the way?

D

What gives a sequencer its flavor?

i find, one of the good things to do, is to play round with a normal sequencer, and when you get a thought like "oh man, i wish i could just turn off the second half of the sequence every now and then", write that down, and then include that function in your own sequencer.

also, if you're designing a sequencer using pd's gui, those toggle buttons are absolute crap. but you can make them better with a bit of tweaking. instead of having just the little X show up in the middle of the toggle box, send a message like this:

[r $0-toggle-1(

|

- <- whatever number you put in here, is the colour

|

[color $1 $1 $1(

|

that way, the whole box gets turned on and off with a click. makes it much easier to see what's happening.

also, one of the biggest strengths with sequencing in pd is the ability to randomise, either randomly or intelligently. for example, if you are making a random drum pattern, then you probably want more notes to fall on 0,4,8, 12 than on 5, 7, 11....etc , so you build a patch that does that with weighted randoms and then you get reasonably cool sequences right off the bat that can be tweaked manually to work well.
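A weighted random pattern of this kind might look as follows in Python. The probabilities 0.9 and 0.2 and both function names are made-up values for illustration; the only thing taken from the post is that steps 0, 4, 8 and 12 should be more likely than the others.

```python
import random


def default_weights(strong=0.9, weak=0.2, steps=16):
    """Higher hit probability on steps 0, 4, 8, 12 than on the rest."""
    return [strong if step % 4 == 0 else weak for step in range(steps)]


def weighted_pattern(weights, rng):
    """Draw one on/off value per step from its own probability."""
    return [1 if rng.random() < weight else 0 for weight in weights]


rng = random.Random()  # pass random.Random(some_seed) for repeatable patterns
pattern = weighted_pattern(default_weights(), rng)
print(pattern)
```

Each call gives a new pattern that leans on the beat, which can then be tweaked by hand.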

just thinking of your idea about 'colouring' sequencers so that they lead or delay notes, and i think a cool way to do that would be to actually colour the individual sounds. so, for example, with a typical bass drum and hihat thing, you probably want the bass drum to be pretty steady, so you could assign it a colouration factor of 0.2 or something. but the hihat should be allowed to roam a bit, so maybe give it a 0.4.
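One way to read the colouration factor is as the fraction of a step by which a sound is allowed to drift early or late. The Python sketch below takes that interpretation, which is an assumption rather than something the post specifies; `colour_timing` and its parameters are hypothetical names.

```python
import random


def colour_timing(step_times_ms, colouration, step_ms, rng):
    """Shift each note early or late by up to colouration * step_ms."""
    max_shift = colouration * step_ms
    return [t + rng.uniform(-max_shift, max_shift) for t in step_times_ms]


rng = random.Random()
steps = [0, 125, 250, 375]                    # sixteenth notes at 120 bpm
kick = colour_timing(steps, 0.2, 125, rng)    # fairly steady
hihat = colour_timing(steps, 0.4, 125, rng)   # allowed to roam more
print(kick)
print(hihat)
```

A factor of 0 leaves the sound exactly on the grid, so the same function covers both rigid and loose lanes.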

but yeah, i think one of the best things about pd, is that you can program in all sorts of bends and tweaks that regular sequencers don't have. especially randomising stuff, makes it easy to try a heap of sequences very quickly. only problem i find with this type of method, is that it does tend to lead to the sounds becoming very erratic very quickly. with traditional sequencers, it takes a while to get all the notes in there, and you're more likely to just let it loop for a while and keep the same groove. but once you start getting into highspeed loop prototypes with randomization, you can switch between heaps of different sounds really quickly. this is really cool and definitely has advantages, but on the other hand, music is also something which has to come from the soul, so the jitterbug randomness does often mess things up too much. it's a bit of a balancing act, maybe. but if you can program the randomising stuff to work well and generally hit on good results, then that is better.

not sure if i answered much of the original question, but good to have a think about these things. cheers