-

jameslo

posted in technical issues

@rewindForward In addition to sharing your patch (it could even be one using [expr~]), please tell us what your trigger signal is; I'm unclear why the signal is ~1 ms long.

-

jameslo

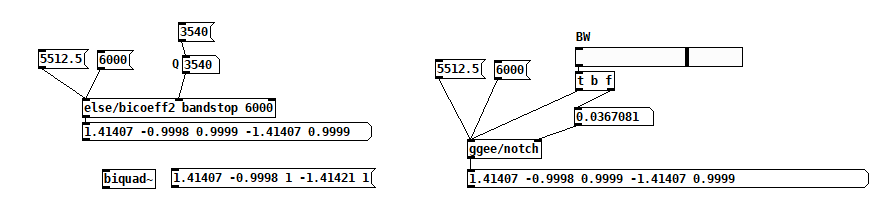

posted in technical issues

@whale-av I thought I had figured out the relationship between Q and BW, but my patch failed miserably. So I just went with BW, but got the same "close but no cigar" results:

Edit: looking back at that cookbook formula for notch coefficients (https://webaudio.github.io/Audio-EQ-Cookbook/audio-eq-cookbook.html), ff1 and ff3 are defined as 1/(1 + alpha), so the only way they could equal 1 is if alpha equals 0. But alpha is defined as sin(2 * pi * f / sr) / (2 * Q), so f would have to be 0 or Nyquist, or else Q would have to be so large that alpha vanishes when added to 1 at the precision of the floats used to calculate the coefficients. And if it's the latter, that seems like a weird thing to present as an illustrative example. (Note that in the example, f = 5512.5 = Nyquist/4, probably not a coincidence.) I'm still mystified.
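A minimal Python sketch of the cookbook notch formula makes the point concrete. It normalizes by a0 and returns the coefficients in [biquad~]'s fb1 fb2 ff1 ff2 ff3 order, assuming Pd adds its feedback terms (so fb1 and fb2 are the negated a1/a0 and a2/a0). ff1 and ff3 both come out as 1/(1 + alpha), which is mathematically below 1 for any finite Q when 0 < f < Nyquist. The function name is made up for illustration, and Python uses double precision, whereas Pd usually computes in 32-bit floats.

```python
import math

def notch_coeffs_biquad(f, sr, q):
    """Audio EQ Cookbook notch, normalized by a0, returned in [biquad~] order:
    [fb1, fb2, ff1, ff2, ff3]. Assumes Pd adds the feedback terms, so
    fb1 = -a1/a0 and fb2 = -a2/a0."""
    w0 = 2 * math.pi * f / sr
    alpha = math.sin(w0) / (2 * q)
    b0, b1, b2 = 1.0, -2 * math.cos(w0), 1.0
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    return [-a1 / a0, -a2 / a0, b0 / a0, b1 / a0, b2 / a0]

# The help patch's centre frequency at 44.1 kHz, with the Q that came close
# and a much larger one.
for q in (3500, 1e6):
    fb1, fb2, ff1, ff2, ff3 = notch_coeffs_biquad(5512.5, 44100, q)
    print(q, ff1, ff1 < 1.0)  # ff1 creeps toward 1 as Q grows but stays below it
```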

-

jameslo

posted in technical issues

In the help patch for [biquad~] there are coefficients for a very narrow bandstop filter centered at 5512.5 Hz @ 44.1k or 6000 Hz @ 48k. The filter's Q isn't stated, so I thought I could reverse-engineer it using those coefficients as my key, but I can't seem to generate them exactly using either my own coefficient calculator or the one from ELSE (bicoef2). I get close with a Q of about 3500, but I can't match all the coefficients with a single Q value (I can't remember if the 1s are even possible). Why would that be?
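One way to test whether a single Q can explain a coefficient list is to solve for Q separately from each coefficient, assuming the list came from the cookbook notch (normalized by a0, in [biquad~]'s fb1 fb2 ff1 ff2 ff3 order). If the estimates disagree, the list probably wasn't produced by that exact formula. This is a sketch; `q_estimates` is a hypothetical helper, not part of Pd or ELSE.

```python
import math

def q_estimates(coeffs, f, sr):
    """Treat a [biquad~] coefficient list [fb1, fb2, ff1, ff2, ff3] as if it came
    from the cookbook notch (normalized by a0) and solve for Q separately from
    fb2, ff1 and ff3. A single consistent Q means all three estimates agree."""
    _fb1, fb2, ff1, _ff2, ff3 = coeffs
    w0 = 2 * math.pi * f / sr

    def q_from_alpha(alpha):
        # alpha = sin(w0) / (2Q), so Q = sin(w0) / (2 alpha); alpha <= 0 has no finite Q.
        return math.sin(w0) / (2 * alpha) if alpha > 0 else math.inf

    return {
        "fb2": q_from_alpha((1 + fb2) / (1 - fb2)),  # from fb2 = -(1 - alpha) / (1 + alpha)
        "ff1": q_from_alpha(1 / ff1 - 1),            # from ff1 = 1 / (1 + alpha)
        "ff3": q_from_alpha(1 / ff3 - 1),            # from ff3 = 1 / (1 + alpha)
    }
```

For example, any list with ff1 = ff3 = 1 returns `math.inf` for those two keys while fb2 still gives a finite Q, which is exactly the "can't match all coefficients with one Q" symptom.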

help coefficients search.pd

-

jameslo

posted in technical issues

@april Check the order of the coefficients to [biquad~]. I think the feedback coefficients come before the feedforward coefficients. Also check the sign of the feedback coefficients. According to this reference https://webaudio.github.io/Audio-EQ-Cookbook/audio-eq-cookbook.html (formula 4), they should be negated.

Edit: additionally, try adding triggers to the outputs of the [expr] objects to ensure that each of the sub-branches is evaluated in the order you think it should be. Here's my version based on that reference: biquad notch coefficients.pd
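The ordering and sign fixes can also be checked outside Pd with a short Python sketch. It reorders normalized cookbook coefficients into [biquad~]'s fb1 fb2 ff1 ff2 ff3 list and runs the recurrence as I understand it from the [biquad~] help (feedback terms added, hence the negation). The function names are hypothetical. A broad notch fed a sine at its own centre frequency should settle to silence, while DC passes at unit gain.

```python
import math

def cookbook_to_biquad(b0, b1, b2, a0, a1, a2):
    """Reorder and normalize cookbook coefficients into the list [biquad~] takes:
    [fb1, fb2, ff1, ff2, ff3]. The feedback terms are negated because the cookbook's
    difference equation subtracts them while [biquad~] adds them."""
    return [-a1 / a0, -a2 / a0, b0 / a0, b1 / a0, b2 / a0]

def run_biquad(coeffs, x):
    """The recurrence given in the [biquad~] help:
    w[n] = x[n] + fb1*w[n-1] + fb2*w[n-2]
    y[n] = ff1*w[n] + ff2*w[n-1] + ff3*w[n-2]"""
    fb1, fb2, ff1, ff2, ff3 = coeffs
    w1 = w2 = 0.0  # w[n-1] and w[n-2]
    y = []
    for xn in x:
        wn = xn + fb1 * w1 + fb2 * w2
        y.append(ff1 * wn + ff2 * w1 + ff3 * w2)
        w2, w1 = w1, wn
    return y

# A broad notch (Q = 1) at sr/8, fed a sine at exactly that frequency.
sr, f, q = 44100, 5512.5, 1.0
omega = 2 * math.pi * f / sr
alpha = math.sin(omega) / (2 * q)
coeffs = cookbook_to_biquad(1, -2 * math.cos(omega), 1,
                            1 + alpha, -2 * math.cos(omega), 1 - alpha)
out = run_biquad(coeffs, [math.sin(omega * n) for n in range(400)])
print(max(abs(v) for v in out[200:]))  # close to 0 once the start-up transient dies away
```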

-

jameslo

posted in technical issues

@solipp said:

use [set 64 1 $1(

no need to switch dsp off

OMG, I was staring at my own code and didn't see that!

-

jameslo

posted in technical issues

@hansr If you can tolerate the latency and inflexible cutoff frequencies, I've used FFT resynthesis for this kind of thing. At 44.1 kHz with a window size of 2048, zeroing out the first two bins would get you very close, I think. And if I'm not mistaken, doing it this way introduces less phase distortion.
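For a sense of scale: bin k of an N-point FFT is centred at k × sr / N, so at 44.1 kHz with N = 2048, bins 0 and 1 cover DC and about 21.5 Hz, and the effective cutoff falls somewhere between bin 1 and bin 2 (about 43.1 Hz), blurred by windowing. The Python sketch below zeroes the lowest bins of a single frame using a naive DFT; it is an illustration only and ignores the windowing and overlap-add that real FFT resynthesis needs. The function names are hypothetical.

```python
import cmath
import math

def bin_centre_hz(k, sr, n):
    """Centre frequency of bin k for an n-point FFT at sample rate sr."""
    return k * sr / n

def zero_low_bins(frame, num_bins):
    """DFT one frame, zero bins 0..num_bins-1 (and their mirror images so the
    result stays real), then inverse DFT. A naive O(n^2) DFT for illustration;
    real resynthesis would use an FFT with windowed, overlapping frames."""
    n = len(frame)
    spectrum = [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
    for k in range(num_bins):
        spectrum[k] = 0
        spectrum[(n - k) % n] = 0
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

print(bin_centre_hz(1, 44100, 2048))  # 21.533203125
print(bin_centre_hz(2, 44100, 2048))  # 43.06640625
```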

-

jameslo

posted in technical issues

@ddw_music I tested a few of my FFT patches that have adjustable window sizes, and they all still seem to work on 0.56.2 without having to cycle DSP off and on. I can confirm that your patch isn't responding, though, so maybe there's an issue with the oversampling portion of the message? If I enter your parameters as arguments to the [block~] object, it seems to work fine. Maybe use dynamic patching as a temporary workaround?

-

jameslo

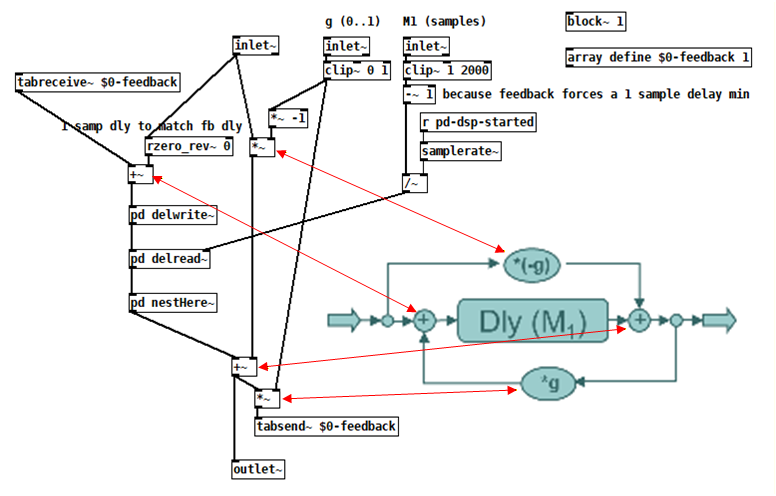

posted in technical issues

@Ice-Ice Well, I was hoping that if I followed that same tutorial I might be able to remedy some of my ignorance of low-level filter construction.

Nope!

But maybe what I made is still useful to you. This is untested because I honestly don't understand what I should be testing for. The examples in that tutorial sound more like feedback delays than allpass filters to me, but that may just be more of my ignorance. That said, my gut tells me that you can't just mash up H14 and this filter diagram; they're slightly different instances of the same thing.

allpass~.pd

Edit: Oooh, I think I see what's going on!

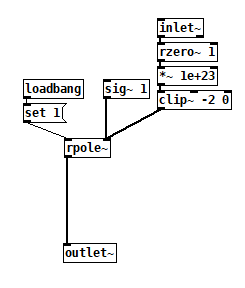

H14 implements the allpass filter (APF) using [rzero_rev~] fed into [rpole~]. From the help patches:

[rzero_rev~] implements y[n] = -a * x[n] + x[n-1]

[rpole~] implements y[n] = x[n] + a * y[n-1]

Since the first equation provides the input to the second, substitute x[n] in the second with the output equation of the first:

y[n] = -a * x[n] + x[n-1] + a * y[n-1]

Now make an equation for that tutorial's diagram:

y[n] = -g * x[n] + x[n-M1] + g * y[n-M1]

The first term represents the upper path, the second term is the middle path through the delay, and the last term is the feedback going through the same delay!

So H14 is the same only if a = g and M1 = 1.
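A quick numerical check of that substitution, as a Python sketch of the difference equations quoted above (the function names are made up). With a = g and M1 = 1, the cascade and the tutorial's form compute the same expression, so their impulse responses match exactly. With M1 > 1 the tutorial's structure still has an impulse response with unit total energy, as an allpass should, but that response is spread into echoes M1 samples apart, which may be why its examples sound like feedback delays.

```python
def rzero_rev(x, a):
    """[rzero_rev~] per its help: y[n] = -a*x[n] + x[n-1]."""
    y, x_prev = [], 0.0
    for xn in x:
        y.append(-a * xn + x_prev)
        x_prev = xn
    return y

def rpole(x, a):
    """[rpole~] per its help: y[n] = x[n] + a*y[n-1]."""
    y, y_prev = [], 0.0
    for xn in x:
        y_prev = xn + a * y_prev
        y.append(y_prev)
    return y

def tutorial_allpass(x, g, m):
    """The tutorial's diagram: y[n] = -g*x[n] + x[n-M1] + g*y[n-M1], with m = M1."""
    y = []
    for n, xn in enumerate(x):
        x_del = x[n - m] if n >= m else 0.0
        y_del = y[n - m] if n >= m else 0.0
        y.append(-g * xn + x_del + g * y_del)
    return y

impulse = [1.0] + [0.0] * 1999
h14 = rpole(rzero_rev(impulse, 0.5), 0.5)
same = tutorial_allpass(impulse, 0.5, 1)
print(max(abs(p - q) for p, q in zip(h14, same)))  # 0.0 when a = g and M1 = 1
print(sum(v * v for v in tutorial_allpass(impulse, 0.5, 5)))  # approximately 1.0
```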

-

jameslo

posted in technical issues

@dreamer Oh! My bad. My post was probably made irrelevant by your previous post anyway.

-

jameslo

posted in technical issues

@ytt +1 to @Balwyn's idea, but I thought you were asking about how to eliminate the race condition whenever 2 or more valves are pressed at the same time.

trumpet valve control.pd
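Collapsing the three valve states into one number before testing them could look like the following Python sketch, so whatever triggers the lookup has a single value to test rather than three separate conditions. The semitone values are the standard trumpet valve intervals, not taken from the attached patch, and all names are hypothetical.

```python
# Semitones by which each valve lowers the open pitch on a standard trumpet
# (valve 1: whole step, valve 2: half step, valve 3: step and a half).
VALVE_SEMITONES = (2, 1, 3)

def valve_code(v1, v2, v3):
    """Pack three valve states (0 or 1) into a single number from 0 to 7."""
    return (v1 << 2) | (v2 << 1) | v3

def lowering(code):
    """Semitones of lowering for a packed valve code."""
    bits = ((code >> 2) & 1, (code >> 1) & 1, code & 1)
    return sum(s for s, pressed in zip(VALVE_SEMITONES, bits) if pressed)

# One lookup table indexed by the packed code.
LOWERING = [lowering(code) for code in range(8)]
print(LOWERING)  # [0, 3, 1, 4, 2, 5, 3, 6]
```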

Edit: hmm, I probably overcomplicated things with the [list tosymbol] test. Maybe I should have converted the valve triplet to a number right away and then tested that instead.

-

jameslo

posted in technical issues

@whale-av Hey! Don't you need to add triggers to some of those outputs that have two connections? I'm sure your patch works because your instincts led you to create those connections in the right order, but still!

-

jameslo

posted in technical issues

@ArduinoPrints3D Yes, I think you put the clone start flag in the wrong place, so the clone numbering is really going from 0 to 15. Try [clone -s 1 voicecloner 16].

-

jameslo

posted in technical issues

@c_c +1 to @whale-av, and you could also just fade out before changing the delay and then fade back in, e.g.

changing delay.pd
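The fade-out, change, fade-in sequence can also be sketched sample by sample in Python. This is an illustration under assumptions rather than the attached patch: the V-shaped linear ramp, the ramp length, and the function names are made up, and the delay is in whole samples. Because the gain reaches 0 at the moment the delay jumps, the jump never appears in the output as a discontinuity.

```python
import math

def fade_gain(n, switch_at, ramp):
    """V-shaped gain: 1 far from the switch, falling linearly to 0 at switch_at
    over `ramp` samples and rising back to 1 over the next `ramp` samples."""
    return min(1.0, abs(n - switch_at) / ramp)

def delayed_with_fade(x, old_delay, new_delay, switch_at, ramp):
    """Read x through a delay that jumps from old_delay to new_delay (in samples)
    at sample switch_at, muted around the jump so the discontinuity is not a click."""
    y = []
    for n in range(len(x)):
        d = old_delay if n < switch_at else new_delay
        sample = x[n - d] if n >= d else 0.0
        y.append(fade_gain(n, switch_at, ramp) * sample)
    return y

tone = [math.sin(2 * math.pi * n / 100) for n in range(1000)]
y = delayed_with_fade(tone, 0, 25, switch_at=500, ramp=64)
print(max(abs(b - a) for a, b in zip(y, y[1:])))  # largest sample-to-sample step
```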

To demonstrate that the fade is actually doing something, delete the highlighted messages and choose a few more random delay times. Click click pop!

-

jameslo

posted in patch~

A long time ago I needed something that would toggle between 1 and -1 at audio rate whenever its input decreases in value (as would happen when driven by a [phasor~]). This is what I came up with:

The block size has to be small enough to accommodate the highest toggling frequency you need to support.

I was convinced that this was the only way possible, so I was completely surprised when I stumbled across this:

flipflop2~.pd

Seems so obvious in retrospect!

Edit: OMG, I posted a similar solution 3 years ago and forgot about it. I'm now accepting nursing home recommendations.
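For reference, the behaviour itself can be written as a per-sample Python sketch (not a transcription of either patch; the function name is hypothetical). Because it inspects every sample, a toggle can happen on any sample, with no block-size limit.

```python
def flipflop(x, start=1.0):
    """Output +1/-1, flipping sign whenever the input decreases from one sample
    to the next (for example, each time a phasor wraps from near 1 back to 0)."""
    state, prev, out = start, None, []
    for xn in x:
        if prev is not None and xn < prev:
            state = -state
        out.append(state)
        prev = xn
    return out

# A phasor with a 4-sample period: the flip happens on the wrap sample.
ramp = [0.0, 0.25, 0.5, 0.75] * 2 + [0.0]
print(flipflop(ramp))  # [1.0, 1.0, 1.0, 1.0, -1.0, -1.0, -1.0, -1.0, 1.0]
```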

-

jameslo

posted in technical issues

@whale-av Thanks! While searching for [coords( documentation I stumbled across [goprect( buried in the dynamic patching help. It looks like it's relatively new and doesn't set the dirty flag.

And unless there's a difference in how canvases are messaged, it seems like the answer to my original question is that the [namecanvas] way lets you send to any accessible named canvas in the patch, whereas [pdcontrol] only works for the containing canvas and saves you the trouble of having to name it. Other than that, they seem equivalent.

-

jameslo

posted in technical issues

I'm sending a [donecanvasdialog....( message to a canvas to resize its GOP. It appears that I can either use [namecanvas] to create a receive on a particular canvas, or I can use the [sendcanvas...( message with [pdcontrol]. Which one should I use?

-

jameslo

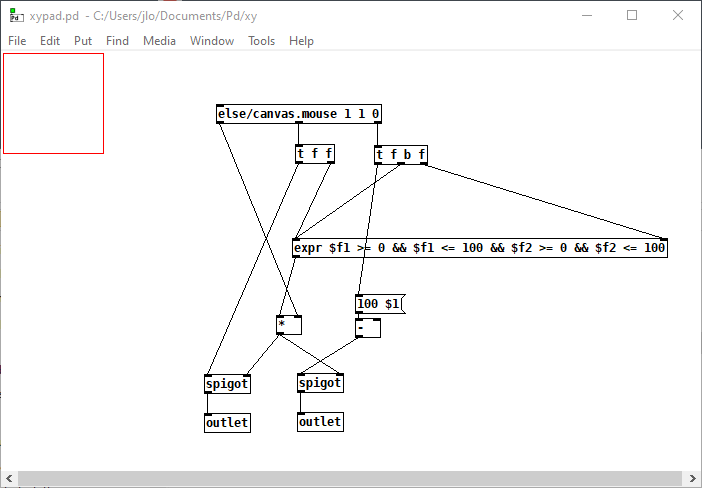

posted in technical issues

@ben.wes I like your mouse click logic + clipping better because you can drag outside the control to make things maximum or minimum.

For anyone else who is trying to understand your patch, I want to correct my faulty explanation. First, there are two canvases in the GOP area: one is the grey background that fills it, and the other is a smaller white foreground. The latter receives messages sent to $0-cnv (not the silly thing I wrote before), and its configured size has to be 1 so that vis_size can go to 1.

-

jameslo

posted in technical issues

@ben.wes Whoa! Look how similar my patch is and how little is missing:

I'm really more amazed by the missing part because it really drives home what I don't know! Wait, that didn't come out right. I mean it's like I'm standing next to the Empire State Building asking people "hey, where's the Empire State Building?"

I can't find (in 10 minutes of happy hour searching) where either the pos or vis_size messages are documented, so here are my guesses from looking at your code:

- sending to $0-cnv sends messages to the containing canvas

- the visibility window just happens to have the exact same color scheme as Reaktor?!!! Unbelievable coincidence.

- the visibility window is anchored relative to its upper left corner, so that's why you did the pos message that way

- and the size of the window extends down and to the right of the visibility window anchor
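Those guesses boil down to a bit of coordinate arithmetic, sketched here in Python. The helper names are hypothetical, and mapping the results onto the actual pos and vis_size message arguments is an assumption based on the guesses above, not on documentation. It also assumes canvas pixel y grows downward, as it does in Pd.

```python
def clip01(v):
    """Clamp to [0, 1], so dragging outside the square pins the value at an edge."""
    return max(0.0, min(1.0, v))

def xy_from_mouse(mx, my, size):
    """Mouse position in pixels, relative to the square's top-left corner, to xy
    values in [0, 1]. Pixel y grows downward, so it's flipped to make y grow upward."""
    return clip01(mx / size), clip01(1 - my / size)

def foreground_rect(x, y, size):
    """Top-left corner and (width, height) of a rectangle pinned to the lower-left
    corner of a size-by-size square, assuming the rectangle is positioned by its
    top-left corner and extends down and to the right."""
    width, height = x * size, y * size
    return (0.0, size - height), (width, height)

x, y = xy_from_mouse(50, 25, 100)
print(x, y, foreground_rect(x, y, 100))  # 0.5 0.75 ((0.0, 25.0), (50.0, 75.0))
```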

How did I do, professor? All kidding aside, thanks so much.

-

jameslo

posted in technical issues

This array of squares is allegedly from Reaktor 6, and they're xy controls. The lower-left-justified white rectangle denotes where in the grey square the control point is.

How would you recommend I approach making something like this? I'd like the enclosing square to be the GOP of an abstraction. I made something that gets the xy coordinates using else/canvas.mouse, but it doesn't display the current xy value. Do I have to try to learn data structures (again)?

PS: the actual square/rectangle colors don't matter to me. I don't see an easy way to paint the GOP window a color other than white.