I/O errors in Pd

@whale-av: that's interesting.

Details about my system and configuration:

OS: Arch Linux

pd-version: Pd-0.49.0 ("") compiled 17:22:56 Sep 26 2018

pd config:

audioapi: 5

noaudioin: False

audioindev1: 0 2

audioindevname1: JACK

noaudioout: False

audiooutdev1: 0 2

audiooutdevname1: JACK

audiobuf: 11

rate: 41000

callback: 0

blocksize: 256

midiapi: 0

nomidiin: True

nomidiout: True

path1: /home/samuel/.sgPD

npath: 1

standardpath: 1

verbose: 0

loadlib1: sgCLib/sgScriptLib

loadlib2: sgCLib/sgInputC

loadlib3: zexy

nloadlib: 3

defeatrt: 0

flags:

zoom: 1

loading: no

sound-server: jackd

jack config:

> jack_control dp

--- get driver parameters (type:isset:default:value)

device: ALSA device name (str:set:hw:0:hw:0)

capture: Provide capture ports. Optionally set device (str:set:none:hw:0)

playback: Provide playback ports. Optionally set device (str:set:none:hw:0)

rate: Sample rate (uint:set:48000:44100)

period: Frames per period (uint:set:1024:256)

nperiods: Number of periods of playback latency (uint:set:2:2)

hwmon: Hardware monitoring, if available (bool:notset:False:False)

hwmeter: Hardware metering, if available (bool:set:False:False)

duplex: Provide both capture and playback ports (bool:set:True:True)

softmode: Soft-mode, no xrun handling (bool:set:False:False)

monitor: Provide monitor ports for the output (bool:set:False:False)

dither: Dithering mode (char:set:n:n)

inchannels: Number of capture channels (defaults to hardware max) (uint:notset:0:0)

outchannels: Number of playback channels (defaults to hardware max) (uint:notset:0:0)

shorts: Try 16-bit samples before 32-bit (bool:set:False:False)

input-latency: Extra input latency (frames) (uint:notset:0:0)

output-latency: Extra output latency (frames) (uint:notset:0:0)

midi-driver: ALSA MIDI driver (str:notset:none:none)

root-priority: Hmm, I guess not. I'll try your suggestion and see if it helps!

Configuration is pretty standard, I guess.

My impression is that certain events in my patch cause some messages to be sent to multiple destinations, which causes Pd to get out of sync.

It's somewhat difficult to isolate the problem(s) in a small example, since I am using my own library of abstractions for parsing/generating recursively structured messages and for connecting objects automatically/dynamically. I use this, e.g., to let one object track the properties of another. It's nothing too fancy, all just native Pd.

The number and length of the messages are still very moderate, so it's disappointing to see Pd struggle already.

(E.g., a "sample recorder" records audio and updates the "sample" object by sending it the length of the recording in ms; the "sample" object in turn distributes this message to ~3 "sample player" objects.)

I will try to make a small example that shows the issues I am facing.

Thank you for your help so far!

Any suggestions are welcome.

Some questions regarding a loop station

@whale-av

I'm using an RME Fireface UC with its own ASIO driver on Win7/10. In Purr Data or vanilla I can set the latency to 2 ms as well, but the round-trip latency tester gives me the same results for 2 to 5 ms (around 440 samples, measured with a cable). With a 48-sample buffer instead of 64 on my soundcard I get even worse values.

But in Pd-extended it just doesn't work. I don't know why, but as it's not updated anymore, I prefer working with vanilla and Purr Data.

Anyway, the way I'm looping, latency doesn't really matter, but you have to know its length. Even with a latency of 50 ms or more you won't notice any offset in the recorded loops, as long as you know the exact length of the latency.

Tracks get recorded and shifted by the latency afterwards, so latency should be compensated.

You're right, latency won't add up in Pd. But I don't use an in-ear system. So if you change your reference from the metronome to a recorded track, and from that track to the next recorded one, the latency will add up unless it is compensated.
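A minimal sketch of the arithmetic behind this, assuming the latency is a fixed, known number of samples (e.g. the ~440 samples from the cable test) and that each new layer is played in time with the previous layer rather than the click. The function names are hypothetical, not part of the loop-machine patch:

```python
def uncompensated_offsets(latency: int, layers: int) -> list[int]:
    """Offset in samples of each layer relative to the metronome when every
    layer is played along with the previous (uncompensated) layer."""
    return [latency * (k + 1) for k in range(layers)]


def compensate(loop: list[float], latency: int) -> list[float]:
    """Rotate a recorded loop left by `latency` samples so the material lines
    up with the beat again. The first `latency` recorded samples (what was
    heard just before the loop start) wrap around to the end of the loop."""
    n = len(loop)
    if n == 0:
        return []
    shift = latency % n
    return loop[shift:] + loop[:shift]


print(uncompensated_offsets(440, 3))              # [440, 880, 1320]
print(compensate([0.0, 0.0, 1.0, 2.0, 3.0], 2))   # [1.0, 2.0, 3.0, 0.0, 0.0]
```

At 44.1 kHz, 440 samples is roughly 10 ms, so three uncompensated layers already drift by about 30 ms; rotating each recording by the measured latency keeps every layer aligned to the original click.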

Xaver

Some questions regarding a loop station

I changed my first post and uploaded a zip at the end.

Works with Purr Data or pd (plus zexy)

@whale-av

Thanks for your patch. I tested it with Pd-extended, but I get latency issues. When I record myself saying something like "tik" on the beat, the "tik" is heavily delayed relative to the metronome on playback. But in Pd-extended I can't set the latency lower than 24 ms, so there might be some issues with Pd-extended on my machine. (Pd or Purr Data let me set the latency to 5 ms without problems.)

You wrote: "The biggest problem with (not noticing) an edit will be a difference of volume (power). Our ears are very sensitive to that.

The second biggest problem will be the quality... timbre... but as you say, a crossfade could help. But the bigger the difference, the longer the crossfade needs to be."

I thought about starting the recording a little earlier and stopping it somewhat later, so I can crossfade over these extra samples. Maybe it's easier than I thought.

@LiamG

Thanks. How can I trigger between blocks? I thought messages are only sent once per block, aren't they?
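In Pd, control messages are handled between DSP blocks, but as far as I know [vline~] interprets its delay argument with sub-block (sample) accuracy, which is the usual way to start something "between" blocks. A small sketch of where a given time falls relative to the block grid, assuming 44.1 kHz and the default block size of 64; the helper name is hypothetical:

```python
SR = 44100    # assumed sample rate
BLOCK = 64    # Pd's default DSP block size


def block_and_offset(time_ms: float, sr: int = SR, block: int = BLOCK) -> tuple[int, int]:
    """Return (block index, sample offset inside that block) for a time in ms."""
    sample = round(time_ms * sr / 1000.0)
    return divmod(sample, block)


print(BLOCK / SR * 1000)        # duration of one block in ms, about 1.45
print(block_and_offset(500.0))  # (344, 34): sample 22050 is 34 samples into block 344
```

A message-only approach can only act at the start of block 344 here, while a ramp scheduled with [vline~] can start at offset 34 inside it.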

About 3): I was wondering whether it is a problem not to be able to set the exact metronome tempo.

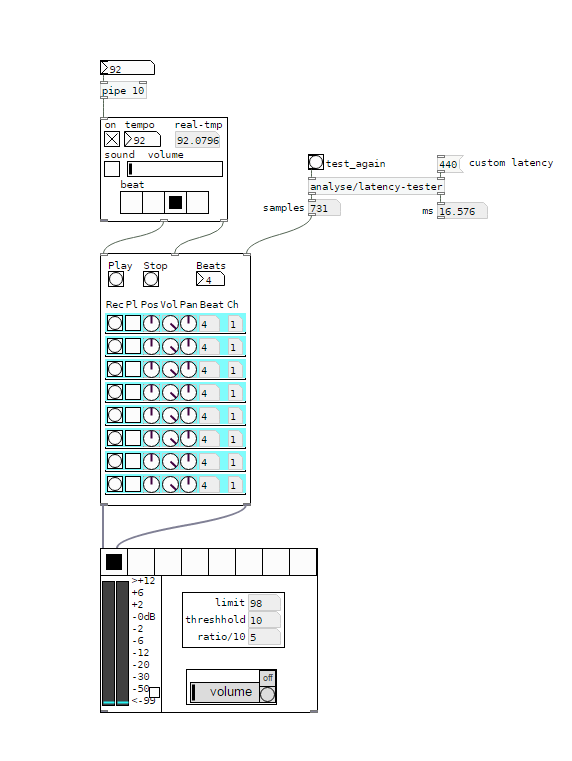

And here's a little screenshot to see what it looks like:

I would say anything above 5 ms of latency between the metronome and some voices starts to feel strange. And when you change your reference (say you start recording with the metronome, then switch to the first recorded line as the reference, then to the second, etc.), the latency will add up and keep increasing.

I wonder how latency is treated on hardware loop stations.

And I'm still asking myself how latency should be treated to get it right.

Regards,

Xaver

Some questions regarding a loop station

Hi,

I'm creating a loop station and I've got several questions about different issues.

- Latency

- Fading/Cutting recorded samples at start and end

- Metronome settings

1) Latency

I'm using the latency tester from the repo.

http://www.pdpatchrepo.info/patches/patch/92

Latency can be measured with different methods:

a ) Connecting the output of the soundcard directly to the input with a cable. This way the lowest possible latency is measured (around 440 samples in my case).

b ) Measuring via microphone and speakers. Latency will be higher than with a ). Also, the greater the distance between mic and speakers, the higher the latency will be.

Which calculation should be used?

(To me it seems logical to use the latency measured by b ), as that is the method that reflects the real circumstances. But I'm not sure, and it's very hard to tell by what I hear.)
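One way to see how a ) and b ) relate: method b ) is roughly method a ) plus the time sound needs to travel from the speaker to the mic, about 2.9 ms per metre. A sketch, assuming 48 kHz (the sample rate isn't stated above) and 343 m/s for the speed of sound in air at room temperature:

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumption)


def samples_to_ms(samples: float, sr: float) -> float:
    """Convert a latency in samples to milliseconds."""
    return samples * 1000.0 / sr


def acoustic_delay_ms(distance_m: float) -> float:
    """Time for sound to travel `distance_m` metres through air."""
    return distance_m / SPEED_OF_SOUND * 1000.0


def total_latency_ms(loopback_samples: float, sr: float, distance_m: float) -> float:
    """Estimated method b) result: cable loopback latency plus air path."""
    return samples_to_ms(loopback_samples, sr) + acoustic_delay_ms(distance_m)


print(samples_to_ms(440, 48000))          # about 9.17 ms
print(acoustic_delay_ms(1.0))             # about 2.92 ms per metre
print(total_latency_ms(440, 48000, 1.0))  # about 12.08 ms
```

Which number to compensate depends on where the performer hears the reference: the air path only matters for what reaches the mic, so a different mic/speaker setup on stage would change the b ) value but not the a ) value.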

2) Fading/Cutting recorded samples at start and end

When the recorded sample contains a phrase that is completely finished before the next one starts, doing a fade with [vline~] sounds OK. But when you record a continuous sound (like a string pad), you will always hear the fade of [vline~].

Compared to Reaper: cutting a sound and looping it results in a continuous sound. To me it seems like some kind of crossfade between the beginning and the end of the sample is done.

Does someone know more about this? How should it be done correctly?

Crossfading seems to be quite a difficult task to me, as a lot of calculation has to be done (recording has to start a bit earlier and stop later, sync has to start earlier, and so on).
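The crossfade can be simpler than it looks if the recording just runs a little past the loop end (a post-roll). A minimal sketch, not the method used in the patch: the first `fade_len` samples of the loop are crossfaded from the post-roll (what followed the loop end) into the loop start, so the wrap from the last sample back to the first is continuous. Equal-power (sine/cosine) gains are an assumption; names are hypothetical:

```python
import math


def seamless_loop(rec: list[float], loop_len: int, fade_len: int) -> list[float]:
    """Build a `loop_len`-sample loop from a recording that continues for at
    least `fade_len` samples past the loop end."""
    if len(rec) < loop_len + fade_len:
        raise ValueError("recording needs fade_len samples of post-roll")
    if fade_len > loop_len:
        raise ValueError("fade longer than loop")
    out = rec[:loop_len]                  # slice is a copy; rec is untouched
    for i in range(fade_len):
        t = i / fade_len                  # 0 -> 1 across the fade
        fade_in = math.sin(t * math.pi / 2)
        fade_out = math.cos(t * math.pi / 2)
        # Blend the loop start with what was played right after the loop end.
        out[i] = rec[i] * fade_in + rec[loop_len + i] * fade_out
    return out


rec = [float(i) for i in range(12)]       # stand-in for recorded audio
loop = seamless_loop(rec, 8, 4)
print(loop[0])                            # 8.0: continues where rec[7] left off
```

Equal-power gains suit unrelated material at the seam; for strongly correlated material (the same sustained note on both sides), linear gains may avoid a bump in level. In this variant only the stop has to be extended, not the start.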

3) Metronome settings

This loop station depends on a metronome and works similarly to Ableton Live. As Pd uses blocks of 64 samples, the tempo is corrected to one that corresponds to an array whose size is a multiple of 64. This way, playback can easily be kept in sync.
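A sketch of that correction, assuming it is done per beat (the patch might quantize per bar instead) and that the sample rate is 44.1 or 48 kHz; the function name is hypothetical:

```python
def quantize_tempo(bpm: float, sr: int = 44100, block: int = 64) -> tuple[float, int]:
    """Return (corrected bpm, samples per beat) so that one beat spans a
    whole number of `block`-sample DSP blocks."""
    samples_per_beat = sr * 60.0 / bpm
    blocks = max(1, round(samples_per_beat / block))
    n = blocks * block
    return sr * 60.0 / n, n


print(quantize_tempo(120))          # about 119.84 bpm, 22080 samples per beat
print(quantize_tempo(120, 48000))   # (120.0, 24000): already a multiple of 64
```

At 44.1 kHz the correction for 120 bpm is about 0.14 %, and since every layer follows the same corrected metronome, the loops stay in sync with each other.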

I'm not sure about this. Is it a good idea to do it like this?

But changing this so that the tempo can be chosen freely will result in much more complicated calculations. Is it worth it?

Thanks for any comments in advance,

Xaver

My patch is still in development and can be found here:

https://github.com/XRoemer/puredata/tree/master/loop-machine

(To download from GitHub, go up one folder and use the download/clone button.)

https://github.com/XRoemer/puredata/tree/master

TouchOSC direct USB connection finally possible with Midimux?

Hi folks,

I'm posting here on the theory (and also based on various threads I've seen here & around the internet) that I'm not the only PD user who is interested in using TouchOSC on an iPad to control PD on a Mac over a stable, wired USB connection rather than a sketchy ad-hoc network (which can suffer latency & dropouts when used in venues/areas with wifi interference, and prevents TouchOSC from being a reliable live performance interface).

Most of my searching turned up the same answer: networking over USB is not possible without jailbreaking the iOS device & installing a tethering app (I don't have the option of jailbreaking due to compatibility concerns with other apps I use professionally). I also discovered a free app called midimittr which creates a MIDI bridge with a server application on the OS X side via USB, which is great, but alas my control setup is dependent on OSC messages rather than MIDI.

Now for the (almost) exciting development: far down in search results I learned of the $7 Midimux app/server, which promises both MIDI and OSC connectivity directly via the standard Lightning-to-USB cable, no jailbreaking required! After buying the app, installing the server, configuring my firewall, and checking port settings on all sides, I was thrilled when I was able to send OSC messages from PD to TouchOSC on the iPad! But... no such luck in the other direction: TouchOSC cannot send to PD. I've tried a variety of port/hostname/IP settings, and fiddled with all of the OSC/UDP receive objects in PD to see if any connection is being made in the iPad->Mac direction, with no success. The MIDI part of the app seems to work just fine both ways.

I am not proficient in advanced networking, so I don't have any other methods for testing to see what the issue may be. I also realize that the problem almost certainly lies on the Midimux/TouchOSC side of things rather than with PD, but as mentioned before, I figure there must be other PD users who would be happy to learn of the potential for a wired TouchOSC interface, and it seems the possibility may be tantalizingly close. If anyone has any thoughts/experiences/suggestions on the subject, I'd be delighted to hear them.
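One simple test that doesn't involve Pd at all: run a bare UDP listener on the Mac and see whether anything from the iPad arrives. A minimal sketch; port 8000 is only an assumption (use whatever outgoing port TouchOSC is set to), and Pd should be closed first, since usually only one program can bind a given UDP port:

```python
import socket


def open_listener(port: int, host: str = "0.0.0.0") -> socket.socket:
    """Bind a UDP socket; 0.0.0.0 listens on every network interface."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def osc_address(packet: bytes) -> str:
    """Return the first null-terminated string of a packet: the address
    pattern of an OSC message, or '#bundle' for an OSC bundle."""
    end = packet.find(b"\x00")
    if end == -1:
        end = len(packet)
    return packet[:end].decode("ascii", errors="replace")


def listen(port: int, count: int = 10, timeout: float = 30.0) -> None:
    """Print sender, OSC address and size of up to `count` packets."""
    sock = open_listener(port)
    sock.settimeout(timeout)
    try:
        for _ in range(count):
            data, addr = sock.recvfrom(4096)
            print(addr, osc_address(data), f"{len(data)} bytes")
    except socket.timeout:
        print("nothing received")
    finally:
        sock.close()
```

Call `listen(8000)` and move a control on the iPad. If nothing shows up here either, the packets aren't reaching the Mac at all (firewall, IP/port settings, or the Midimux connection), which rules Pd out. The same test applies to the hosted-network case below.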

Thanks!

MIDI Controller experiences/suggestions

@whale-av, any experience using TouchOSC on a hosted/ad-hoc network?

I can get TouchOSC and my laptop to communicate whilst they are both on the same Wi-Fi (internet) network.

The problem is that I want to use a hosted network (WLAN), since for live performance you can't depend on the internet.

So I have manually configured a hosted network on my laptop, and my tablet can connect to it with no problem. TouchOSC can find TouchOSC Bridge, etc. The issue is that my laptop is not receiving packets from my tablet. I have tried with both Pure Data and Renoise.

The issue is not with TouchOSC, because when I send packets while my tablet and laptop are on the same Wi-Fi network, it works fine.

So the problem is with the hosted network....

I have tried configuring an IP both automatically and manually, to no avail.

I am not sure if this matters, but when I use a normal internet connection, a default gateway is configured; with the WLAN hosted network there is none. I have also tried to configure one manually, but had no success with my one attempt.

I am sure there is some setting that I am unaware of that needs fixing..

Edit: typing specs as we speak

Asus F550l

Windows 8 64 bit

Intel(R) Core(TM) i7-4500 CPU @ 1.80 GHz 2.40 GHz

3.89 GB usable RAM out of 4.0 GB

The tablet is an ASUS ZenPad (Android); I'll write the specs later.

If anyone else has any ideas/suggestions/experience I am all ears...thxx

Low audio latency in windows 7

Hi all, I'm struggling a bit with audio latency in Pd (Windows), so I wanted to get a clearer picture.

Other applications seem to perform quite well (Reaktor), but in Pd I'm experiencing serious problems.

I did a simple test, the result is here:

This is the test setup:

- Pd-extended 0.42.5

- pd patch: 7.stuff/tools/latency.pd, measurement quite precise (Miller says ~1.5 ms)

- audio board: Cakewalk Sonar VS-100 (roland), USB 2.

- ASIO4ALL driver (portaudio)

- line out 1 connected with a jack cable to line in 1 (mic)

- windows 7 (64 bits)

Pretty instructive, at least for me. I used a Scope~ object to see the output pulse fed back into the input of the audio board:

The "sharp" pulse is the one generated by the patch; the "blurred" one that follows is the one fed back into the audio board.

The distance between the two is the "latency" of the system.
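Reading that distance off Scope~ by eye works, but the same idea can be automated: slide the sent pulse along the recorded signal and take the lag where they match best (a plain cross-correlation). A sketch, assuming both signals have been captured as arrays at the same sample rate; the function name is hypothetical:

```python
def estimate_latency(sent: list[float], recorded: list[float], max_lag: int) -> int:
    """Return the lag in samples (0..max_lag) at which `recorded` best
    matches `sent`, using a plain cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(s * recorded[i + lag]
                    for i, s in enumerate(sent)
                    if i + lag < len(recorded))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# A short pulse, and a weaker, smeared copy of it arriving 40 samples later.
sent = [0.5, 1.0, 0.5] + [0.0] * 97
recorded = [0.0] * 40 + [0.25, 0.5, 0.25] + [0.0] * 57
print(estimate_latency(sent, recorded, 60))   # 40
```

Divide the lag by the sample rate to get seconds. Because the whole pulse shape is compared, a "blurred" return pulse (e.g. from poor cables) still gives a stable answer; if the interface inverts polarity, compare `abs(score)` instead.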

In summary, these are the major results I found:

- lowest true (measured) usable latency ~30 ms, corresponding to "delay (msec)" set to ~8 ms

- lowest true latency ~20 ms, but unusable (audio "destroyed"), corresponding to "delay (msec)" set to ~3 ms

- the original hardware driver is totally useless; only ASIO4ALL seems to work OK (why? does anyone have an idea?)

- I probably used very bad cables (see the bad shape of the pulse following the one created by the latency patch)

- changing the ASIO4ALL buffer size didn't change anything in terms of latency (why?)

Note: the only way I was able to lower the "delay (msec)" value to a few ms was by using the ASIO4ALL driver.

I'd like to hear about your experience (I read about Domien playing "delayed"), and whether anyone has succeeded in lowering the latency on Windows to unnoticeable values (~10 ms or even less).

Alberto

PD sending to two devices?

You can assign discrete inputs and outputs, but not multiple outputs across different audio devices. Sound software has to access sound hardware through its drivers, and it can't handle two sets of calls to two different drivers (if someone has the proper tech jargon, please step in).

There are ways of cheating but they all stink in some way:

On windows: VAC/audio repeater combo

You can use Virtual Audio Cable (VAC) to create virtual devices that you can use for input and output between software. You can piggy-back that audio onto different hardware devices by using the audiorepeater utility. So you can set up VAC to make two cables (VAC1 and VAC2), and PD will use VAC1 and VAC2 as outputs. (Since VAC1 and VAC2 aren't hooked up to real hardware, you don't hear anything yet.) Then you run two instances of audiorepeater: one routes VAC1 to 'Onboard speaker out' and the other routes VAC2 to 'USB audio device out'.

Downside: Latency, Latency, Latency

The sound is delayed at each step in the chain, and the audiorepeater latency can only go so low before you get scratchy static and dropouts.

On Linux: ALSA virtual device

You can make a virtual soundcard using ALSA that combines the outputs of two audio cards into one 'virtual' device, and then set JACK to output to that device (the setup is too complex to explain here).

Downside: Latency/Timing

Each soundcard has its own clock, and there's no way to keep them in sync, so you can't control the latency, and you'll get clicks, pops, dropouts, and tons of underruns.
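A quick sketch of why the timing drifts: two cards both nominally running at 48 kHz are each off by some parts per million (ppm), so one slowly gains samples on the other. The ppm figures below are illustrative assumptions, not measured values:

```python
def drift_samples(sr: float, ppm_a: float, ppm_b: float, seconds: float) -> float:
    """Samples by which two clocks with nominal rate `sr` diverge after
    `seconds`, given each clock's error in parts per million."""
    return sr * abs(ppm_a - ppm_b) * 1e-6 * seconds


# Hypothetical cards: one 20 ppm fast, one 30 ppm slow, running for an hour.
d = drift_samples(48000, 20, -30, 3600)
print(round(d))          # 8640 samples
print(d / 48000)         # about 0.18 s of accumulated offset
```

Without some form of resampling (as far as I know, macOS aggregate devices offer a "drift correction" option for exactly this), the buffers between the two cards eventually over- or underrun, which is where the clicks and dropouts come from.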

On Mac OSX:

I haven't tried it, but I believe you can also make an aggregate device.

There is also a VAC/audiorepeater type program you can use called Soundflower, but like VAC it's really only for routing sound between apps, not between hardware.

Long story short: between the latency introduced by the software and the clock timing differences between soundcards, you can't really get anything usable.

TouchOSC \> PureData \> Cubase SX3, and vice versa (iPad)

So, tonight I have sussed 2-way comms between TouchOSC on my iPad and Cubase SX3, and thought I'd share this for those who are struggling to get their DAW to talk back to the TouchOSC app. These patches translate OSC to MIDI, and MIDI back to OSC.

Basically, when talking back to OSC your patch needs to use:

[ctrlin midictrllr# midichan] > [/127] > [send /osc/controller $1] > [sendOSC] > [connect the.ipa.ddr.ess port]

So, in PD:

[ctrlin 30 1] listens to MIDI controller 30 on MIDI channel 1 and gets a value between 0 and 127. This value is divided by 127, as TouchOSC expects a value between 0 and 1. We then specify the OSC controller to send it to, plus the result of the maths ($1), and send the complete OSC packet to the specified IP address and port.
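For anyone curious what actually goes over the wire, here is a sketch of the same chain outside Pd: scale the controller value by 1/127 and encode a single-float OSC message (address string and type tag each null-terminated and padded to 4 bytes, then a big-endian float32). The address "/1/fader1" is a hypothetical example, not one taken from the LogicPad layout:

```python
import struct


def osc_pad(b: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    b += b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Encode an OSC message with one float32 argument."""
    return osc_pad(address.encode("ascii")) + osc_pad(b",f") + struct.pack(">f", value)


def cc_to_touchosc(cc_value: int) -> float:
    """Scale a MIDI controller value (0..127) to TouchOSC's 0..1 range."""
    return cc_value / 127.0


msg = osc_float_message("/1/fader1", cc_to_touchosc(127))
print(len(msg))   # 20 bytes: 12 (address) + 4 (",f") + 4 (float)
```

Sending `msg` with a UDP socket to the iPad's IP and TouchOSC's incoming port should have the same effect as the [sendOSC] path; the scaling is the [/127] step, and the padding is what [sendOSC] handles for you.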

This can be seen in LogicPad.vst-2-osc.pd

All files can be found in http://www.minimotoscene.co.uk/touchosc/TouchOSC.zip (24Mb)

This archive contains:

MidiYoke (configure via Windows > Start > Control Panel)

PD-Extended

touchosc-default-layouts (just in case you don't have LogicPad)

Cubase SX3 GenericRemote XML file (to import).

Cubase SX3 project file.

2x pd files... osc-2-vst, and vst-2-osc. Open both together.

In PD > MIDI settings, set its MIDI input and MIDI output to different channels (e.g. output to 3, input to 2). In Cubase > Device Settings > Generic Remote, set input to 3 and output to 2.

Only PAGE ONE of the LogicPad TouchOSC layout has been done in the vst-2-osc file.

Am working on the rest and will update once complete.

As the layout was designed for Logic, some functions don't work as expected, but most do, or have been remapped to do something else. Will have a look at those once I've gotten the rest of the PD patch completed.

The patches are possibly not done in the most efficient way... sorry. This is a case of function over form, but if anyone wants to tweak them and share a more efficient way of doing it, that would be appreciated!

Hope this helps some of you...

PD Extended crash when trying a custom layout on TouchOSC

Hello there,

I've made my desired TouchOSC layout, but now I'm frustrated, because every time I load the layout and try to pair it with PD Extended, PD crashes.

I have made a layout with TouchOSC Editor and with the TouchOsc2Pad tool provided on the hexler website (the TouchOSC creators).

Every time I touch any button or knob in TouchOSC, PD Extended immediately crashes without an error message.

I have provided the PD file and my TouchOSC layout.

Thank you.

Please help me out.