Optimizing pd performances to fit an RPI 3

Well, I don't see what the main difference is from the result I showed before; maybe now we know that the vfprintf function call is linked to printf_chk, which I hadn't seen before while profiling. We also got a malloc call and some dac call, so if you have any clue what that could mean, it would be great. I have to agree that profiling isn't easy (it's my first time doing it ^^), but trying to fix the problem at such a low level could be the best way to find a real fix for this bottleneck.

@mnb Hello! First, thank you for uploading your patches, they're great! To answer you: no, I'm using the groovebox 2, the one you recommend, so the problem isn't coming from the filter object. But since you coded this patch, maybe you could run it on your config and report here how much CPU it takes on your side. That way we could work out whether the problem comes from the code or from my hardware (I remember reading somewhere that embedded Intel GPUs can give crappy results with pd, so with a bit of luck I could find some kind of driver fix for this issue).

@EEight Well, once I get something running fine on my laptop I'll port it to the RPI, and of course I'll use -nogui, and even switch~ if possible. But with the two versions of my patch I get either 50% of my first core (with a 2 GHz CPU) or, with v2 of the patch, 4 cores peaking over 50%. Considering that my Pi uses a Broadcom BCM2837, with 1.2 GHz on four cores, and that the frequency is locked by the system at around 900 MHz if I'm right, it's just impossible to run my patch on the RPI right now. I thought that, in theory, by splitting the audio processing to spread the calculation over the processor, I would get 1 GHz of calculation divided by four, so I could reach 250-300 MHz on each core, which could run very smoothly on an RPI. But because something is going wrong somewhere, I've just multiplied the audio processing resources by FOUR! And -nogui (which I already use on my laptop) or other optimization tips such as latency don't help the CPU on my laptop, so I don't even want to think about how effective they would be on an RPI. I also noticed that when I increase the latency of my Pure Data instances, CPU use goes up! So it's not something I can use for optimization. And I didn't find a way to run pd on ALSA on my Pi; the only way I could get it to run without crashing when DSP is on is with JACK.

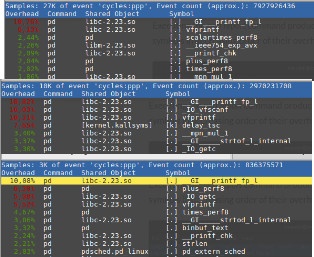

@Nicolas Danet Thanks for the tip, I didn't know about Perf on Linux, it's very handy. I ran a test on each patch/subpatch and got the following:

So I will search for -GI__printf_fp_l, _IO-vfscanf, vfprintf, plus_perf8 and delay_tsc to see what they correspond to in PD. But if GI_printf and vfprintf are calls used by PD for the GUI objects, then @alexandros was right and I will replace all GUI objects with text ones.

@EEight My problem with switch~ is not that I don't know how to use its syntax, but that splitting each sound-processing part of my patch would divide it into at least 16 different subpatches (probably more). And from my experiments with the pd~ object, I noticed that running 9 subpatches took more resources than running just 3. Considering this, I'm not sure that implementing switch~ in my patch will bring a significant gain in processing power. And if it does, it would only be efficient while I use a few tracks out of the 8-track groovebox I'm working on, disable FX, etc.; as soon as I want to use the full capabilities of the patch it would crash, because all the audio processing patches would run with the DSP on and the CPU load would rise again to what I have now.

Maybe I didn't explain it very clearly, so I'll quickly explain it again. My main problem is that my initial patch (let's call it v1) uses 10-12% of CPU, and my mother patch for the different processes (let's call it v2) uses 18-20%, plus subpatch 1 running at 12-15% and subpatch 2 at 8-10%. So v1 is running at 50% on one core, and v2 and its subpatches are running at 30-40%, with peaks at 60%, on four cores. Which is kind of the worst optimization in the history of computing ^^. Still, given the Perf results, I have something to investigate to see where the problem comes from. I'll post an update as soon as I find out what to do.

EDIT: I did a bit of searching, and it seems that IO-getc, GI-printf, IO-vfscanf and vfprintf are all functions used to manage streams of data. I'm not sure, but I'm sending the values from my GUI/input patch to my audio processing patch via netsend; maybe the way I'm doing it is not optimal, and maybe I should switch to OSC because it's more efficient for streaming large numbers of values. Maybe I messed up the way I pass values into the subpatches (because if my netsend method is messy, that doesn't explain why I see the same problematic function calls in the subpatches). It could also be linked to the GUI elements, since data streaming could be used in those. But that doesn't explain why the function call shows up in my mother patch, which isn't using any GUI elements. To get better results I will look directly in the PD source code.

EDIT 2: I found vfprintf in the PD source file "pa_debugprint.c", so it doesn't validate the few hypotheses I made before. But in my pd window I get repeated messages like "output snd_pcm_delay failed: Unknown error 476 astate 3"; they could be the reason for this. I also found plus_perf8 in "d_arithmetic.c"; it seems related to the arithmetic operators (/ * + -). I use them to set values in my synth etc., so getting rid of them would be very difficult if not impossible.

Hello everyone, I'm currently working on some kind of "fork" of a patch I found (it's a groovebox patch coded by Martin Birkmann). It consumes quite a lot of CPU: on my current laptop, equipped with an Intel® Core™ i3-6006U CPU, it runs at up to 50% on the first core. Since I want to be able to run it on a Raspberry Pi 3, running the patch at about 1 GHz on a single core is just not possible. So I looked into how I could optimize CPU use: I tried some Pure Data command-line flags, tried to simplify and hide most of the GUI, and even tried to split up the patch into subprocesses with pd~.

First, flags, priority changes, realtime, etc. don't do anything for CPU use. Secondly, hiding some of the GUI gains maybe 1% or 2% of CPU. Finally, I had great hopes that pd~ would spread the load across the processor, allowing me to run it on the RPI 3. But no: strangely, the mother patch of the subprocesses runs at 18-20% CPU use (about 60% on a single core), consuming more than the initial patch, which runs at 10-12% CPU use. And when I run the pd~ subprocesses they also grab a load of CPU; they consume about as much as the mother patch, which is quite the opposite of what I wanted...

So is it normal that using pd~ just increases the CPU time pd needs? Is there another way to split the audio processing across different pd instances so that the calculation is shared by different cores? Are there things I could improve in the audio processing section that would help lower CPU use?

I also have another problem: whenever I activate DSP on my RPI, Pure Data crashes. Does anyone here know why? What command should I use to run DSP without crashing on an RPI?

If you don't see any solution to my problem, maybe you can help me in another way? Since my problem is mainly optimisation, if you have recommendations for open source Pure Data groovebox patches that run well on low-spec systems, that would be great.

Building a Linux Desktop

@cheesemaster I don't think much matters with a modern computer.

This is interesting for Linux...... http://puredata.info/docs/faq/how-can-i-run-pd-with-realtime-priority-in-gnu-linux/?searchterm=linux audio but as it says...... probably out of date now with Jack.

You can set realtime priority for audio in Linux..... which helps a great deal..... but unless you are running [pd~] as well to spread load across cores Pd will only use one.

I have 6 Toshiba I5 Notebooks..... this.zip

that do everything for me on shows including running video and Pd at the same time with wireless osc control.

But I also have an I3 HD3000 machine (same model) that I run a show monitoring system on...... Pd based with massive amounts of OSC and monitor mixing and backing track playback........ in windows..... with no problems.

I paid $100-$200 each for them on Ebay.

However....... the fan noise is not great...... so don't buy any of those for your use.

Large RAM can be useful, and an SSD obviously, but processor speed is irrelevant for audio nowadays.

Ports...... USB...... more than one on-board hub..... because if you connect a USB1 device to the same hub running USB2 audio it will knock the whole hub down to USB1.

USB2 is enough for at least a 30in 30out soundcard...... avoid firewire as it will break expensively at some point.

Beware of Wi-Fi cards that could behave badly causing interrupts and so clicks.... that might not be true in Linux though..... but do some research.

Again..... USB...... Macs connect their keyboards and cd drives through an onboard hub so it is essential that they have a second one free for any soundcard. If they have a USB3 then that can be used for a USB2 soundcard though.

Native instruments only had advice for windows before, but have this for OSX...... which could be relevant being UNIX....... https://support.native-instruments.com/hc/en-us/articles/210296445-Mac-Tuning-Tips-for-Audio-Processing

David.

P.S. @Eeight has just come on line and is an expert so.........

PD's scheduler, timing, control-rate, audio-rate, block-size, (sub)sample accuracy,

Hello,

this is going to be a long one.

After years of using PD, I am still confused about its timing and scheduling.

I have collected many snippets from here and there about this topic, which all together are really confusing to me.

*I think it is very important to understand how timing works in detail for low-level programming …*

(For example the number of heavy jittering sequencers in hard and software make me wonder what sequencers are made actually for ? lol )

This is a collection of my findings regarding this topic, a bit messy and with confused questions.

I hope we can shed some light on this.

- a)

The first time, I had issues with the PD-scheduler vs. how I thought my patch should work is described here:

https://forum.pdpatchrepo.info/topic/11615/bang-bug-when-block-1-1-1-bang-on-every-sample

The answers were:

„

[...] it's just that messages actually only process every 64 samples at the least. You can get a bang every sample with [metro 1 1 samp] but it should be noted that most pd message objects only interact with each other at 64-sample boundaries, there are some that use the elapsed logical time to get times in between though (like vsnapshot~)

also this seems like a very inefficient way to do per-sample processing..

https://github.com/sebshader/shadylib http://www.openprocessing.org/user/29118

seb-harmonik.ar posted about a year ago , last edited by seb-harmonik.ar about a year ago

whale-av

@lacuna An excellent simple explanation from @seb-harmonik.ar.

Chapter 2.5 onwards for more info....... http://puredata.info/docs/manuals/pd/x2.htm

David.

“

There is written: http://puredata.info/docs/manuals/pd/x2.htm

„2.5. scheduling

Pd uses 64-bit floating point numbers to represent time, providing sample accuracy and essentially never overflowing. Time appears to the user in milliseconds.

2.5.1. audio and messages

Audio and message processing are interleaved in Pd. Audio processing is scheduled every 64 samples at Pd's sample rate; at 44100 Hz. this gives a period of 1.45 milliseconds. You may turn DSP computation on and off by sending the "pd" object the messages "dsp 1" and "dsp 0."

In the intervals between, delays might time out or external conditions might arise (incoming MIDI, mouse clicks, or whatnot). These may cause a cascade of depth-first message passing; each such message cascade is completely run out before the next message or DSP tick is computed. Messages are never passed to objects during a DSP tick; the ticks are atomic and parameter changes sent to different objects in any given message cascade take effect simultaneously.

In the middle of a message cascade you may schedule another one at a delay of zero. This delayed cascade happens after the present cascade has finished, but at the same logical time.

2.5.2. computation load

The Pd scheduler maintains a (user-specified) lead on its computations; that is, it tries to keep ahead of real time by a small amount in order to be able to absorb unpredictable, momentary increases in computation time. This is specified using the "audiobuffer" or "frags" command line flags (see getting Pd to run ).

If Pd gets late with respect to real time, gaps (either occasional or frequent) will appear in both the input and output audio streams. On the other hand, disk streaming objects will work correctly, so that you may use Pd as a batch program with soundfile input and/or output. The "-nogui" and "-send" startup flags are provided to aid in doing this.

Pd's "realtime" computations compete for CPU time with its own GUI, which runs as a separate process. A flow control mechanism will be provided someday to prevent this from causing trouble, but it is in any case wise to avoid having too much drawing going on while Pd is trying to make sound. If a subwindow is closed, Pd suspends sending the GUI update messages for it; but not so for miniaturized windows as of version 0.32. You should really close them when you aren't using them.

2.5.3. determinism

All message cascades that are scheduled (via "delay" and its relatives) to happen before a given audio tick will happen as scheduled regardless of whether Pd as a whole is running on time; in other words, calculation is never reordered for any real-time considerations. This is done in order to make Pd's operation deterministic.

If a message cascade is started by an external event, a time tag is given it. These time tags are guaranteed to be consistent with the times at which timeouts are scheduled and DSP ticks are computed; i.e., time never decreases. (However, either Pd or a hardware driver may lie about the physical time an input arrives; this depends on the operating system.) "Timer" objects which measure time intervals measure them in terms of the logical time stamps of the message cascades, so that timing a "delay" object always gives exactly the theoretical value. (There is, however, a "realtime" object that measures real time, with nondeterministic results.)

If two message cascades are scheduled for the same logical time, they are carried out in the order they were scheduled.

“
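As a quick check of the 1.45 ms figure in the quoted section 2.5.1: the duration of one block of audio is simply the block size divided by the sample rate. The helper below is only an illustrative sketch; `block_period_ms` is a made-up name and not part of Pd.

```c
#include <assert.h>

/* Duration of one block of audio in milliseconds.
 * Hypothetical helper for illustration only, not a Pd function. */
double block_period_ms(int block_size, double sample_rate_hz)
{
    assert(block_size > 0);
    assert(sample_rate_hz > 0.0);
    return 1000.0 * (double)block_size / sample_rate_hz;
}
```

Calling `block_period_ms(64, 44100.0)` gives roughly 1.45 ms, matching the manual, and `block_period_ms(64, 48000.0)` gives roughly 1.33 ms. This only describes how long one block of audio lasts; it does not by itself answer how messages are scheduled inside smaller [block~] sizes.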

[block~ smaller than 64] doesn't change the interval of message-control-domain-calculation?

Only the size of the audio-samples calculated at once is decreased?

Is this the reason [block~] should always be … 128 64 32 16 8 4 2 1, nothing inbetween, because else it would mess with the calculation every 64 samples?

How do I know which messages are handled in between blocksizes smaller than 64 and which are not?

How does [vline~] execute?

Does it calculate between sample 64 and 65 a ramp of samples with a delay beforehand, calculated in samples, too - running like a "stupid array" in audio-rate?

While sample 1-64 are running, PD does audio only?

[metro 1 1 samp]

How could I have known that? The helpfile doesn't mention this. EDIT: yes, it does.

(Offtopic: actually the whole forum is full of pd-vocabulary questions)

How is this calculation being done?

But you can „use“ the metro counts every 64 samples only, don't you?

Is the timing of [metro] exact? Will the milliseconds dialed in be on point or jittering with the 64 samples interval?

Even if it is exact the upcoming calculation will happen in that 64 sample frame!?

- b )

There are [phasor~], [vphasor~] and [vphasor2~] … and [vsamphold~]

https://forum.pdpatchrepo.info/topic/10192/vphasor-and-vphasor2-subsample-accurate-phasors

“Ive been getting back into Pd lately and have been messing around with some granular stuff. A few years ago I posted a [vphasor.mmb~] abstraction that made the phase reset of [phasor~] sample-accurate using vanilla objects. Unfortunately, I'm finding that with pitch-synchronous granular synthesis, sample accuracy isn't accurate enough. There's still a little jitter that causes a little bit of noise. So I went ahead and made an external to fix this issue, and I know a lot of people have wanted this so I thought I'd share.

[vphasor~] acts just like [phasor~], except the phase resets with subsample accuracy at the moment the message is sent. I think it's about as accurate as Pd will allow, though I don't pretend to be an expert C programmer or know Pd's api that well. But it seems to be about as accurate as [vline~]. (Actually, I've found that [vline~] starts its ramp a sample early, which is some unexpected behavior.)

[…]

“

- c)

Later I discovered that PD has jittery MIDI because it doesn't handle MIDI at a higher priority than everything else (GUI, OSC, message-domain, etc.)

EDIT:

Tried roundtrip-midi-messages with -nogui flag:

still some jitter.

Didn't try -nosleep flag yet (see below)

- d)

So I looked into the sources of PD:

scheduler with m_mainloop()

https://github.com/pure-data/pure-data/blob/master/src/m_sched.c

And found this paper

Scheduler explained (in German):

https://iaem.at/kurse/ss19/iaa/pdscheduler.pdf/view

which explains the interleaving of control and audio domain as in the text of @seb-harmonik.ar with some drawings

plus the distinction between the two (control vs audio / realtime vs logical time / xruns vs burst batch processing).

And the "timestamping objects" listed below.

And the mainloop:

Loop

- messages (var.duration)

- dsp (rel.const.duration)

- sleep

With

[block~ 1 1 1]

calculations in the control-domain are done between every sample? But there is still a 64 sample interval somehow?

Why is [block~ 1 1 1] more expensive? The amount of data is the same!? Is this the overhead which makes the difference? Calling up operations etc.?

Timing-relevant objects

from iemlib:

[...]

iem_blocksize~ blocksize of a window in samples

iem_samplerate~ samplerate of a window in Hertz

------------------ t3~ - time-tagged-trigger --------------------

-- inputmessages allow a sample-accurate access to signalshape --

t3_sig~ time tagged trigger sig~

t3_line~ time tagged trigger line~

--------------- t3 - time-tagged-trigger ---------------------

----------- a time-tag is prepended to each message -----------

----- so these objects allow a sample-accurate access to ------

---------- the signal-objects t3_sig~ and t3_line~ ------------

t3_bpe time tagged trigger break point envelope

t3_delay time tagged trigger delay

t3_metro time tagged trigger metronom

t3_timer time tagged trigger timer

[...]

What are different use-cases of [line~] [vline~] and [t3_line~]?

And of [phasor~] [vphasor~] and [vphasor2~]?

When should I use [block~ 1 1 1] and when shouldn't I?

[line~] starts at block boundaries defined with [block~] and ends in exact timing?

[vline~] starts the line within the block?

and [t3_line~]???? Are they some kind of interrupt? Shortcutting within scheduling???

- c) again)

https://forum.pdpatchrepo.info/topic/1114/smooth-midi-clock-jitter/2

I read this in the html help for Pd:

„

MIDI and sleepgrain

In Linux, if you ask for "pd -midioutdev 1" for instance, you get /dev/midi0 or /dev/midi00 (or even /dev/midi). "-midioutdev 45" would be /dev/midi44. In NT, device number 0 is the "MIDI mapper", which is the default MIDI device you selected from the control panel; counting from one, the device numbers are card numbers as listed by "pd -listdev."

The "sleepgrain" controls how long (in milliseconds) Pd sleeps between periods of computation. This is normally the audio buffer divided by 4, but no less than 0.1 and no more than 5. On most OSes, ingoing and outgoing MIDI is quantized to this value, so if you care about MIDI timing, reduce this to 1 or less.

“
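Read literally, the quoted help text describes the default sleepgrain as a clamp: a quarter of the audio buffer, kept between 0.1 and 5 milliseconds. The sketch below only restates that sentence in code; `default_sleepgrain_ms` is a hypothetical name, and the real computation in Pd's sources may differ in detail.

```c
#include <assert.h>

/* Default sleepgrain as described in the quoted help text:
 * audio buffer / 4, but no less than 0.1 ms and no more than 5 ms.
 * Hypothetical helper; not taken from Pd's source code. */
double default_sleepgrain_ms(double audiobuf_ms)
{
    double grain;
    assert(audiobuf_ms >= 0.0);
    grain = audiobuf_ms / 4.0;
    if (grain < 0.1)
        grain = 0.1;   /* lower bound from the help text */
    if (grain > 5.0)
        grain = 5.0;   /* upper bound from the help text */
    return grain;
}
```

Under this reading, an 8 ms buffer gives a 2 ms sleepgrain, and any buffer of 20 ms or more hits the 5 ms ceiling, which is why the help text suggests lowering the value by hand when MIDI timing matters.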

Why is there the „sleep-time“ of PD? For energy-saving??????

This seems to slow down the whole process-chain?

Can I control this with a startup flag or from within PD? Or only in the sources?

There is a startup flag for loading a different scheduler, but how to use it is not documented.

- e)

[pd~] helpfile says:

ATTENTION: DSP must be running in this process for the sub-process to run. This is because its clock is slaved to audio I/O it gets from us!

Doesn't [pd~] work within a Camomile plugin!?

How are things scheduled in Camomile? How is the communication with the DAW handled?

- f)

and slightly off-topic:

There is a batch mode:

https://forum.pdpatchrepo.info/topic/11776/sigmund-fiddle-or-helmholtz-faster-than-realtime/9

EDIT:

- g)

I didn't look into it, but there is:

https://grrrr.org/research/software/

clk – Syncable clocking objects for Pure Data and Max

This library implements a number of objects for highly precise and persistently stable timing, e.g. for the control of long-lasting sound installations or other complex time-related processes.

Sorry for the mess!

Could you please help me to sort things out a bit? Maybe some real-world examples would help, too.

Problem with running different Sample rates in different instances using [Pd~]

Hi all,

I'm trying to run different instances of my main patch using the [pd~] object using 2 different sample rates.

Main patch runs at 48khz:

1st) [pd~ -ninsig 1 -noutsig 1 -sr 48000]

2nd) [pd~ -ninsig 1 -noutsig 1 -sr 12000] ---> this runs at 48 kHz

Unfortunately the sample rate of the main patch that contains the 2 [pd~] objects as shown above affect the sample rate of the sub instances.

So if my main patch containing the 2 [pd~] is running at 48kHz the 2 sub instances run at 48kHz overriding the one set to work at 12kHz and vice versa if the main patch runs at 12kHz then the sub instance that is set to run at 48kHz runs at 12kHz.

Main patch runs at 48khz:

1st) [pd~ -ninsig 1 -noutsig 1 -sr 48000]

2nd) [pd~ -ninsig 1 -noutsig 1 -sr 12000] ---> this runs at 48 kHz

Main patch runs at 12kHz

1st) [pd~ -ninsig 1 -noutsig 1 -sr 48000] ---> this runs at 12 kHz

2nd) [pd~ -ninsig 1 -noutsig 1 -sr 12000]

do you have any idea how I can get the 2 instances to run at different samplerate~ ?

Thank you in advance!

Attached patch: Samplerate[Pd~]pd.pd

i/o-errors in pd

@EsGeh You should not be having these problems. At all. Except "resizing a table".

Even that should not cause a problem if it is hidden in a sub-patch and you are not reading from it as you resize.

I can run a 64ch in 64ch out mixer controlled by osc messaging from 64 tablets in Pd, at 3ms latency without a glitch (Extended on Windows7)

Are you running Pd at the same samplerate as your soundcard?

Are you trying for too small a buffer to improve latency?

Have you given Pd root priority (chmod 4755)?

Could you post some more detail..... os, soundcard, Pd version?

Chapter 2.5 ........ https://puredata.info/docs/manuals/pd/x2.htm/?searchterm=i/o error

explains what Pd gets up to, and why it is best to hide unused gui's, and there is more help on that site.

You can run more than one instance of Pd and communicate between them through ports, and that will sprout 2 instances of wish, which spreads the gui load as well. Whether your OS will run them all in the same processor I don't know. Recent OS's should automatically spread them about.

I have had problems trying to control a gui through more than 2 levels of gop.

If you wish to post a problem patch then we can see if it works well on our systems, which might narrow it down.

David.

Deferring messages for later processing in Puredata external?

Hi all,

Let's assume you have an external, with this kind of code in it:

...

typedef struct _MyExternal {

...

int var_a;

int var_b;

int var_c;

int var_d;

...

t_symbol* obj_name;

...

} t_MyExternal;

...

void MyExternal_calcandprint(t_MyExternal *x) {

x->var_d = x->var_a + x->var_b + x->var_c;

post("The external has obj_name %s with values %d (%d+%d+%d)", x->obj_name->s_name, x->var_d, x->var_a, x->var_b, x->var_c );

}

void MyExternal_seta(t_MyExternal *x, t_float f) {

x->var_a = f;

MyExternal_calcandprint(x);

}

void MyExternal_setb(t_MyExternal *x, t_float f) {

x->var_b = f;

MyExternal_calcandprint(x);

}

void MyExternal_setc(t_MyExternal *x, t_float f) {

x->var_c = f;

MyExternal_calcandprint(x);

}

...

class_addmethod(MyExternal_class, (t_method)MyExternal_seta, gensym("seta"), A_FLOAT, 0);

class_addmethod(MyExternal_class, (t_method)MyExternal_setb, gensym("setb"), A_FLOAT, 0);

class_addmethod(MyExternal_class, (t_method)MyExternal_setc, gensym("setc"), A_FLOAT, 0);

...

So, let's say I want to set these variables from PD, in a message like this:

[ ; <

[ recvobj seta 3; |

[ recvobj setb 4; |

[ recvobj setc 5; <

So, even if this content is in one message box, all of these message will be received individually, and so

- first MyExternal_seta will run, calling MyExternal_calcandprint
- then MyExternal_setb will run, calling MyExternal_calcandprint again
- then MyExternal_setc will run, calling MyExternal_calcandprint yet again

The thing is, these messages could come from different message boxes, but all sort of close in time, and this is the case I want to handle - I want each set* function to run individually as they do - but I'd want MyExternal_calcandprint to run only once, once all the variables have been set.

However it is kind of impossible to predetermine whether only a, or also b and c will be changed in a call. So I imagine, if there existed a function, say, pd_defer_ctrl which I could use like:

void MyExternal_setc(t_MyExternal *x, t_float f) {

x->var_c = f;

pd_defer_ctrl( 5, x->MyExternal_calcandprint );

}

it would help me with my problem, if it worked like this - if PD is not in "defer mode", then it enters it, and sets a threshold of 5 ms from now; then it adds MyExternal_calcandprint to a queue. Then when next set* message comes in, PD sees its already in "defer mode", sees it arrived before the threshold of 5 ms has expired - and so it first checks if MyExternal_calcandprint is already in the queue, and if it is, it does not add it again. Finally, once the threshold of 5 ms has expired, PD then runs all the functions in the defer queue.

Is there something like that I could use in a PD external?

EDIT: Turns out Max/MSP probably already has such an API function, because I can see in bonk~.c source:

...

#ifdef MSP

static void bonk_write(t_bonk *x, t_symbol *s)

{

defer(x, (method)bonk_dowrite, s, 0, NULL);

}

...

.... but I guess there is nothing like that for Pure Data...
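For what it's worth, the coalescing behaviour described above can be modelled in a few lines of plain C. This is only a standalone sketch under stated assumptions: the `Deferred` struct, `defer_calc` and `tick` are invented names, and logical time is passed in by hand so the code runs without Pd. In a real external the natural place to look would be Pd's clock functions in m_pd.h (`clock_new`, `clock_delay`, `clock_unset`), which this sketch does not use.

```c
#include <assert.h>
#include <stdbool.h>

#define DEFER_MS 5.0  /* threshold from the post above */

/* Hypothetical stand-in for the external's state. */
typedef struct {
    int var_a, var_b, var_c, var_d;
    bool pending;        /* is a deferred calc already queued? */
    double deadline_ms;  /* logical time at which it should run */
    int calc_count;      /* how many times the calc really ran */
} Deferred;

static void calc(Deferred *x)
{
    x->var_d = x->var_a + x->var_b + x->var_c;
    x->calc_count++;
}

/* Queue the calc once; later requests before the deadline are ignored. */
void defer_calc(Deferred *x, double now_ms, double delay_ms)
{
    assert(delay_ms >= 0.0);
    if (!x->pending) {
        x->pending = true;
        x->deadline_ms = now_ms + delay_ms;
    }
}

void set_a(Deferred *x, int v, double now_ms) { x->var_a = v; defer_calc(x, now_ms, DEFER_MS); }
void set_b(Deferred *x, int v, double now_ms) { x->var_b = v; defer_calc(x, now_ms, DEFER_MS); }
void set_c(Deferred *x, int v, double now_ms) { x->var_c = v; defer_calc(x, now_ms, DEFER_MS); }

/* Called by whatever drives time; runs the calc once the deadline passes. */
void tick(Deferred *x, double now_ms)
{
    if (x->pending && now_ms >= x->deadline_ms) {
        x->pending = false;
        calc(x);
    }
}
```

With three setters called at 0, 1 and 2 ms and `tick` called at 5 ms, the sum is computed exactly once. The design choice here is that the first setter fixes the deadline and later ones do not extend it; resetting the deadline on every setter would be the other obvious variant.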

Lissa Executable / ofxOfelia compile error (Solved)

@cuinjune I tried to compile the lissa seq patch. but when i open the executable it opens only a small empty window.

i also tried to compile a help patch for testing, with the same result.

but your example works fine(Win32Example).

Sysex program dump with random zeros

Okay; that's what I suspected you were doing. It is possible to use the MTP serial directly with Linux and the mtpav driver, though this requires a machine with true hardware parallel port access (USB adapters won't cut it) and is limited to MIDI output only, as the driver is a hack that was never finished. I used to run mine that way with a second USB MIDI interface connected to one or more of the MTP's unused routed MIDI outputs (there is, BTW, still no way to connect the MTP USB version directly to a Linux system, as it doesn't use a standard USB MIDI driver).

As far as the kernel is concerned, I've only ever used lowlatency or RT kernels for MIDI stuff. I don't know how a generic kernel would affect this problem but it's probably best avoided as they are not specifically built for multimedia.

Now read closely as this is where it becomes stupidly complicated.

First, make sure that the problem is internal to Pd. Your interfaces are probably not the issue (since Pd is only seeing the UM-1 driver I don't think the MTP even figures in here). However, the chosen MIDI API can get in the way, specifically in the case of the unfinished JACK MIDI. JACK MIDI presently can only pass SYSEX as short realtime messages. SYSEX data dumps will disappear if sent into the current JACK MIDI system. ALSA works OK. OSS probably too, but I have not tried it.

If that stuff is ruled out and you are still getting problems, it probably has to do with a set of longstanding bugs/oversights in the Pd MIDI stack that can affect the input, output, or both.

On the output side there is a sysex transmission bug which affects all versions of Pd Vanilla before 0.48. The patch that fixed Vanilla had already been applied to L2Ork/PurrData for some time (years, I think). The output bug did not completely disable SYSEX output. What it did was misformat SYSEX in a way that can't be understood by most modern MIDI applications, including the standard USB MIDI driver software (SYSEX output from Pd is ignored and the driver/interface will not transmit anything). You would not notice this bug with a computer connected directly to an MTP because the Mac and Linux MTP drivers are programmed to pass raw unformatted MIDI.

If this is the problem and you have to use a pre-0.48 version of Vanilla it can be patched. See:

https://sourceforge.net/p/pure-data/bugs/1272/

On the MIDI input side of Pd we have 2 common problems. One is the (annoyingly unfinished but nevertheless implemented) input to output timestamping buffer that's supposed to provide sample-accurate MIDI at the output (if it was finished). This can be minimized with the proper startup flags (for a MIDI-only instance of Pd, -rt -noaudio -audiobuf 1 -sleepgrain 0.5 works for me) or completely defeated with a source code tweak to the s_midi.c file. This may not be the source of your particular problem as it usually only affects the time it takes to pass messages from input to output, but is worth mentioning as it can be very annoying regardless.

With SYSEX dumps it is more likely that the problem lies with the MIDI queue size. This is very common and I experienced it myself when trying to dump memory from a Korg Electribe EA-1. The current version of Pd limits the queue to a size of 512 (even worse, it used to be 20) and any input larger than that will get truncated with no error or warning. There is not yet any way to change this with startup flags or user settings. This can only be "fixed" by a different tweak to s_midi.c and recompiling the app.
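To picture why a long dump loses its tail without any message, here is a toy model of a fixed-size input queue. It is not Pd's code: `MidiQueue` and `queue_push` are invented for illustration, and it assumes one byte per queue slot, which may not match how s_midi.c actually stores entries. Only the size of 512 comes from the paragraph above.

```c
#include <assert.h>
#include <stddef.h>

#define QUEUE_SIZE 512  /* size quoted above; everything else is hypothetical */

typedef struct {
    unsigned char data[QUEUE_SIZE];
    size_t count;  /* slots currently in use */
} MidiQueue;

/* Store as many bytes as fit and silently drop the rest.
 * Returns the number of bytes actually stored. */
size_t queue_push(MidiQueue *q, const unsigned char *bytes, size_t n)
{
    size_t stored = 0;
    assert(q != NULL && bytes != NULL);
    while (stored < n && q->count < QUEUE_SIZE) {
        q->data[q->count++] = bytes[stored++];
    }
    return stored;
}
```

In this model, pushing a 1000-byte dump into an empty queue keeps the first 512 bytes and discards the remaining 488, which is the kind of silent truncation described above. Raising the constant and rebuilding is the only knob, which mirrors the "tweak and recompile" fix.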

The attached text files are derived from the Pd-list and will show the specific mods that need to be made to s_midi.c.