-

cfry

posted in technical issues

@oid said:

@cfry what is the source of the sequences in the cycle abstractions? Generated, entered in manually, gotten from somewhere else. something else? Do they share a sequence and each use different parts of it?

The abstractions for setting up the synth control in my original patch were all set up by hand during a composing/sound design phase. The idea was to set up sound gestures for each input pulse and then perform by playing these in different ways. You could compare it with triggering sample clips (which I also do, but more as "grains"). Another performance ("song", "composition") may require new gestures. I usually have one "engine" patch and then different "score" patches.

I want to be able to play the original performance but I am fine with reprogramming an approximation; It was pretty much built as an improvisation anyway. Then for the future, it may take whatever turn. It should.

So in short, all the sequences were entered by hand and are individual. I need to be somewhat backwards compatible in order to be able to do the performance, but now I am happy that we are coming up with a new structure for the handling! The original way is heavy-handed, so to speak, especially if you want to go back and edit afterwards.

EDIT: I mean, one could picture all kinds of ways to create the sequences. They could be generated live from an API that retrieves the GPS location of an arbitrary low Earth orbit satellite, in combination with a Python script. Any data could be used. Or they could be updated depending on how one is performing, a feedback type of arrangement.
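As a rough illustration of the "any data could be used" idea, here is a minimal Python sketch that turns a latitude/longitude pair into a short pitch sequence. The function name `position_to_sequence`, the MIDI note range and the scaling are all assumptions made for illustration. No real satellite API is called; the coordinates are simply passed in.

```python
def position_to_sequence(lat, lon, steps=4, low=36, high=84):
    """Map a (lat, lon) pair to `steps` MIDI note numbers in [low, high]."""
    # Normalise latitude (-90..90) and longitude (-180..180) to 0..1.
    x = (lat + 90.0) / 180.0
    y = (lon + 180.0) / 360.0
    span = high - low
    seq = []
    for i in range(steps):
        # Weight the two coordinates differently on each step;
        # the weights sum to steps + 1, so t stays within 0..1.
        t = (x * (i + 1) + y * (steps - i)) / (steps + 1)
        seq.append(low + int(round(t * span)))
    return seq
```

The resulting list could then be written to a text file or sent to Pd, whichever fits the patch.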

-

cfry

posted in technical issues

@oid I'm just jumping in here quickly to say it indeed looks like we are on the same page. I think you should go ahead just as you describe. As long as the basic functions are retained (so I can update my original performance to be stable) I won't mind a zillion new functions to explore. That will lead to new ideas; it could not be better.

Let me know if you want the original pd patch for the performance. Or bits and pieces. It is a mess though.

"the output of [r chs-$0] is a two element list, channel number and a one or a zero?"

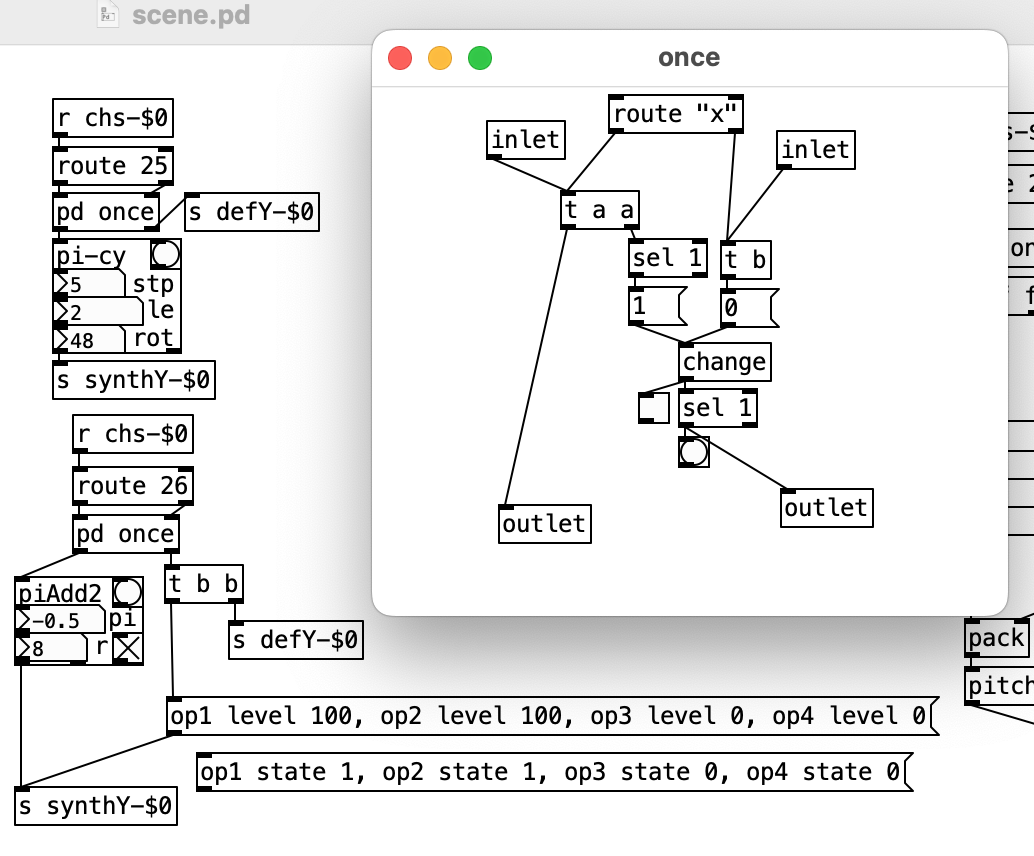

-yes exactly

"What is the right outlet of your once? bang when reset or something else?"

-It bangs the first time a channel is active, in order to be able to, for example, send the initial settings, start pitch etc. This way I can be "monophonic"

"are they how you are implementing groups? this screen shot is one group with two synths?"

-no, actually the group handling has never been that developed. It's more that I see that it can be useful.

-

cfry

posted in technical issues

@oid oh, I totally confused things for you, sorry. Nothing has changed. I will clear this up asap. Stay tuned.

-

cfry

posted in technical issues

@oid I fixed the loop as per your instructions, works fine.

My primary reason for this patch is to make a specific performance set that I do technically solid and updatable. That is what I will use [tracky] for, but I do not want to hinder your ideas (and mine) with this purpose. I want to keep it open and see where it goes.

That said, here is an explanation of how I use it:

This video shows the interface and how I perform with it:

(at 11:47 maybe it is easier to see what is happening, starting and stopping)

The coil springs you see act as switches (they short ground to the digital inputs of four Arduinos). I control them by hand and by adding weights to get different pulses. In Pd the switches have their own logic for how they affect the synthesis. In the default mode there is no automatic triggering at all; it is just me starting and stopping pulses.

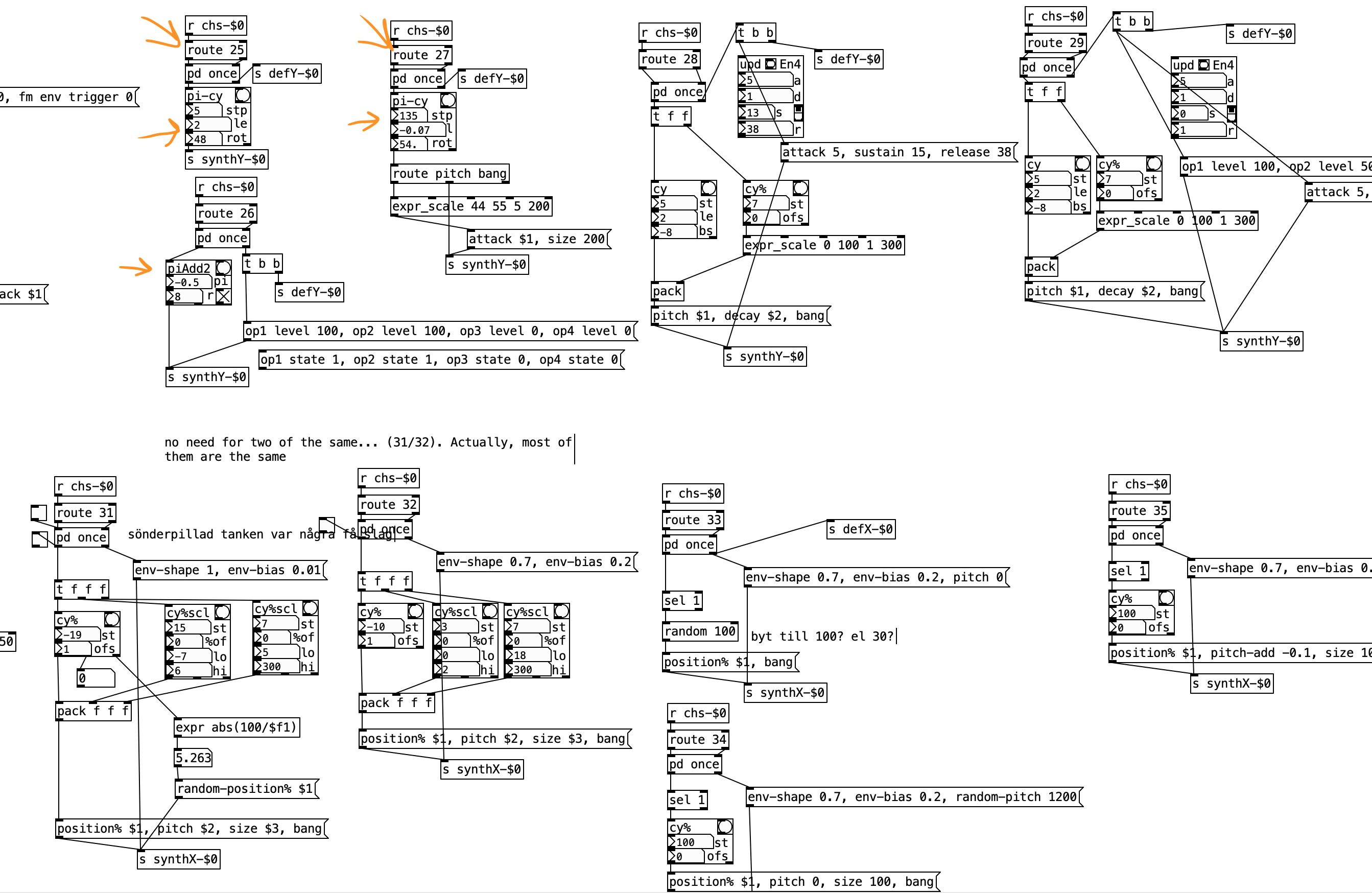

This is how this part of the patch looks. [r chs-$0]-[route 25] etc are the incoming pulses. The subpatches with "graph on parent" like [pi-cy] (=pitch cycle) and [piAdd2] (=add pitch) are what I want to replace with [tracky] (probably will rename it later btw. Depending on what it becomes!)

So these boxes all do "hard coded" functions that you edit with number2 atoms that are set to retain values upon save. The benefit of this setup is that I can just copy these around, even between patches. It is not totally bad; you can work quickly with it and improvise with it. But I think it is awkward to edit afterwards. I just believe [tracky] will be better for this. If I can load the text file with commands as an argument in [tracky], I can name the text so I can see what it is meant to do. One way to do it.

But what I am after is the functions, not this particular workflow or visual layout. I think of it this way: each of the switches (coil springs etc. on the physical interface) triggers an independent channel, as in a tracker, where it triggers pitches and manipulations. I like this approach very much and intend to explore it further.
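The "one independent tracker channel per switch" idea can be sketched in Python as follows. This is only an illustration of the concept, not how [tracky] is built: the class name `Channel`, the function `on_pulse`, the step values and switch number 26 are hypothetical (25 is taken from the [route 25] mentioned above).

```python
class Channel:
    """One tracker-style channel: each trigger plays the next step of its own sequence."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.pos = 0

    def trigger(self):
        # Return the current step and advance, wrapping at the end.
        step = self.steps[self.pos]
        self.pos = (self.pos + 1) % len(self.steps)
        return step


# Hypothetical mapping from switch (input pulse) number to its channel.
channels = {
    25: Channel([60, 62, 67]),
    26: Channel([48, 55]),
}


def on_pulse(switch):
    """Advance only the channel that belongs to the switch that fired."""
    return channels[switch].trigger()
```

Each switch keeps its own position, so pulses on one spring never move another channel's sequence.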

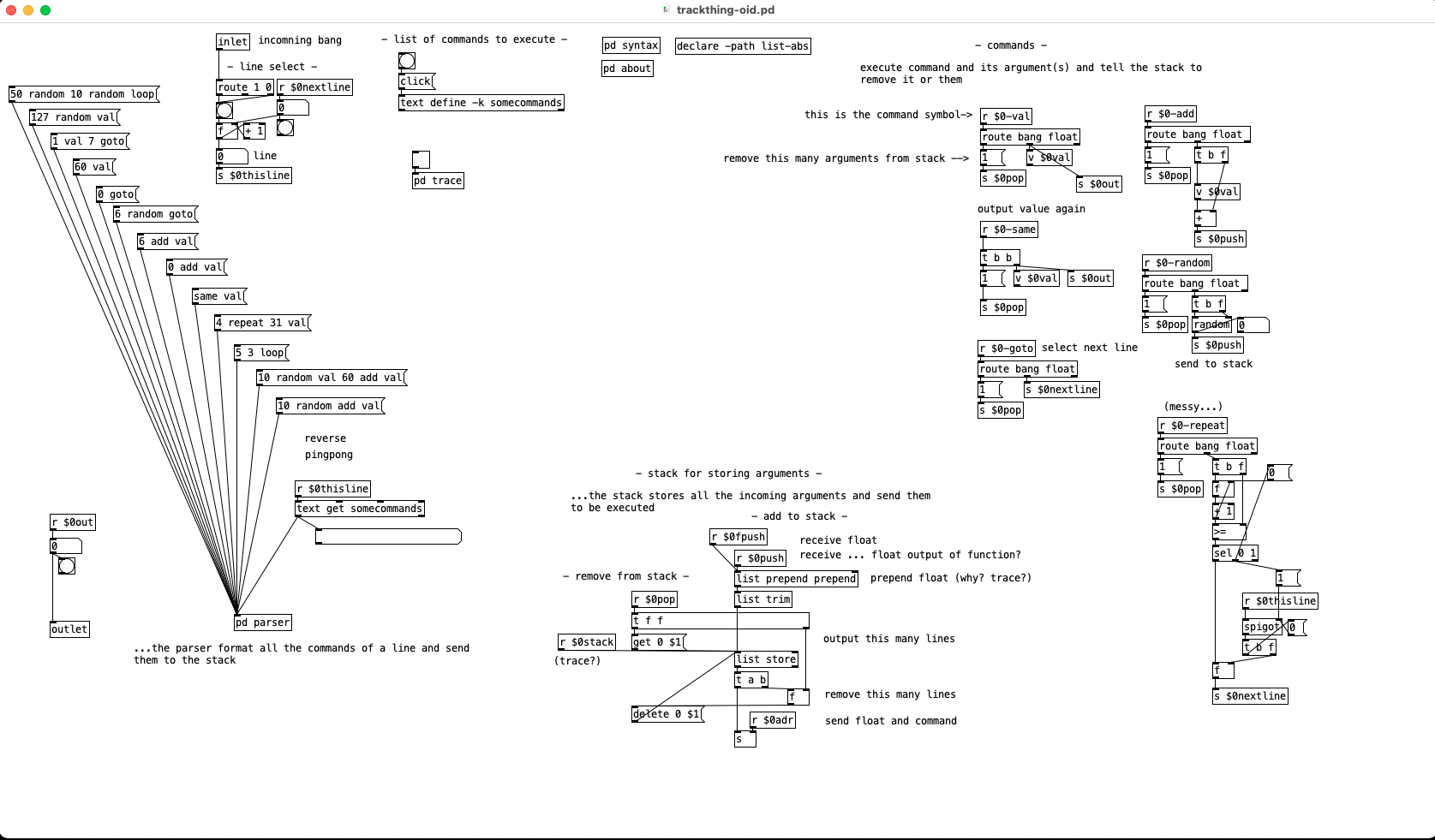

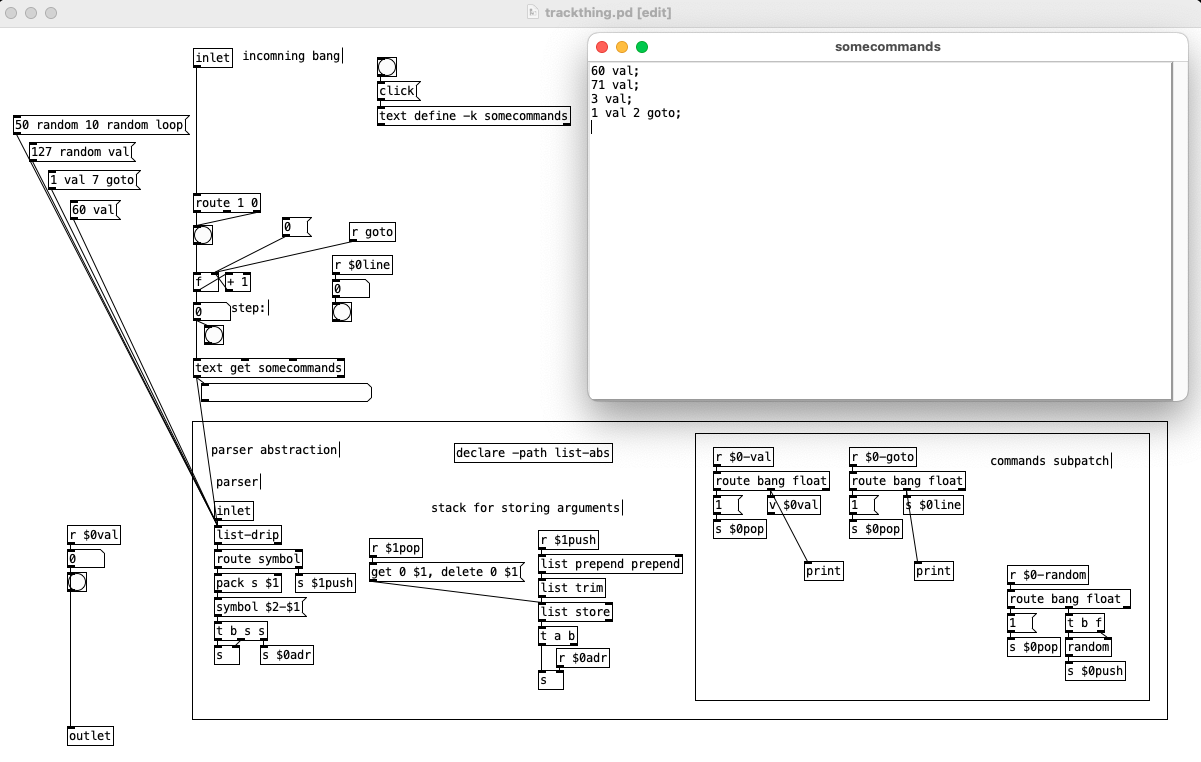

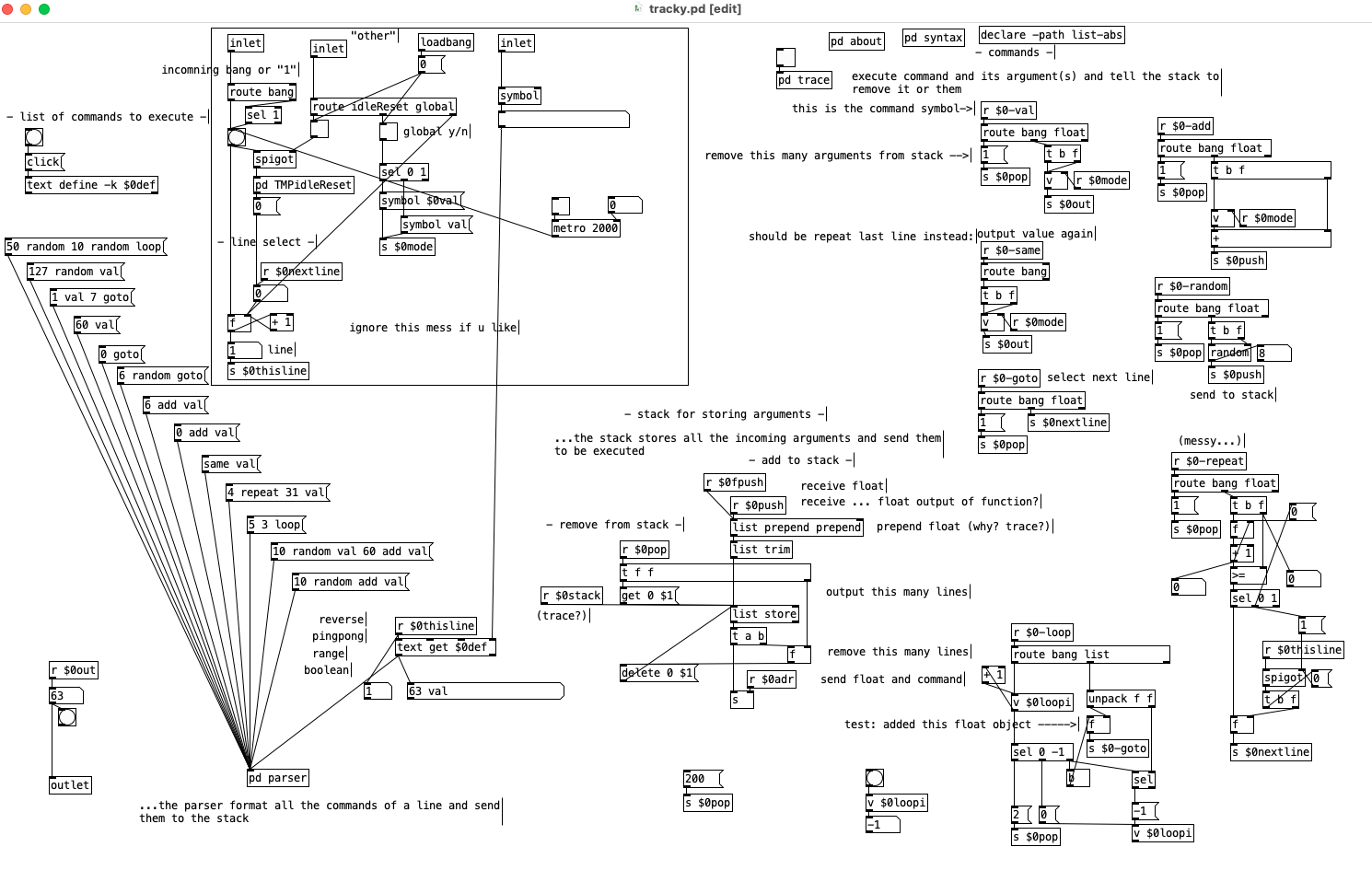

Current version of [tracky] over here: tracky.pd

...and sorry for being slow to respond. I am moving between cities and it is chaos in general. In a week or so I will have more structure in my life.

-

cfry

posted in technical issues

@oid I have to return to treating variables. However, I would be fine with just editing a preferences text (the "registry"?).

@oid said:

Not sure what you mean by groups/exclusive groups, can you elaborate or show it with a patch?

It's MIDI programming terminology, sort of, usually used when programming percussive instruments. If you have one closed and one open hi-hat, you would want these to cut each other off. Not "poly". The same could go for the cymbals. So each of these would get its own exclusive group: hi-hat exclusive group 1, crash exclusive group 2, etc.

In my case I may have several instances of [tracky] (with different text files) triggering an FM synth. Here I want one or all of the variables to be shared. I may also have a granular sampler controlled by several instances of [tracky] (with different text files), also sharing variables, but using another range (e.g. 0-100, with the FM synth using 0-1). The grain sampler also wants its variables to be global, but not shared with the FM synth.

I could solve this by using differently named variables for these different groups of global variables. But this would be convoluted and hard to get an overview of. I think it may be better to use abstraction arguments to give them different groups: [tracky 0], [tracky 1], and [tracky] for not sharing variables at all.
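To make the exclusive group idea concrete, here is a small Python sketch of the cut-off behaviour. The class `ExclusiveGroups` and the voice names are made up for illustration and do not come from any MIDI library.

```python
class ExclusiveGroups:
    def __init__(self, group_of):
        # group_of maps a voice name to its exclusive group number (or None).
        self.group_of = dict(group_of)
        self.sounding = {}  # group number -> voice currently sounding

    def note_on(self, voice):
        """Start `voice`; return the voice it cuts off, or None."""
        group = self.group_of.get(voice)
        if group is None:
            return None  # not in any exclusive group: plays freely
        cut = self.sounding.get(group)
        self.sounding[group] = voice
        # Retriggering the same voice does not count as cutting another one.
        return cut if cut != voice else None
```

With `{"closed_hat": 1, "open_hat": 1, "crash": 2}`, a closed hi-hat after an open one reports the open hi-hat as cut off, while the crash is left alone.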
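The grouping by abstraction argument could behave roughly like the Python sketch below. It is a model of the idea only: the class `Tracky`, the `_shared` dictionary and the `add` method are stand-ins invented here, not the actual Pd implementation.

```python
_shared = {}  # group id -> variable store shared by all instances in that group


class Tracky:
    def __init__(self, group=None):
        # With a group id, reuse that group's store; without one, stay private.
        if group is None:
            self.vars = {}
        else:
            self.vars = _shared.setdefault(group, {})

    def add(self, name, amount):
        """Add `amount` to the named variable and return its new value."""
        self.vars[name] = self.vars.get(name, 0) + amount
        return self.vars[name]
```

Two instances created as `Tracky(0)` see each other's changes to `var`, an instance created as `Tracky(1)` has a separate `var`, and a plain `Tracky()` shares nothing.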

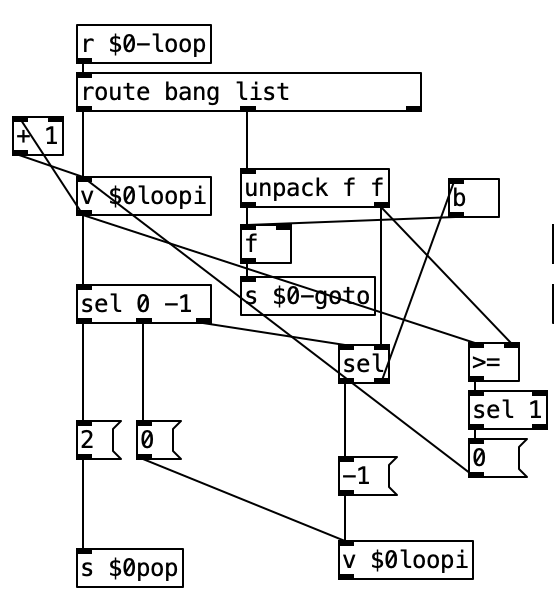

This hotfix makes the loop work as intended, but it just keeps adding to the stack.

Could it be that problems arise because [v] does not output anything until it gets a bang?

Also not sure how the stack is supposed to work in this case.

-

cfry

posted in technical issues

@oid said:

I used [value] because add works off the last value that was output and [value] keeps things neater than tying lots of stuff to a single [float].

Yes, it's convenient. I have tried to add a global function to share the variable "var" between several instances. [v] is good for this purpose. Use case: for example, one instance with a list with "add" +1, and another instance with a list with "add" -1, and they are triggered independently.

It would be good to be able to have different groups (like "exclusive" groups).

I also see a use case for several "var" (var0, var1, var2, var3 etc)

...adding a global mode, exclusive groups, idle-reset, and so forth are configuration settings, not part of the live output. Question is where this should go. In the text file also? Or in another text file? Or if they are just a few, maybe just as arguments in the abstraction. The config settings should be retained. It would also be good if one could write the text to use as an argument in the abstraction: [tracky mycommands]. Not sure how to do that.

Slightly annoying and could be fixed but would be less efficient, so I just remember that when patching commands which push a list, the list needs to be backwards or it will cause confusion elsewhere.

That is fine, I just need to include some instructions on how the syntax goes.
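For those syntax instructions, a tiny Python model of a stack may help show why a pushed list has to go in backwards. This assumes a plain last-in, first-out stack, which may not match every detail of how [tracky] handles it; `push_list` and `pop` are hypothetical names.

```python
stack = []


def push_list(items):
    # Push in reverse so the first item ends up on top of the stack.
    for item in reversed(items):
        stack.append(item)


def pop():
    # Remove and return the item on top of the stack.
    return stack.pop()
```

After `push_list([1, 2, 3])`, successive calls to `pop()` return the items in their original order, which is what a command reading its arguments one by one would expect.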

We will deal with that in the next post and start really exploiting that this is a programming language and will save you work as any programming language should.

The problem that arises is that when I start to improvise I kind of "break the (your) concept". And I would like to avoid ending up with another patch that is so messy that I cannot use it if I bring it up after half a year or so. Let's continue working on it!

Edit2: No, that loop will not quite work, forgot to resend the float to $0-goto on each iteration, only loops once.

I have given your loop code several shots but I cannot figure out how to get it working. I managed (in a messy way) to have it repeat x times, or loop forever, or loop just once. But never loop x times and then be ready again to loop x times.
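The behaviour I am after, "loop x times and then be ready to loop x times again", can be described with a counter that re-arms itself when it runs out. The Python sketch below only models that logic; `LoopCounter` and `should_jump` are hypothetical names, and it is not a translation of the loop code in the patch.

```python
class LoopCounter:
    """Counts jumps for a `loop n` style command and re-arms itself when done."""

    def __init__(self, times):
        self.times = times
        self.remaining = times

    def should_jump(self):
        if self.remaining > 0:
            self.remaining -= 1
            return True  # jump back to the loop target
        self.remaining = self.times  # re-arm for the next pass
        return False  # fall through to the next command
```

The key point is that the counter is reloaded at the moment the loop exits, so the next time the sequence reaches the loop command it starts from x again.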

Current patch:

-

cfry

posted in technical issues

@oid Thank you so much. It is coming along quite well.

Question time:

Could we make a 2 argument command, like the loop function? I could not figure out how to use 2 arguments.

Is there a specific reason why you used the value object? I found use for it, but I am just curious.

How would you send the final output of the patch? As I have patched it now, I am not using the value object for this.

I think I may add more values later, like val1 val2 val3.

I commented all over it to help me understand what happens. Are there any spots where I totally misunderstood it?

If there are any awkward edits I made, feel free to correct them or point them out. Pointers about suitable syntax are also appreciated.

-

cfry

posted in technical issues

Can you help me to get this working? I tried to debug with [print] but I could not figure out the local variable hierarchy. (The screenshot and patch are for visual reference.)

And then maybe we can do a few new commands so I can handle it?

On a side note: No need for sudoku today! Pd and @Oid will keep me fresh, now that ChatGPT makes such complex code for my Arduinos that I cannot read it anymore.

-

cfry

posted in technical issues

@oid where is [list-drip] coming from? Deken says shadylib but it is not there.

edit: never mind, I found it in list-abs

-

cfry

posted in technical issues

@porres Hi, yes, they are interesting. else/sequencer also has the feature to be banged a step at a time. Cool.