@Load074 Over the past few years I've also been trying out various approaches along the lines of what you're looking for, and I actually tried something very similar to your idea: pulling only one out of every x samples of the source array and sending it to a smaller display array. And indeed, it doesn't work, because those values are just scattered snapshots of the signal & will be discontinuous with the neighboring display samples. I realized that some kind of averaging would be needed and started to work on it, then got sidetracked & never followed through...
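To make the snapshot problem concrete, here's a rough Python sketch (not Pd, and not taken from either abstraction below) comparing "keep one sample per block" with a plain block average. The function names are just made up for illustration. The naive pick can completely miss short events that fall between the picked samples, while the average at least lets every sample contribute:

```python
def decimate_naive(src, factor):
    """Keep one sample per `factor` samples.

    Each output value is a single snapshot, so anything happening between
    the picked samples is simply lost.
    """
    return src[::factor]


def decimate_mean(src, factor):
    """Average each block of `factor` source samples into one display value.

    Every source sample contributes, but averaging also flattens peaks
    (a full cycle of a sine averages out to roughly zero).
    """
    out = []
    for start in range(0, len(src), factor):
        block = src[start:start + factor]  # the last block may be shorter
        out.append(sum(block) / len(block))
    return out


if __name__ == "__main__":
    # Mostly silence with a short click every 4 samples.
    src = [0.0, 0.0, 0.0, 1.0] * 4
    print(decimate_naive(src, 4))  # [0.0, 0.0, 0.0, 0.0] -> the clicks vanish
    print(decimate_mean(src, 4))   # [0.25, 0.25, 0.25, 0.25]
```

The flattening is a real downside of plain averaging for a waveform display, since loud high-frequency content can end up looking nearly silent.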

But I ended up finding two pre-baked solutions:

rh_wavedisplay from netpd

pp.waveform from AudioLab

I can't quite wrap my head around how rh_wavedisplay works from looking inside the abstraction, but the result looks nice. From the help patch:

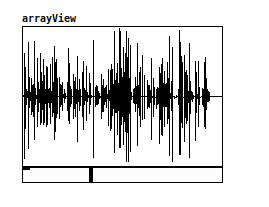

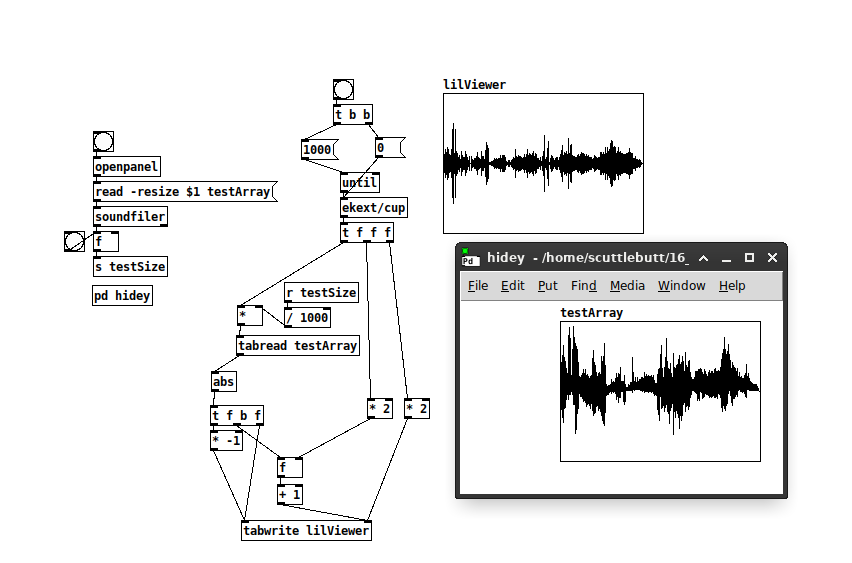

This abstraction finds the minima and maxima for each section of the source table that covers one pixel of the display array. The result is a good looking waveform that does not suffer from aliasing.
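For anyone who wants the idea outside of Pd, here's a minimal Python sketch of the min/max-per-pixel approach as described in the help patch. It's my own illustration, not the actual internals of rh_wavedisplay. The optional `start`/`end` arguments are an assumption on my part, added to mimic displaying only a section of the source array. Each (min, max) pair could then be drawn as a vertical line for that pixel:

```python
def minmax_per_pixel(src, width, start=0, end=None):
    """Return one (min, max) pair per display pixel.

    src:        source samples (e.g. the contents of a large table)
    width:      number of pixels/points in the display
    start, end: optional sample range of src to display (hypothetical extra)
    """
    if end is None:
        end = len(src)
    n = end - start
    if width <= 0 or n <= 0:
        raise ValueError("need width > 0 and a non-empty sample range")
    pairs = []
    for px in range(width):
        # Integer bounds: with at least as many samples as pixels, every
        # sample in [start, end) falls into exactly one pixel's segment.
        lo = start + (px * n) // width
        hi = start + ((px + 1) * n) // width
        if hi == lo:  # fewer samples than pixels: reuse the nearest sample
            hi = lo + 1
        segment = src[lo:hi]
        pairs.append((min(segment), max(segment)))
    return pairs


if __name__ == "__main__":
    # One short click at sample 9 in an otherwise silent 16-sample table.
    src = [0.0] * 9 + [0.9] + [0.0] * 6
    print(src[::4])  # naive pick misses it: [0.0, 0.0, 0.0, 0.0]
    print(minmax_per_pixel(src, 4))
    # [(0.0, 0.0), (0.0, 0.0), (0.0, 0.9), (0.0, 0.0)]
    print(minmax_per_pixel(src, 2, start=8, end=12))
    # [(0.0, 0.9), (0.0, 0.0)]
```

Because every sample in a pixel's segment is scanned, peaks can't slip between picked samples, and unlike averaging the full amplitude is preserved. The cost is that every source sample still has to be read on each redraw.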

I also like that it allows for only a section of the source array to be used for display (which is important for how my performance patch uses arrays). In practice it still causes audio dropouts when updating large source arrays, unfortunately.

pp.waveform uses data structures, which is why the result looks so different visually. I don't understand data structures well enough to play around with this or try to adapt it for my uses, but I'd definitely be curious whether it's any more efficient than the other graphical array manipulation methods.

I also wonder whether there's simply no way to prevent audio dropouts without some kind of array manipulation that runs on a separate thread. I've been looking into the shmem library to see if it's possible to outsource the array processing to another Pd instance (similar to the Pd~ approach that @alexandros suggested), but I haven't gotten very far, so I still don't know whether dumping a huge array to memory will be a bottleneck.

/home/scuttlebutt/Pictures/Screenshot_2024-01-23_21-42-53.png