Final Solution: Anyone looking to control Ableton Live...easily

Hi All

It takes a little work to set up, but forget MIDI mapping... google it if you don't believe me.

After a lot of time spent trying to find a simple but sophisticated way (using a minimal 8-button floorboard) to control Live on Windows 10, I thought I would share this particular solution to possibly help others (especially after the help offered here on this forum). I tried a number of scenarios, even buying Max for Live, but it turns out to be a lot simpler than that. It needs three main areas set up:

FOOT CONTROLLER BEHAVIOURS/GESTURES

Create a Pd patch that gives you 'behaviours' per switch. I'll be happy to share mine, but I'm just cleaning them up at the moment.

E.g. I have 4 standard behaviours that don't take too much time to master:

- Action A: A quick click (less than 500 ms). Always the primary action.

- Action B: Long click, i.e. one click down and pedal up after 500 ms. I always use this as a negative ramp, e.g. for lowering volume, but if it's just held down and released in a natural way, it is the secondary action of the switch.

- Action C: 3 Click, i.e. one quick down and up, and then hold down. I use this for a positive ramp, e.g. volume up.

- Action D: Double click. Always a cancel.

These are all mapped to note/control outputs that match the 'Selected Track Control' script below.
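The four behaviours above can be sketched in Python as follows. This is only an illustration, not the Pd patch itself: the function name `classify_gesture`, the `(down_ms, up_ms)` tuple format, and the reuse of the 500 ms threshold for the hold in Action C are all assumptions.

```python
HOLD_MS = 500  # threshold from the post: shorter is a quick click, longer is a hold


def classify_gesture(presses, hold_ms=HOLD_MS):
    """Classify one gesture from a list of (down_ms, up_ms) pedal presses.

    Returns "A" (quick click), "B" (long click), "C" (click then hold),
    "D" (double click), or None if the pattern is not recognised.
    Grouping presses into one gesture (the allowed gap between clicks)
    is assumed to have been done by the caller.
    """
    held = [(up - down) >= hold_ms for down, up in presses]
    if held == [False]:
        return "A"
    if held == [True]:
        return "B"
    if held == [False, True]:
        return "C"
    if held == [False, False]:
        return "D"
    return None


print(classify_gesture([(0, 120)]))              # one short press
print(classify_gesture([(0, 100), (200, 900)]))  # short press, then a hold
```

Each returned letter would then be mapped to whichever note or controller number the remote script expects.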

PLUGIN

Use PD VST to create a plugin version of your patch. This is loaded into Live as a control track. Live manages the connection of your floorboard etc. into the actual track, so you don't wrestle with the I/O. I always use track 1 for click (forget the Live metronome; this is much more flexible and can have feel/swing etc.), so I dedicate track 2 to control.

Use loopMIDI to create a virtual MIDI cable that will go from this track and be fed into the remote script.

REMOTE SCRIPT: 'Selected Track Control'

Download latest from http://stc.wiffbi.com/

Install it into Live and make sure your notes/controls conform.

Enable this as a control surface in Live and connect MIDI in from the plugin. Think about giving the guy a donation... a massive amount of work and he deserves it!

I use it to control 8 tracks x 8 scenes, and it is controlled by 3 switches:

- Scene control up and down (A = down, B = up)

- Track control same as scene

- Rec/Fire/Undo and Volume up and down (A = fire/rec, B = Volume Down, C = Volume Up, D (double click) = Undo)

The scenes and tracks wrap, so there isn't too much foot tapping.
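The wrapping amounts to modular arithmetic on the scene or track index. A tiny Python sketch, assuming 0-based indices and the 8 x 8 grid mentioned above (the function name is made up for illustration):

```python
def step_with_wrap(index, step, count=8):
    """Move a 0-based scene or track index by `step`, wrapping at both ends."""
    return (index + step) % count


print(step_with_wrap(7, +1))  # stepping past the last scene
print(step_with_wrap(0, -1))  # stepping back from the first scene
```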

There is quite a bit more to it of course... and maybe no one else needs this, but it would have saved me a couple of weeks, so I'm happy to help anyone who wants to gig without a massive floor rig and with an easy way to map and remember.

HTH someone

Cheers

mark

Audio triggering samples with env~

@greglitch Yes, you are correct, when a module is triggered it sends its trigger value to [s me_bigger_or_smaller]. The other abstractions see the value arrive, and if they are not the one that sent the value (it is not equal to their value) they send the [stop( message to their [readsf~]. The module that sent the message sees the same value arrive, and does not stop. So a bigger trigger, or a smaller trigger will stop all of the others. Because there is a "de-bounce" in [threshold~] this can only happen after the de-bounce time has elapsed. Because you are playing the tracks in the same space as the microphone it is likely that a smaller value will not be sent while a track is playing..... and so it is always a "louder" track that is triggered (even though your selected "louder" tracks are in fact "quieter") until that track ends naturally........ confused?
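If it helps, the stop logic described above can be modelled in a few lines of Python. This is only a sketch of the idea, not the patch: the class and function names are invented, the trigger values are arbitrary, and the de-bounce is not modelled at all.

```python
class Module:
    """Stand-in for one player abstraction with its own trigger value."""

    def __init__(self, trigger_value):
        self.trigger_value = trigger_value
        self.playing = False

    def receive(self, value):
        # A module ignores its own value and stops on anyone else's.
        if value != self.trigger_value:
            self.playing = False


def trigger(modules, sender):
    """Start `sender` and broadcast its value to every module, like a shared send."""
    sender.playing = True
    for module in modules:
        module.receive(sender.trigger_value)


modules = [Module(60), Module(70), Module(80)]
trigger(modules, modules[0])
trigger(modules, modules[2])
print([m.playing for m in modules])
```

After the second trigger only the last module is still playing, whichever value was bigger or smaller.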

Here is the same thing again with [env~]......... micprova4.zip

As you still need the debounce of [threshold~] I have built you a replacement called [threshold_env~] which does the same thing using [env~]....... nearly.....

- [threshold~] was peak to peak, and [env~] outputs rms.

- The "set" message can no longer be sent to the audio inlet, so there is an extra inlet in the middle that will receive the message.

If you want to use [env~] outside of an abstraction you would need to copy and paste the contents of [threshold_env~] (probably without the "rest" part) many times across your patch, with different trigger values and attach them to many [readsf~] objects. It would become really hard.....very quickly.... to see what is going on......that is the advantage of abstractions......especially as if you need to change something for every abstraction you only need to do it once.

See......http://forum.pdpatchrepo.info/topic/9774/pure-data-noob...... the first screenshot gives you an idea about what you would end up with on your screen without using abstractions! You can very quickly run out of screen space.

The fact that I was able to just "drop in" [threshold_env~] in place of [threshold~] should convince you of the advantage of abstractions. Without them I would have had to copy it numerous times, and if it didn't work, correct it many times, and then reconnect everything up... with many opportunities to make a mistake. You can now copy [module_play_env~ xxx nn] as many times as you wish........

David.

Playing sound files based on numbers/sets of numbers?

@Alexita Hello again. You don't absolutely need abstractions, but they make the building of the patch much quicker (a lot less typing). Putting 2 or 3 digit numbers is more difficult.... I will think about that. Stopping with a second enter will be possible.

The patch is already able to play only a selection of the 17 tracks...... although they must all "exist".

If the tracks are in the same folder as the patch the paths are not necessary, so it becomes much more portable.

But portable on a flash drive means putting Pd on the flash drive as well (in a portable format), and the tracks as well........ so a big enough flash drive. I am assuming that the computer the flash drive will be plugged into will not have Pd already installed. Will it always be a PC? or a Mac? or Linux? Or will it have some flavour of Pd installed already? That would be useful.

It will be much easier to build with abstractions if the tracks are named 1.wav, 2.wav, 3........ 17.wav.

What are the two, three and four digit numbers? How do they choose between the 17 files? 1-17 is easily understandable. Can you post a "table" of what they should be........ like?

1 - 33

2 - 41

3 - 123

4 - 18

5 - 6072

or is there supposed to be some sort of a random function...... where you are not really selecting at all? The task makes no sense to me with this requirement (at the moment). You would need to remember the 17 large numbers!

I will post an abstraction version, assuming all of the tracks in the same folder. A "sub" folder is easy as well (for the tracks).

............................ 17 tracks with loop abstractions.zip ...... now in "loop" mode already when it starts.

I have added metering, just for fun.

You must put 17 tracks named 1.wav ... 17.wav in the same folder.

The keyboard numbers 1 - 9 will work (for the moment, and for the first 9 tracks)

David.

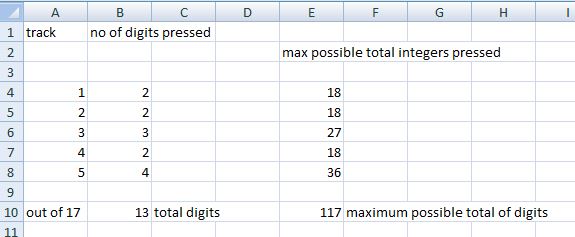

PS..... looking for a pattern...... anyone?

Assuming...... only keys 1-9 or 0-9 used.

Of course! It's easy......... if 0 is not allowed

2 digits..... minimum total 2 max 18........ range 17

3 digits......minimum total 3 max 27........ divide by 1.5 and subtract (about) 1........ range 17

4 digits......minimum total 4 max 36........ divide by 2 and subtract (about) 1........ range 17

Need to round up or down..... look at results.....
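A quick Python check of that arithmetic, as a sketch only: it assumes the digits are added up and the total is scaled linearly so that the minimum lands on 1 and the maximum on 17. The function name and the round-half-up choice are assumptions.

```python
import math


def track_from_digits(digits, tracks=17):
    """Map a string of digits 1-9 to a track number via the digit total.

    The total runs from n (all ones) to 9 * n (all nines); it is scaled
    linearly onto 1..tracks. For 17 tracks this equals total * 2 / n - 1,
    i.e. "divide by 1.5 and subtract 1" for 3 digits.
    """
    if not digits or any(ch not in "123456789" for ch in digits):
        raise ValueError("only the digits 1-9 are allowed")
    n = len(digits)
    total = sum(int(ch) for ch in digits)
    scaled = 1 + (total - n) * (tracks - 1) / (8 * n)
    track = math.floor(scaled + 0.5)  # round half up (an assumption)
    return max(1, min(tracks, track))


for number in ("33", "123", "999", "9999"):
    print(number, track_from_digits(number))
```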

For now.... 17 tracks loop enter start stop.zip starts and stops (stops tracks dead.... should the sequence complete?) with enter.

David.

2016_05_06 - Trip Tech, a Trio for Three Strings and one Voice

Part of me wishes I had not given this piece a name, yet do as I must, it is now time for me to release it Into The Wild.

I recorded it in 4 separate improv takes, each one adding a new layer to the previous ones as I listened with Audacity passthru, with vocals last.

The first track was using only vcompander and the DIY2 compressor.

The second track was using the previous settings + Puckette's reverb2.

The third track was using the first settings but not the second and adding mmb's resonant filter.

The final (vocal) track was as clean as I could get it with just a little reverb.

The intention was to come as straight from the Heart as I could and see if I could layer/fold my musical ideas onto themselves and it still resonate as a cohesive whole.

In the end that will be (mostly) for others to decide, but for me I Did achieve that goal with about 95-6% accuracy.


Peace and good will to all you.

-svanya

2016_05_06 - Trip Tech, a Trio for Three Strings.mp3

p.s. I deducted the 4-5% for the sound quality of the first track because it sounded really garbled: any advice/suggestions on that front would be much appreciated.

Multiple loops syncing

@sglandry said:

Thanks for the patch. How does sending out multiple master_bang 's work? Does whatever loop you start playing first basically become the "master" track that controls all the other ones? Or is there some other global master bang logic that I'm not seeing.

If I remember correctly, I usually just set "track1" to be the "master track". I would record this track first, and however long it was (in ms) would be the length of the master loop. Whatever you decide is going to be the master loop should be the longest recorded sound, because when it repeats any other tracks that are longer will cut off and repeat as well (if you choose to sync them).

When the "master track" repeats, it sends a bang (via [s master_bang]) out to all the other tracks with the "sync_to_master" box checked (well, it gets sent to all tracks regardless, but only tracks that are synched let this bang pass through). The "sync_to_master" box essentially just controls the [multiplex] object, which switches between the sources of the bangs that tell the track when to repeat. You can have a slave track repeat when the master track repeats, otherwise you can just have it repeat itself asynchronously, or just manually when you click on the bang itself.
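In code terms, the sync switch behaves roughly like the Python sketch below. It is a simplified model with invented names, not the patch itself, and it leaves out the manual bang.

```python
class LoopTrack:
    """Stand-in for one looper track with a "sync_to_master" toggle."""

    def __init__(self, name, sync_to_master):
        self.name = name
        self.sync_to_master = sync_to_master
        self.restarts = 0

    def on_master_bang(self):
        # The bang reaches every track, but only synced tracks let it through.
        if self.sync_to_master:
            self.restarts += 1

    def on_own_loop_end(self):
        # Unsynced tracks repeat from their own end-of-loop bang instead.
        if not self.sync_to_master:
            self.restarts += 1


tracks = [LoopTrack("track2", True), LoopTrack("track3", False)]
for track in tracks:
    track.on_master_bang()   # master loop wraps around
tracks[1].on_own_loop_end()  # the free-running track reaches its own end
print([(t.name, t.restarts) for t in tracks])
```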

What's the difference between [timer] and [realtime]? Do they essentially do the exact same thing?

As far as I know, [timer] measures logical time, and [realtime] measures elapsed real time. Someone smarter than me on here can tell you the difference.

Loading a folder of audio files

@RonHerrema Hello again Ron......

Are you planning to have a main folder, with sub-folders all containing tracks, or are you expecting to just pick folders at random from anywhere on your computer?

If you want to pick from absolutely anywhere then you have three problems....

Most folders will have no wav files.........

Some folders will have wav files at the wrong sample rate....

Most folders containing wav files will contain other files as well... album art, etc.

Anyway..... in "cart" the playlist window is cleared and re-populated as each folder is opened.

If you want to bang a message box you need to give each one a [receive] object and then build a patch to send bangs to those objects. That patch is easy using [random]. The track name will always display in "cart" already. The number of tracks can be known (already the tracklist populator stops when it has created the messages in tracklist).

So you need to add (using the populator) a receive object for each message, place it into "tracklist" and attach (connect) each one to its message.

Either you should do this.........

The populator creates the message, connects it to the [s trackplay] object, creates the [receive 1] object and connects it to the message........ and moves on.

Or this....... the populator runs as in cart, but you add a counter, and when it has finished (how do you know, do you just put a delay and hope) it builds the [receive] objects and connects them.

I reckon the first is easier. [s trackplay] is object 0 on the page and each message is 1-n for the connect message. You need to change the numbers for the connect messages.......

the populator will build..

[s trackplay] (this is object 0)

[message 1] (this is object 1)

connect object 1 to object 0

[receive 1] (this is object 2)

connect object 2 to object 1

[message 2] (this is object 3....... in "cart" it was object 2 but [receive 1] has been created first)

connect object 3 to object 0

[receive 2] (this is object 4)

connect object 4 to object 3

etc. and it will stop when no more names arrive.
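The numbering above can be checked with a small Python sketch that prints the creation and connect messages in order. The function name, the positions, and the example file names are invented for illustration; the message text follows the usual "obj / msg / connect" form used for dynamic patching.

```python
def populator_messages(track_names):
    """Return the canvas messages the populator would send, in creation order."""
    out = ["obj 10 10 s trackplay"]              # object 0
    for k, name in enumerate(track_names, start=1):
        msg_index = 2 * k - 1                    # message k
        recv_index = 2 * k                       # receive k, created right after it
        y = 40 * k
        out.append(f"msg 10 {y} {name}")
        out.append(f"connect {msg_index} 0 0 0")              # message -> [s trackplay]
        out.append(f"obj 150 {y} receive {k}")
        out.append(f"connect {recv_index} 0 {msg_index} 0")   # receive -> message
    return out


for line in populator_messages(["a.wav", "b.wav"]):
    print(line)
```

So message k is always object 2k - 1 and [receive k] is object 2k, which is the change needed in the connect messages.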

You will need a counter though (don't forget to reset it when the next folder is loaded) so that random can have the right argument. [random x].

You can use the right outlet of [readsf~] to bang out the next number from [random] and start the next track..............another counter and a calculation (when tracks played == track count +1) load the next folder.......... etc.......

Have Fun!

David.

Video tracking

Hi guys,

I'm trying to implement a system to track robots with a webcam. My problem is that it is difficult to track more than one robot with the basic PIX objects.

My system comprises three robots identified by plates of different vivid colors (red, green and blue) and an amoeba-shaped platform that will probably be white or black in the final version.

I've already tried to implement the tracking with pix_movement, and it worked very well with just one robot. To work with two robots I thought it would be better to separate the RGBA matrix, to focus on each color and track each robot separately. But it did not work very well, because the separated matrix did not show good contrast for the predominant color (a nice big red mark over the red robot, for example). Instead, I got a very messy image caused by interference from the background colors.

To filter and track the image I used:

pix_separator - to use 3 different images

pix_threshold - to keep just the wanted color, if it is high enough

pix_gain - to intensify the required color

pix_movement - to track the movement of the robot

pix_blob - to track the center of mass

One solution I thought of is to use a filter that can represent the image in another format, not RGBA: a format that separates color, saturation and brightness into different values and scales. Does one exist?
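For reference, one format of that kind is HSV (hue, saturation, value), which keeps the color itself apart from how vivid and how bright it is. Below is a minimal Python sketch using the standard `colorsys` module, just to show the idea outside of Pd; the function name, the hue windows and the saturation/value cut-offs are illustrative assumptions, not tuned values.

```python
import colorsys


def classify_pixel(r, g, b, min_sat=0.5, min_val=0.3):
    """Label an 8-bit RGB pixel as "red", "green", "blue", or None."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if s < min_sat or v < min_val:
        return None  # washed-out or dark pixel: treat as background
    hue_deg = h * 360
    if hue_deg < 30 or hue_deg >= 330:
        return "red"
    if 90 <= hue_deg < 150:
        return "green"
    if 210 <= hue_deg < 270:
        return "blue"
    return None


print(classify_pixel(220, 30, 30))
print(classify_pixel(240, 240, 240))
```

Because a white or black background has low saturation or low value, it falls out before the hue is even looked at.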

Thanks very much for reading it, i would be very glad if you could help me! =)

Beatmaker Abstract

http://www.2shared.com/photo/mA24_LPF/820_am_July_26th_13_window_con.html

I conceptualized this the other day. The main reason I wanted to make this is because I'm a little tired of how complicated Ableton Live is. I wanted to just be able to right click parameters and tell them to follow midi tracks.

The big feature in this abstract is a "Midi CC Module Window" that contains an unlimited (or potentially very large) number of Midi CC Envelope Modules. In each Midi CC Envelope Module are Midi CC Envelope Clips. These clips hold a waveform that is plotted on a tempo divided graph. The waveform is played in a loop and synced to the tempo according to how long the loop is. Only one clip can be playing per module. If a parameter is right clicked, you can choose "Follow Midi CC Envelope Module 1" and the parameter will then be following the envelope that is looping in "Midi CC Envelope Module 1".

Midi note clips function in the same way. Every instrument will be able to select one Midi Notes Module. If you right clicked "Instrument Module 2" in the "Instrument Module Window" and selected "Midi input from Midi Notes Module 1", then the notes coming out of "Midi Notes Module 1" would be playing through the single virtual instrument you placed in "Instrument Module 2".

If you want the sound to come out of your speakers, then navigate to the "Bus" window. Select "Instrument Module 2" with a drop-down check off menu by right-clicking "Inputs". While still in the "Bus" window look at the "Output" window and check the box that says "Audio Output". Now the sound is coming through your speakers. Check off more Instrument Modules or Audio Track Modules to get more sound coming through the same bus.

Turn the "Aux" on to put all audio through effects.

Work in "Bounce" by selecting inputs like "Input Module 3" by right clicking and checking off Input Modules. Then press record and stop. Copy and paste your clip to an Audio Track Module, the "Sampler" or a Side Chain Audio Track Module.

Work in "Master Bounce" to produce audio clips by recording whatever is coming through the system for everyone to hear.

Chop and screw your audio in the sampler with highlight and right click processing effects. Glue your sample together and put it in an Audio Track Module or a Side Chain Audio Track Module.

Use the "Threshold Setter" to perform long linear modulation. Right click any parameter and select "Adjust to Threshold". The parameter will then adjust its minimum and maximum values over the length of time described in the "Threshold Setter".

The "Execution Engine" is used to make sure all changes happen in sync with the music.

IE>If you selected a subdivision of 2, and a length of 2, then it would take four quarter beats (starting from the next quarter beat) for the change to take place. So if you're somewhere in the a (1e+a) then you will have to wait for 2, 3, 4, 5, to pass and your change would happen on 6.

IE>If you selected a subdivision of 1 and a length of 3, you would have to wait 12 beats starting on the next quarter beat.

IE>If you selected a subdivision of 8 and a length of 3, you would have to wait one and a half quarter beats starting on the next 8th note.
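The three examples are consistent with one simple rule, sketched here in Python. The rule is inferred from the examples rather than stated anywhere, so treat it as an assumption: the subdivision is read as a note value (1 = whole note, 2 = half note, 8 = eighth note) and the length as how many of those notes to wait.

```python
def wait_in_quarter_beats(subdivision, length):
    """Quarter beats to wait: `length` notes of value 1/`subdivision`.

    A subdivision of 1 is a whole note (4 quarter beats), 2 a half note,
    8 an eighth note. The wait starts on the next subdivision boundary,
    which is not modelled here.
    """
    return length * 4 / subdivision


for subdivision, length in [(2, 2), (1, 3), (8, 3)]:
    print(subdivision, length, wait_in_quarter_beats(subdivision, length))
```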

http://www.pdpatchrepo.info/hurleur/820_am,_July_26th_13_window_conception.png

Rosegarden as a Pd Music Notation Device

Hi all!

Problem solved. It is about RG indeed and not exactly a problem. Nevertheless, below, I record the resolution given by Julie S. (and Mr. Cannam as well) from the RG list:

[ quote

From: Julie S <msjulie_s@yahoo.com>

To: rosegarden-user@lists.sourceforge.net,

Alexandre Reche e Silva <alereche@gmail.com>

Date: 17 February 2011, 22:32

Subject: Re: [Rosegarden-user] Puredata Rosegarden connection through Jack

Mailed-by: yahoo.com

Signed-by: yahoo.com

Hello ARS

I bet there is a simple fix.

* Select track 1

In Track Parameters:

Select Recording Filters->Channel: 1

* Select Track 2

In Track Parameters:

Select Recording Filters->Channel: 2

Try that and see if there is joy.

Sincerely,

Julie S.

--- On Thu, 2/17/11, Alexandre Reche e Silva <alereche@gmail.com> wrote:

From: Alexandre Reche e Silva <alereche@gmail.com>

Subject: [Rosegarden-user] Puredata Rosegarden connection through Jack

To: rosegarden-user@lists.sourceforge.net

Date: Thursday, February 17, 2011, 9:54 AM


Hi.

I made a patch in Puredata (PD) which sends midi outputs through the channels 1 and 2, i. e., [noteout 1] and [noteout 2].

I try to record them in Rosegarden (RG), but the two midi channels of PD are sent to the same midi channel of the RG, as it seems.

I checked the connections again and attached a snapshot for you to take a look, because until now the two tracks of the RG record the two PD midi channels together.

All I want is to record them separately in each track of RG.

Regards,

a r s

PS: Unlike OpenMusic, PD hasn't an object to depict musical notation. Many of us use RG for this purpose as well. It will be nice to have this issue resolved and archived here on the list. Tnx.

end of quote]

http://www.pdpatchrepo.info/hurleur/PD-RG_connection-fix.jpeg
