Hello there,

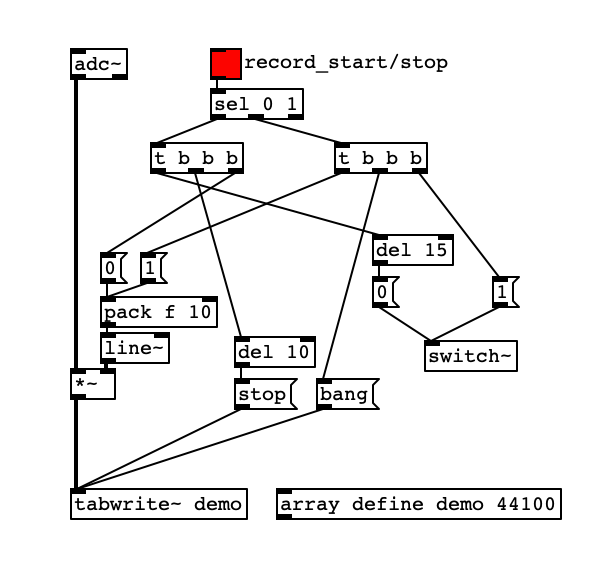

I started developing a sampler/looper with Purr Data a couple of months ago (and I've used this forum a lot!), to be used with an 8x8 MIDI launcher. To avoid audio dropouts and glitches, I spent the past 2 weeks separating the UI and DSP patches, which now communicate via OSC, so the UI can be launched with -nrt and -noaudio while the DSP instance uses -rt -jack -nogui. I'm on Debian 9.0.
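
For reference, the two instances are started roughly as sketched below. The flags are the ones mentioned above; the binary name `purr-data` and the patch names `ui.pd` and `dsp.pd` are placeholders, so adjust them to your install. The script only prints the commands, and the actual launch lines are left commented out.

```shell
#!/bin/sh
# Placeholder binary and patch names (assumptions, not the real file names).
PD_BIN="purr-data"
UI_PATCH="ui.pd"
DSP_PATCH="dsp.pd"

# UI instance: no real-time priority, no audio.
UI_CMD="$PD_BIN -nrt -noaudio $UI_PATCH"

# DSP instance: real-time priority, JACK backend, no GUI.
DSP_CMD="$PD_BIN -rt -jack -nogui $DSP_PATCH"

# Dry run: show what would be launched.
echo "$UI_CMD"
echo "$DSP_CMD"

# To actually start both instances, uncomment:
# $DSP_CMD &
# $UI_CMD &
```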

To avoid dynamic patching and array creation, I keep 64 instances of the track's DSP abstraction loaded, waiting for the UI to use them, even if only 3 or 4 tracks are actually used.

However, I find that the idle DSP instance of Pd (all track abstractions loaded, but not recording or playing any audio) uses 28-29% of my CPU according to Pd's load meter, while the idle UI instance uses only 5% (I'm on an old machine, but still). I have narrowed it down to the track abstractions, since I get only 5% CPU use on the DSP side when there is only one idle track patch instead of 64.

Looking deeper into it, I find that deleting the 2 [tabread4~] objects (each track has 2 arrays for stereo) significantly reduces the CPU load, from ~28% to ~17% with 64 idle patches. 17% is still significant, but I couldn't identify any other object with such a large impact.

Is it known that [tabread4~] ([tabread~] does no better) uses significant CPU even when idle, whether it is driven by a [phasor~] at 0 Hz or not connected to the [phasor~] at all? Is there a way to make it "sleep" completely? Or is there little or no risk of audio dropouts if I create the [tabread4~] object dynamically once I actually need the track?

The CPU load also increases to ~40% when I turn DSP off, I guess because of errors or confused messages with empty arguments when processing audio signals.

Any other advice on reducing the CPU load is of course also welcome.

Thank you!

(funny thing, I also hear tons of clicks and glitches when opening the emoji panel to write this message, so I suppose it really is a hardware shortfall).