I'm stumped (bi-directional guitar pedal patch with MobMuPlat editor frontend)

Hi, All.

I'm stumped...

Here's what I have so far (see "TiGR-Resurrected-20160709.zip" below; it requires the MobMuPlat development environment):

a 19-page MobMuPlat (MMP) frontend that I use as the GUI and run on my laptop through the MMP editor (someone suggested this, since my handheld cannot handle the load of the app on its own; note: I have tried OSC, and it does not work as well as this setup);

the first page is the Main Page;

the subsequent 18 pages each have one unique pedal/effect on them with all of its respective controls;

the Main Page has 7 vsliders (18 steps each), where each value selects one of the pedals on the MMP pedal pages;

the Main Page also has a 7-slot multislider (not yet implemented; I will probably put it to use once I have solved this issue) that will change the preset of the pedal in each slot;

all pages, including the Main Page, have a preset control abstraction (my own) that saves and loads the page's primary control values;

the Main Page is a "stack", so the guitarist can build a rack of one or more pedals, up to 7 effects (selecting no pedals is simply a bypass);

different Main Page slots may be filled with the same effect, in which case the most recently applied parameters for that pedal are applied to every slot it occupies.
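To make the slot/pedal relationship above concrete, here is a minimal Python sketch of the Main Page as a data model. It is not the actual MMP patch: the class name `Rack`, the use of `None` for an empty slot, and the per-pedal parameter dictionary are all assumptions for illustration. Keeping one parameter set per pedal (rather than per slot) is what makes every slot holding the same pedal pick up the most recently applied parameters.

```python
from typing import Dict, List, Optional

NUM_SLOTS = 7    # vsliders on the Main Page
NUM_PEDALS = 18  # one MMP page per pedal


class Rack:
    """Hypothetical model of the Main Page stack (not the real patch)."""

    def __init__(self) -> None:
        # None means the slot is empty; all-None is a full bypass.
        self.slots: List[Optional[int]] = [None] * NUM_SLOTS
        # One parameter dict per pedal (assumption), so every slot that
        # holds the same pedal shares its most recent parameters.
        self.params: Dict[int, Dict[str, float]] = {p: {} for p in range(NUM_PEDALS)}

    def assign(self, slot: int, pedal: Optional[int]) -> None:
        if not 0 <= slot < NUM_SLOTS:
            raise IndexError("slot out of range")
        if pedal is not None and not 0 <= pedal < NUM_PEDALS:
            raise IndexError("pedal out of range")
        self.slots[slot] = pedal

    def set_param(self, pedal: int, name: str, value: float) -> None:
        self.params[pedal][name] = value

    def chain(self) -> List[int]:
        """Pedals in signal order; an empty list means bypass."""
        return [p for p in self.slots if p is not None]

    def params_for_slot(self, slot: int) -> Dict[str, float]:
        pedal = self.slots[slot]
        return {} if pedal is None else self.params[pedal]
```

For example, assigning pedal 3 to slots 0 and 2 and then setting its gain once makes both slots report the same gain, and `chain()` returns `[3, 3]`.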

GOAL:

While I am playing, be able to smoothly change my guitar's "voice", i.e. the slot arrangement and pedal parameters (via their presets), without taking my hands off the strings/neck.

Work so far:

I used a "Logitech Dual Action" USB game controller and the hid library to change the effects (left joystick for slots, right joystick for pedals). But because I had not set up presets yet, I had it randomizing parameters, and while that was interesting, the volume spikes, and the chance that the multiplied gains would end up too low, were awful!!!

Needed help:

How would I go about making what I think of as a 2-D stompbox, one that would let me rearrange the pedals in each slot and/or change any given slot's pedal parameters?

I don't expect a problem with the logic, as I can see clearly how it can be done. What I am unclear about is how to build, use, or buy a piece of hardware that will allow me to do this.
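One way to picture the "2-D" idea is as a cursor over a grid: one pair of footswitches moves between slots, and another pair steps the pedal (or, after a mode switch, the preset) in the current slot. The Python sketch below only models that logic; the switch names, `FootController`, and `NUM_PRESETS = 8` are hypothetical, and it says nothing about which hardware would send the presses.

```python
from dataclasses import dataclass, field
from typing import List

NUM_SLOTS = 7
NUM_PEDALS = 18
NUM_PRESETS = 8  # hypothetical number of presets per pedal


@dataclass
class FootController:
    """Hypothetical 2-D footswitch logic: left/right pick the slot,
    up/down change what is in it (pedal, or preset in preset mode)."""
    pedals: List[int] = field(default_factory=lambda: [0] * NUM_SLOTS)
    presets: List[int] = field(default_factory=lambda: [0] * NUM_SLOTS)
    slot: int = 0
    edit_presets: bool = False  # toggled by the "mode" switch

    def press(self, switch: str) -> None:
        if switch == "left":
            self.slot = (self.slot - 1) % NUM_SLOTS
        elif switch == "right":
            self.slot = (self.slot + 1) % NUM_SLOTS
        elif switch == "mode":
            self.edit_presets = not self.edit_presets
        elif switch in ("up", "down"):
            step = 1 if switch == "up" else -1
            if self.edit_presets:
                self.presets[self.slot] = (self.presets[self.slot] + step) % NUM_PRESETS
            else:
                self.pedals[self.slot] = (self.pedals[self.slot] + step) % NUM_PEDALS
        else:
            raise ValueError(f"unknown switch: {switch}")
```

With five switches this covers both axes (which slot, and what goes in it), and both axes wrap around so the player never has to reverse direction.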

My first bad guess, or at least a place to start, is an array of stompboxes. I can intuitively tell this is wrong, but I cannot, for the life of me, visualize a better, let alone optimal, solution.

Little help, please.

Your suggestions, advice, insight, and encouragement would all be very, very, very welcome.

Peace and thanks in advance.

Sincerely,

svanya

Little help please: "TiGR_Resurrected", a smartphone guitar rack (beta), to be released for free

I returned to this project after a hiatus and more learning, and while it has become much, much more streamlined (and higher-headed :-), it still has the same issue running inside MobMuPlat: garbled sound.

If you have or can make the time, please have a look at it and tell me how I can resolve this problem. I think it would be of benefit to the world at large, through its guitarists, especially as there are so few guitar apps for Android.

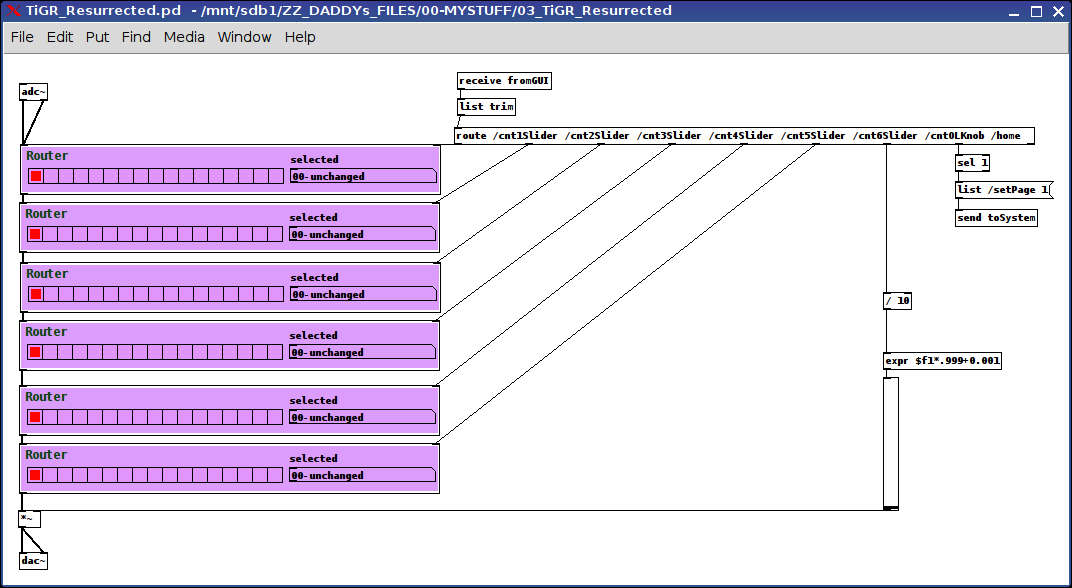

It has a simple master patch, largely based on the "Router", into which all 16 pedals have been embedded.

Taking whale's (?) advice, I added switch~ objects to all the pedals so their DSP only runs when they are activated.
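The switch~ approach boils down to this: a pedal's DSP should be on if and only if it sits in at least one slot, and a message only needs to go out when that changes. A minimal Python sketch of that bookkeeping, assuming one switch~ per pedal (shared by every slot that uses it) and `None` for an empty slot; the function names are hypothetical:

```python
from typing import Dict, List, Optional, Set


def active_pedals(slots: List[Optional[int]]) -> Set[int]:
    """Pedals that appear in at least one slot."""
    return {p for p in slots if p is not None}


def switch_messages(old: List[Optional[int]],
                    new: List[Optional[int]]) -> Dict[int, int]:
    """Map pedal -> 1 (turn its switch~ on) or 0 (turn it off),
    only for pedals whose on/off state actually changes."""
    before, after = active_pedals(old), active_pedals(new)
    msgs = {p: 1 for p in after - before}
    msgs.update({p: 0 for p in before - after})
    return msgs
```

Sending only the changes means a pedal stacked in two slots is switched on once, and moving a pedal from one slot to another does not toggle it off and on again.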

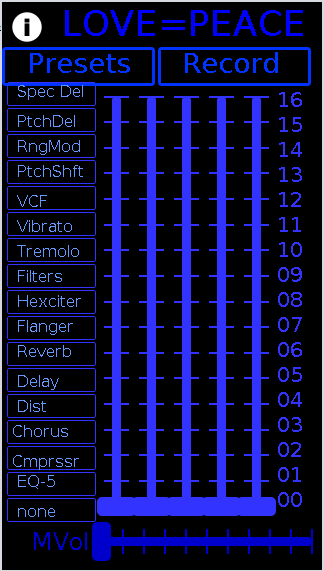

The first (MobMuPlat) page is the "rack" (TiGR = The (i) Guitar Rack), with each pedal on a subsequent page (swipe at the top). Note: "Presets" and "Record" currently do nothing.

The hardware I am using, which may be the issue, is a Motorola Moto E (2nd Generation) with 4G LTE, Android 5.1, kernel 3.10.

I can't tell whether it is my hardware's problem or libpd is just not up to handling the load.

Instructions:

Select the pedals on the first page using the sliders (you can stack multiples of the same pedal).

Each page has its own settings/controls and a bypass.

(Note: more "slots" could be added, if 6 is not enough, by just adding sliders and Routers.)


I thank you so very, very much in advance for any advice you can offer.

Maybe if enough of us chime in, we can get this (free) into the hands of all those guitarists who do not have enough supplementary income to spend on a rack.

-svanya

P.S. As for credits, it depends on a lot of other people's work, to name a few: Guitar Extended, hardoff's DIY2 library, tb_compressor, etc.

And to them I say: "Thank You. It is so wonderful to walk on the shoulders of giants who are so kind, generous, and loving. -Peace"


TouchOSC page change bug

I'm trying to use TouchOSC to control some items in Pd-extended using the mrpeach libraries. Everything works fine except that when I change pages in TouchOSC, Pure Data crashes.

I may have figured out why: when changing pages, TouchOSC sends just "/page" instead of "/page/item value", and since I'm using cascaded "routeOSC" objects to route each value to the item I want to change, only the first "routeOSC" sees a value; the others receive just a bang.

How can I correct this behaviour? How can I ignore the message when just changing page?
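One way to avoid the bangs is to check each incoming message before it reaches the cascade and drop anything that has no second address level or no value. The Python sketch below shows only that check; `route_osc` is a hypothetical helper, not part of the mrpeach library, and it assumes the page-change message really is a bare "/page" address with no arguments, as described above.

```python
from typing import List, Optional, Tuple


def route_osc(address: str,
              args: List[float]) -> Optional[Tuple[str, str, List[float]]]:
    """Split '/page/item' into (page, item, args).

    Returns None for messages that should be ignored, such as a bare
    page-change address or any message that carries no value."""
    parts = [p for p in address.split("/") if p]
    if len(parts) < 2 or not args:
        return None  # page change or valueless message: drop it
    return parts[0], "/".join(parts[1:]), args
```

The same rule (at least two address levels and at least one argument) could be applied in the patch before the first routeOSC, so the later stages never see a bare bang.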

Simple Math Question: Decimal to two-integer ratio

Oh yeah, it gets even worse with them, doesn't it?

JACK MIDI port question

Hi all,

I have a patch that converts Launchpad MIDI notes to MIDI CCs for a Yamaha RM1X, all connected with JACK, so I have:

JACK connections:

Launchpad Out ------> Pure Data MIDI-in 1 (for the Pd patch)

Pure Data MIDI-out 1 ----> midisport (for the Yamaha)

If I want to use the Launchpad's LEDs for visual feedback:

Pure Data MIDI-out 2 ----> Launchpad IN

The problem is that the Launchpad receives and sends MIDI on channel 1, so if I send to the Launchpad, I also send to the Yamaha. How can I separate these two things? Which object do I have to use, if it is possible?
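Assuming Pd's usual convention for multiple MIDI devices, this may not need a special object: with more than one MIDI output device enabled, channels 1-16 of [noteout]/[ctlout] go to the first device, 17-32 to the second, and so on, and [notein]/[ctlin] report input channels the same way. So the LED feedback could go out on channel 17 (port 2, channel 1) while the Yamaha stays on channels 1-16. The arithmetic, as a small Python sketch (the function names are hypothetical):

```python
from typing import Tuple


def pd_channel(port: int, channel: int) -> int:
    """Pd channel number that addresses a given MIDI port (1-based)
    and MIDI channel (1-16), assuming 16 channels per port."""
    if port < 1 or not 1 <= channel <= 16:
        raise ValueError("port must be >= 1 and channel 1..16")
    return (port - 1) * 16 + channel


def port_and_channel(pd_ch: int) -> Tuple[int, int]:
    """Inverse: split a Pd channel number into (port, channel)."""
    if pd_ch < 1:
        raise ValueError("Pd channel numbers start at 1")
    return (pd_ch - 1) // 16 + 1, (pd_ch - 1) % 16 + 1
```

In this setup, the Yamaha CCs would use `pd_channel(1, 1)` (channel 1) and the Launchpad LEDs `pd_channel(2, 1)` (channel 17).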

Thanks

Novation Launchpad on Linux (Ubuntu Maverick) WORKS!!

Hi everybody,

I forgot to post a better guide:

I tested this on Linux (Ubuntu Maverick).

First you have to install:

$ sudo apt-get install libusb-1.0-0

$ sudo apt-get install libusb-1.0-0-dev

$ sudo apt-get install libasound2-dev

Then extract the sources (from https://github.com/jiyunatori/launchpad, click Downloads and take the .tar.gz).

cd into the extracted directory and compile with:

$ make clean

$ make lpmidi

If you didn't get any errors, plug in the Launchpad and run:

$ sudo ./lpmidi

Then open the JACK or ALSA MIDI ports... and you'll find the Launchpad MIDI device.

You can put the lpmidi app wherever you want and make a launcher for it (I put it on the GNOME panel).

I hope this also works for the other Linux users here; let me know....

All these instructions came from here: http://www.renoise.com/board/index.php?showtopic=26229

thanks

Call for participation - Pure Data improvisation- Marseille - France

Call for participation in the audio performance Blank Pages #13

Place: ZINC Friche la Belle de Mai, Marseille, France

Date: 10-07-2010

Time: 9pm - 10pm

We are looking for sound artists or musicians who work with the graphical programming environments Pure Data or Max/MSP to interpret the Blank Pages score. This score requires participants to play together and improvise without prior preparation. The Blank Pages score describes the situation in 4 points (http://blankpages.fr/score.html):

60'00''

Pure Data

Blank Page

No load/No Save

Participants are asked to bring a laptop with Pure Data or Max/MSP installed.

More info:

www.blankpages.fr

If you are interested, send me an e-mail with your name and surname, or a nickname.

thomas_thiery (at) laposte (dot) net

Cheers Thomas Thiery

Pure Data Concert - Call for Participants - Munich

April 2 and 3, 2010, 8 pm each evening

Blank Pages - http://blankpages.fr

CALL FOR PARTICIPANTS

Blank Pages Sessions use Pure Data or Max/MSP to create sound/video by live programming, starting from a blank page, without loading or saving patches. Participants make sounds/music for one hour, after which the session ends.

There will be two Blank Pages Sessions in Munich. To participate, please fill out the registration form on the Blank Pages homepage. We cannot provide accommodation. The Blank Pages Session in Munich is part of a Pure Data Forum within the Kunstfest Prosume Conduce in Munich, Germany; for details see http://prosumeconduce.info (English text available).

Launchpad mappings

I've also used the Launchpad on two little projects.

Do you mean whether it's possible to control the Launchpad's LEDs from Pd? It's easy: just send the same MIDI note number that the pad sends to Pd. For example, sending MIDI note 16 with velocity 15 will turn the first pad on the second row bright red.

This will explain it all (programmer's reference at the bottom):

http://www.novationmusic.com/support/launchpad/
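The numbers in that example follow the original Launchpad's X-Y layout as described in its programmer's reference: note = 16 * row + column, and velocity = 16 * green + red + flags, with red and green brightness from 0 to 3 and flags = 12 for normal use. So note 16 is row 1, column 0, and velocity 15 is red 3, green 0, plus 12. A small Python sketch of that calculation (`launchpad_led` is a hypothetical helper; newer Launchpad models use different layouts, so check the reference for your model):

```python
from typing import Tuple


def launchpad_led(row: int, col: int, red: int, green: int,
                  flags: int = 12) -> Tuple[int, int]:
    """(note, velocity) to light a pad on the original Launchpad in
    X-Y layout. Rows 0-7 top to bottom, columns 0-7 plus column 8
    for the round buttons on the right; brightness 0-3 per colour.
    flags=12 is the normal (non-double-buffered) mode."""
    if not (0 <= row <= 7 and 0 <= col <= 8):
        raise ValueError("row must be 0..7 and col 0..8")
    if not (0 <= red <= 3 and 0 <= green <= 3):
        raise ValueError("red and green must be 0..3")
    note = 16 * row + col
    velocity = 16 * green + red + flags
    return note, velocity
```

Bright red on the first pad of the second row is `launchpad_led(1, 0, 3, 0)`, which gives note 16 and velocity 15, matching the example above.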

Need help with Pd patch for TouchOSC...

Hey guys,

Just discovered this forum for PD. Great info and valuable documentation.

I need help with this patch that I created for TouchOSC with Pd-extended (actually a modification of the Keys layout that comes with TouchOSC).

It's basically a 5-page layout: pages 1 to 4 are each a one-octave MIDI keyboard controller that can trigger any note out to Ableton Live VSTis (for my use), and the last page is a bunch of controllers.

The problem is that the first and second pages work as they should, but the third page triggers the wrong info from the TouchOSC interface on my iPod touch: when I play C#, it shows up as a G in Pd, and so on, as if the notes are not properly assigned. The 4th and 5th pages do not trigger at all. Nada, zilch!
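A quick way to narrow this down is to write out which note each key should send on each page and compare it with what actually arrives in Pd: if every key on page 3 is off by the same amount, that page's octave offset is a likely culprit, whereas scattered errors point to individual keys wired to the wrong address. A Python sketch, assuming one octave per page with the pages stacked upward and a hypothetical starting note of 48 (C3, using the convention middle C = 60 = C4):

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F",
              "F#", "G", "G#", "A", "A#", "B"]
BASE_NOTE = 48  # hypothetical: MIDI note of the lowest C on page 1


def expected_note(page: int, key: int) -> int:
    """MIDI note for key 0..11 (C..B) on keyboard page 1..4."""
    if not (1 <= page <= 4 and 0 <= key <= 11):
        raise ValueError("page must be 1..4 and key 0..11")
    return BASE_NOTE + 12 * (page - 1) + key


def note_name(note: int) -> str:
    """Name with octave number, where 60 -> 'C4'."""
    return NOTE_NAMES[note % 12] + str(note // 12 - 1)
```

For instance, C# on page 3 should arrive as note 73 (C#5); printing `received - expected_note(page, key)` for each key shows at a glance whether the error is a constant offset.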

I'm quite new to Pd-extended and its language. I've done some research on the net, and I'm feeling quite lost in all of this; I'm sure you know the feeling... so I've included my patch here. If anybody can have a look, tell me what I've done wrong, and show me how I can correct it, that would be immensely appreciated.

Cheers,

VP

http://www.pdpatchrepo.info/hurleur/Keys_2_-_4_octaves.touchosc.pd